May

v1.1.30 - Terraform Provider

20 May, 2024

An updated version of the Terraform provider is now available.

This release includes enhancements to the resources listed below:

Existing Resources

- `rafay_aks_cluster`: Now supports Azure CNI overlay configuration. Users can set `network_plugin_mode` to `overlay` and add `pod_cidr` under `network_profile` of this resource on Day 0.

- `rafay_aks_cluster_v3`: Also supports Azure CNI overlay configuration.

- `rafay_access_apikey`: Allows users who are not organization admins to manage their API keys using this resource.

v1.1.30 Terraform Provider Bug Fixes

| Bug ID | Description |
|---|---|
| RC-34359 | AKS: The default populator applies defaults only to the desired config, not to the deployed config, resulting in a config that cannot be diffed correctly on terraform apply |
| RC-26487 | Imported Cluster: Blueprint sync status for an imported cluster changed to 'InProgress' after updating the cluster labels using the Terraform provider |
| RC-33356 | EKS: Cluster provisioning enters an infinite loop if blueprint sync fails during provisioning |

v2.6 - SaaS

17 May, 2024

The section below provides a brief description of the new functionality and enhancements in this release.

Amazon EKS

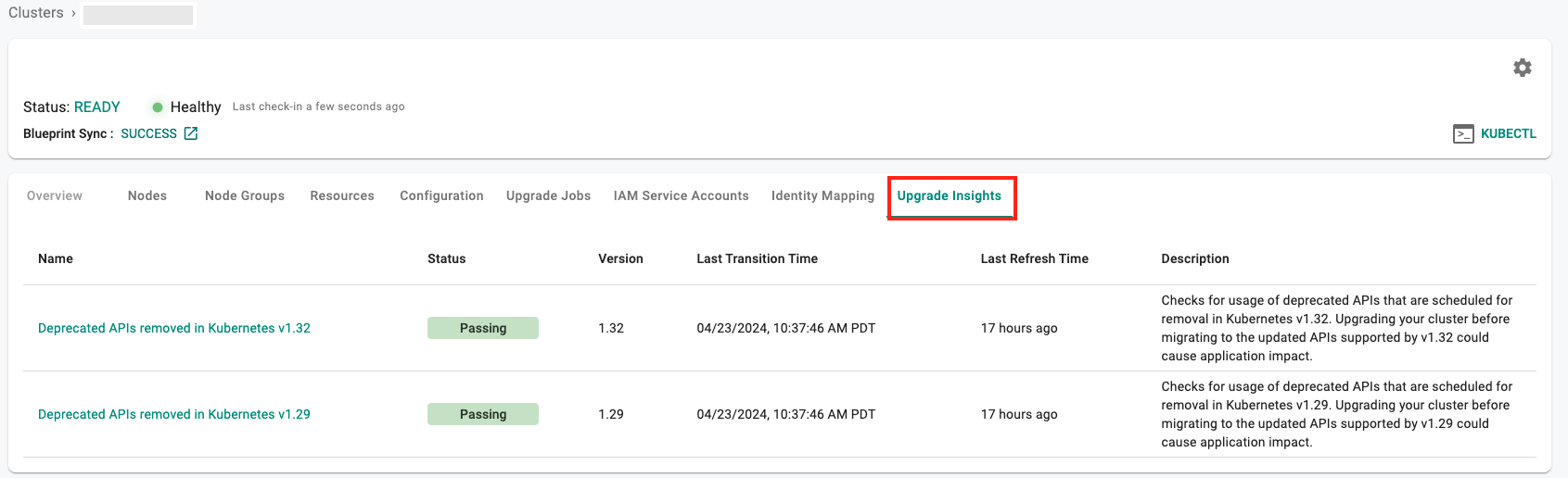

Upgrade Insights

Upgrade Insights scans the cluster's audit logs for events related to deprecated APIs, helping identify and remediate the affected resources before an upgrade is executed. With this release, this information is available in the Rafay console, so cluster administrators can review insights and orchestrate operations from a single place.

Upgrade Insights Permissions

For upgrade insights, ensure the IAM role associated with the cloud credential used for cluster LCM has the following permissions:

- eks:ListInsights

- eks:DescribeInsight

These permissions are required only for the upgrade insights capability. Existing cluster lifecycle management functionality continues to work even if they are not present.
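As an illustration, both permissions can be granted with an IAM policy statement like the one built below. This is a minimal sketch: the `upgrade_insights_policy` helper and the `Sid` value are hypothetical names, and `"Resource": "*"` is an assumption you may want to narrow to specific cluster ARNs.

```python
import json

# The two actions listed above as required for upgrade insights.
UPGRADE_INSIGHTS_ACTIONS = ["eks:ListInsights", "eks:DescribeInsight"]


def upgrade_insights_policy(resource: str = "*") -> dict:
    """Return an IAM policy document allowing the upgrade insights actions.

    Hypothetical helper: attach the resulting policy to the IAM role used by
    the cloud credential. "Resource": "*" is an assumption; scope it down if needed.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowEKSUpgradeInsights",
                "Effect": "Allow",
                "Action": list(UPGRADE_INSIGHTS_ACTIONS),
                "Resource": resource,
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy as JSON so it can be pasted into the IAM console or IaC.
    print(json.dumps(upgrade_insights_policy(), indent=2))
```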

Known Bug

If the required upgrade insights IAM permissions are missing, clicking the Upgrade Insights tab in the cluster configuration displays the error "Server Error. Please try again after some time." This error indicates insufficient privileges to view the insights. We acknowledge that the message is unclear and plan to improve it in an upcoming release.

More information on this feature can be found here

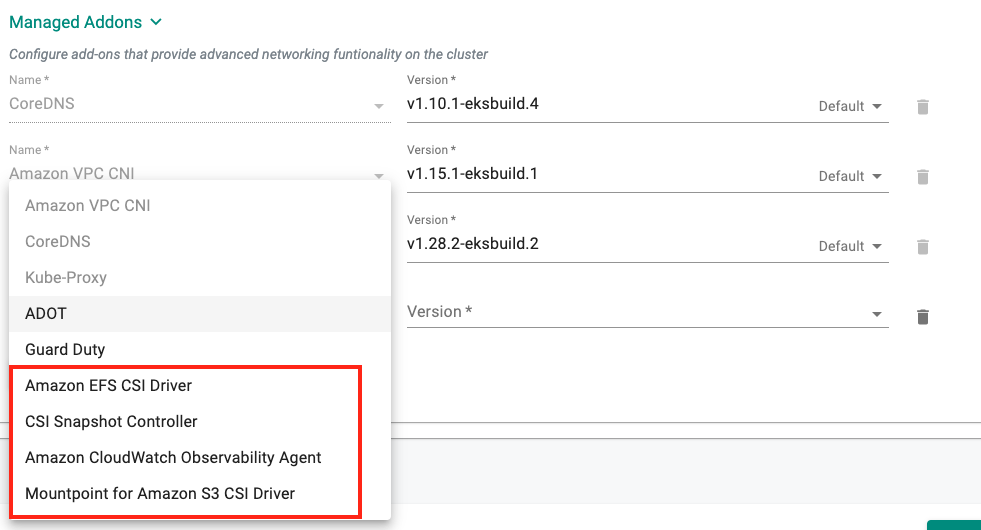

Managed Add-ons

This release adds support for the following EKS managed add-ons:

- Amazon EFS CSI driver

- Mountpoint for Amazon S3 CSI Driver

- CSI snapshot controller

- Amazon CloudWatch Observability agent

For more information, refer to the Managed Add-ons documentation.

Azure AKS

Azure Overlay CNI

This release adds support for Azure CNI overlay in AKS clusters via RCTL, Terraform, SystemSync, and the Swagger API; UI support is planned for the next release. Because pod IP addresses are allocated from a private CIDR (`podCidr`) that is separate from the VNet hosting the nodes, this enhancement aims to improve scalability, alleviate IP address exhaustion, and simplify cluster scaling.

Subset Cluster Config with Azure Overlay

networkProfile:
  dnsServiceIP: 10.0.0.10
  loadBalancerSku: standard
  networkPlugin: azure
  networkPluginMode: overlay
  networkPolicy: calico
  podCidr: 10.244.0.0/16
  serviceCidr: 10.0.0.0/16
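Before applying an overlay configuration, it can help to sanity-check the address ranges. The sketch below assumes the commonly documented Azure CNI Overlay constraints (the pod and service CIDRs must not overlap each other or the node VNet, and `dnsServiceIP` must lie inside `serviceCidr`); the `check_overlay_cidrs` helper is hypothetical and is not part of RCTL or the Rafay API.

```python
import ipaddress
from typing import Sequence

# Values copied from the networkProfile subset above.
network_profile = {
    "dnsServiceIP": "10.0.0.10",
    "podCidr": "10.244.0.0/16",
    "serviceCidr": "10.0.0.0/16",
}


def check_overlay_cidrs(profile: dict, vnet_cidrs: Sequence[str] = ()) -> list:
    """Return a list of problems in an overlay network profile (empty if none).

    Hypothetical helper; it encodes general Azure CNI Overlay constraints,
    not a Rafay validation API.
    """
    problems = []
    pod = ipaddress.ip_network(profile["podCidr"])
    svc = ipaddress.ip_network(profile["serviceCidr"])
    dns = ipaddress.ip_address(profile["dnsServiceIP"])

    # The pod CIDR and service CIDR must not overlap each other.
    if pod.overlaps(svc):
        problems.append("podCidr overlaps serviceCidr")

    # Neither range should overlap the VNet address space that hosts the nodes.
    for cidr in vnet_cidrs:
        vnet = ipaddress.ip_network(cidr)
        if pod.overlaps(vnet):
            problems.append(f"podCidr overlaps VNet range {cidr}")
        if svc.overlaps(vnet):
            problems.append(f"serviceCidr overlaps VNet range {cidr}")

    # The cluster DNS service IP must be an address inside the service CIDR.
    if dns not in svc:
        problems.append("dnsServiceIP is outside serviceCidr")
    return problems
```

For example, `check_overlay_cidrs(network_profile, ["10.224.0.0/12"])` returns an empty list for the values above, where `10.224.0.0/12` stands in for a hypothetical node VNet range.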

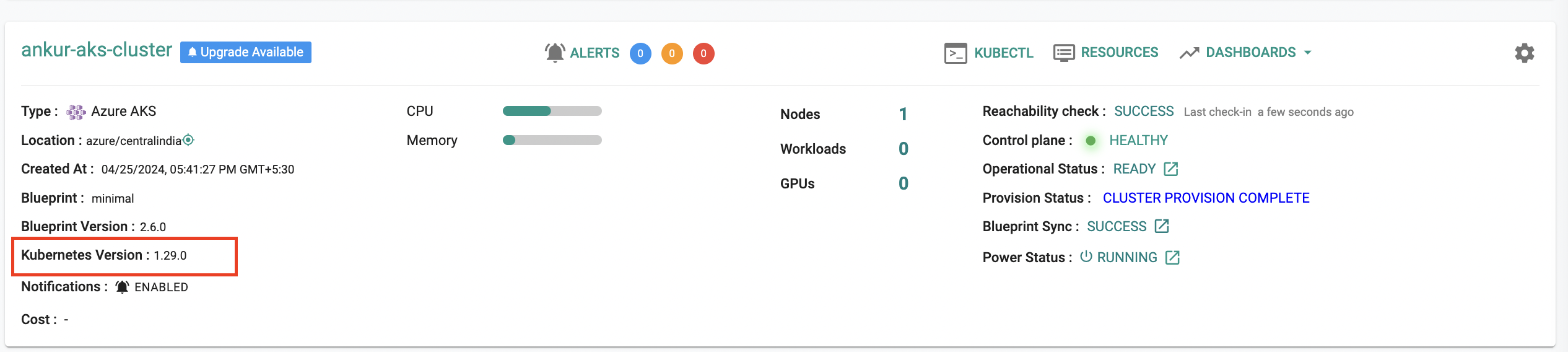

Kubernetes 1.29

New AKS clusters can be provisioned based on Kubernetes v1.29.x. Existing clusters managed by the controller can be upgraded in-place to Kubernetes v1.29.x.

Google GKE

Cluster Reservation Affinity

Reservation affinity, previously configurable only through the UI and Terraform, can now also be configured through other interfaces such as RCTL and SystemSync. Setting reservation affinity on a node pool allows it to consume reserved Compute Engine instances in GKE.

Cluster Config with Reservation Affinity

{
  "apiVersion": "infra.k8smgmt.io/v3",
  "kind": "Cluster",
  "metadata": {
    "name": "my-cluster",
    "project": "defaultproject"
  },
  "spec": {
    "cloudCredentials": "dev",
    "type": "gke",
    "config": {
      "gcpProject": "dev-382813",
      "location": {
        "type": "zonal",
        "config": {
          "zone": "us-central1-a"
        }
      },
      "controlPlaneVersion": "1.27",
      "network": {
        "name": "default",
        "subnetName": "default",
        "access": {
          "type": "public",
          "config": null
        },
        "enableVPCNativetraffic": true,
        "maxPodsPerNode": 110
      },
      "features": {
        "enableComputeEnginePersistentDiskCSIDriver": true
      },
      "nodePools": [
        {
          "name": "default-nodepool",
          "nodeVersion": "1.27",
          "size": 2,
          "machineConfig": {
            "imageType": "COS_CONTAINERD",
            "machineType": "e2-standard-4",
            "bootDiskType": "pd-standard",
            "bootDiskSize": 100,
            "reservationAffinity": {
              "consumeReservationType": "specific",
              "reservationName": "my-reservation"
            }
          },
          "upgradeSettings": {
            "strategy": "SURGE",
            "config": {
              "maxSurge": 1
            }
          }
        }
      ]
    },
    "blueprint": {
      "name": "minimal",
      "version": "latest"
    }
  }
}
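A small pre-flight check over such a spec can catch a node pool that requests a specific reservation without naming one. This is a sketch under assumptions: it reads only the `nodePools[].machineConfig.reservationAffinity` fields shown above, and the rule that `specific` requires `reservationName` is inferred from the example rather than taken from a published schema. The `reservation_affinity_issues` helper is hypothetical.

```python
import json

# Abbreviated copy of the cluster spec above (only the fields this check reads).
CLUSTER_SPEC = json.loads("""
{
  "spec": {
    "config": {
      "nodePools": [
        {
          "name": "default-nodepool",
          "machineConfig": {
            "reservationAffinity": {
              "consumeReservationType": "specific",
              "reservationName": "my-reservation"
            }
          }
        }
      ]
    }
  }
}
""")


def reservation_affinity_issues(cluster: dict) -> list:
    """Flag node pools whose affinity type is 'specific' but which name no reservation."""
    issues = []
    for pool in cluster["spec"]["config"].get("nodePools", []):
        affinity = pool.get("machineConfig", {}).get("reservationAffinity")
        if not affinity:
            continue  # this pool does not configure reservation affinity
        wants_specific = affinity.get("consumeReservationType") == "specific"
        if wants_specific and not affinity.get("reservationName"):
            issues.append(f"{pool['name']}: 'specific' affinity requires reservationName")
    return issues
```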

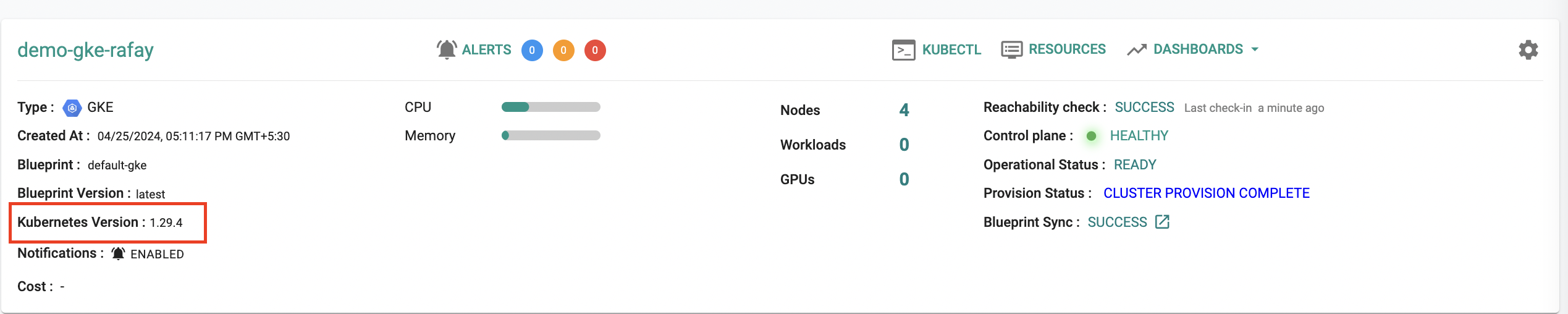

Kubernetes 1.29

New GKE clusters can be provisioned based on Kubernetes v1.29.x. Existing clusters managed by the controller can be upgraded in-place to Kubernetes v1.29.x.

Imported/Registered Clusters

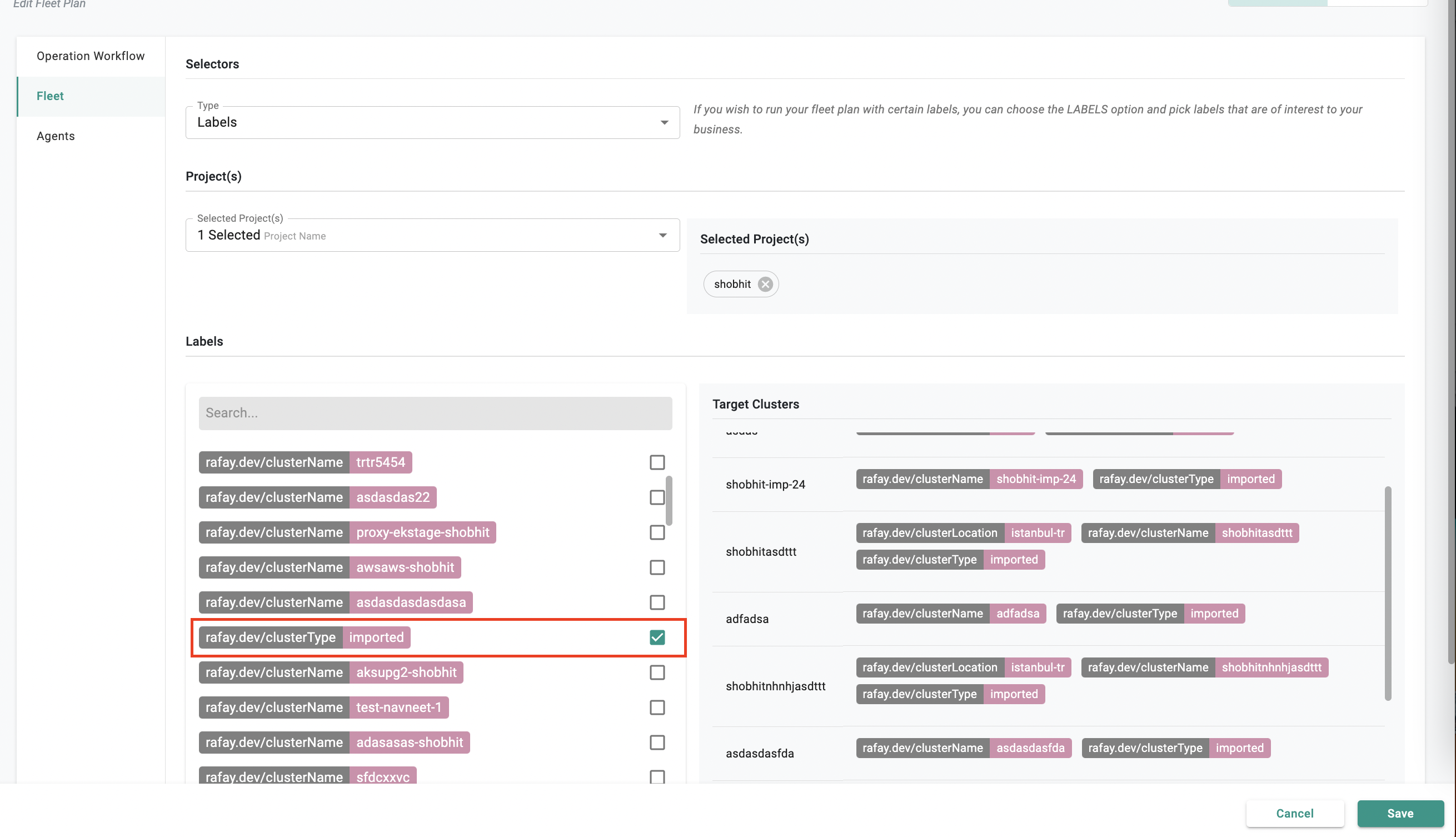

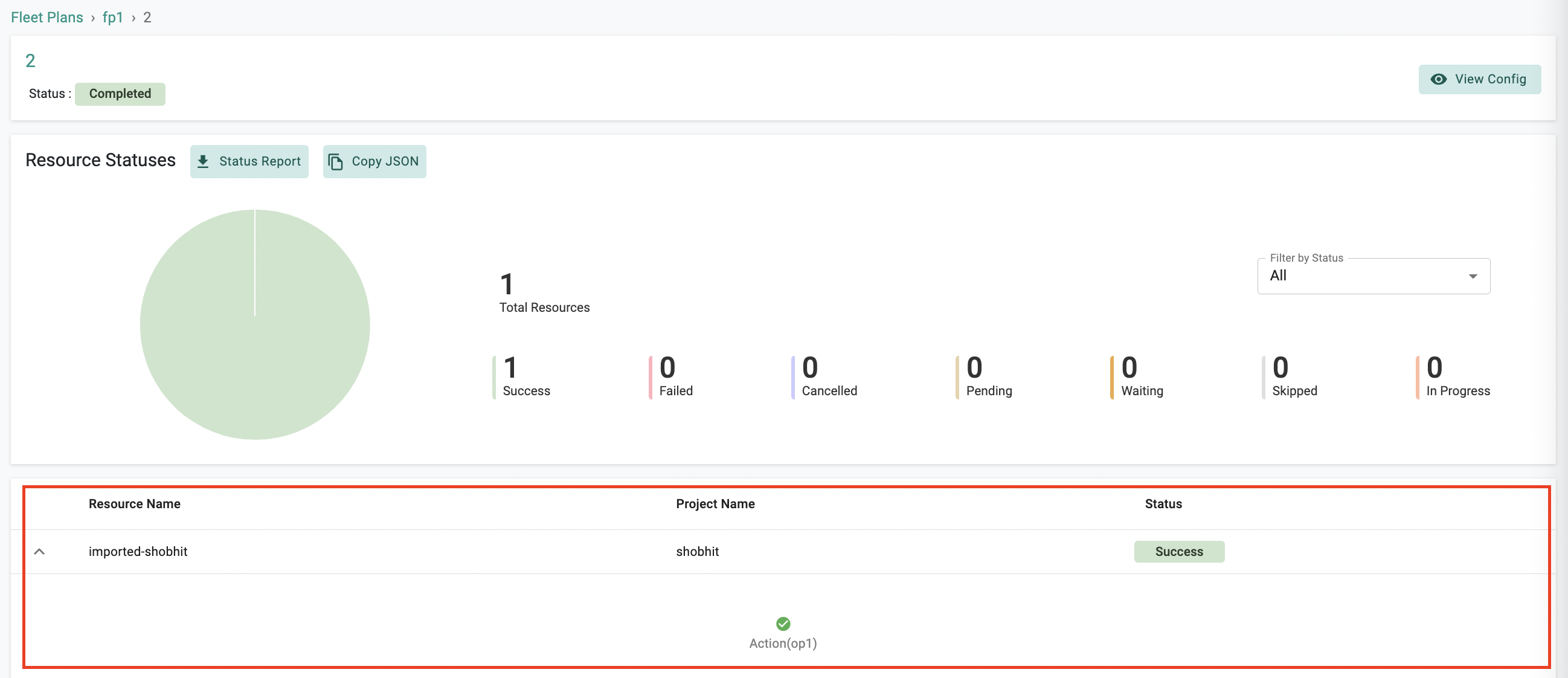

Fleet Support

Fleet operations now support imported clusters, making it easy to update blueprints across imported clusters.

Important

The Control Plane Upgrade, Node Group And Control Plane Upgrade, Node Groups Upgrade, and Patch action types apply to EKS and AKS clusters but not to imported clusters. The Blueprint action type applies to EKS, AKS, and imported clusters.
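The applicability rules in the note above can be captured as a simple lookup table, for example when scripting checks before submitting a fleet operation. The `FLEET_ACTION_SUPPORT` mapping and the `allowed_actions` helper are hypothetical; they only encode the rules stated in this release note.

```python
# Which cluster types each fleet action type applies to, per the note above.
# Hypothetical names; this is not a Rafay API.
FLEET_ACTION_SUPPORT = {
    "Control Plane Upgrade": {"eks", "aks"},
    "Node Group And Control Plane Upgrade": {"eks", "aks"},
    "Node Groups Upgrade": {"eks", "aks"},
    "Patch": {"eks", "aks"},
    "Blueprint": {"eks", "aks", "imported"},
}


def allowed_actions(cluster_type: str) -> list:
    """Return the fleet action types (sorted) that apply to a cluster type."""
    key = cluster_type.lower()
    return sorted(action for action, types in FLEET_ACTION_SUPPORT.items() if key in types)
```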

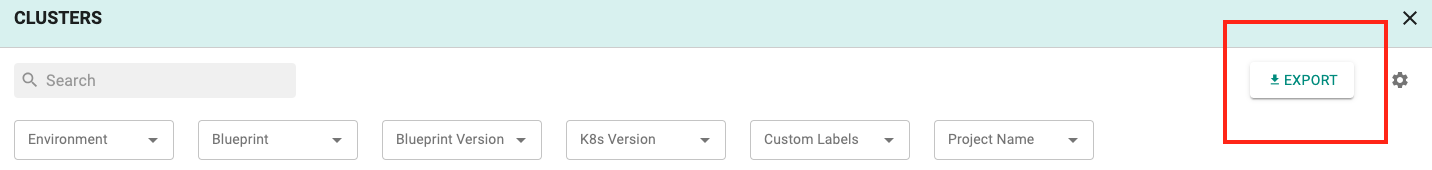

Clusters

Export Option

An export option is now available to download the list of clusters across the organization or its projects, along with metadata such as custom labels, Kubernetes version, active nodes, and project. This helps customers plan operations such as upgrades and coordinate with cluster owners.
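Once exported, the cluster list can be post-processed to plan upgrade waves, for instance by grouping clusters by Kubernetes version. The column names (`Name`, `Kubernetes Version`) and the sample rows below are assumptions for illustration only; adjust them to match the headers in the actual export.

```python
import csv
import io
from collections import defaultdict

# Hypothetical column names; change these to match the real export headers.
NAME_COL = "Name"
VERSION_COL = "Kubernetes Version"

# Hypothetical sample export, used only to demonstrate the grouping.
SAMPLE_EXPORT = """Name,Project,Kubernetes Version,Active Nodes
prod-east,payments,v1.27.9,12
prod-west,payments,v1.28.5,10
dev-1,sandbox,v1.27.9,3
"""


def clusters_by_version(csv_text: str) -> dict:
    """Group cluster names by Kubernetes version to help plan upgrade waves."""
    groups = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        groups[row[VERSION_COL]].append(row[NAME_COL])
    return dict(groups)
```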

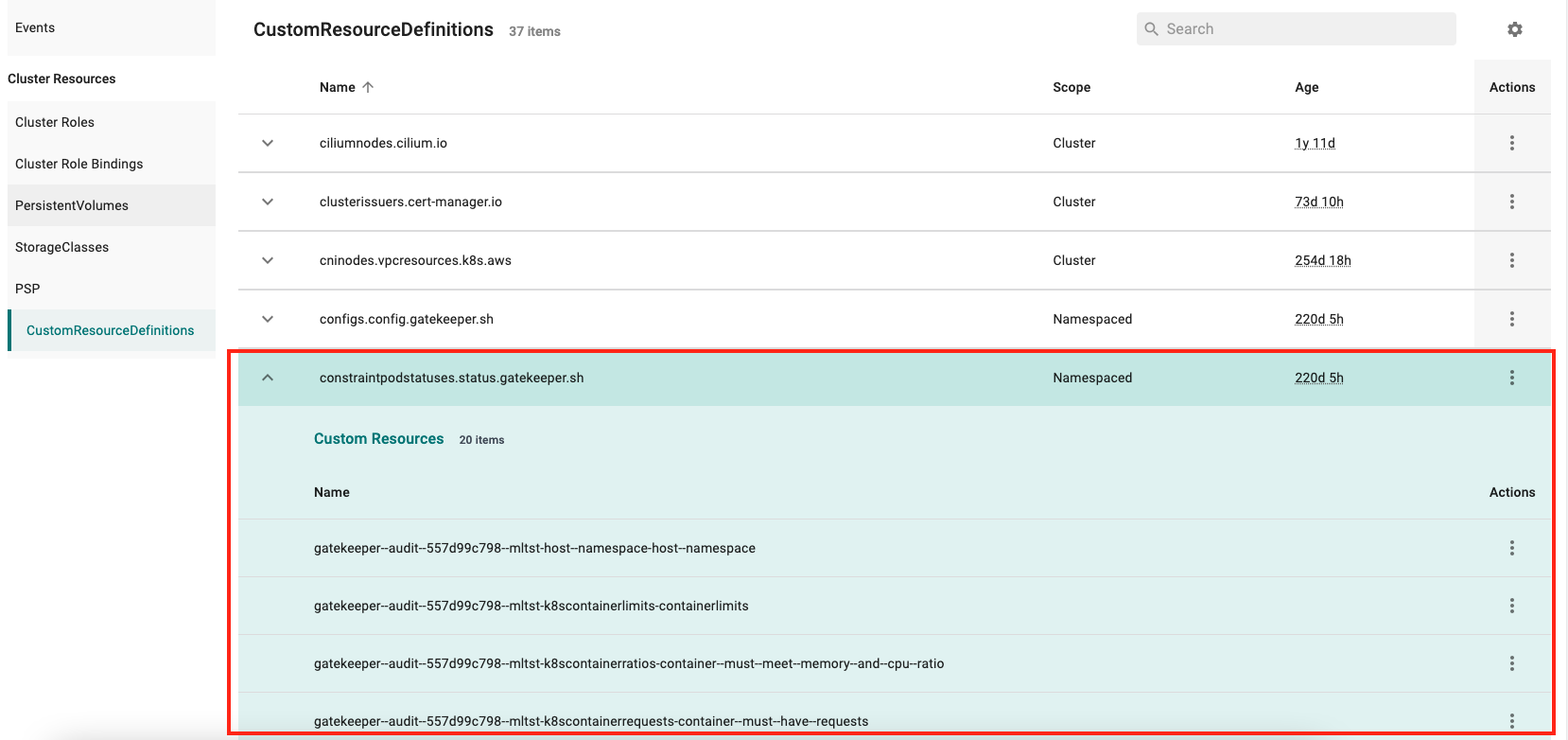

Resources Page

Several improvements have been made to the Resources page, including:

- Addition of a vertical scrollbar to cluster resource grids

- Display of HorizontalPodAutoscaler (HPA) information on the workloads debug page

- Display of custom resources associated with CRDs

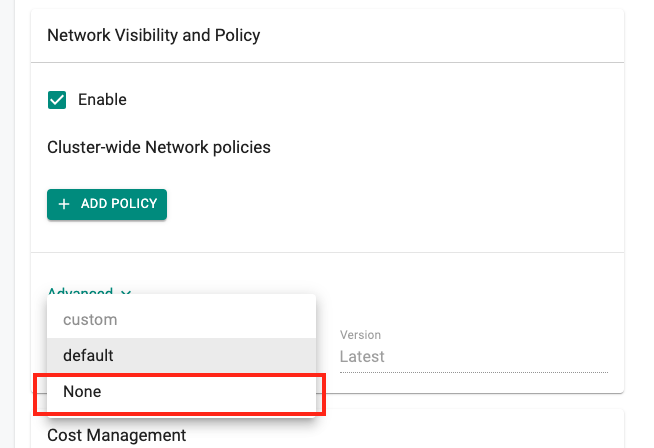

Network Policy

Installation Profile

With this release, selecting an installation profile as part of the blueprint configuration is optional; a 'None' option is now available.

This removes the requirement to install Cilium in chaining mode through Rafay in order to enforce network policies. The cluster-wide and namespace-scoped rules/policies framework can now be used in additional scenarios, such as:

- Cilium is the primary CNI

- Use of NetworkPolicy CRDs with any CNI that can enforce Network Policies

Support for the Network Policy Dashboard and Installation Profile is also being deprecated with this release.

Blueprint

Add-ons

In previous releases, an add-on could not be deleted if it was referenced by any blueprint. With this release, an add-on can be deleted if it is referenced only by blueprint versions that are disabled, letting Platform Admins clean up stale or unused add-ons. A check is still in place that prevents deleting an add-on that is part of any active blueprint version.
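The deletion rule can be summarized as: an add-on is deletable unless at least one active blueprint version still references it. The sketch below models this with a hypothetical `BlueprintVersion` type and `can_delete_addon` helper; it illustrates the rule and is not Rafay's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class BlueprintVersion:
    # Hypothetical model: active=False represents a disabled blueprint version.
    name: str
    active: bool
    addons: set = field(default_factory=set)


def can_delete_addon(addon: str, versions: list) -> bool:
    """Return True unless some active blueprint version still references the add-on."""
    return not any(v.active and addon in v.addons for v in versions)
```

For example, an add-on referenced only by a disabled version can be deleted, while one referenced by an active version cannot.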

Custom Roles for Zero-Trust Access

Workspace Admin Roles

A previous release introduced the ZTKA Custom Access feature, which lets customers define custom RBAC definitions that control users' access to clusters in the organization. For example, users can be restricted to read-only access (the get, list, and watch verbs) for certain resources (e.g., pods, secrets) in a specific namespace.

To remove the need for a Platform Admin to create a separate Role definition file for each namespace, the label `k8smgmt.io/bindingtype: rolebinding` can now be included in the ClusterRole definition file. This creates RoleBindings on the fly in all namespaces associated with the Workspace Admin base role.

Note

This feature was previously available only for Namespace Admin roles; with this release, it has been extended to Workspace Admin roles.
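A ClusterRole using this label might look like the manifest generated below, which grants the read-only access (`get`, `list`, `watch` on pods and secrets) from the earlier example. The role name `ws-readonly` and the `read_only_cluster_role` helper are hypothetical; the manifest is emitted as JSON, which Kubernetes accepts alongside YAML.

```python
import json


def read_only_cluster_role(name: str) -> dict:
    """Build a read-only ClusterRole manifest carrying the binding-type label."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRole",
        "metadata": {
            "name": name,
            # Per this release note, this label makes Rafay create RoleBindings
            # in each namespace associated with the Workspace Admin base role.
            "labels": {"k8smgmt.io/bindingtype": "rolebinding"},
        },
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group (pods, secrets)
                "resources": ["pods", "secrets"],
                "verbs": ["get", "list", "watch"],
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(read_only_cluster_role("ws-readonly"), indent=2))
```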

This table summarizes the various scenarios and the resulting behavior.

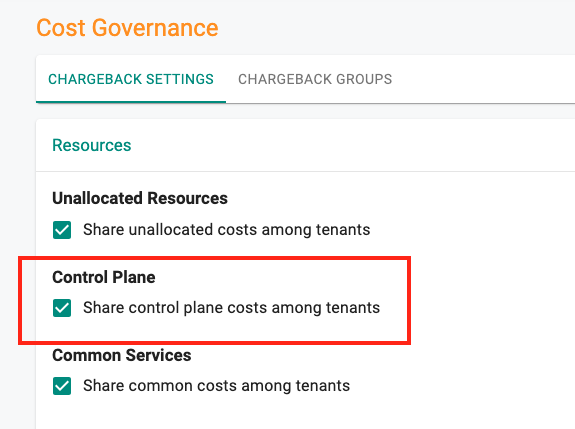

Cost Management

Control Plane Costs

With this release, chargeback reports can be configured to include control plane costs and distribute them among the tenants sharing the cluster.
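As one illustration of how a shared control plane cost could be split, the sketch below allocates it in proportion to a per-tenant usage weight, falling back to an even split when no usage is recorded. This proportional model, the `distribute_control_plane_cost` helper, and the tenant names are assumptions; the release note does not describe the allocation method Rafay uses.

```python
def distribute_control_plane_cost(total_cost: float, usage: dict) -> dict:
    """Split a shared control plane cost among tenants (hypothetical model).

    usage maps tenant name -> usage weight (e.g. share of requested CPU).
    """
    if not usage:
        return {}  # no tenants to charge
    total_usage = sum(usage.values())
    if total_usage == 0:
        # No usage data at all: fall back to an even split.
        even = total_cost / len(usage)
        return {tenant: even for tenant in usage}
    # Proportional split: each tenant pays its fraction of total usage.
    return {tenant: total_cost * u / total_usage for tenant, u in usage.items()}
```

For example, a cost of 100.0 shared by tenants with weights 6 and 2 splits into 75.0 and 25.0.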

User Management¶

Organizations with a large number of users, groups, and roles will see significantly faster load times for listing and searching.

v2.6 Bug Fixes

| Bug ID | Description |
|---|---|
| RC-32872 | ZTKA: Custom role does not work when the base role is Org Read Only |
| RC-33175 | UI: When selecting the Environment Template User role to assign to a group, all other selected roles are automatically deselected |
| RC-33698 | Namespace Admin role is unable to deploy workloads when the namespace is in a terminating state |
| RC-30728 | Upstream K8s: When adding a new node as part of a Day 2 operation, node labels and taints are not accepted |
| RC-33356 | EKS: Cluster provisioning enters an infinite loop if blueprint sync fails during provisioning via the Terraform interface |
| RC-33361 | Backup and Restore: UI shows the old agent name even when a new data agent is deployed in the same cluster |
| RC-33673 | ClusterRoleBinding of a Project Admin role user gets deleted for an IdP user with multiple group associations and roles, including the Org Read Only role |
| RC-32592 | MKS: Replace ntpd with systemd's timesyncd |
| RC-33845 | EKS: "No bootstrap agents found" error raised during the drain process |
| RC-33702 | MKS: Node taints are not applied when a failed rctl apply attempt is retriggered from the UI |