Sept

v1.1.35 - Terraform Provider

30 Sep, 2024

An updated version of the Terraform provider is now available.

This release includes enhancements around the resources listed below:

Existing Resources

- rafay_aks_cluster: Added support for the OIDC profile, the workload identity profile, and auto-upgrade channels. The OIDC and workload identity flags can be enabled in this resource, which works in conjunction with the workload identity resource described below.

- Documentation: For more details, refer to the Terraform documentation.

- rafay_aks_cluster_v3: Added support for the OIDC profile, the workload identity profile, and auto-upgrade channels. The OIDC and workload identity flags can be enabled in this resource, which works in conjunction with the workload identity resource described below.

- Documentation: For more details, refer to the Terraform documentation.

New Resources

- rafay_aks_workload_identity: Workload identity support is now included, allowing users to manage this sub-resource as a separate Terraform resource that depends on the cluster resource. This separation makes workload identity easier to manage independently of the cluster resource.

- Documentation: For more details, refer to the Terraform documentation.

Import Support

Import support has been added for the resources listed here: Import Support.

v1.1.35 Terraform Provider Bug Fixes

| Bug ID | Description |
|---|---|
| RC-35635 | Support dependency for resource template hooks, workflow tasks, and environment template hooks. |
| RC-37088 | Providing an empty list of strings in set_string for Helm addon options crashes the Terraform plugin. |
| RC-35202 | Unable to import existing pipelines. |

v2.10 - SaaS

26 Sep, 2024

The section below provides a brief description of the new functionality and enhancements in this release.

Azure AKS

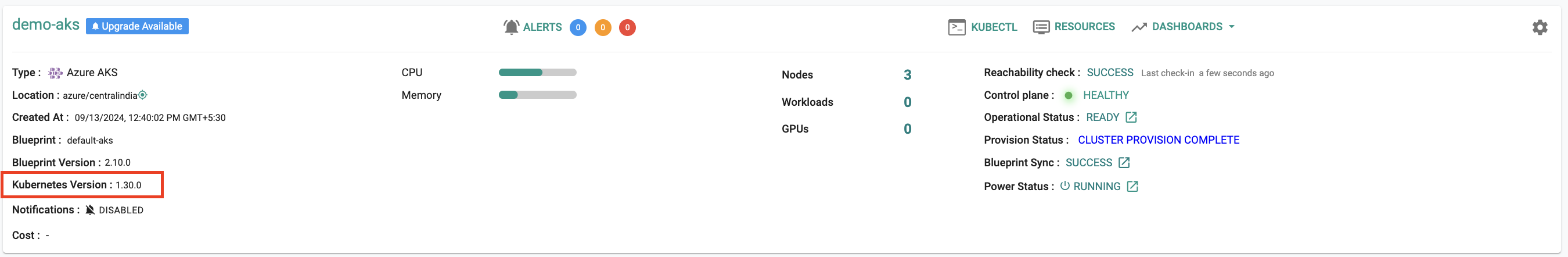

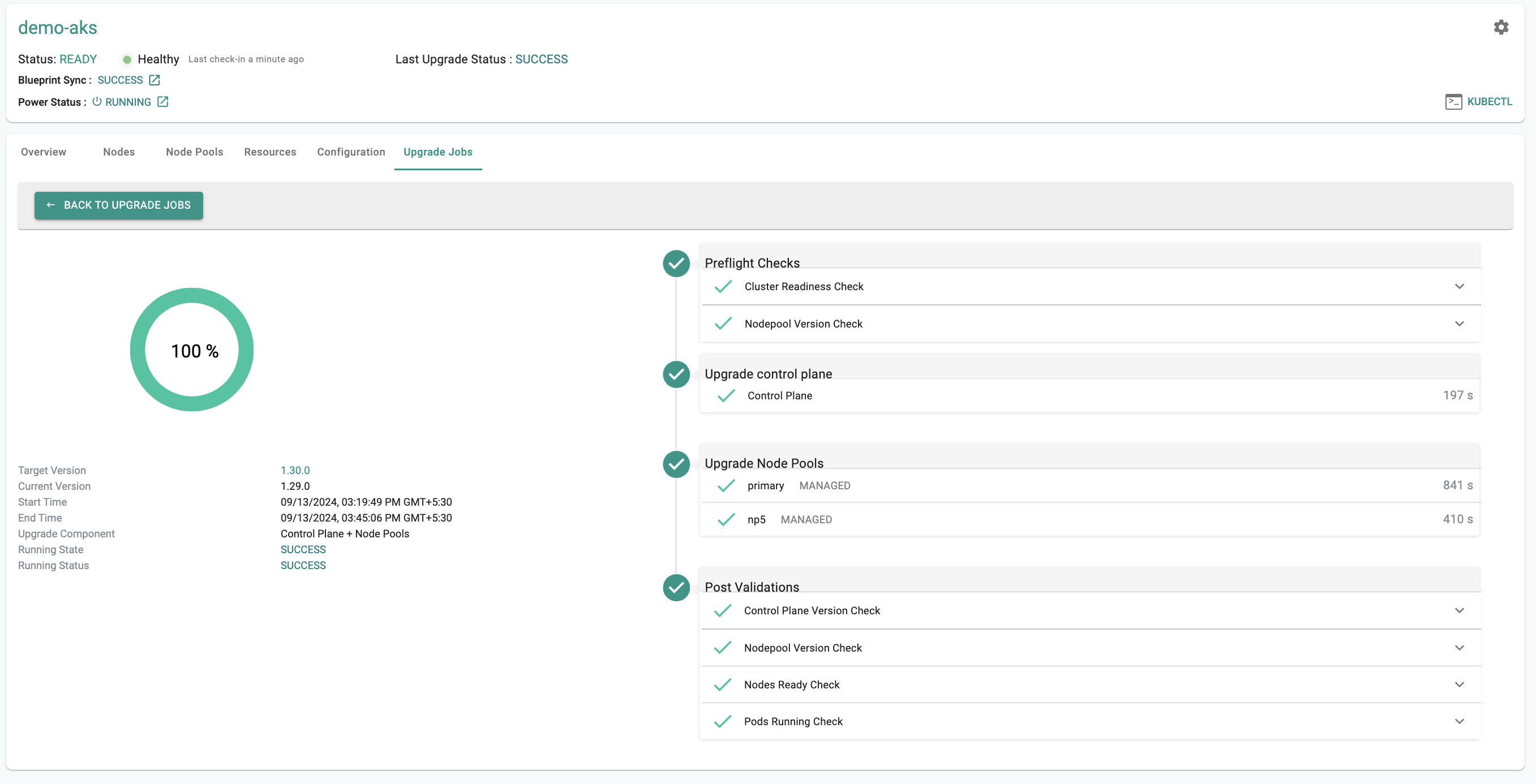

Kubernetes v1.30

New AKS clusters can now be provisioned based on Kubernetes v1.30.x. Existing clusters managed by the controller can be upgraded "in-place" to Kubernetes v1.30.x.

Cluster Auto-Upgrade

This release adds the ability to configure auto-upgrades for AKS clusters. Users can enable auto-upgrades for:

- Cluster Kubernetes version

- Node OS

For more information on this feature, please refer here.

Important

Support for this feature is available via the RCTL CLI, Swagger API, GitOps, and Terraform Provider. Support through the UI will be added in a future release.
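For comparison, AKS itself models these two choices as separate channels in a cluster's `autoUpgradeProfile`: `upgradeChannel` for the Kubernetes version and `nodeOSUpgradeChannel` for the node OS. The sketch below checks a pair of channel values against the values documented for that AKS API. The helper name is hypothetical, and the field names and format used in Rafay cluster specs (RCTL, GitOps or Terraform) are not shown in these notes and may differ.

```python
# Channel values documented for the AKS managed cluster autoUpgradeProfile.
# build_auto_upgrade_profile is a hypothetical helper, not part of RCTL or the Rafay API.
CLUSTER_UPGRADE_CHANNELS = {"none", "patch", "stable", "rapid", "node-image"}
NODE_OS_UPGRADE_CHANNELS = {"None", "Unmanaged", "SecurityPatch", "NodeImage"}


def build_auto_upgrade_profile(upgrade_channel: str, node_os_channel: str) -> dict:
    """Return an AKS-style autoUpgradeProfile dict, rejecting unknown channel values."""
    if upgrade_channel not in CLUSTER_UPGRADE_CHANNELS:
        raise ValueError(f"unknown cluster upgrade channel: {upgrade_channel!r}")
    if node_os_channel not in NODE_OS_UPGRADE_CHANNELS:
        raise ValueError(f"unknown node OS upgrade channel: {node_os_channel!r}")
    return {"upgradeChannel": upgrade_channel, "nodeOSUpgradeChannel": node_os_channel}


# Example: follow the "stable" Kubernetes channel and refresh node images automatically.
print(build_auto_upgrade_profile("stable", "NodeImage"))
```

Keeping the two values separate mirrors the two options listed above: one governs the cluster's Kubernetes version, the other only the node OS image.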

Workload Identity

Azure AD Workload Identity enables Kubernetes applications to access Azure cloud resources securely with Azure Active Directory based on annotated service accounts.
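As a rough illustration of the "annotated service accounts" mentioned above, the sketch below builds the two Kubernetes objects involved, following the conventions of the upstream Azure Workload Identity project: a ServiceAccount annotated with `azure.workload.identity/client-id`, and a Pod labeled `azure.workload.identity/use: "true"` that runs under that service account. The object names, namespace, image and client ID are placeholders. Creating the managed identity and its federated credential in Azure, and enabling the OIDC issuer on the cluster, are separate steps not shown here.

```python
import json

# Annotation and label keys used by Azure AD Workload Identity.
CLIENT_ID_ANNOTATION = "azure.workload.identity/client-id"
USE_LABEL = "azure.workload.identity/use"


def service_account(name: str, namespace: str, client_id: str) -> dict:
    """Build a ServiceAccount manifest annotated with a managed identity client ID."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {CLIENT_ID_ANNOTATION: client_id},
        },
    }


def pod(name: str, namespace: str, sa_name: str, image: str) -> dict:
    """Build a Pod manifest that opts in to workload identity via its label."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "labels": {USE_LABEL: "true"},
        },
        "spec": {
            "serviceAccountName": sa_name,
            "containers": [{"name": "app", "image": image}],
        },
    }


if __name__ == "__main__":
    # Placeholder values: substitute your own identity client ID and image.
    sa = service_account("workload-sa", "default", "00000000-0000-0000-0000-000000000000")
    p = pod("demo", "default", "workload-sa", "example.com/app:latest")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [sa, p]}, indent=2))
```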

Support is being added to enable Workload Identity capabilities for both Day 0 and Day 2 operations.

Important

Support for this feature is available via the RCTL CLI, V3 Swagger API, and Terraform Provider. Support through the UI will be added in a future release.

To learn more about the Workload Identity concept and its benefits, you can refer to the Workload Identity Blog.

For more information on this feature, please refer here.

For known issues affecting the Workload Identity feature in the 2.10 release, please refer to Known Issues.

Upstream Kubernetes for Bare Metal and VMs

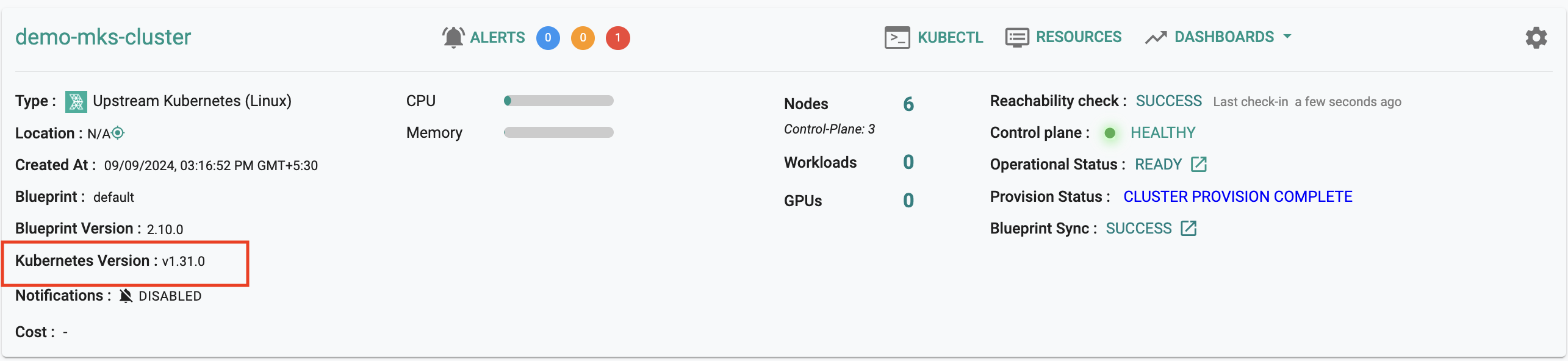

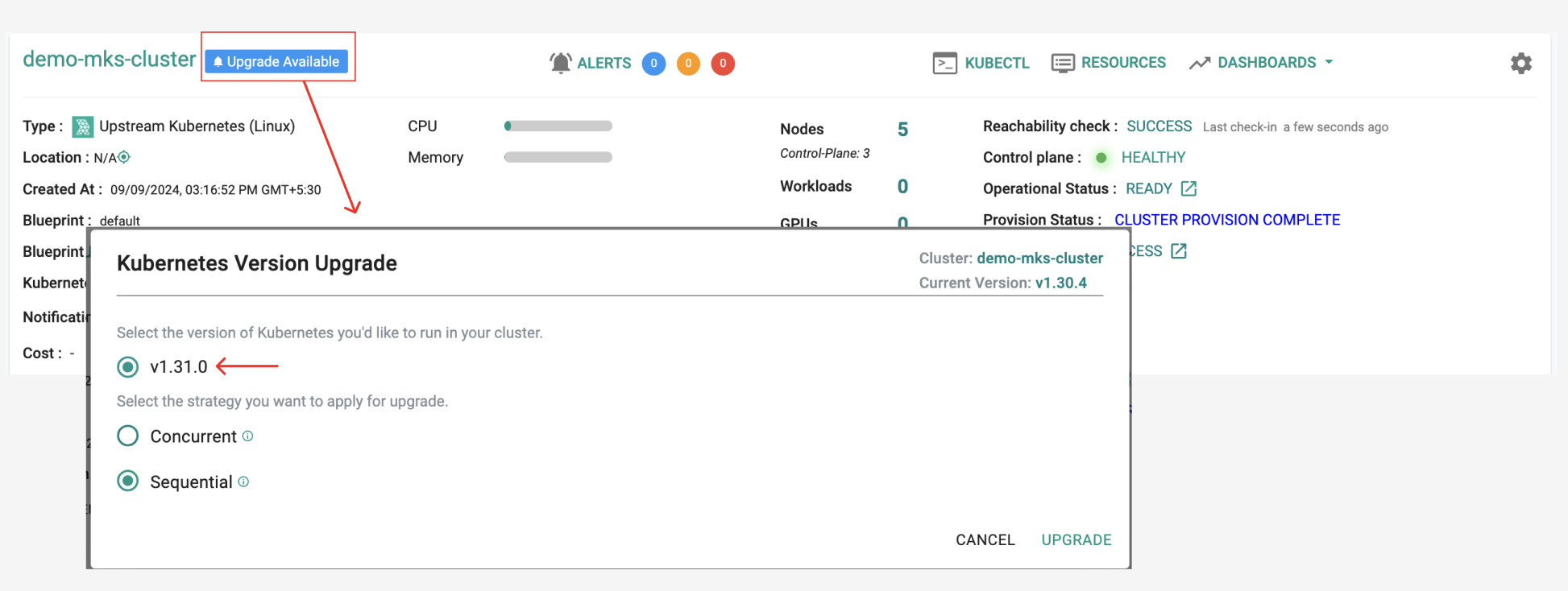

Kubernetes v1.31

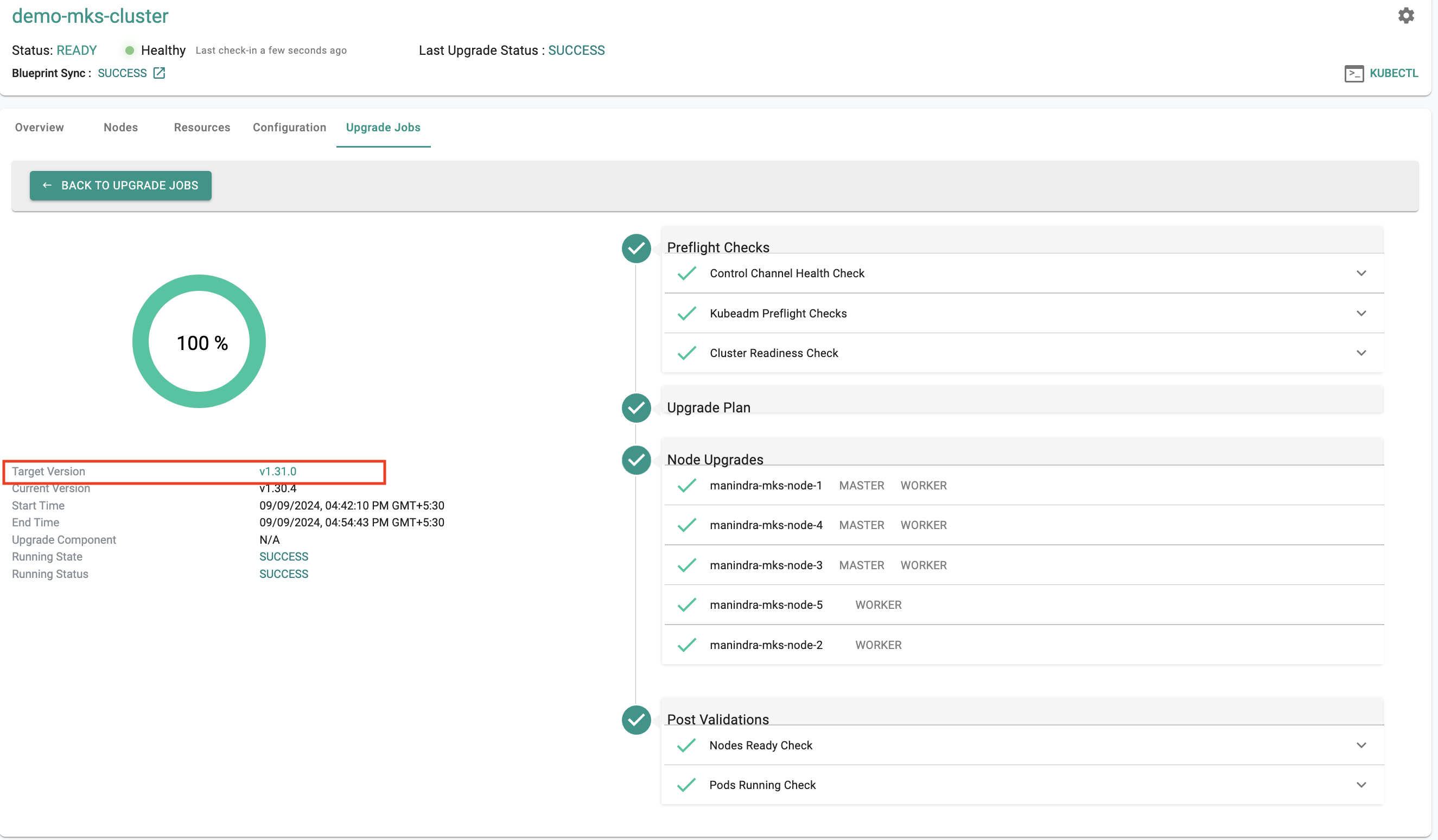

New Rafay MKS clusters based on upstream Kubernetes can now be provisioned based on Kubernetes v1.31. Existing clusters managed by the controller can be upgraded "in-place" to Kubernetes v1.31.

CNCF Conformance

Upstream Kubernetes clusters based on Kubernetes v1.31 (and prior Kubernetes versions) will be fully CNCF conformant.

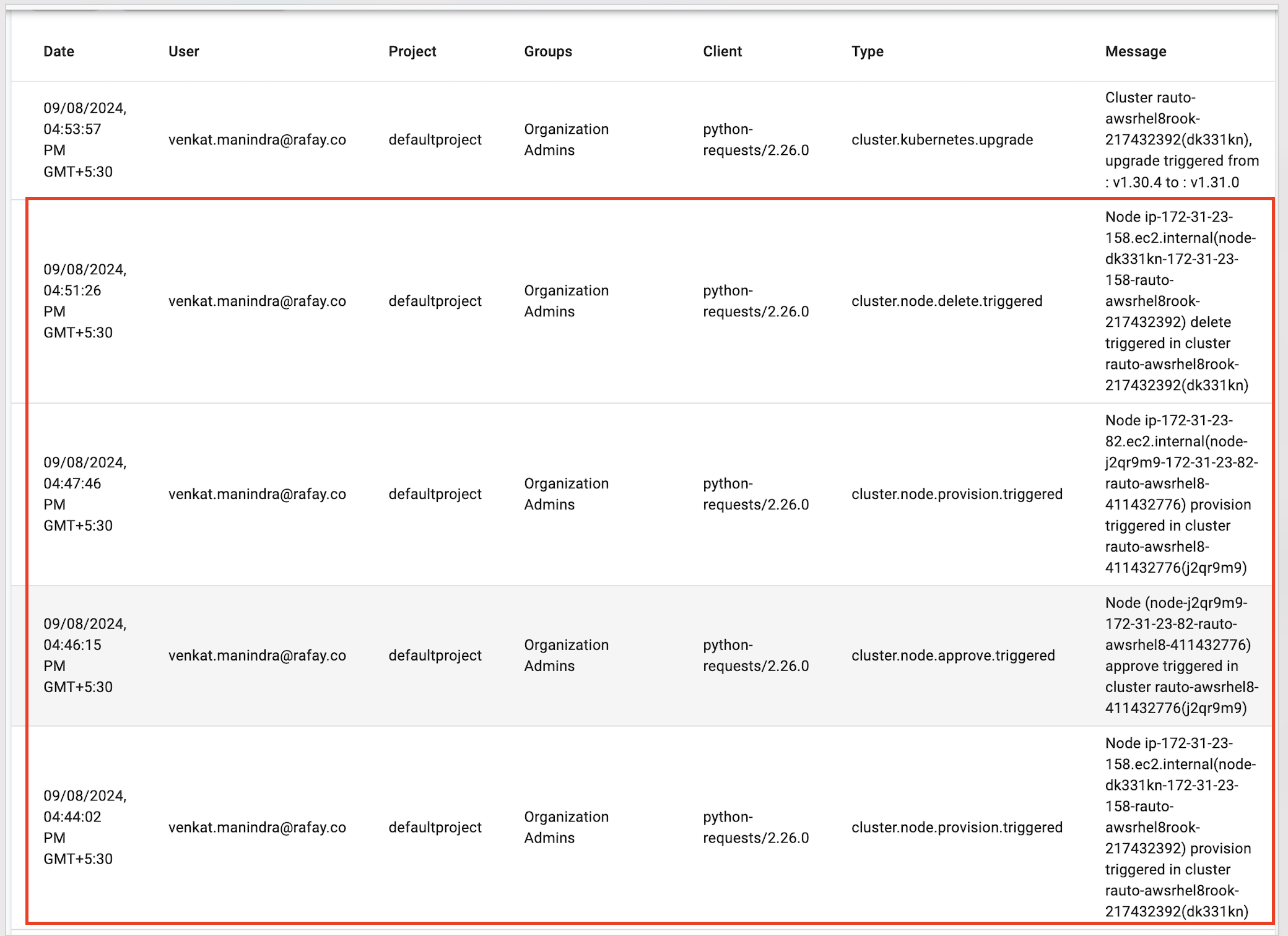

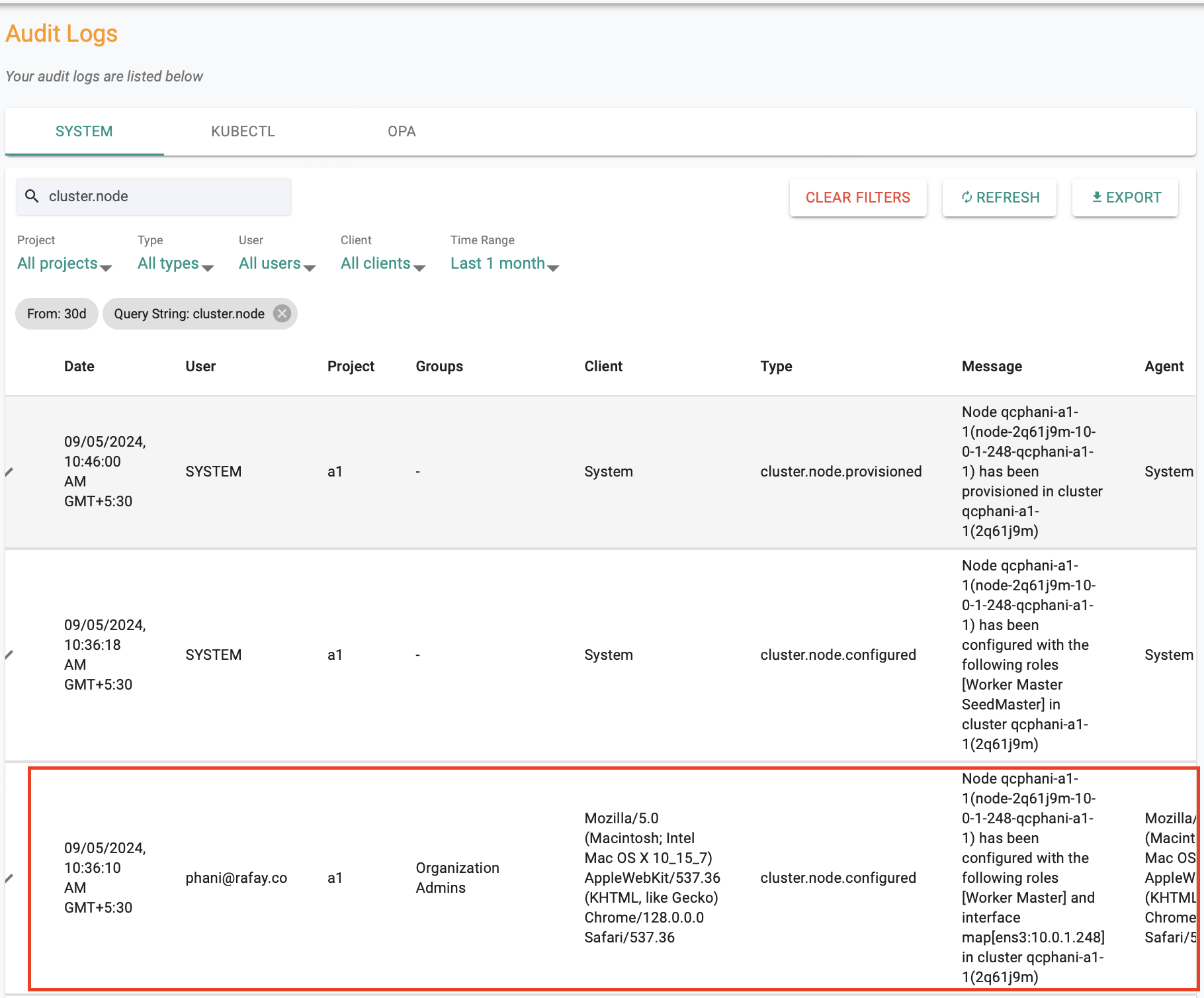

Node Audits

Audit logs will be enhanced to include node-related events, capturing node discovery, approval, rejection, and deletion. There will be two types of audits:

- System Generated Audits: These occur when a node's status is updated by the system.

- User Generated Audits: These capture actions taken by users.

These improvements will provide a comprehensive view of all significant node management activities.

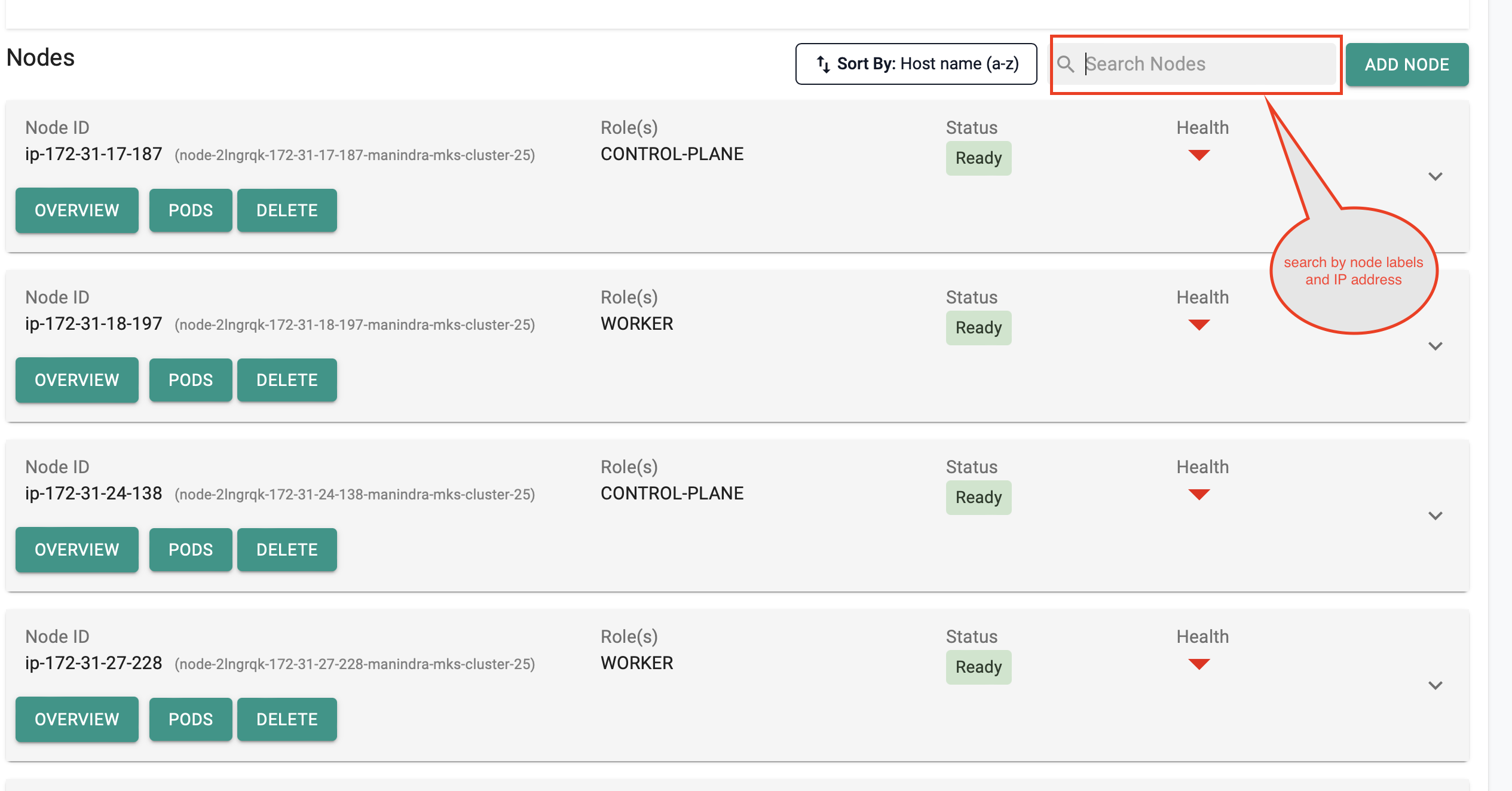

Enhanced Node Search Functionality

Users can now search for nodes by node labels and node private IP addresses, making it easier to locate specific nodes. This enhancement streamlines the search process and improves the overall user experience.

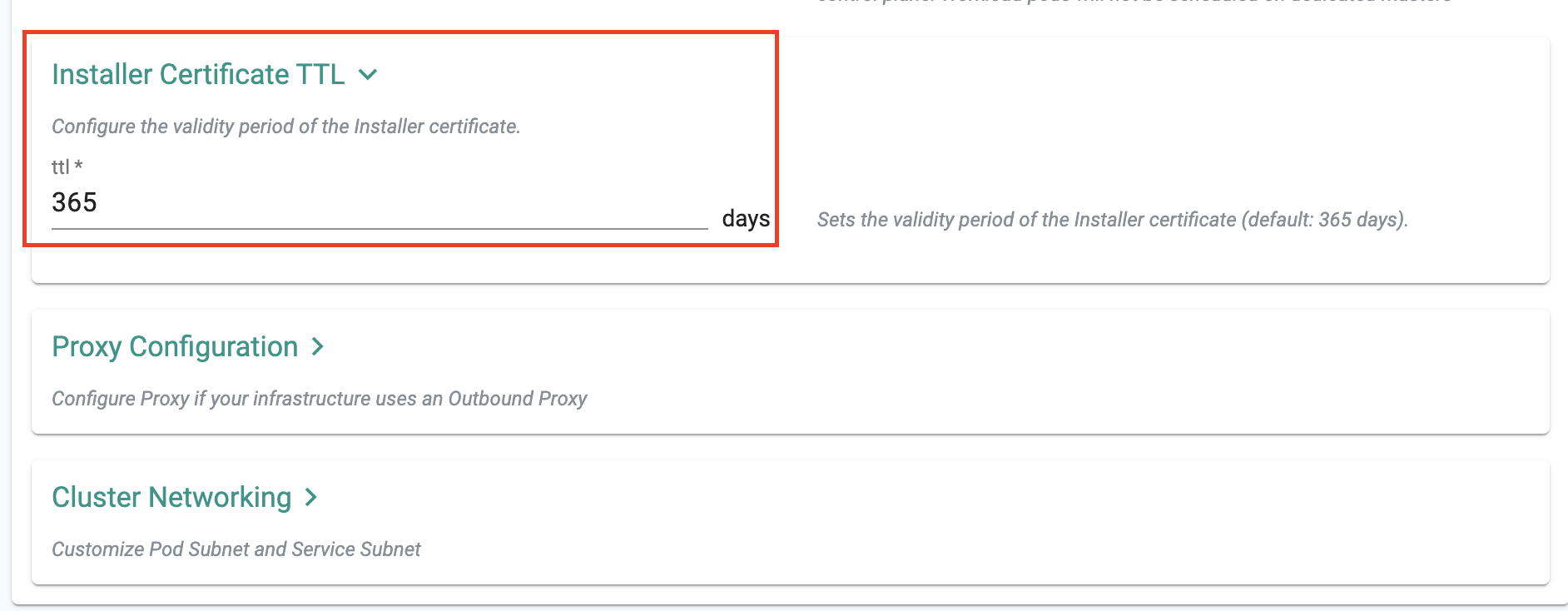

Installer Certificate TTL

In this release, we have introduced the ability for users to set the TTL for the Conjurer certificate required to install the agent on nodes. Previously, the certificate used by Conjurer had a preconfigured timeout, leaving users without control over its expiration.

Users can now configure the TTL for the Conjurer certificate. Once the TTL expires, the certificate is no longer accepted. Users must then renew the certificate, copy the updated Conjurer command (which includes the new certificate content), and run that command again on the node.

This enhancement gives users greater control over the Conjurer certificate lifecycle and tightens security for agent installations.

Day 0 Support

When provisioning a cluster, users can specify the TTL in the cluster form, as shown below.

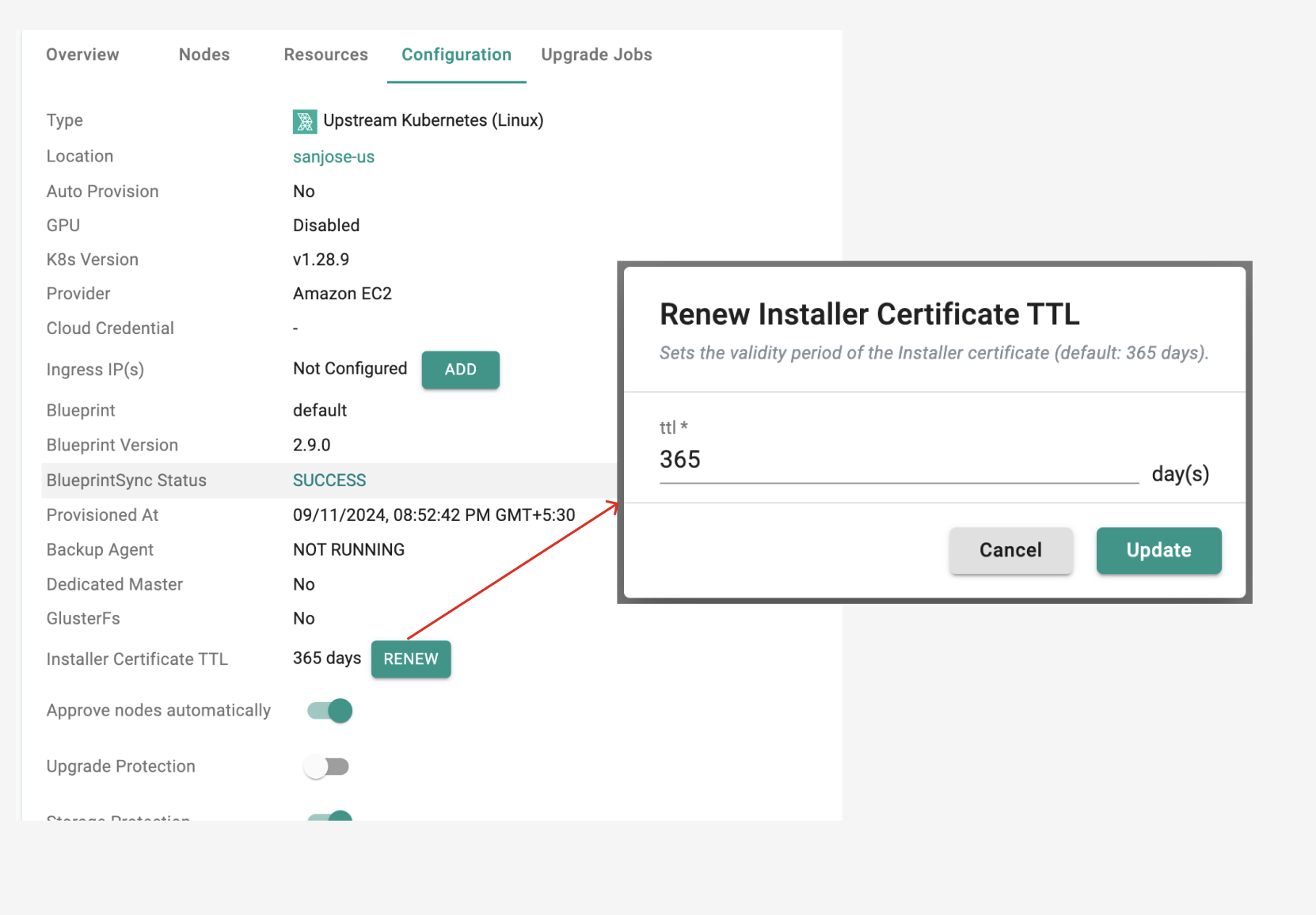

Day 2 Support

To renew the certificate TTL, users can go to the cluster view page in the UI and use the configuration table, as shown below.

If the certificate is expired and the user is still using the old certificate when running the Conjurer installer, it will throw the following error:

Error: Expired Certificate. Please rotate your Conjurer certificate, then try again.
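The expiry rule described in this section amounts to a time comparison against the issue time plus the TTL. The sketch below models it in Python under stated assumptions: the issue time and TTL are known, `check_certificate` and `ExpiredCertificateError` are hypothetical names, a certificate is treated as expired from the exact moment its TTL elapses, and nothing here reflects how Conjurer actually validates certificates.

```python
from datetime import datetime, timedelta, timezone

# Error text as shown in the release notes for an expired Conjurer certificate.
EXPIRED_MESSAGE = "Expired Certificate. Please rotate your Conjurer certificate, then try again."


class ExpiredCertificateError(Exception):
    """Hypothetical error type for a certificate used past its TTL."""


def check_certificate(issued_at: datetime, ttl: timedelta, now: datetime) -> datetime:
    """Return the expiry time if the certificate is still valid at `now`.

    Assumption: the certificate is rejected from the moment issued_at + ttl is reached.
    """
    expires_at = issued_at + ttl
    if now >= expires_at:
        raise ExpiredCertificateError(EXPIRED_MESSAGE)
    return expires_at


issued = datetime(2024, 9, 1, tzinfo=timezone.utc)
ttl = timedelta(days=7)
print(check_certificate(issued, ttl, issued + timedelta(days=3)))  # 2024-09-08 00:00:00+00:00
try:
    check_certificate(issued, ttl, issued + timedelta(days=8))
except ExpiredCertificateError as err:
    print(f"Error: {err}")  # same text as the installer error above
```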

Important

Support for this feature is available via UI, RCTL CLI and Swagger API.

For more information on this feature, please refer here.

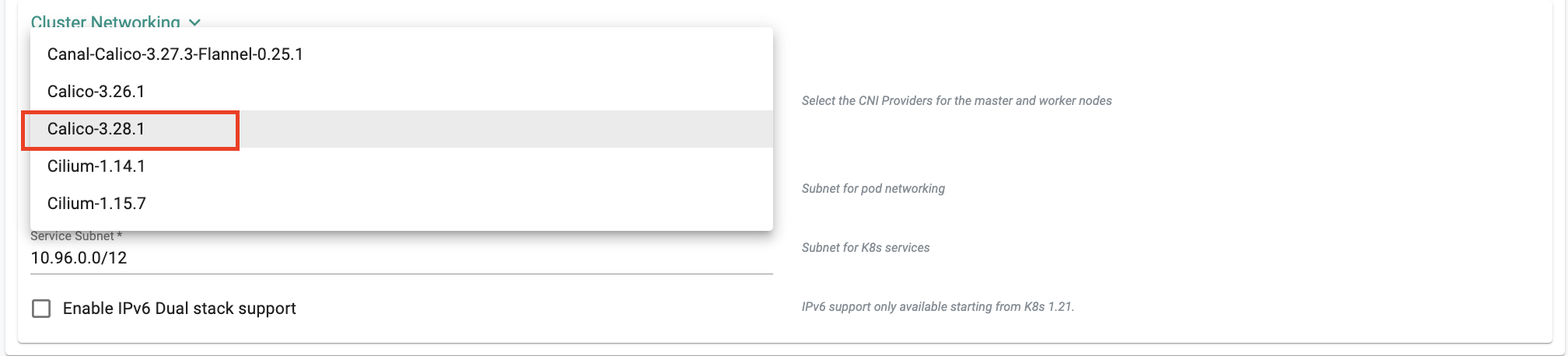

Calico Version

As part of this release, we have added Calico version 3.28.1. It is supported for new cluster provisioning only, allowing users to leverage this version of Calico.

Amazon EKS and Azure AKS

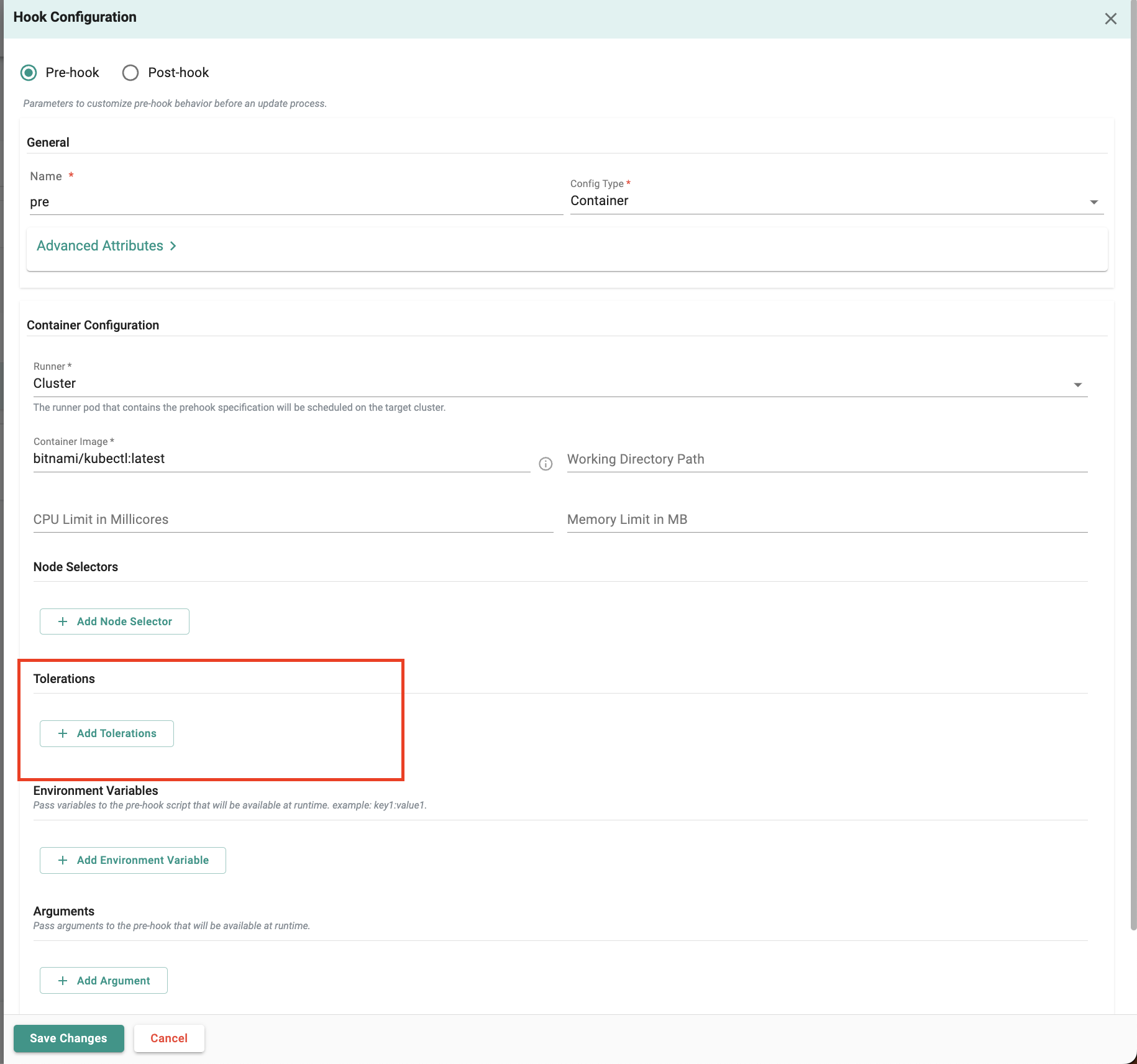

Toleration Support for FleetOps Pre-Hook/Post-Hook Configuration

Support for tolerations is being added to FleetOps pre-hook and post-hook configuration. This enhancement allows users to specify tolerations for hooks so that they can get scheduled on specific nodes as desired.

Important

Support for this feature will be available via the UI, RCTL CLI, GitOps, and API. Support for the Terraform Provider will be added in a future release. Additionally, Node Selectors and Tolerations are applicable only when the Runner type is set to Cluster.

For more information on this feature, please refer to Fleet Tolerations here.
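Tolerations are a standard Kubernetes scheduling mechanism: a node carries taints, and only pods whose tolerations match those taints can be scheduled on it. The Python sketch below mirrors the upstream Kubernetes matching rules to show when a hook pod could land on a tainted, reserved node. It covers taints only (node selectors, also mentioned above, are a separate filter); the taint key `dedicated` and value `hooks` are hypothetical, and this is not the FleetOps configuration format.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Taint:
    key: str
    value: str = ""
    effect: str = "NoSchedule"  # NoSchedule, PreferNoSchedule or NoExecute


@dataclass(frozen=True)
class Toleration:
    key: str = ""  # empty key matches any taint key (Kubernetes only allows this with Exists)
    operator: str = "Equal"  # "Equal" (the Kubernetes default) or "Exists"
    value: str = ""
    effect: str = ""  # empty effect matches every taint effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Mirror Kubernetes' rule for whether one toleration matches one taint."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator in ("", "Equal"):
        return tol.value == taint.value
    return tol.operator == "Exists"


def can_schedule(taints: list[Taint], tolerations: list[Toleration]) -> bool:
    """A pod may be placed on a node only if every NoSchedule/NoExecute taint is tolerated."""
    hard = [t for t in taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in tolerations) for t in hard)


# Hypothetical reserved pool tainted so that only hook workloads run there.
reserved_pool = [Taint("dedicated", "hooks", "NoSchedule")]
hook_tolerations = [Toleration("dedicated", "Equal", "hooks", "NoSchedule")]
print(can_schedule(reserved_pool, hook_tolerations))  # True
print(can_schedule(reserved_pool, []))  # False
```

PreferNoSchedule taints are ignored by `can_schedule` because Kubernetes treats them as a soft preference rather than a hard scheduling constraint.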

Blueprints

Cluster Overrides

It will be possible to share cluster overrides across projects with this enhancement. The sharing mechanism will be similar to that of other platform constructs (e.g. blueprints, add-ons). Only Org Admins will be able to share cluster overrides across projects. This enhancement applies to both add-on and workload overrides. Audits will be captured whenever overrides are shared (or unshared) across projects.

As part of this, the blueprint sync page is also being enhanced to show information on overrides applied to add-ons as part of the blueprint apply operation.

Policy Management

OPA Gatekeeper

Support for OPA Gatekeeper v3.16.3 is being introduced with this release. You can either update the base blueprint to 2.10 or create an installation profile selecting 3.16.3 as the version. On a blueprint sync operation, v3.16.3 will be installed on the clusters.

Environment Manager

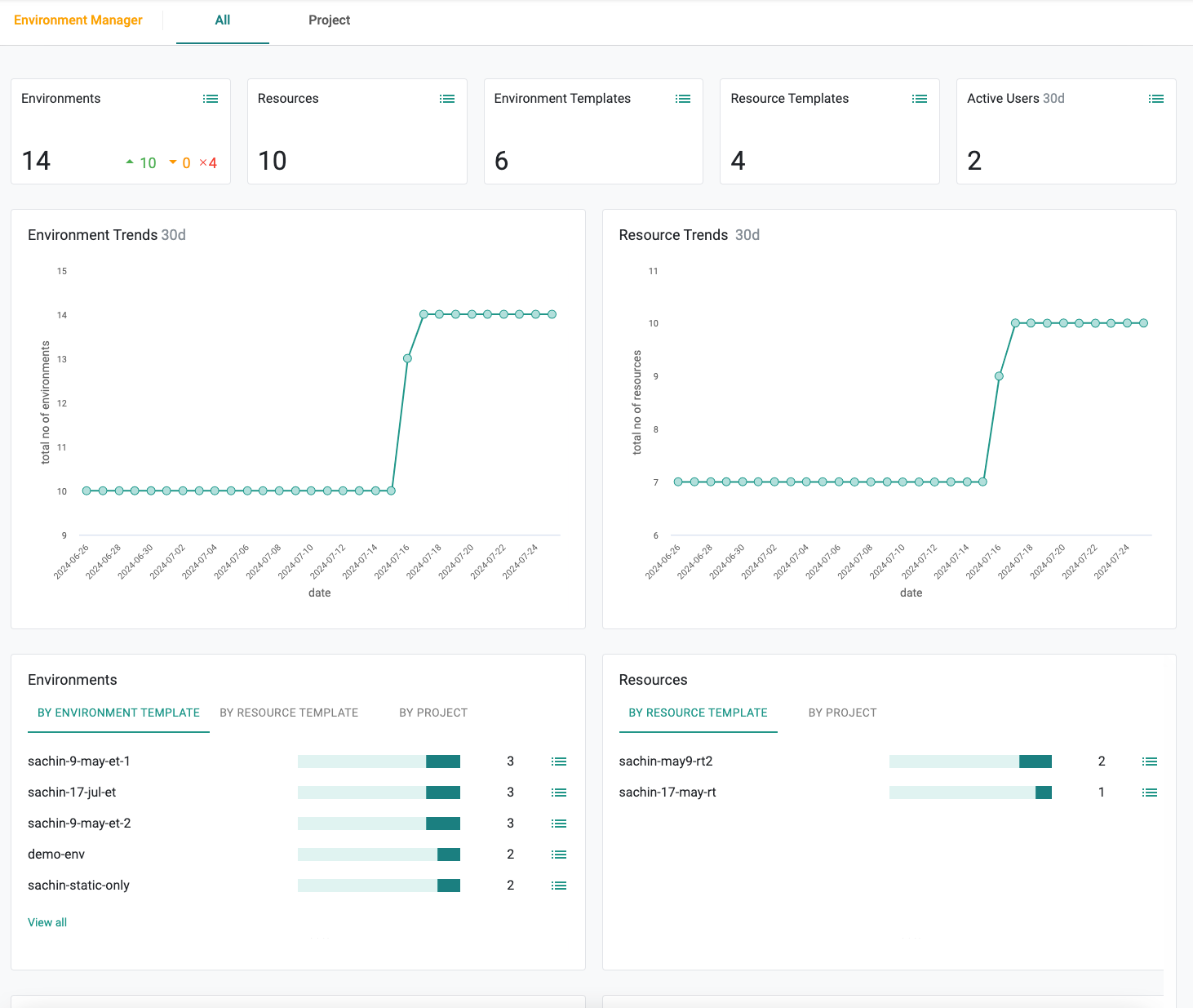

Dashboards

Centralized dashboards provide ongoing visibility into environments across teams, accounts and clouds, enabling accurate tracking of cost, utilization and ownership. Dashboards make it easy to answer questions such as:

- How many environments are running across the organization? Are any of them in a failed state?

- How long have the environments been running?

- Who are the users who created the environments?

- Are the environments being actively used?

- What templates are the environments based on?

- How has the number of environments been trending over a period of time?
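To make a few of the questions above concrete, the sketch below computes some of the answers (running and failed environments, their age, who created them, which templates they use) from a list of environment records. The `Environment` record and its fields are hypothetical and do not reflect the Environment Manager data model or API.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Environment:
    """Hypothetical environment record; field names are illustrative only."""
    name: str
    status: str  # e.g. "running" or "failed"
    created_by: str
    template: str
    created_at: datetime


def summarize(envs: list[Environment], now: datetime) -> dict:
    """Answer several of the dashboard questions from raw environment records."""
    return {
        "running": sum(1 for e in envs if e.status == "running"),
        "failed": [e.name for e in envs if e.status == "failed"],
        "age_days": {e.name: (now - e.created_at).days for e in envs},
        "by_creator": Counter(e.created_by for e in envs),
        "by_template": Counter(e.template for e in envs),
    }


now = datetime(2024, 9, 26, tzinfo=timezone.utc)
envs = [
    Environment("dev-1", "running", "alice", "web-app", datetime(2024, 9, 1, tzinfo=timezone.utc)),
    Environment("qa-1", "failed", "bob", "web-app", datetime(2024, 9, 20, tzinfo=timezone.utc)),
]
print(summarize(envs, now))
```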

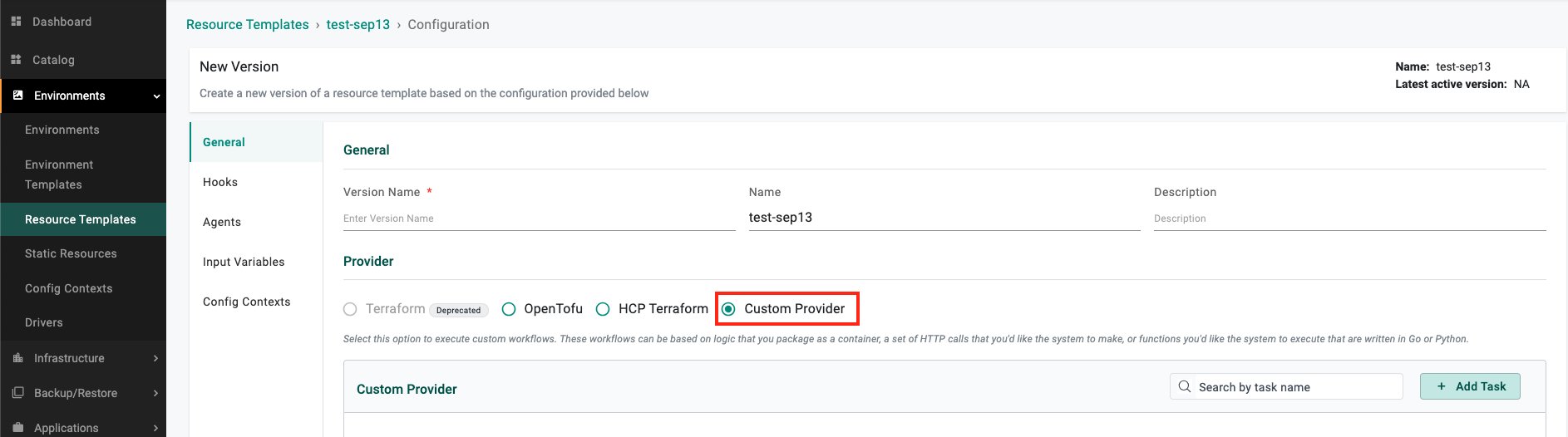

Custom Provider

A new provider option is being introduced for Resource Templates that will enable customers to execute custom workflows. These workflows can run logic that customers package as a container, functions written in Go or Python, or Bash scripts. For example, customers could write custom code that periodically captures a snapshot of the K8s resources running on a cluster for compliance purposes.
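The compliance example above might look roughly like the following in Python, one of the languages the text mentions. It is a sketch under assumptions: it shells out to `kubectl` (which must be installed and configured for the target cluster), the resource list in `KINDS` is arbitrary, and how the Custom Provider packages, invokes and schedules such code is not described here, so no Rafay-specific interface is shown.

```python
import json
import subprocess
from datetime import datetime, timezone
from pathlib import Path
from typing import Callable, Optional

# Resource kinds to capture; an arbitrary example list, adjust to your compliance scope.
KINDS = "deployments,statefulsets,daemonsets,services,configmaps"


def kubectl_get(kinds: str) -> str:
    """Return `kubectl get` output for all namespaces as JSON text."""
    result = subprocess.run(
        ["kubectl", "get", kinds, "--all-namespaces", "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout


def take_snapshot(
    out_dir: Path,
    fetch: Callable[[str], str] = kubectl_get,
    now: Optional[datetime] = None,
) -> Path:
    """Fetch the resources once and write them to a timestamped JSON file."""
    now = now or datetime.now(timezone.utc)
    data = json.loads(fetch(KINDS))
    out_dir.mkdir(parents=True, exist_ok=True)
    path = out_dir / f"snapshot-{now:%Y%m%dT%H%M%SZ}.json"
    path.write_text(json.dumps(data, indent=2, sort_keys=True))
    return path
```

A periodic run would call `take_snapshot(Path("snapshots"))` once per interval; the `fetch` parameter lets the function be exercised without a live cluster.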

Cost Management

AWS Cost Profile

It is now possible to specify the Use Cluster Credential option for Cloud Credential as part of the Custom Profile configuration. This will remove the need to configure multiple Cost Profiles/Blueprints in scenarios where there are clusters running across many AWS accounts. The Cloud Credential that has been configured for cluster provisioning will also be leveraged to fetch the custom pricing information for cost calculations.

Terraform Provider

Import

Import support is being added for the following resources:

- Add-ons

- Cluster Overrides

- Custom Role

- ZTKA Policy

- ZTKA Rule

Deprecation

Cluster Templates and EKSA BareMetal

The above features will no longer be available in the platform. Users are encouraged to leverage Product Blueprints based on Environment Manager for enhanced functionality and improved management capabilities.

Managed add-on: Ingress controller

The Ingress controller add-on is deprecated with this release. Users can use the catalog to continue bringing ingress as a custom add-on.

Bug Fixes

| Bug ID | Description |
|---|---|
| RC-34302 | Upstream K8s: Trigger approval process for nodes stuck in discovery failed state |
| RC-33946 | Unintuitive error message when the values file is missing in an add-on |
| RC-32138 | Pipeline status shows an incorrect error message on failure |
| RC-11268 | AKS: Recreating a cluster with the same rctl command after deleting it fails with error RoleAssignmentUpdateNotPermitted |
| RC-32749 | Upstream K8s: Ceph dashboard for object gateway is not working in Rook |
| RC-37340 | Upstream K8s: GPU information is not shown in the cluster card when multiple GPUs are available in the cluster |

v2.9 - SaaS

05 Sep, 2024

Note

There are no Terraform Provider changes with this release.

User Management

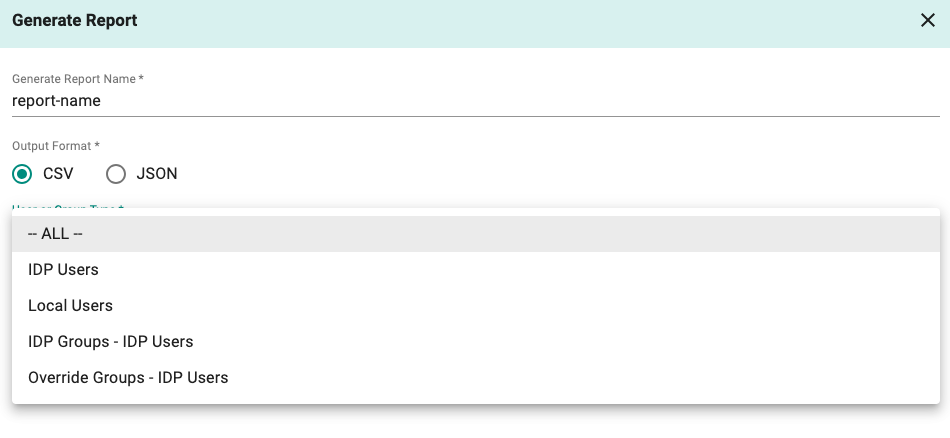

Access Report

Organizations may need to provide auditors with ongoing reports detailing the access that users have. The User Access Report makes it simple to generate such reports, either on demand or on a schedule. The reports can be downloaded in CSV or JSON format.

For more information on this feature, please refer here.

Auditor role

A new 'Auditor Admin' role has been introduced with this release. This role will be able to generate Access Reports and view/download Audit Logs.

Environment Manager

Tolerations

Support for tolerations is being added for Drivers so that the driver can be run in a specific pool of reserved nodes in a cluster.

Upstream Kubernetes for Bare Metal and VMs

Enhanced Node Provisioning

As part of this release, we have added an enhancement to allow high-end nodes with large memory and storage capacities to be seamlessly provisioned as part of the upstream Kubernetes cluster bring-up. This will enable the deployment of resource-intensive applications such as machine learning and AI applications that require high-performance hardware.

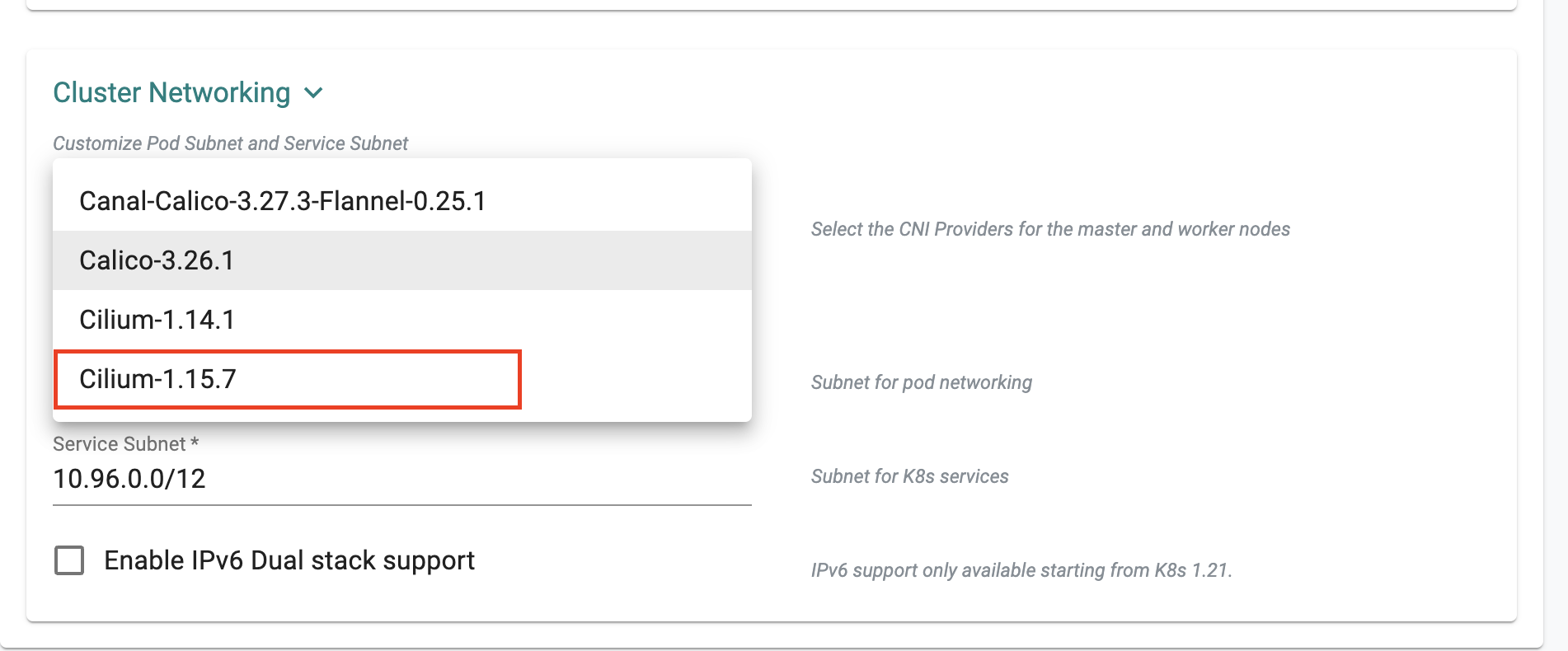

Cilium Version

As part of this release, we have added Cilium version 1.15.7. It is supported for new cluster provisioning only, allowing users to leverage this version of Cilium.

2.9 Release Bug Fixes

| Bug ID | Description |
|---|---|
| RC-36189 | Upstream K8s: Suppress CIDRNotAvailable events originating from Controller Manager on clusters when IPAM is handled by Calico CNI |
| RC-34325 | UI: Golden Blueprint is not available for selection as a base blueprint in shared projects |
| RC-36850 | OpenCost fetches Windows pricing instead of Linux pricing for Standard_D4as_v5 instance |
| RC-37011 | Upstream K8s: Node approval failing for nodes with high memory |
| RC-37076 | Upstream K8s: Disable offloading in Cilium VXLAN to avoid network delays |