Provision

Follow the instructions below if you wish to provision a managed Kubernetes cluster using the prepackaged qcow2 image in your OpenStack environment.

Step 1: Create Cluster

In this step, you will configure and create a cluster object in the Controller. This step generates new activation secrets for the cluster. You will use these secrets (optionally injecting them via the HEAT template) to bring up a VM on OpenStack.

As an Org Admin or Infrastructure Admin for a Project

- Login into the Web Console and go to Infrastructure > Clusters.

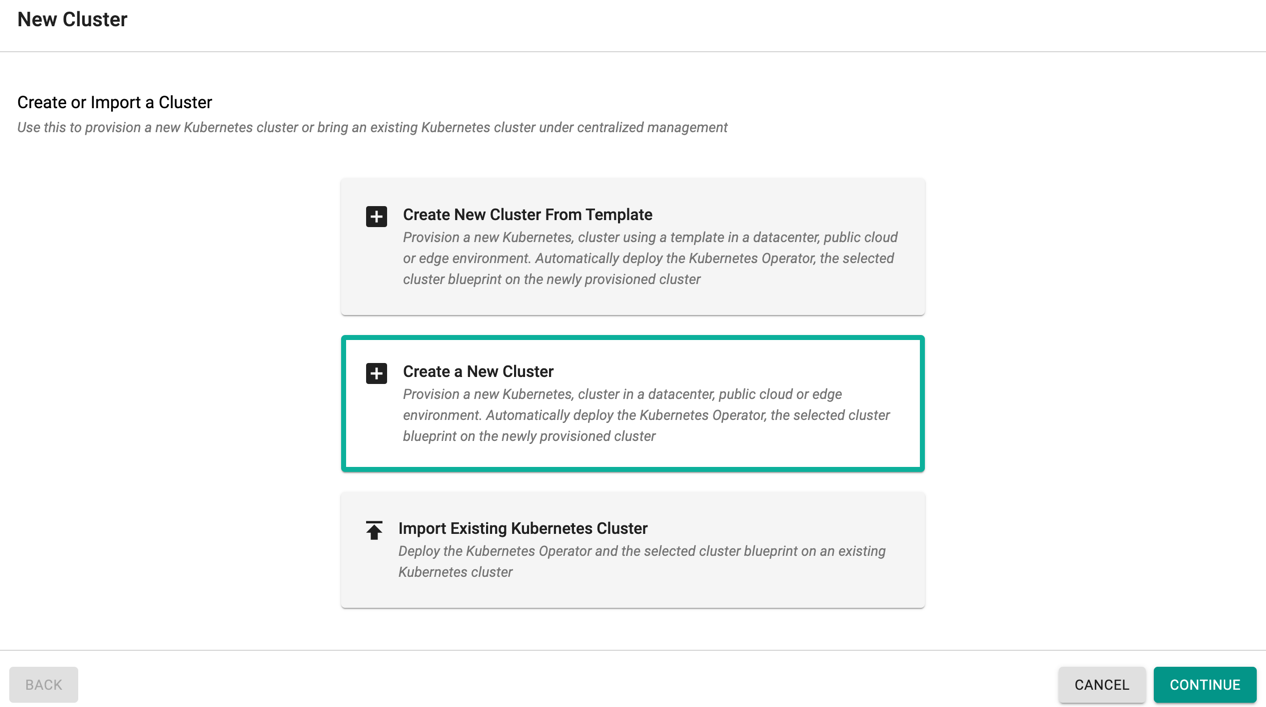

- Click on “New Cluster”.

- Select "Create a New Cluster" option

- Click "Continue" to go to the next configuration page

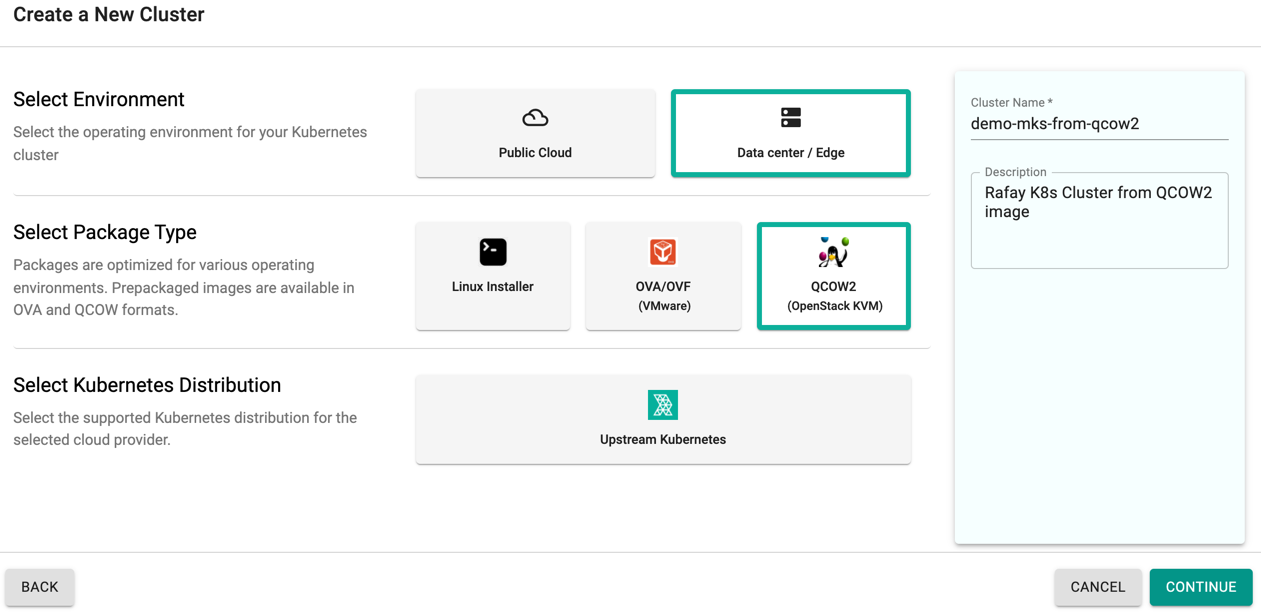

- Select "Data center/Edge" for Environment.

- Select "QCOW2" for Package Type.

- Provide a name for your cluster (underscores are not allowed in the name).

- Optionally, provide a description for the cluster.

- Click "Continue" to go to the next configuration page.
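The cluster name is reused later for the environment file and, ideally, the OpenStack stack name, so scripts that automate these steps may want to reject an invalid name up front. The minimal sketch below uses a hypothetical helper, is_valid_cluster_name, that checks only the rule stated above (no underscores) and rejects an empty name. Any other naming rules the Web Console enforces are not modeled.

```shell
# Hypothetical helper: checks a proposed cluster name against the one rule
# documented above (no underscores). An empty name is rejected as well.
# Other naming rules enforced by the Web Console are NOT checked here.
is_valid_cluster_name() {
  case "$1" in
    '' | *_*) return 1 ;;  # empty, or contains an underscore
    *) return 0 ;;
  esac
}

# Example usage
for name in edge-site-01 edge_site_01; do
  if is_valid_cluster_name "$name"; then
    echo "$name: ok"
  else
    echo "$name: invalid (underscores are not allowed)"
  fi
done
```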

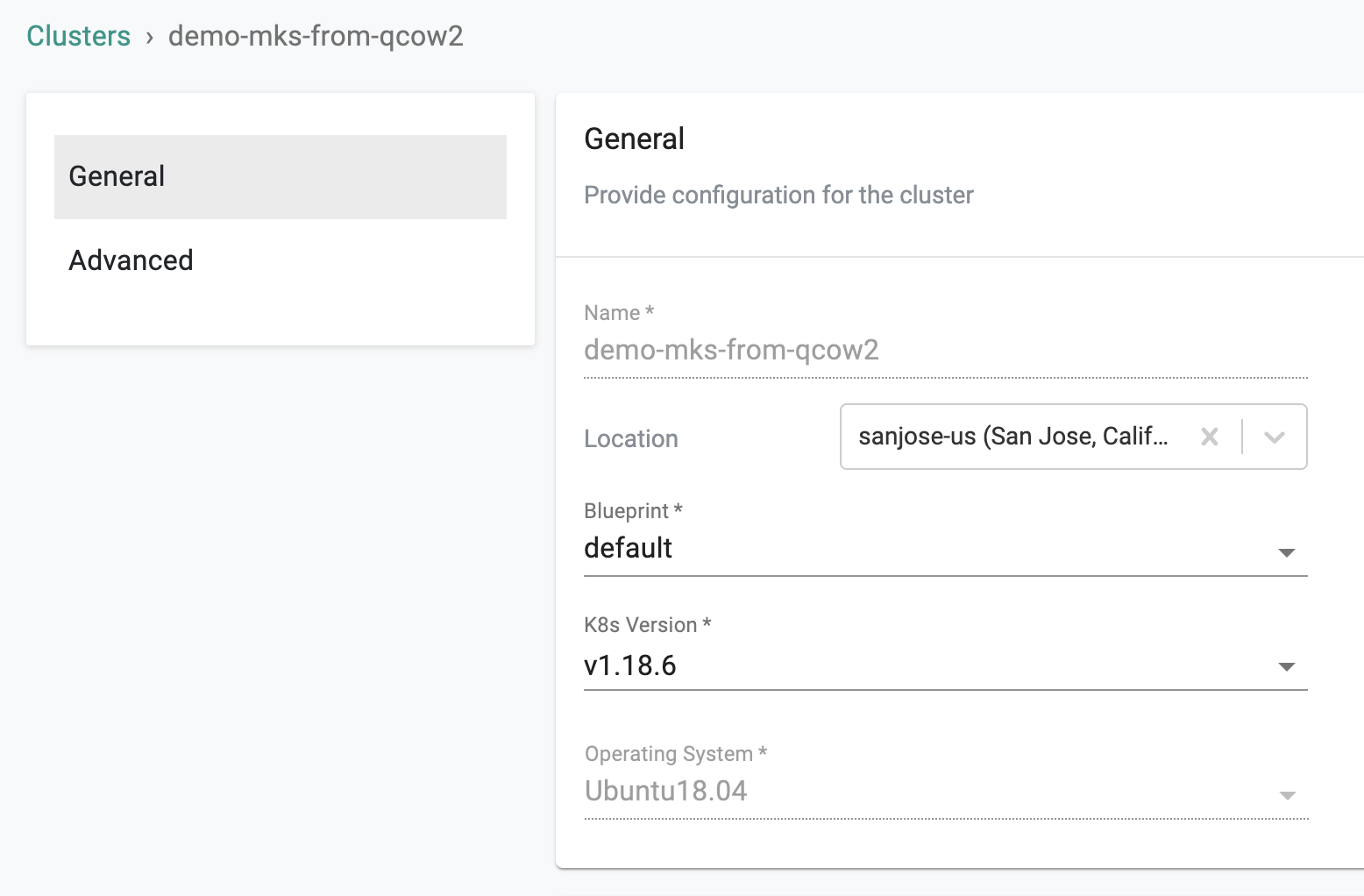

- In General settings, select a location from the "Location" drop-down.

- Select the cluster blueprint from the "Blueprint" drop-down.

- Select the Kubernetes version from the "K8s Version" drop-down.

- Select “Ubuntu 18.04” from the "Operating System" drop-down.

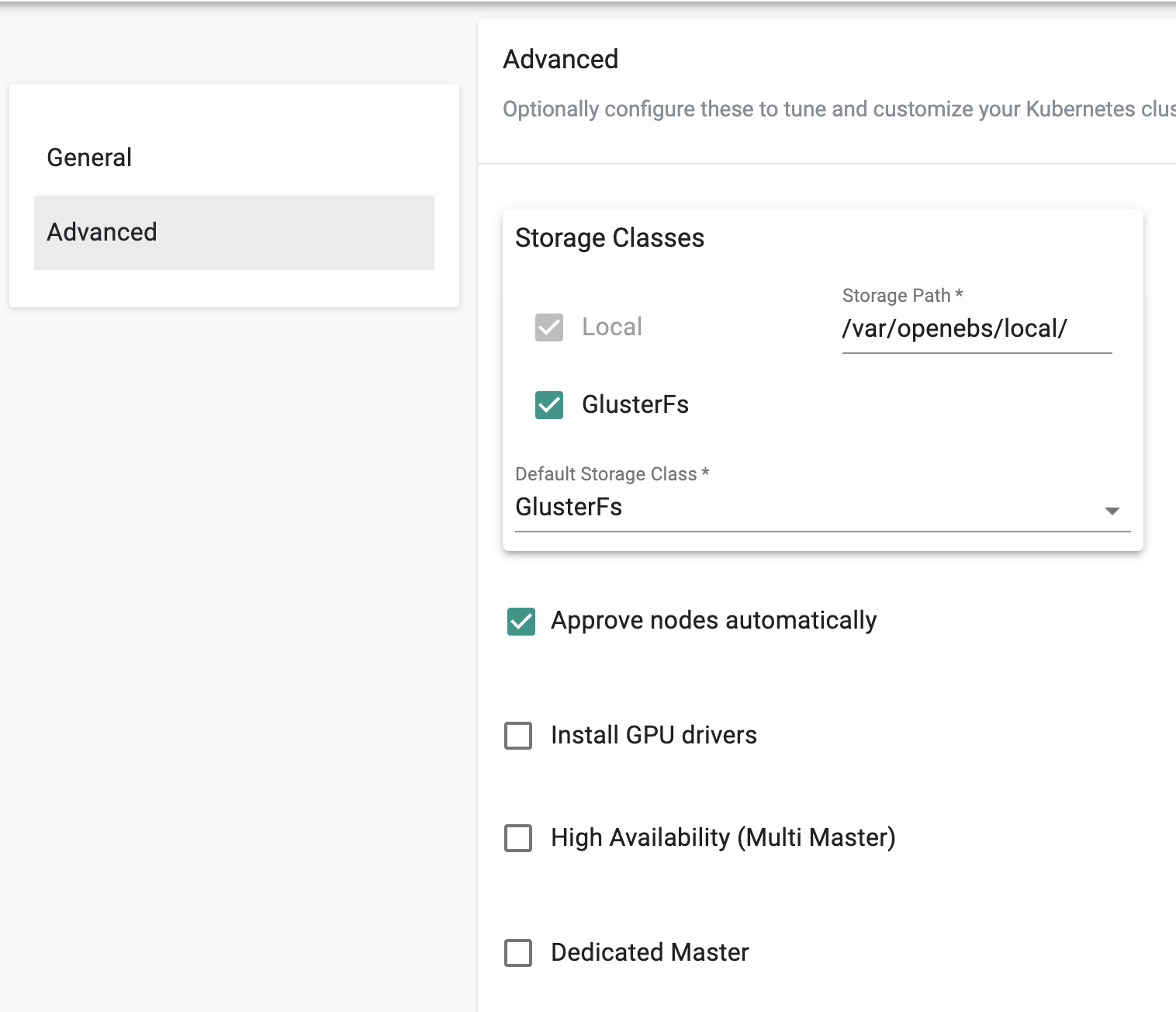

- In the "Advanced" settings, optionally change the "Storage Path" for local storage.

- Optionally, enable “GlusterFS” as a StorageClass. If you select this option, make sure to attach a raw, unformatted volume to your VM.

- Select the Default Storage Class from the drop-down.

- Enable the “Approve nodes automatically” option if required.

- Enable the "Install GPU driver" option if all of your nodes have GPUs.

- Enable "High Availability (Multi Master)" if you want to provision a multi-master cluster.

- Enable "Dedicated Master" if you want a dedicated master model for the cluster.

- Click "Continue" to create the cluster.

NOTE: Enabling automatic node approval avoids a manual approval step in the provisioning process. It is highly recommended for controlled, factory-type assembly environments.

Considerations

- By default, the provided QCOW2 image comes prepackaged with a Kubernetes version. If a different version is selected during provisioning, it will be downloaded from the Controller.

- Provisioning clusters in remote, low-bandwidth locations with unstable networks can be very challenging. Review how the retry and backoff mechanisms work by default and how they can be customized to suit your requirements.

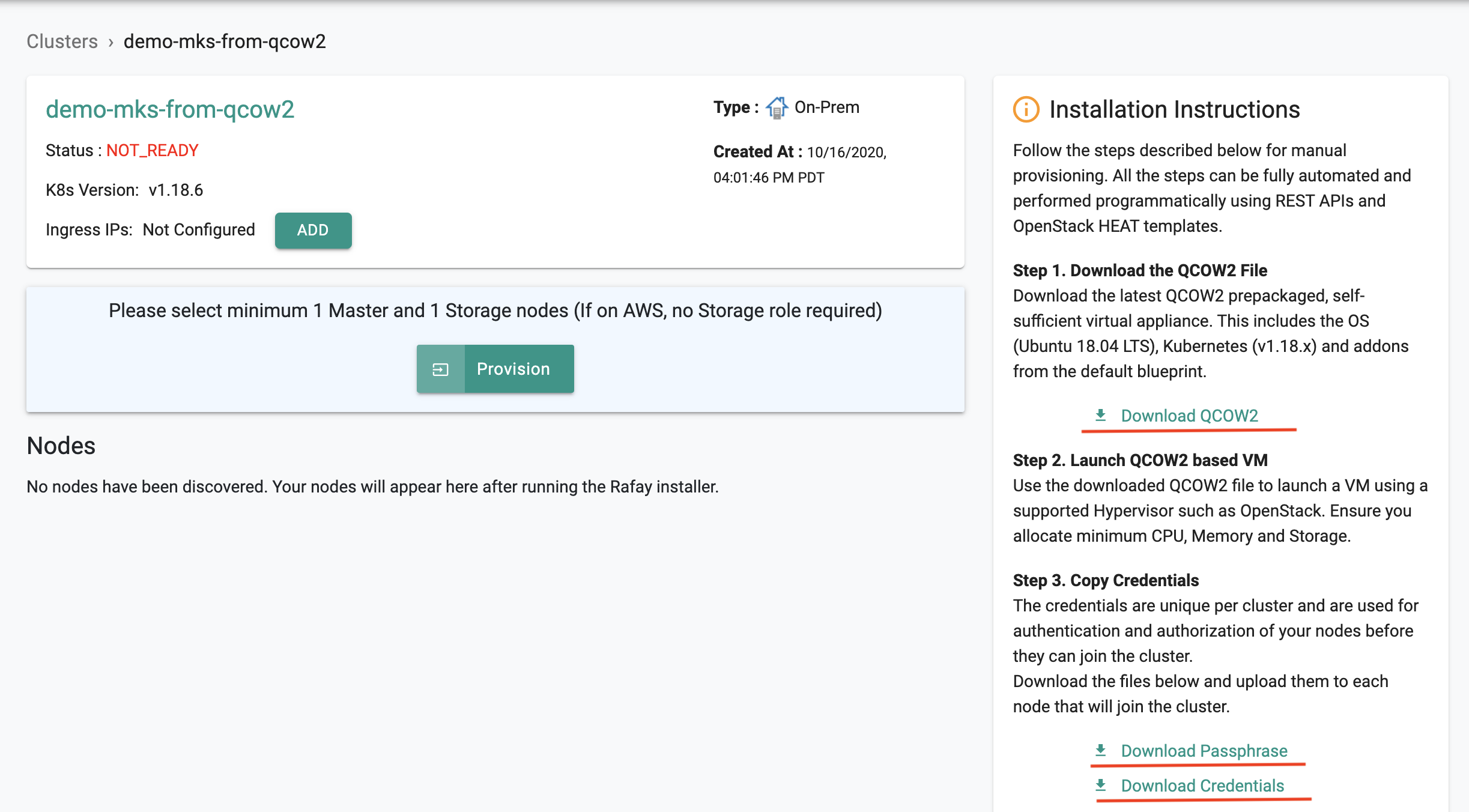

Step 2: Download Activation Secrets

Download the activation secrets (i.e., the credentials and passphrase files) from the node installation instructions. You will have two files that are unique to this cluster:

- "clustername"-credentials.pem

- "clustername"-passphrase.txt

Important

The activation secrets are unique to this cluster and cannot be reused with other clusters.
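If you script the remaining steps, a quick check that both secret files were downloaded and are not empty can catch a failed or partial download early. This sketch assumes the default file names listed above and a single download directory. check_activation_secrets is a hypothetical helper, not part of the product.

```shell
# Hypothetical helper: verify that both activation secret files for a cluster
# exist and are non-empty. Assumes the default file names shown above.
check_activation_secrets() {
  local dir="$1" cluster="$2" f status=0
  for f in "${cluster}-credentials.pem" "${cluster}-passphrase.txt"; do
    # -s is true only if the file exists and has a size greater than zero
    if [ ! -s "${dir}/${f}" ]; then
      echo "missing or empty: ${dir}/${f}" >&2
      status=1
    fi
  done
  return "$status"
}

# Example usage (edge-site-01 is a placeholder cluster name):
#   check_activation_secrets ~/Downloads edge-site-01
```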

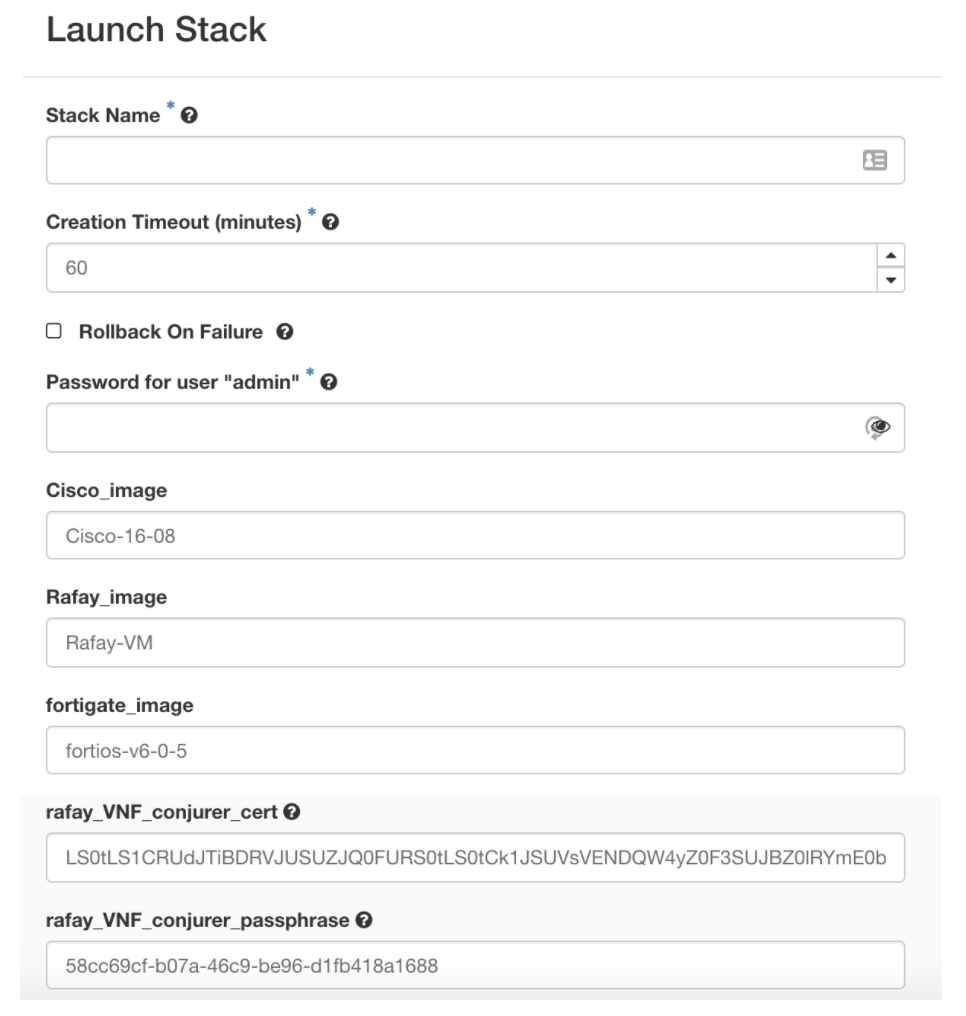

Step 3: Create Environment File

The custom environment file is used with the HEAT template to customize the cluster.

- Make a copy of the provided environment file “sample-parameters.yaml” and name it after the cluster you created in Step 1.

- Customize the two parameters in this file that are unique to the newly created cluster (i.e., rafay_VNF_conjurer_cert and rafay_VNF_conjurer_passphrase).

- The HEAT environment file expects the certificate content in Base64 format, so you need to encode the content of the “credentials.pem” file in Base64.

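On Windows (certutil wraps its output in -----BEGIN CERTIFICATE----- and -----END CERTIFICATE----- lines and splits it across several lines; remove those lines and the line breaks before copying the value)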

certutil -f -encode <Path to certificate you downloaded> <Filename to store encoded cert>

On Linux/macOS (tr removes the line breaks that GNU base64 inserts every 76 characters by default)

cat <Path to certificate you downloaded> | base64 | tr -d '\n'

- Copy the Base64-encoded content of “credentials.pem” into the “rafay_VNF_conjurer_cert” parameter in the cluster’s environment file.

- Copy the content of “passphrase.txt” into the “rafay_VNF_conjurer_passphrase” parameter in the cluster’s environment file.
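The encoding and copy/paste steps above can also be scripted. The sketch below is one possible approach, not a supported tool. It makes three assumptions: each parameter in sample-parameters.yaml sits on a single "name: value" line (as in the example that follows), the output file should be called "<cluster>.yaml", and the passphrase contains no characters that are special in sed replacement text. make_env_file is a hypothetical helper name.

```shell
# Hypothetical helper: copy sample-parameters.yaml to "<cluster>.yaml" and fill
# in the two cluster-specific activation parameters.
# Assumptions: each parameter is on a single "name: value" line, and the
# passphrase contains no '|', '&' or '\' characters (sed replacement metacharacters).
make_env_file() {
  local sample="$1" cluster="$2" pem="$3" passfile="$4" cert pass
  # Base64-encode the credentials file as a single line (strip wrapping newlines).
  cert="$(base64 < "$pem" | tr -d '\n')"
  # Read the passphrase and remove all whitespace, including the trailing newline.
  pass="$(tr -d '[:space:]' < "$passfile")"
  # Replace only the values of the two parameters; '|' is used as the sed
  # delimiter because Base64 text may contain '/'.
  sed -e "s|^\([[:space:]]*rafay_VNF_conjurer_cert:\).*|\1 ${cert}|" \
      -e "s|^\([[:space:]]*rafay_VNF_conjurer_passphrase:\).*|\1 ${pass}|" \
      "$sample" > "${cluster}.yaml"
}
```

For example, make_env_file sample-parameters.yaml edge-site-01 edge-site-01-credentials.pem edge-site-01-passphrase.txt would write edge-site-01.yaml to the current directory (edge-site-01 is a placeholder cluster name). Editing a copy of the sample with sed, rather than generating YAML from scratch, leaves the other parameters in the sample file unchanged.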

An illustrative example of a custom environment file is shown below. The two cluster-specific values are rafay_VNF_conjurer_cert and rafay_VNF_conjurer_passphrase.

parameters:
  Rafay_image: Rafay-VM
  rafay_VNF_wan_interface_cidr: 122.1.2.13/29
  rafay_VNF_default_gateway: 122.1.2.11
  rafay_VNF_nameservers: 8.8.8.8, 8.8.4.4
  rafay_VNF_conjurer_cert: LS0tLS1CRUdJTiBDRVJUSUZJQ...<additional text removed>
  rafay_VNF_conjurer_passphrase: 58cc69cf-b07a-46c9-be96-d1fb418a1688

Note

This step will likely be performed programmatically via an API-based integration with the Controller. If you copy and paste the values manually, make sure you do not accidentally include any whitespace.
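To catch stray whitespace or a truncated paste, you can decode the certificate value back and compare it with the downloaded file. This is a minimal sketch, assuming the value is on one unquoted line as in the example above. verify_env_file is a hypothetical helper name. The sketch uses GNU coreutils' base64 --decode; other base64 implementations may spell the decode option differently.

```shell
# Hypothetical helper: check that the rafay_VNF_conjurer_cert value in an
# environment file is whitespace-free and decodes back to the original
# credentials file. Assumes the value is on one line and is not quoted.
verify_env_file() {
  local env_file="$1" pem="$2" value
  # Print only the text after "rafay_VNF_conjurer_cert:" and its leading spaces.
  value="$(sed -n 's/^[[:space:]]*rafay_VNF_conjurer_cert:[[:space:]]*//p' "$env_file")"
  case "$value" in
    '')            echo "rafay_VNF_conjurer_cert not found or empty" >&2; return 1 ;;
    *[[:space:]]*) echo "rafay_VNF_conjurer_cert contains whitespace" >&2; return 1 ;;
  esac
  # Decode the value and compare it byte-for-byte with the downloaded file.
  if ! printf '%s' "$value" | base64 --decode | cmp -s - "$pem"; then
    echo "rafay_VNF_conjurer_cert does not match ${pem}" >&2
    return 1
  fi
}
```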

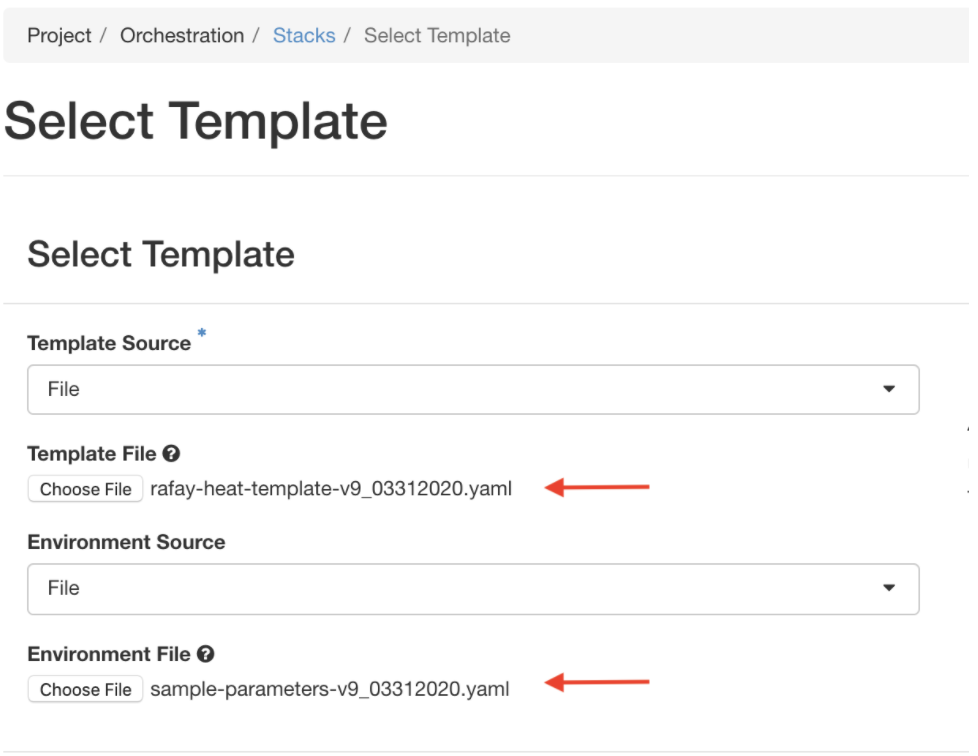

Step 4: Launch VM

Use the OpenStack Orchestration service (Heat) to launch the VM, providing the two HEAT files as input: the standard HEAT template and the customized environment file. An illustrative screenshot is shown below.

Launch the stack in OpenStack. Note that the stack references the activation secrets that were provided in the custom environment file from the previous step.

Note

It is recommended that you give the stack a name that is identical or similar to the cluster name from Step 1. This makes it easier for administrators to correlate resources across OpenStack and the Controller.
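From the command line, the same launch can be done with the OpenStack client's "openstack stack create" command, provided by the Heat client plugin (python-heatclient). The sketch below follows the naming recommendation above by using the cluster name as the stack name. The following are assumptions for illustration: the template file name, the "<cluster>.yaml" environment file name, the launch_stack function, and the PRINT_ONLY switch.

```shell
# Hypothetical helper: launch the HEAT stack for a cluster, naming the stack
# after the cluster and passing the cluster's environment file (assumed to be
# "<cluster>.yaml"). Set PRINT_ONLY=1 to print the command instead of running it.
launch_stack() {
  local template="$1" cluster="$2"
  # -t: standard HEAT template; -e: the cluster's environment file;
  # --wait: return only when the stack operation completes;
  # last argument: stack name, identical to the cluster name.
  local -a cmd=(openstack stack create -t "$template" -e "${cluster}.yaml" --wait "$cluster")
  if [ "${PRINT_ONLY:-0}" = 1 ]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, launch_stack rafay-heat-template.yaml edge-site-01 (both names are placeholders) creates a stack named edge-site-01, whose status can then be inspected with openstack stack show edge-site-01.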

Once the VM has been launched successfully, it will automatically attempt to connect and register with the Controller using the “Activation Secrets” programmed via the HEAT environment file.

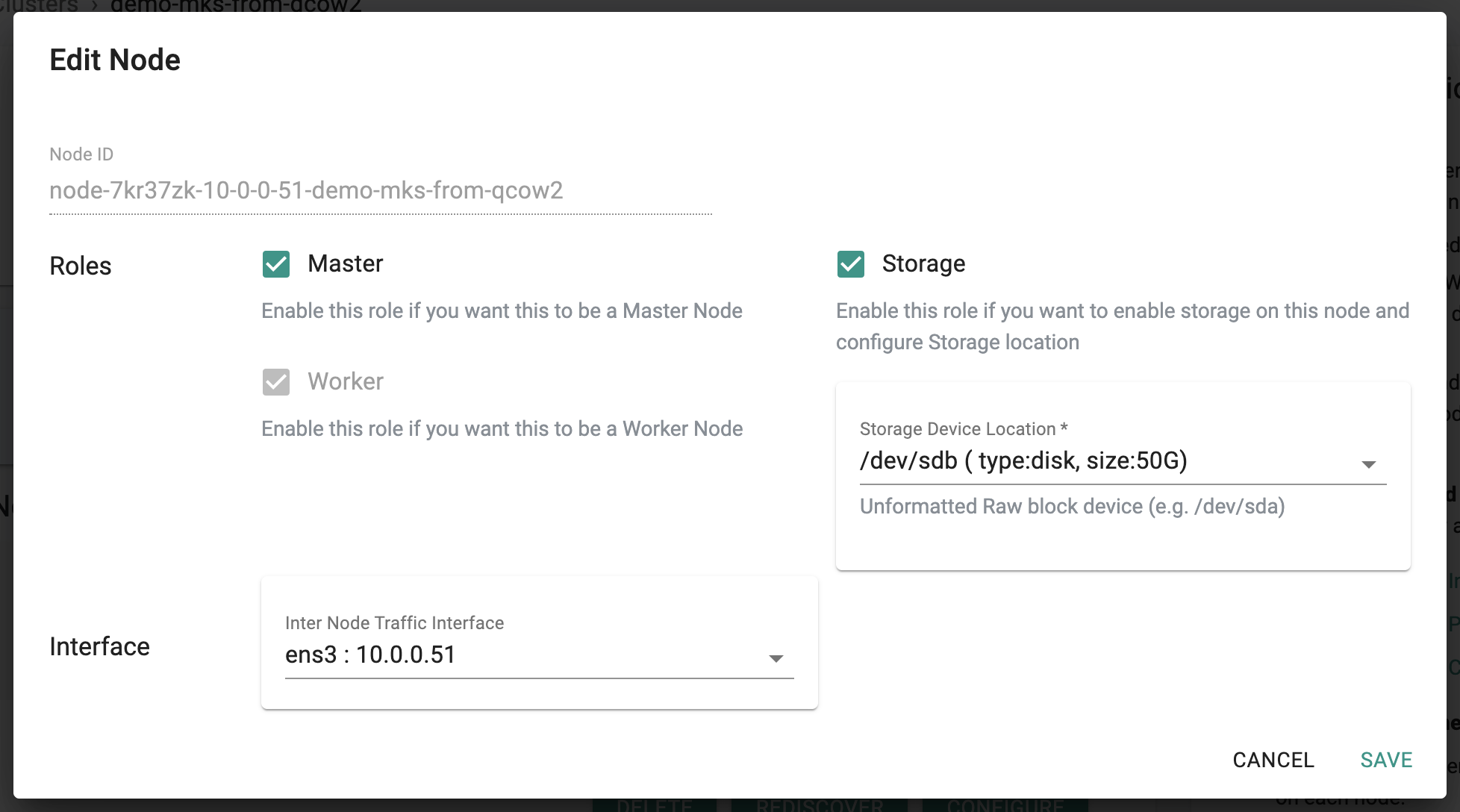

Step 5: Configure Cluster

Within a few seconds, the Web Console will show that the node has been discovered and, if automatic node approval is enabled, approved. Click “Configure” and follow the wizard.

Master Role

Enable the Master role. This configures the node to act as a Kubernetes master.

Storage

The controller auto-detects and displays the available volumes. Select the correct volume from the drop-down (in this example, “/dev/sdb” with a capacity of 50 GB).

Note

Storage is fully configurable via the provided HEAT templates.

#----Define Volumes-----
rafayVNFvol:
  type: OS::Cinder::Volume
  properties:
    size: 50

#----Define Volume Attachments -----
rafayVolAtt:
  type: OS::Cinder::VolumeAttachment
  properties:
    instance_uuid: { get_resource: rafayVNF }
    volume_id: { get_resource: rafayVNFvol }
    mountpoint: /dev/sdb

Interface

The controller auto-detects and displays the available interfaces. Select the correct interface from the drop-down (in this example, ens3, as configured in the HEAT template).

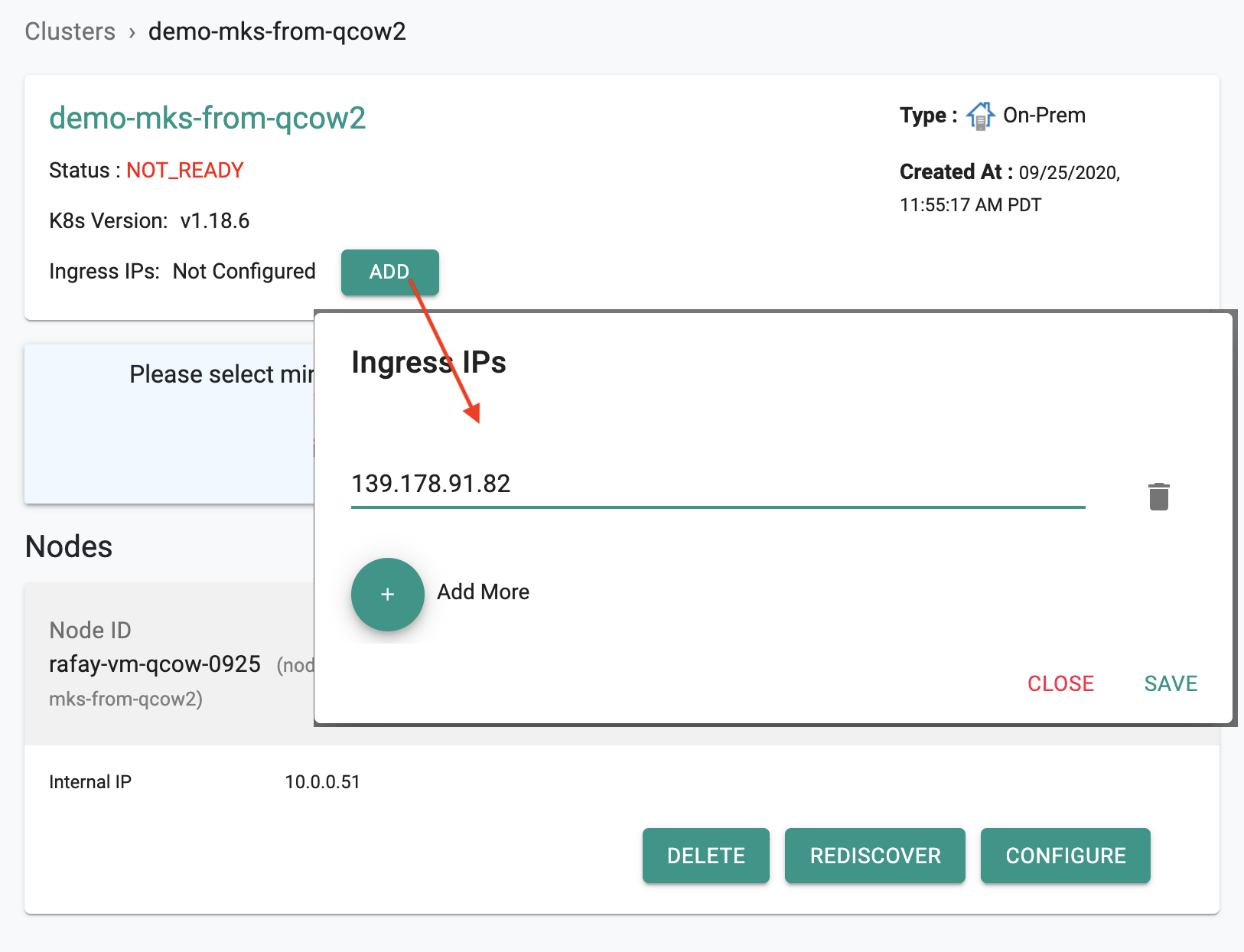

Ingress IP

In this step, you configure the IP address of the cluster node on which you expect to receive incoming traffic. Applications (workloads) deployed on the Controller-managed cluster can be accessed on this IP.

- Look up the IP addresses assigned to the VM and paste the appropriate one here; this information is also available directly in the interface drop-down. Make sure you select the IP address within “rafay_VNF_wan_interface_cidr”, which defaults to 122.1.2.13/29. This CIDR is specified in the environment file; look for the line “rafay_VNF_wan_interface_cidr: 122.1.2.13/29”.

parameters:
  Rafay_image: Rafay-VM
  rafay_VNF_wan_interface_cidr: 122.1.2.13/29
  rafay_VNF_default_gateway: 122.1.2.11
  rafay_VNF_nameservers: 8.8.8.8, 8.8.4.4
  rafay_VNF_conjurer_cert: LS0tLS1CRUdJTiBDRVJUSUZJQ...<additional text removed>
  rafay_VNF_conjurer_passphrase: 58cc69cf-b07a-46c9-be96-d1fb418a1688
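To double-check that the ingress IP you paste falls inside the WAN CIDR, you can do the prefix arithmetic directly. For the example value 122.1.2.13/29, the /29 prefix leaves 3 host bits, so the block spans 8 addresses, from 122.1.2.8 through 122.1.2.15. The sketch below implements a plain IPv4 prefix-match check in bash. ip_in_cidr, cidr_range, and the other helper names are hypothetical.

```shell
# Hypothetical helpers for IPv4 CIDR arithmetic (no input validation;
# octets with leading zeros are not supported, since bash reads them as octal).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# Convert a 32-bit integer back to dotted-quad notation.
int_to_ip() {
  local n="$1"
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Netmask for a prefix length, e.g. 29 -> 0xFFFFFFF8 (uses 64-bit bash arithmetic).
prefix_mask() {
  echo "$(( (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))"
}

# Succeeds if the IP lies inside the CIDR block (e.g. 122.1.2.13/29).
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}" mask
  mask="$(prefix_mask "$bits")"
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Print the first and last address of the CIDR block.
cidr_range() {
  local net="${1%/*}" bits="${1#*/}" mask base
  mask="$(prefix_mask "$bits")"
  base=$(( $(ip_to_int "$net") & mask ))
  echo "$(int_to_ip "$base") $(int_to_ip $(( base | (~mask & 0xFFFFFFFF) )))"
}

# Example usage
cidr_range 122.1.2.13/29    # prints: 122.1.2.8 122.1.2.15
ip_in_cidr 122.1.2.13 122.1.2.13/29 && echo "122.1.2.13 is inside the WAN CIDR"
```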

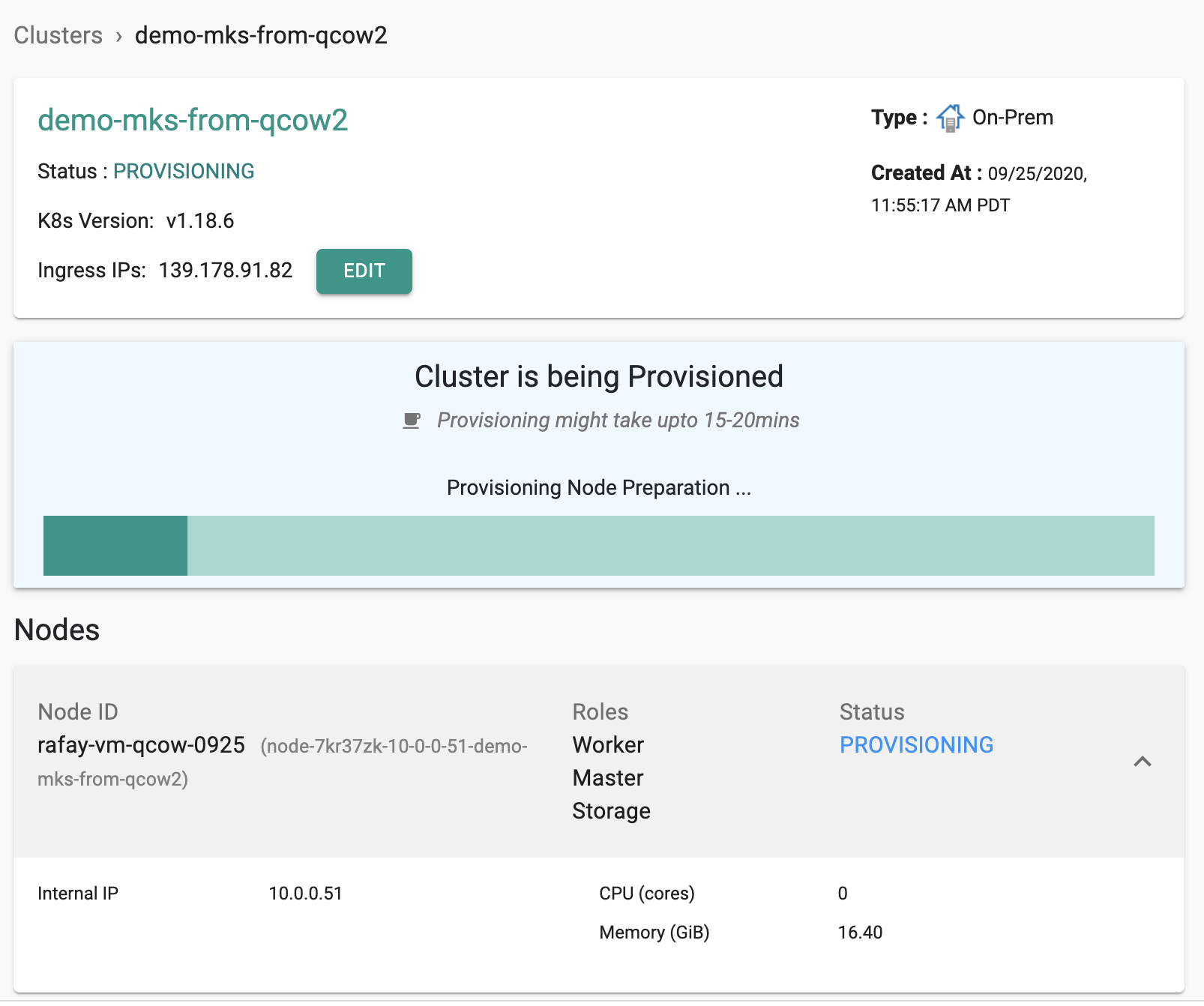

Step 6: Provision Cluster

Once the node is configured, click “Provision” and wait for the provisioning process to complete.

Depending on the resources allocated to the VM, provisioning can take about 5 minutes. Progress and status updates are displayed as the software components are configured in the VM. An illustrative screenshot is shown below.

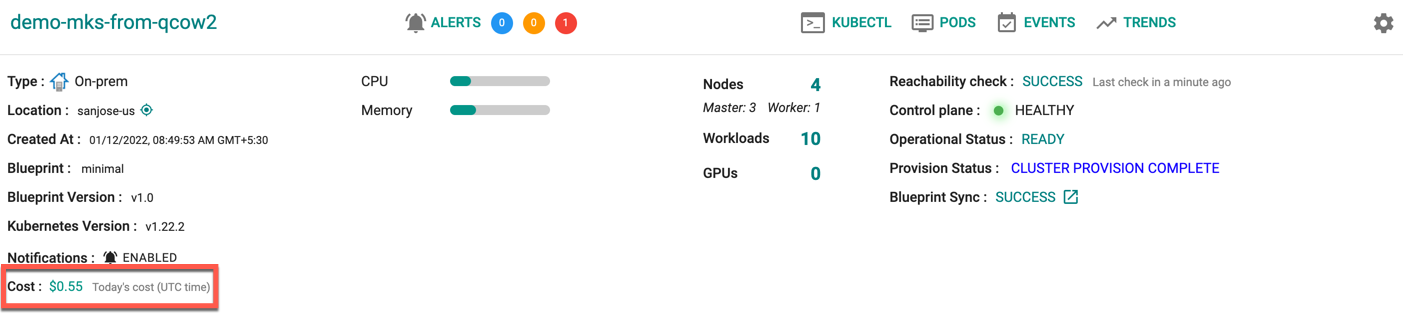

Step 7: Validate Provisioning

Once the cluster is provisioned, verify the following:

Control Plane

This should show as green and report a status of HEALTHY, which means that the Kubernetes cluster running in the VM on the remote uCPE is healthy.

Reachability Check

This should report a status of SUCCESS, and the “Last Check in Time” should be within the last minute. This means that a heartbeat has been established between the remote Kubernetes cluster and the Controller.

To view cost details for this cluster, click the cost link. This opens the Cost Explorer page, where you can view detailed cost information for this specific cluster.

An illustrative screenshot of a successfully provisioned cluster in the Web Console is shown below.