Examples

Infra GitOps using RCTL

The following examples show how to update and scale an existing EKS cluster through Infra GitOps using RCTL.

Below is a sample YAML file with the auto-create CNI option and Cluster Access Settings.

Cluster Access Settings

kind: Cluster
metadata:
  name: demo-eks1
  project: defaultproject
spec:
  blueprint: default
  blueprintversion: 1.13.0
  cloudprovider: gk-eks
  cniparams:
    customCniCidr: 100.0.0.0/24
  cniprovider: aws-cni
  proxyconfig: {}
  type: eks
---
accessConfig:
  accessEntries:
  - accessPolicies:
    - accessScope:
        type: cluster
      policyARN: arn:aws:eks::aws:cluster-access-policy/AmazonEKSEditPolicy
    principalARN: arn:aws:iam::679196758854:user/user1@rafay.co
    type: STANDARD
  - accessPolicies:
    - accessScope:
        type: cluster
      policyARN: arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy
    principalARN: arn:aws:iam::679196758854:role/user2-accessConfig
    type: STANDARD
  authenticationMode: API
addons:
- name: coredns
  version: latest
- name: kube-proxy
  version: latest
- name: vpc-cni
  version: latest
- name: aws-ebs-csi-driver
  version: latest
apiVersion: rafay.io/v1alpha5
kind: ClusterConfig
metadata:
  name: demo-eks1
  region: us-west-1
  version: "1.19"
nodeGroups:
- amiFamily: AmazonLinux2
  desiredCapacity: 2
  iam:
    withAddonPolicies:
      autoScaler: true
      imageBuilder: true
  instanceType: t3.xlarge
  maxSize: 2
  minSize: 1
  name: self-mn
  volumeSize: 80
  volumeType: gp3
- amiFamily: AmazonLinux2
  desiredCapacity: 1
  iam:
    withAddonPolicies:
      autoScaler: true
      imageBuilder: true
  instanceType: t3.xlarge
  maxSize: 2
  minSize: 1
  name: ng-72199bd8
  volumeSize: 80
  volumeType: gp3
vpc:
  cidr: 192.168.0.0/16
  clusterEndpoints:
    privateAccess: true
    publicAccess: false
  nat:
    gateway: Single
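Before applying a spec like the one above, it can help to sanity-check the node group sizes. The sketch below is only an illustration: the function name `check_nodegroup_sizes` is hypothetical, the spec is assumed to be already loaded into plain Python dictionaries, and the rule that `minSize <= desiredCapacity <= maxSize` is an assumption about how node group sizes are validated, not something this page states.

```python
def check_nodegroup_sizes(nodegroups):
    """Return one message per node group whose sizes are out of order.

    Assumed rule (not stated on this page): minSize <= desiredCapacity <= maxSize.
    """
    problems = []
    for ng in nodegroups:
        low, want, high = ng["minSize"], ng["desiredCapacity"], ng["maxSize"]
        if not low <= want <= high:
            problems.append(
                f"{ng['name']}: minSize={low}, desiredCapacity={want}, maxSize={high}"
            )
    return problems


# Size values copied from the two node groups in the sample spec.
sample_nodegroups = [
    {"name": "self-mn", "minSize": 1, "desiredCapacity": 2, "maxSize": 2},
    {"name": "ng-72199bd8", "minSize": 1, "desiredCapacity": 1, "maxSize": 2},
]

print(check_nodegroup_sizes(sample_nodegroups))  # []
```

An empty list means every node group passed the check.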

For manual CNI configuration, provide the following details in the cniparams section of the YAML file:

cniparams:
  customCniCrdSpec:
    us-west-2a:
    - securityGroups:
      - sg-05a75c401bd421a7f
      - sg-07a65c401bd543a7f
      subnet: subnet-041166d6499651fdc
    us-west-2b:
    - securityGroups:
      - sg-05a75c765bd421a9f
      - sg-05a75c323de421a9e
      subnet: subnet-08dfb5d33b80d2b17

Update Cloud Provider

After changing the cloudprovider value in the YAML file, apply it with the command ./rctl apply -f demo-eks1.yaml

Expected output (with a task id):

Cluster: demo-eks1
{
  "taskset_id": "d2w0em8",
  "operations": [
    {
      "operation": "CloudProviderUpdation",
      "resource_name": "demo-eks1",
      "status": "PROVISION_TASK_STATUS_PENDING"
    }
  ],
  "comments": "The status of the operations can be fetched using taskset_id",
  "status": "PROVISION_TASKSET_STATUS_PENDING"
}
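When the apply step runs from a script, the task id has to be pulled out of this output. The following is a minimal sketch, assuming the output always has the shape shown above (a "Cluster: ..." line followed by one JSON object); `parse_apply_output` is a hypothetical helper name, not part of RCTL.

```python
import json


def parse_apply_output(text: str) -> dict:
    """Return the JSON object embedded in the apply output.

    The sample output starts with a "Cluster: <name>" line, so everything
    before the first "{" is skipped before decoding.
    """
    start = text.find("{")
    if start == -1:
        raise ValueError("no JSON object found in output")
    # raw_decode stops at the end of the object and ignores trailing text.
    payload, _ = json.JSONDecoder().raw_decode(text[start:])
    return payload


sample = """Cluster: demo-eks1
{
  "taskset_id": "d2w0em8",
  "operations": [
    {
      "operation": "CloudProviderUpdation",
      "resource_name": "demo-eks1",
      "status": "PROVISION_TASK_STATUS_PENDING"
    }
  ],
  "comments": "The status of the operations can be fetched using taskset_id",
  "status": "PROVISION_TASKSET_STATUS_PENDING"
}
"""

print(parse_apply_output(sample)["taskset_id"])  # d2w0em8
```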

To check the status of the cloud provider apply operation, run the following command with the generated task id:

./rctl status apply d2w0em8

Expected Output

{
  "taskset_id": "d2w0em8",
  "operations": [
    {
      "operation": "CloudProviderUpdation",
      "resource_name": "demo-eks1",
      "status": "PROVISION_TASK_STATUS_SUCCESS"
    }
  ],
  "comments": "Configuration is applied to the cluster successfully",
  "status": "PROVISION_TASKSET_STATUS_COMPLETE"
}

Update Blueprint

After changing the blueprint or blueprintversion value in the YAML file, apply it with the command ./rctl apply -f demo-eks1.yaml

Expected output (with a task id):

Cluster: demo-eks1

{
  "taskset_id": "dk34v2n",
  "operations": [
    {
      "operation": "BlueprintUpdation",
      "resource_name": "demo-eks1",
      "status": "PROVISION_TASK_STATUS_INPROGRESS"
    }
  ],
  "comments": "Configuration is being applied to the cluster",
  "status": "PROVISION_TASKSET_STATUS_INPROGRESS"
}

To check the status of the blueprint apply operation, run the following command with the generated task id:

./rctl status apply dk34v2n

Expected Output

{
  "taskset_id": "dk34v2n",
  "operations": [
    {
      "operation": "BlueprintUpdation",
      "resource_name": "demo-eks1",
      "status": "PROVISION_TASK_STATUS_SUCCESS"
    }
  ],
  "comments": "Configuration is applied to the cluster successfully",
  "status": "PROVISION_TASKSET_STATUS_COMPLETE"
}

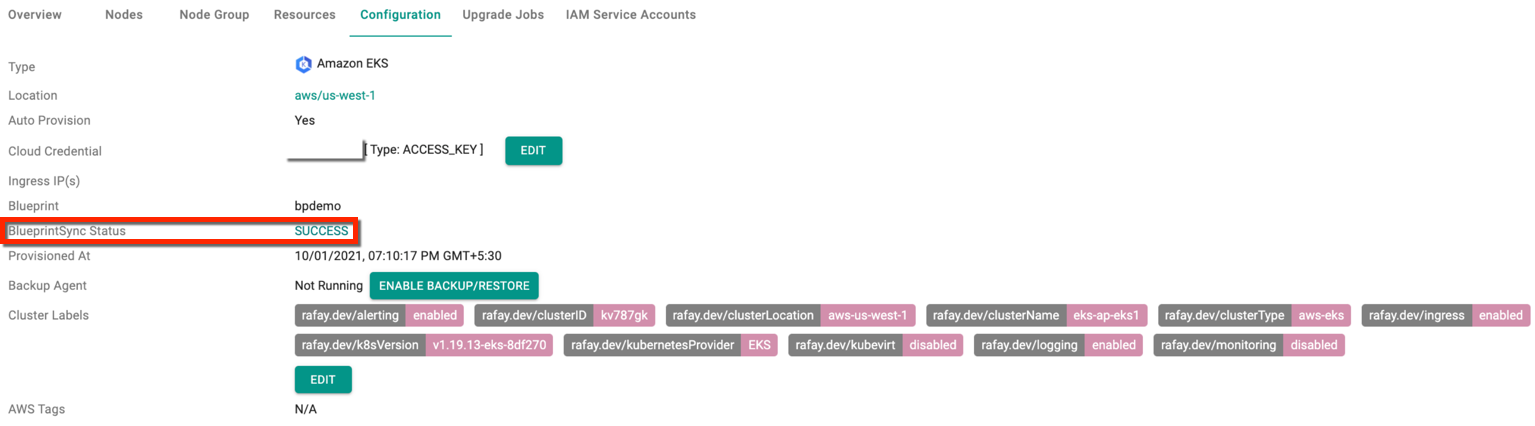

Changes in Controller

After a successful blueprint apply operation, the changes are reflected in the Controller.
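Waiting for a taskset to finish, as in the status checks above, can be scripted. The sketch below polls `./rctl status apply <taskset_id>` until the taskset leaves the two in-flight states that appear in the sample outputs. Several things here are assumptions rather than documented behaviour: that the status command prints a single JSON object, that any status other than PENDING or INPROGRESS is final, and the helper names `fetch_status` and `wait_for_taskset`, along with the polling interval and attempt count.

```python
import json
import subprocess
import time

# Only these two in-flight values appear in the sample outputs; any other
# taskset status is treated as final (an assumption).
IN_FLIGHT = {
    "PROVISION_TASKSET_STATUS_PENDING",
    "PROVISION_TASKSET_STATUS_INPROGRESS",
}


def fetch_status(taskset_id: str) -> dict:
    """Run `./rctl status apply <id>` and decode its output as JSON."""
    result = subprocess.run(
        ["./rctl", "status", "apply", taskset_id],
        check=True,
        capture_output=True,
        text=True,
    )
    return json.loads(result.stdout)


def wait_for_taskset(taskset_id, fetch=fetch_status, interval=30.0, attempts=40):
    """Poll until the taskset leaves the in-flight states or attempts run out."""
    for _ in range(attempts):
        payload = fetch(taskset_id)
        if payload["status"] not in IN_FLIGHT:
            return payload
        time.sleep(interval)
    raise TimeoutError(
        f"taskset {taskset_id} still in flight after {attempts} checks"
    )
```

A call such as `wait_for_taskset("dk34v2n")` returns the last status payload, so the caller can inspect the per-operation statuses. The `fetch` parameter exists so the loop can be exercised without a live cluster.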

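Change Cluster Endpoint

After changing the privateAccess and publicAccess values under clusterEndpoints in the YAML file, apply it with the command ./rctl apply -f demo-eks1.yaml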

Expected output (with a task id):

Cluster: demo-eks1

{
  "taskset_id": "j2q48k9",
  "operations": [
    {
      "operation": "UpdatingClusterEndpoints",
      "resource_name": "demo-eks1",
      "status": "PROVISION_TASK_STATUS_PENDING"
    }
  ],
  "comments": "The status of the operations can be fetched using taskset_id",
  "status": "PROVISION_TASKSET_STATUS_PENDING"
}

To check the status of the cluster endpoint apply operation, run the following command with the generated task id:

./rctl status apply j2q48k9

Expected Output

{
  "taskset_id": "j2q48k9",
  "operations": [
    {
      "operation": "ClusterEndpointsUpdation",
      "resource_name": "demo-eks1",
      "status": "PROVISION_TASK_STATUS_SUCCESS"
    }
  ],
  "comments": "Configuration is applied to the cluster successfully",
  "status": "PROVISION_TASKSET_STATUS_COMPLETE"
}

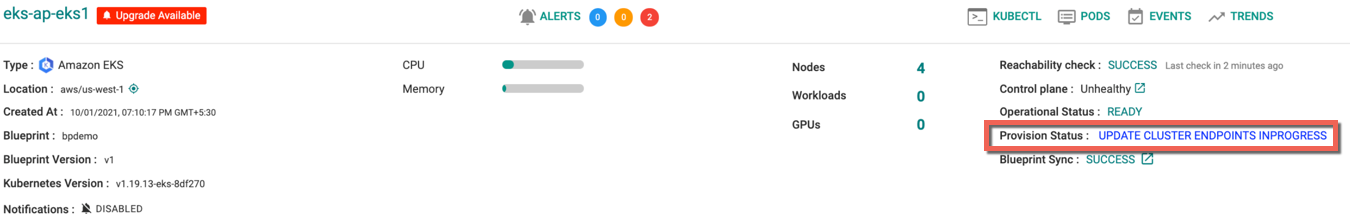

Changes in Controller

After a successful cluster endpoint apply operation, the changes are reflected in the Controller.

Cluster and Node Group Labels and Tags

When provisioning a cluster, users can include labels and tags via RCTL using the cluster configuration YAML file. An example of the cluster spec YAML file with a managed node group is shown below.

To add a Tag to every resource in a cluster (like nodes and service accounts), add the Tag to the metadata section.

If duplicate tags are used in the metadata and node sections of the YAML file, the node tag will be applied to the nodes. For example, if x: y is in the metadata section and x: z is in the node section, the node tag will be x: z.
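The precedence rule described above behaves like a dictionary merge in which node-level tags override metadata-level tags with the same key. A minimal sketch follows; `effective_node_tags` is a hypothetical name used only for illustration, and the extra `team` and `env` tags are made up for the second example.

```python
def effective_node_tags(metadata_tags, node_tags):
    """Combine cluster-level and node-level tags; node-level values win."""
    merged = dict(metadata_tags)  # start from the metadata section tags
    merged.update(node_tags)      # node section overrides duplicate keys
    return merged


# The case from the text: x: y in metadata, x: z in the node section.
print(effective_node_tags({"x": "y"}, {"x": "z"}))  # {'x': 'z'}

# Tags with different keys are kept from both sections.
print(effective_node_tags({"x": "y", "team": "qa"}, {"env": "dev"}))
```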

Note

You can create tags for self-managed node groups (nodeGroups) when provisioning a cluster, but you cannot update them after the cluster is deployed. The same restriction applies to cluster-level tags (tags in the metadata section), which also cannot be updated post deployment.

apiVersion: rafay.io/v1alpha5
kind: ClusterConfig
metadata:
  name: demo-eks1
  region: us-west-1
  version: "1.20"
  tags:
    x: y
managedNodeGroups:
- amiFamily: AmazonLinux2
  name: managed-ng-1
  desiredCapacity: 1
  maxSize: 2
  minSize: 1
  instanceType: t3.xlarge
  labels:
    role: control-plane
  tags:
    nodegroup-role: control-plane

After cluster provisioning, users can update managed node group labels and tags via RCTL using the cluster configuration YAML file. An illustrative example of the updated cluster spec, in which the node group's label and tag values change from control-plane to worker, is shown below.


apiVersion: rafay.io/v1alpha5
kind: ClusterConfig
metadata:
  name: demo-eks1
  region: us-west-1
  version: "1.20"
  tags:
    x: y
managedNodeGroups:
- amiFamily: AmazonLinux2
  name: managed-ng-1
  desiredCapacity: 1
  maxSize: 2
  minSize: 1
  instanceType: t3.xlarge
  labels:
    role: worker
  tags:
    nodegroup-role: worker
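To see which managed node groups would be affected before applying an updated spec, the old and new specs can be compared. This is a rough sketch under stated assumptions: both specs are already loaded as Python dictionaries, `nodegroup_changes` is a hypothetical helper, and it only reports differences in the `labels` and `tags` keys without predicting which operations RCTL will actually run.

```python
def nodegroup_changes(before, after):
    """Map node group name -> list of keys ('labels', 'tags') that differ."""
    old = {ng["name"]: ng for ng in before.get("managedNodeGroups", [])}
    changes = {}
    for ng in after.get("managedNodeGroups", []):
        previous = old.get(ng["name"])
        if previous is None:
            continue  # a new node group is not a label or tag update
        changed = [
            key for key in ("labels", "tags")
            if previous.get(key, {}) != ng.get(key, {})
        ]
        if changed:
            changes[ng["name"]] = changed
    return changes


# Label and tag values taken from the provisioning and updated specs.
before = {"managedNodeGroups": [{
    "name": "managed-ng-1",
    "labels": {"role": "control-plane"},
    "tags": {"nodegroup-role": "control-plane"},
}]}
after = {"managedNodeGroups": [{
    "name": "managed-ng-1",
    "labels": {"role": "worker"},
    "tags": {"nodegroup-role": "worker"},
}]}

print(nodegroup_changes(before, after))  # {'managed-ng-1': ['labels', 'tags']}
```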

Update Node group Labels

Modify the managed node group labels as required, then apply the changes with the following command:

./rctl apply -f demo-eks1.yaml

Example

./rctl apply -f demo-eks1.yaml

Output

Cluster: demo-eks1
{
  "taskset_id": "lk5xw2e",
  "operations": [
    {
      "operation": "NodegroupUpdateLabels",
      "resource_name": "ng-b0ac64e3",
      "status": "PROVISION_TASK_STATUS_PENDING"
    }
  ],
  "comments": "The status of the operations can be fetched using taskset_id",
  "status": "PROVISION_TASKSET_STATUS_PENDING"
}

Update Node group Tags

Modify the managed node group tags as required, then apply the changes with the following command:

./rctl apply -f demo-eks1.yaml

Example

./rctl apply -f demo-eks1.yaml

Output

Cluster: demo-eks1
{
  "taskset_id": "pz24nmy",
  "operations": [
    {
      "operation": "NodegroupUpdateTags",
      "resource_name": "ng-b0ac64e3",
      "status": "PROVISION_TASK_STATUS_PENDING"
    }
  ],
  "comments": "The status of the operations can be fetched using taskset_id",
  "status": "PROVISION_TASKSET_STATUS_PENDING"
}

Upgrade Nodegroup K8s Version

After cluster provisioning, users can upgrade the K8s version of a specific nodegroup via RCTL using the cluster configuration YAML file.

An illustrative example of the cluster spec YAML file is shown below. The version parameter under the nodegroup (version: "1.22") sets the nodegroup's K8s version.

apiVersion: rafay.io/v1alpha5
kind: ClusterConfig
managedNodeGroups:
- amiFamily: AmazonLinux2
  desiredCapacity: 1
  iam:
    withAddonPolicies:
      autoScaler: true
  instanceType: t3.large
  maxSize: 1
  minSize: 1
  name: ng-d38d7716
  version: "1.22"
  volumeSize: 80
  volumeType: gp3
metadata:
  name: demo_ngupgrade
  region: us-west-2
  version: "1.22"
vpc:
  cidr: 192.168.0.0/16
  clusterEndpoints:
    privateAccess: true
    publicAccess: false
  nat:
    gateway: Single

Use the below command to apply the changes

./rctl apply -f <file_name.yaml>

Upgrade Custom AMI

Users can also upgrade custom AMIs via RCTL using the cluster configuration YAML file.

An illustrative example of the cluster spec YAML file is shown below. The ami parameter under the nodegroup identifies the custom AMI.

apiVersion: rafay.io/v1alpha5
kind: ClusterConfig
managedNodeGroups:
- ami: ami-0123f8d52a516f19d
  desiredCapacity: 1
  iam:
    withAddonPolicies:
      autoScaler: true
  instanceType: t3.large
  maxSize: 1
  minSize: 1
  name: ng-590b8506
  volumeSize: 80
  volumeType: gp3
metadata:
  name: demo_amiupgrade
  region: us-west-2
  version: "1.22"
vpc:
  cidr: 192.168.0.0/16
  clusterEndpoints:
    privateAccess: true
    publicAccess: false
  nat:
    gateway: Single

Use the below command to apply the changes

./rctl apply -f <file_name.yaml>

Important

If a nodegroup's version drifts higher than the control plane's version, control plane upgrades are blocked. To recover, roll the nodegroup back to the same version as the control plane (for example, by using the older AMI), and then upgrade the control plane.
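A pre-apply check for the drift described above can be sketched as follows. It assumes the spec is already loaded as a Python dictionary, that versions are written as dotted numbers such as "1.22", and that nodegroups without an explicit `version` key follow the control plane; `nodegroups_ahead` is a hypothetical helper name.

```python
def parse_version(text):
    """Turn "1.22" into (1, 22) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def nodegroups_ahead(config):
    """Return names of managed nodegroups newer than the control plane."""
    control_plane = parse_version(config["metadata"]["version"])
    ahead = []
    for ng in config.get("managedNodeGroups", []):
        version = ng.get("version")
        # Nodegroups without a version key are assumed to match the cluster.
        if version is not None and parse_version(version) > control_plane:
            ahead.append(ng["name"])
    return ahead


matching = {
    "metadata": {"version": "1.22"},
    "managedNodeGroups": [{"name": "ng-d38d7716", "version": "1.22"}],
}
drifted = {
    "metadata": {"version": "1.22"},
    "managedNodeGroups": [{"name": "ng-d38d7716", "version": "1.23"}],
}

print(nodegroups_ahead(matching))  # []
print(nodegroups_ahead(drifted))   # ['ng-d38d7716']
```

Comparing tuples rather than strings avoids ordering mistakes such as treating "1.9" as newer than "1.10".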

Pod Identity Association for Managed Add-ons

The following is an example of an EKS cluster configuration specification where Pod Identity Association is added to the managed add-on "vpc-cni".

kind: Cluster
metadata:
  name: demo-podidentity
  project: defaultproject
spec:
  blueprint: minimal
  cloudprovider: democp
  cniprovider: aws-cni
  type: eks
---
addons:
- name: eks-pod-identity-agent
  version: latest
- name: vpc-cni
  podIdentityAssociations:
  - namespace: kube-system
    permissionPolicyARNs:
    - arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy
    serviceAccountName: aws-node
  version: latest
- name: coredns
  version: latest
- name: kube-proxy
  version: latest
- name: aws-ebs-csi-driver
  version: latest
addonsConfig:
  autoApplyPodIdentityAssociations: true
apiVersion: rafay.io/v1alpha5
kind: ClusterConfig
managedNodeGroups:
- amiFamily: AmazonLinux2
  desiredCapacity: 1
  disableIMDSv1: false
  disablePodIMDS: false
  ebsOptimized: false
  efaEnabled: false
  enableDetailedMonitoring: false
  instanceType: t3.xlarge
  maxPodsPerNode: 50
  maxSize: 2
  minSize: 1
  name: tfmng1
  privateNetworking: true
  spot: false
  version: "1.29"
  volumeEncrypted: false
  volumeIOPS: 3000
  volumeSize: 80
  volumeThroughput: 125
  volumeType: gp3
metadata:
  name: demo-podidentity
  region: us-west-2
  tags:
    email: user1@demo.co
    env: qa
  version: "1.29"
vpc:
  autoAllocateIPv6: false
  cidr: 192.168.0.0/16
  clusterEndpoints:
    privateAccess: true
    publicAccess: false
  nat:
    gateway: Single

When the autoApplyPodIdentityAssociations flag is set to true, as shown below, Pod Identity associations are applied automatically to add-ons that have an IRSA service account, so podIdentityAssociations does not need to be listed for each add-on.

addons:
- name: eks-pod-identity-agent
  version: latest
- name: vpc-cni
  version: latest
- name: coredns
  version: latest
- name: kube-proxy
  version: latest
- name: aws-ebs-csi-driver
  version: latest
addonsConfig:
  autoApplyPodIdentityAssociations: true
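A quick way to review how a spec uses Pod Identity is to summarise its add-on section. The sketch below assumes the spec is already loaded as a Python dictionary; `pod_identity_summary` is a hypothetical helper. It also reports whether the eks-pod-identity-agent add-on is listed, since EKS Pod Identity depends on that agent running on the cluster.

```python
def pod_identity_summary(config):
    """Summarise Pod Identity related settings in a cluster spec."""
    addons = config.get("addons", [])
    names = {addon["name"] for addon in addons}
    explicit = [
        addon["name"] for addon in addons if addon.get("podIdentityAssociations")
    ]
    auto_apply = config.get("addonsConfig", {}).get(
        "autoApplyPodIdentityAssociations", False
    )
    return {
        "agent_present": "eks-pod-identity-agent" in names,
        "explicit_associations": explicit,
        "auto_apply": auto_apply,
    }


# Add-on section modelled on the full example: vpc-cni carries an explicit
# association and the auto-apply flag is enabled.
spec = {
    "addons": [
        {"name": "eks-pod-identity-agent", "version": "latest"},
        {
            "name": "vpc-cni",
            "version": "latest",
            "podIdentityAssociations": [{
                "namespace": "kube-system",
                "serviceAccountName": "aws-node",
                "permissionPolicyARNs": [
                    "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"
                ],
            }],
        },
        {"name": "coredns", "version": "latest"},
    ],
    "addonsConfig": {"autoApplyPodIdentityAssociations": True},
}

print(pod_identity_summary(spec))
```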

Get Node Group Count

To list each node group in a cluster along with its current node count, use the following command:

./rctl get cluster <cluster-name> -o json -p <project name> |jq -S '.nodegroups[] | .name, .nodes '

Example with output:

./rctl get cluster demo-unified-eks -o json -p unified |jq -S '.nodegroups[] | .name, .nodes '

"ng-c3e5daab"

3
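The same information can be extracted without jq. The sketch below mirrors the jq filter above and relies on the same two assumptions it does: the JSON has a top-level `nodegroups` array, and each entry has `name` and `nodes` fields. The sample string is a trimmed, hypothetical stand-in for the real command output, and `nodegroup_counts` is an illustrative helper name.

```python
import json


def nodegroup_counts(cluster_json: str) -> dict:
    """Return {node group name: current node count} from the cluster JSON."""
    cluster = json.loads(cluster_json)
    return {ng["name"]: ng["nodes"] for ng in cluster.get("nodegroups", [])}


# Trimmed stand-in for the output of `./rctl get cluster ... -o json`.
sample_cluster_json = '{"nodegroups": [{"name": "ng-c3e5daab", "nodes": 3}]}'

print(nodegroup_counts(sample_cluster_json))  # {'ng-c3e5daab': 3}
```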

Scale Node Group

Use the following command to scale a node group in a cluster. The --desired-nodes flag sets the target node count (1 in this example).

./rctl scale ng <node-group name> <cluster-name> --desired-nodes 1 -p <project name>
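When scaling is driven from a script, building the command as an argument list avoids quoting problems with names. This is a minimal sketch that uses only the arguments shown in the command above; `scale_command` is a hypothetical helper, the rejection of negative counts is an added assumption, and the cluster, project, and node group names are reused from the node count example.

```python
import shlex


def scale_command(nodegroup, cluster, desired_nodes, project):
    """Build the argument list for the scale command shown above."""
    if desired_nodes < 0:
        raise ValueError("desired_nodes must not be negative")
    return [
        "./rctl", "scale", "ng", nodegroup, cluster,
        "--desired-nodes", str(desired_nodes),
        "-p", project,
    ]


args = scale_command("ng-c3e5daab", "demo-unified-eks", 1, "unified")
print(shlex.join(args))
# ./rctl scale ng ng-c3e5daab demo-unified-eks --desired-nodes 1 -p unified
```

The resulting list can be passed directly to `subprocess.run(args, check=True)`.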