CLI

For purposes of automation, it is strongly recommended that users create "version controlled" declarative cluster specification files to provision and manage the lifecycle of Kubernetes clusters.

Important

Users need only a single command (rctl apply -f cluster_spec.yaml) for both provisioning and ongoing lifecycle operations. The controller automatically determines the required changes and maps them to the associated actions (for example, adding or removing nodes, upgrading Kubernetes, or updating the blueprint).

Prerequisites

Before provisioning a cluster using rctl, it is recommended to run a preflight check to validate the cluster environment. The preflight check ensures that the necessary configurations and dependencies are in place before provisioning proceeds.

To run the Conjurer prechecks explicitly before provisioning, pass the --mks-prechecks flag to the rctl command:

./rctl apply -f <cluster_filename.yaml> --mks-prechecks

Below is an example output when running the precheck command:

```text
Running Preflight-Check command on node: demo-mks-node-5 (129.146.83.94)
Ubuntu detected. Checking and installing bzip2 if necessary...
[+] Performing pre-tests
[+] Operating System check
[+] CPU check
[+] Memory check
[+] Internet connectivity check
[+] Connectivity check to rafay registry
[+] DNS Lookup to the controller
[+] Connectivity check to the Controller
[+] Multiple default routes check
[+] Time Sync check
[+] Storage check
Detected device: /dev/loop0, mountpoint: /snap/core18/2829, type: loop, size: 55.7M, fstype: null
Detected device: /dev/loop1, mountpoint: /snap/oracle-cloud-agent/72, type: loop, size: 77.3M, fstype: null
Detected device: /dev/loop2, mountpoint: /snap/snapd/21759, type: loop, size: 38.8M, fstype: null
Detected device: /dev/sda, mountpoint: null, type: disk, size: 46.6G, fstype: null
Detected device: /dev/sda1, mountpoint: /, type: part, size: 45.6G, fstype: ext4
Detected device: /dev/sda14, mountpoint: null, type: part, size: 4M, fstype: null
Detected device: /dev/sda15, mountpoint: /boot/efi, type: part, size: 106M, fstype: vfat
Detected device: /dev/sda16, mountpoint: /boot, type: part, size: 913M, fstype: ext4
Detected device: /dev/sdb, mountpoint: null, type: disk, size: 150G, fstype: null
Potential storage device: /dev/sdb
[+] Hostname underscore check
[+] DNS port check
[+] Nameserver Rotate option check for /etc/resolv.conf
[+] Checking for Warnings
[+] Checking for Fatal errors
[+] Checking for hard failures
-------------------------------------
Preflight-Checks ran successfully on 1 node
demo-mks-node-5 (129.146.83.94)
-------------------------------------
```

For more details on preflight checks, refer to Preflight Checks for Bare Metal and VM Clusters.

Create Cluster

Declarative

You can create an upstream Kubernetes cluster from a version-controlled cluster spec that you manage in a Git repository. This lets you build automation for reproducible infrastructure.

./rctl apply -f <cluster file name.yaml>

An illustrative example of the split cluster spec YAML file for MKS, including kubelet arguments, is shown below:

```yaml
apiVersion: infra.k8smgmt.io/v3
kind: Cluster
metadata:
  name: test-mks
  project: defaultproject
  labels:
    check1: value1
    check2: value2
spec:
  blueprint:
    name: default
    version: latest
  config:
    autoApproveNodes: true
    dedicatedMastersEnabled: false
    highAvailability: false
    installerTtl: 365
    platformVersion: v1.0.0
    kubeletExtraArgs:
      max-pods: "730"
    kubernetesVersion: v1.25.2
    location: sanjose-us
    network:
      cni:
        name: Calico
        version: 3.19.1
      podSubnet: 10.244.0.0/16
      serviceSubnet: 10.96.0.0/12
    nodes:
    - arch: amd64
      hostname: ip-172-31-61-40
      operatingSystem: Ubuntu20.04
      privateip: 172.31.61.40
      kubelet_args:
        max-pods: 6
        <key>: <value>
      roles:
      - Master
      - Worker
      - Storage
      ssh:
        ipAddress: 35.86.208.181
        port: "22"
        privateKeyPath: mks-test.pem
        username: ubuntu
  type: mks
```

Important

Illustrative examples of "cluster specifications" are available for use in this Public Git Repository.

Extended MKS Configuration Spec

Below is an extended MKS config specification that includes the following additional parameters:

- systemComponentsPlacement

- ssh: SSH credentials used by the GitOps Agent to perform actions on the cluster nodes. The cluster-level SSH section provides default settings for all nodes, which can be overridden at the node level:

  - port

  - privateKeyPath

  - username

  - passphrase

```yaml
apiVersion: infra.k8smgmt.io/v3
kind: Cluster
metadata:
  name: demo-v3-cluster
  project: defaultproject
spec:
  blueprint:
    name: minimal
    version: latest
  config:
    autoApproveNodes: true
    highAvailability: true
    kubernetesVersion: v1.28.9
    location: sanjose-us
    ssh:
      privateKeyPath: /home/ubuntu/.ssh/id_rsa
      username: ubuntu
      port: "22"
      passphrase: "test"
    network:
      cni:
        name: Calico
        version: 3.26.1
      podSubnet: 10.244.0.0/16
      serviceSubnet: 10.96.0.0/12
    nodes:
    - arch: amd64
      hostname: demo-mks-node-1
      operatingSystem: Ubuntu20.04
      privateIP: 10.12.48.231
      roles:
      - ControlPlane
      - Worker
      ssh:
        ipAddress: 10.12.54.250
    - arch: amd64
      hostname: demo-mks-node-2
      operatingSystem: Ubuntu20.04
      privateIP: 10.12.101.17
      roles:
      - ControlPlane
      - Worker
      ssh:
        ipAddress: 10.12.54.251
    - arch: amd64
      hostname: demo-mks-node-3
      operatingSystem: Ubuntu20.04
      privateIP: 10.12.96.235
      roles:
      - ControlPlane
      - Worker
      ssh:
        ipAddress: 10.12.54.252
    - arch: amd64
      hostname: demo-mks-node-w-1
      operatingSystem: Ubuntu20.04
      privateIP: 10.12.16.15
      roles:
      - Worker
      ssh:
        ipAddress: 10.12.54.253
        privateKeyPath: /home/ubuntu/.ssh/anotherkey.pem
    systemComponentsPlacement:
      nodeSelector:
        app: infra
      tolerations:
      - effect: NoSchedule
        key: app
        operator: Equal
        value: infra
  type: mks
```

Important

- All SSH fields can be overridden at the node level, which is useful when different nodes use different SSH keys. If no IP address is provided for SSH, the private IP is used to connect to the nodes and run the installer.

- SSH configurations are primarily intended for use with RCTL.

Extended MKS Configuration Spec for System Sync Operation

Below is an extended MKS config specification that includes the following additional parameters to facilitate system synchronization for MKS clusters:

- dedicatedControlPlane

- systemComponentsPlacement

- cloudCredentials

```yaml
apiVersion: infra.k8smgmt.io/v3
kind: Cluster
metadata:
  name: demo-mks-gitsync-ha-103
  project: defaultproject
spec:
  blueprint:
    name: minimal
    version: latest
  cloudCredentials: demo-mks-ssh-v4
  config:
    autoApproveNodes: true
    dedicatedControlPlane: true
    location: sanjose-us
    controlPlane:
      dedicated: true
    kubernetesVersion: v1.28.6
    highAvailability: true
    addons:
    - type: cni
      name: Calico
      version: 3.26.1
    network:
      podSubnet: 10.244.0.0/16
      serviceSubnet: 10.96.0.0/12
    nodes:
    - arch: amd64
      hostname: demo-mks-1
      operatingSystem: Ubuntu20.04
      privateIP: 10.12.4.90
      roles:
      - ControlPlane
    - arch: amd64
      hostname: demo-mks-3
      operatingSystem: Ubuntu20.04
      privateIP: 10.12.101.138
      roles:
      - ControlPlane
      - Worker
    - arch: amd64
      hostname: demo-mks-1
      operatingSystem: Ubuntu20.04
      privateIP: 10.12.33.173
      roles:
      - Worker
      - Master
    - arch: amd64
      hostname: demo-mks-2
      operatingSystem: Ubuntu20.04
      privateIP: 10.12.15.240
      cloudCredentials: demo-mks-ssh-v5
    systemComponentsPlacement:
      nodeSelector:
        app: infra
      tolerations:
      - effect: NoSchedule
        key: app
        operator: Equal
        value: infra
    ssh:
      ipAddress: 158.101.45.62
      port: "22"
      privateKeyPath: /Users/demo/Desktop/ocikeys/mks2.pem
      username: user1
    proxy: {}
  type: mks
```

To create a cluster using this extended config spec, run the below command:

./rctl apply -f <cluster file name.yaml> --v3

Conjurer Changes for rctl apply

The following change applies only to users who provision clusters through the rctl apply path.

- Previous behavior:

  - Conjurer binaries were stored in the user's home directory.

  - In environments using NFS volumes, this could lead to file corruption when multiple nodes attempted to write to the same file during rctl apply.

- New behavior:

  - Conjurer binaries have been moved to /usr/bin.

  - As a result, concurrent writes by multiple nodes no longer cause corruption.

- Passphrase and certificate storage:

  - The passphrase (txt) and certificate (PEM file) are now stored in /tmp.

- Changes required:

  - If your automation scripts use rctl apply and reference the old home directory path, update the path to /usr/bin for executing the Conjurer when provisioning clusters or removing cluster nodes. This is necessary for the Conjurer binary to execute correctly.

Note: Because the passphrase and PEM file are stored in /tmp, they are automatically removed on reboot.

Once the rctl apply command runs successfully, the following actions are performed:

- Create the cluster on the controller

- Download the Conjurer and credentials

- Copy (SCP) the Conjurer and credentials to the node

- Run the Conjurer

- Configure the node role and interface

- Start provisioning

Note

Currently, only SSH key-based authentication is supported for copying files (SCP) to the nodes.

Provision Status

During cluster provisioning, you can monitor the status as shown below:

./rctl get cluster <cluster-name> -o json | jq .status

The command returns READY when provisioning is complete.
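For automation, the status check can be wrapped in a polling loop. The following is a minimal Bash sketch under a few assumptions: rctl is at ./rctl (override with the RCTL variable), jq is installed, and `.status` is a plain string. `cluster_status` and `wait_until_ready` are hypothetical helper names and are not part of rctl. The sketch uses `jq -r` so that the status is compared without its surrounding JSON quotes.

```shell
#!/usr/bin/env bash
# Minimal sketch: poll until a cluster reports READY.
# Assumptions: rctl is at ./rctl unless RCTL is set, jq is installed,
# and .status in `rctl get cluster -o json` is a plain string.

RCTL="${RCTL:-./rctl}"

# cluster_status NAME: print the cluster status without JSON quotes (jq -r).
cluster_status() {
  "$RCTL" get cluster "$1" -o json | jq -r '.status'
}

# wait_until_ready STATUS_FN TARGET MAX_ATTEMPTS INTERVAL_SECONDS
# Calls STATUS_FN TARGET until it prints READY.
# Returns 0 once READY is seen, 1 after MAX_ATTEMPTS unsuccessful checks.
wait_until_ready() {
  local status_fn="$1" target="$2" max="$3" interval="$4"
  local attempt=1 status
  while :; do
    status="$("$status_fn" "$target")"
    echo "attempt ${attempt}/${max}: ${target} status=${status}"
    [ "$status" = "READY" ] && return 0
    [ "$attempt" -ge "$max" ] && return 1
    attempt=$((attempt + 1))
    sleep "$interval"
  done
}
```

For example, `wait_until_ready cluster_status test-mks 60 30` checks every 30 seconds for roughly 30 minutes. These values are illustrative. Passing the status function as an argument keeps the loop independent of rctl, so it can be tested with a stub.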

Add Nodes

To add nodes to a cluster, add the node details to the cluster config YAML file and apply it with the following command:

./rctl apply -f <cluster-filename.yaml>

Example:

Add the following node details to the YAML file under the nodes key:

```yaml
- hostname: rctl-mks-1
  operatingSystem: "Ubuntu18.04"
  arch: amd64
  privateIP: 10.109.23.6
  roles:
  - Worker
  - Storage
  labels:
    key1: value1
    key2: value2
  taints:
  - effect: NoSchedule
    key: app
    value: infra
  ssh:
    privateKeyPath: "ssh-key-2020-11-11.key"
    ipAddress: 10.109.23.6
    username: ubuntu
    port: "22"
```

Then run the following command to apply the updated YAML file and add the nodes to the cluster:

./rctl apply -f <cluster_filename.yaml>

Once the rctl apply command runs successfully, the following actions are performed:

- Download the Conjurer and credentials

- Copy (SCP) the Conjurer and credentials to the node

- Run the Conjurer

- Configure the node role and interface

- Start provisioning

For more examples of MKS cluster spec, refer here

Node Provision Status

Once the node is added, provisioning starts automatically. You can monitor the node's provisioning status as shown below:

rctl get cluster <cluster-name> -o json | jq -r -c '.nodes[] | select(.hostname=="<hostname of the node>") | .status'

The command returns READY when provisioning of the node is complete.
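When a single apply adds several nodes, the per-node check can be repeated for each hostname until all of them are ready. The following is a minimal Bash sketch under these assumptions: rctl is at ./rctl (or $RCTL), jq is installed, and the caller sets CLUSTER_NAME. `node_status`, `pending_nodes`, `wait_for_nodes`, and `NODE_STATUS_FN` are hypothetical names. The sketch reuses the jq filter from the command above but passes the hostname with jq's `--arg` option, so the filter does not have to be edited by hand.

```shell
#!/usr/bin/env bash
# Minimal sketch: wait until every newly added node reports READY.
# Assumptions: rctl at ./rctl unless RCTL is set, jq installed,
# CLUSTER_NAME set by the caller. All helper names are hypothetical.

RCTL="${RCTL:-./rctl}"

# node_status HOST: print one node's status, using the jq filter from the docs.
node_status() {
  "$RCTL" get cluster "$CLUSTER_NAME" -o json |
    jq -r -c --arg h "$1" '.nodes[] | select(.hostname==$h) | .status'
}

# Function used to look up a node's status (can be swapped for a stub).
NODE_STATUS_FN="${NODE_STATUS_FN:-node_status}"

# pending_nodes HOST...: print each host that is not READY yet, one per line.
pending_nodes() {
  local host
  for host in "$@"; do
    if [ "$("$NODE_STATUS_FN" "$host")" != "READY" ]; then
      echo "$host"
    fi
  done
}

# wait_for_nodes MAX_ATTEMPTS INTERVAL_SECONDS HOST...
# Returns 0 when all hosts are READY, 1 after MAX_ATTEMPTS checks.
wait_for_nodes() {
  local max="$1" interval="$2" attempt=1 pending
  shift 2
  while :; do
    pending="$(pending_nodes "$@")"
    [ -z "$pending" ] && return 0
    # $pending is left unquoted on purpose to print the hosts on one line.
    echo "attempt ${attempt}/${max}: waiting for:" $pending
    [ "$attempt" -ge "$max" ] && return 1
    attempt=$((attempt + 1))
    sleep "$interval"
  done
}
```

Usage example: set `CLUSTER_NAME=test-mks`, then run `wait_for_nodes 60 30 rctl-mks-1`. Both values are illustrative.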

Bulk Node Deletion

The MKS Bulk Node Deletion feature lets you delete multiple nodes in an MKS cluster simultaneously using RCTL. It is recommended to delete no more than 100 nodes at a time.

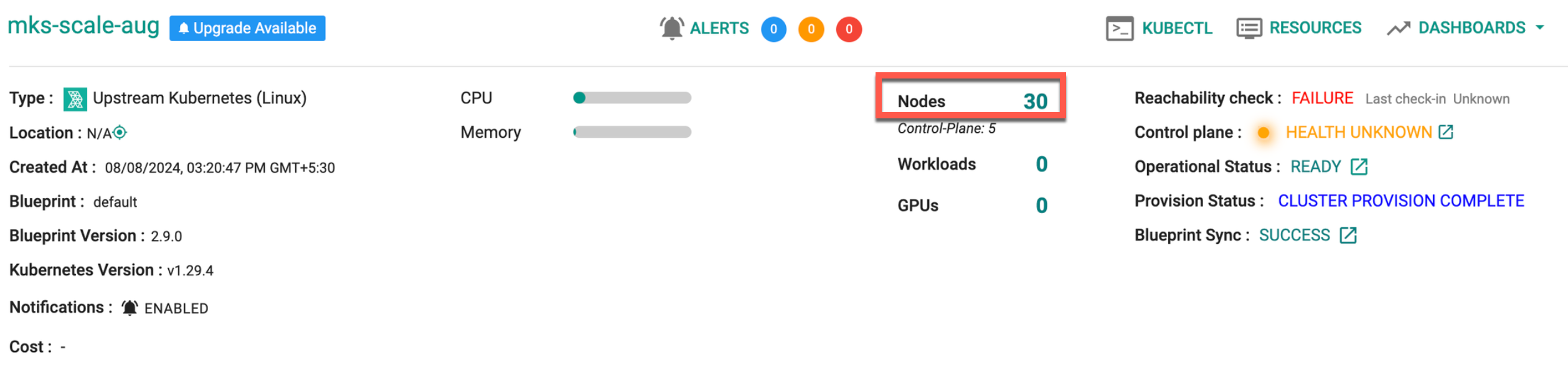

Below is an example of a cluster with 30 nodes:

- To remove these nodes from the MKS cluster using RCTL, delete their entries from the nodes section of the cluster spec and run:

./rctl apply -f <cluster_filename.yaml>

Below is the output of this deletion process:

```json
{
  "taskset_id": "dk64021",
  "operations": [
    {
      "operation": "BulkNodeDelete",
      "resource_name": "mks-scale-aug",
      "status": "PROVISION_TASK_STATUS_INPROGRESS"
    }
  ],
  "comments": "Configuration is being applied to the cluster",
  "status": "PROVISION_TASKSET_STATUS_INPROGRESS"
}
```

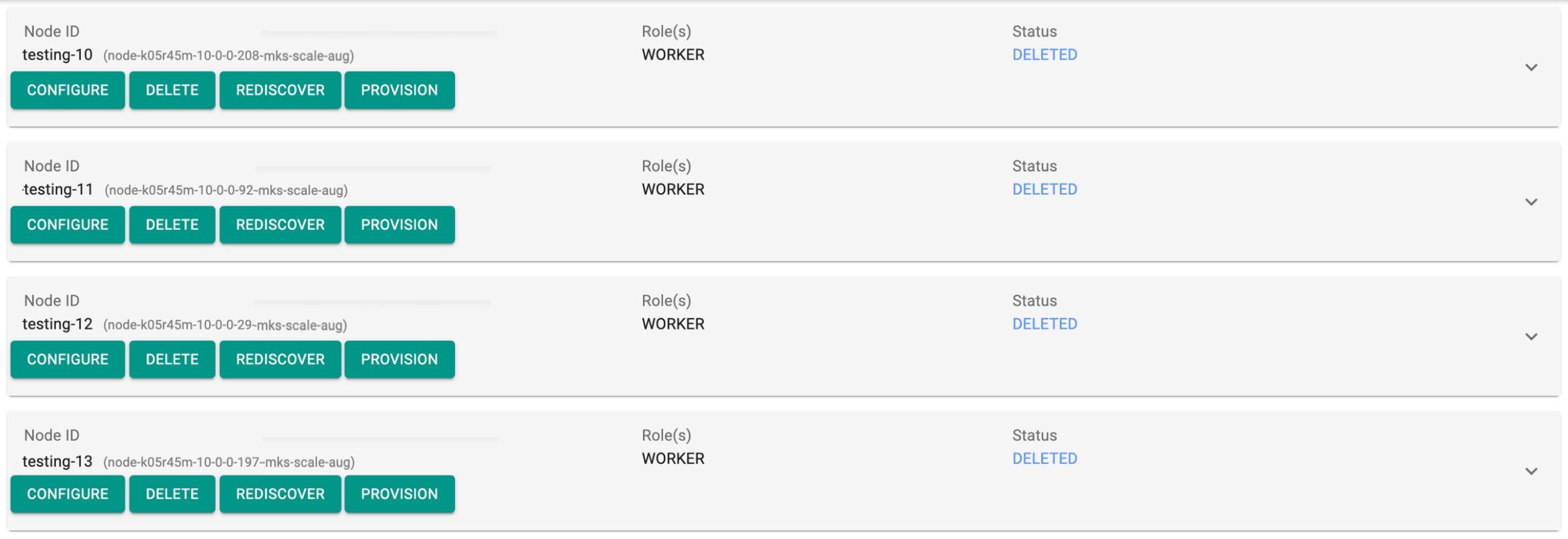

The status of the nodes being removed changes to Deleting.

- To check the status of the node deletion operation, run the following command with the taskset_id from the apply output:

./rctl status apply <taskset_id>

- Once node deletion is complete, the status is shown as Deleted.

Note: It takes approximately 12 minutes to complete the deletion of 100 nodes.
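A script can also capture the taskset_id instead of having you copy it by hand. The following is a minimal Bash sketch under two assumptions: rctl is at ./rctl (or $RCTL), and the apply output contains a `"taskset_id": "<id>"` line like the example output in this section. `extract_taskset_id` and `apply_and_report` are hypothetical names. The sketch parses the ID with sed, so jq is not required, and any log lines printed before the JSON are ignored.

```shell
#!/usr/bin/env bash
# Minimal sketch: capture the taskset_id printed by `rctl apply` and pass it
# to `rctl status apply`. Assumptions: rctl at ./rctl unless RCTL is set, and
# the apply output contains a line like  "taskset_id": "dk64021",
# Function names are hypothetical.

RCTL="${RCTL:-./rctl}"

# extract_taskset_id: read apply output on stdin, print the first taskset_id.
extract_taskset_id() {
  sed -n 's/.*"taskset_id": *"\([^"]*\)".*/\1/p' | head -n 1
}

# apply_and_report FILE: apply the spec, then show the status of its taskset.
apply_and_report() {
  local output id
  output="$("$RCTL" apply -f "$1")" || return 1
  id="$(printf '%s\n' "$output" | extract_taskset_id)"
  if [ -z "$id" ]; then
    echo "no taskset_id found in apply output" >&2
    return 1
  fi
  echo "taskset_id: $id"
  "$RCTL" status apply "$id"
}
```

Usage example: `apply_and_report <cluster_filename.yaml>`. You can rerun `./rctl status apply <taskset_id>` with the printed ID until the nodes show as Deleted.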

Force Delete Node

To force delete nodes, remove them from the cluster configuration and apply the updated configuration with the --force flag, as shown below. The nodes are then deleted immediately, bypassing any validation or blocking errors.

rctl apply -f <cluster_config.yaml> --force

K8s Upgrade Strategy

To upgrade the nodes, add the upgrade strategy parameters to the specification, using either the concurrent or the sequential strategy. The following illustrative configuration file includes these parameters:

```yaml
apiVersion: infra.k8smgmt.io/v3
kind: Cluster
metadata:
  name: qc-mks-cluster-1
  project: test-project
spec:
  blueprint:
    name: default
  config:
    autoApproveNodes: true
    kubernetesVersion: v1.30.4
    kubernetesUpgrade:
      strategy: concurrent
      params:
        workerConcurrency: "80%"
    network:
      cni:
        name: Calico
        version: 3.24.5
      ipv6:
        podSubnet: 2001:db8:42:0::/56
        serviceSubnet: 2001:db8:42:1::/112
      podSubnet: 10.244.0.0/16
      serviceSubnet: 10.96.0.0/12
    nodes:
    - arch: amd64
      hostname: mks-node-1
      operatingSystem: Ubuntu20.04
      privateip: 10.0.0.81
      roles:
      - Worker
      - Master
      ssh: {}
    - arch: amd64
      hostname: mks-node-2
      operatingSystem: Ubuntu20.04
      privateip: 10.0.0.155
      roles:
      - Worker
      ssh: {}
    - arch: amd64
      hostname: mks-node-3
      operatingSystem: Ubuntu20.04
      privateip: 10.0.0.169
      roles:
      - Worker
      ssh: {}
    - arch: amd64
      hostname: mks-node-4
      operatingSystem: Ubuntu20.04
      privateip: 10.0.0.196
      roles:
      - Worker
      ssh: {}
    - arch: amd64
      hostname: mks-node-5
      operatingSystem: Ubuntu20.04
      privateip: 10.0.0.115
      roles:
      - Worker
      ssh: {}
    - arch: amd64
      hostname: mks-node-6
      operatingSystem: Ubuntu20.04
      privateip: 10.0.0.159
      roles:
      - Worker
      ssh: {}
    proxy: {}
  type: mks
```

For the concurrent strategy, set a value for workerConcurrency. For the sequential strategy, workerConcurrency is not required. Refer to the K8s Upgrade page for more information.

Cordon/Uncordon Nodes

- Mark the node as unschedulable:

./rctl cordon node <node-name> --cluster <cluster-name>

- Drain the node to evict all running pods except DaemonSet pods:

./rctl drain node <node-name> --cluster <cluster-name> --ignore-daemonsets --delete-emptydir-data

- Uncordon the node to make it schedulable again:

./rctl uncordon node <node-name> --cluster <cluster-name>
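The three steps above can be combined into a single maintenance wrapper. The following is a minimal Bash sketch that assumes rctl is at ./rctl (or $RCTL). `with_node_drained` is a hypothetical helper, and the maintenance step is whatever command you pass in.

```shell
#!/usr/bin/env bash
# Minimal sketch: cordon and drain a node, run a maintenance command, then
# uncordon it. Assumption: rctl at ./rctl unless RCTL is set.
# with_node_drained is a hypothetical helper name.

RCTL="${RCTL:-./rctl}"

# with_node_drained NODE CLUSTER CMD [ARGS...]
with_node_drained() {
  local node="$1" cluster="$2" rc
  shift 2
  "$RCTL" cordon node "$node" --cluster "$cluster" || return 1
  if ! "$RCTL" drain node "$node" --cluster "$cluster" \
      --ignore-daemonsets --delete-emptydir-data; then
    # Leave the node cordoned so the failed drain can be investigated.
    echo "drain failed; node $node is left cordoned" >&2
    return 1
  fi
  "$@"
  rc=$?
  # Uncordon even if the maintenance command failed, so the node does not
  # stay unschedulable by accident; then report the command's exit code.
  "$RCTL" uncordon node "$node" --cluster "$cluster" || return 1
  return "$rc"
}
```

Usage example: `with_node_drained <node-name> <cluster-name> ./my-maintenance.sh`, where `my-maintenance.sh` is a placeholder for your own script. If the drain fails, the sketch deliberately leaves the node cordoned.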

Delete Cluster

You can delete one or more clusters with a single command:

./rctl delete cluster <mkscluster-name>

(or)

./rctl delete cluster <mkscluster1-name> <mkscluster2-name>

Dry Run

The dry run option is available for operations such as cluster provisioning, K8s upgrades, blueprint upgrades, and node operations (for example, node addition, node deletion, labels, and taints). It previews the changes before they are executed. You can then review the potential modifications, identify and address issues, and confirm that the intended changes match your infrastructure requirements before applying them, whether you are provisioning a new cluster or updating an existing one.

./rctl apply -f <cluster_filename.yaml> --dry-run
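In a CI pipeline, the dry run can serve as a gate before the real apply. The following is a minimal Bash sketch under two assumptions: rctl is at ./rctl (or $RCTL), and rctl exits with a non-zero status when the dry run reports an error, such as the HTTP 400 error in the incorrect K8s version example. `dry_run_then_apply` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Minimal sketch: preview a change with --dry-run and apply it only if the
# preview succeeds. Assumption (not confirmed by the rctl docs): rctl exits
# non-zero when the dry run reports an error.

RCTL="${RCTL:-./rctl}"

# dry_run_then_apply FILE
dry_run_then_apply() {
  local spec="$1"
  echo "== dry run: $spec"
  if ! "$RCTL" apply -f "$spec" --dry-run; then
    echo "dry run failed; not applying $spec" >&2
    return 1
  fi
  echo "== applying: $spec"
  "$RCTL" apply -f "$spec"
}
```

Usage example: `dry_run_then_apply <cluster_filename.yaml>`. If your rctl version reports dry-run errors without a non-zero exit code, this gate does not stop the apply. In that case, inspect the dry-run output instead.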

Example

- Node Addition - Day 2 operation

Below is an example of the output from the dry run command when a user tries to add a node on Day 2:

./rctl apply -f cluster_file.yaml --dry-run

```text
Running echo $HOSTNAME on 34.211.224.152
Running PATH=$PATH:/usr/sbin ip -f inet addr show on 34.211.224.152
Running command -v bzip2 on 34.211.224.152 to verify if bzip2 is present on node
Running command -v wget on 34.211.224.152 to verify if wget is present on node
{
  "operations": [
    {
      "operation": "NodeAddition",
      "resource_name": "ip-172-31-27-101"
    }
  ]
}
```

Dry Run Output

- The output indicates a successful dry run.

- The operation is "NodeAddition", indicating the intent to add a node to the cluster.

- The resource_name is "ip-172-31-27-101", the hostname of the node being added.

- Incorrect K8s version - Day 2 operation

Below is an example of the output from the dry run command when a user specifies an unsupported K8s version:

./rctl apply -f check1.yaml --dry-run

Error: Error performing apply on cluster "mks-test-1-calico": server error [return code: 400]: {"operations":null,"error":{"type":"Processing Error","status":400,"title":"Processing request failed","detail":{"Message":"Kubernetes version v1.28.3 not supported\n"}}}

Dry Run Output

The output indicates that an error occurred while applying changes to the cluster "mks-test-1-calico". The server returned HTTP status code 400 (Bad Request) because Kubernetes version v1.28.3 is not supported.