Custom AWS CNI

In the CNI's default configuration, every pod is assigned an IP address from the subnets used by the cluster. This can quickly exhaust the IP addresses in your subnets as you scale out your cluster. In scenarios where it's not possible to recreate the VPC or extend its CIDR block, you can deploy the pod network to a newly created, non-routable secondary CIDR block (e.g. one taken from the 100.64.0.0/10 range) within the VPC. EKS Custom Networking allows you to provision clusters whose pod network uses the subnets in the secondary CIDR block, as defined in an ENIConfig custom resource.

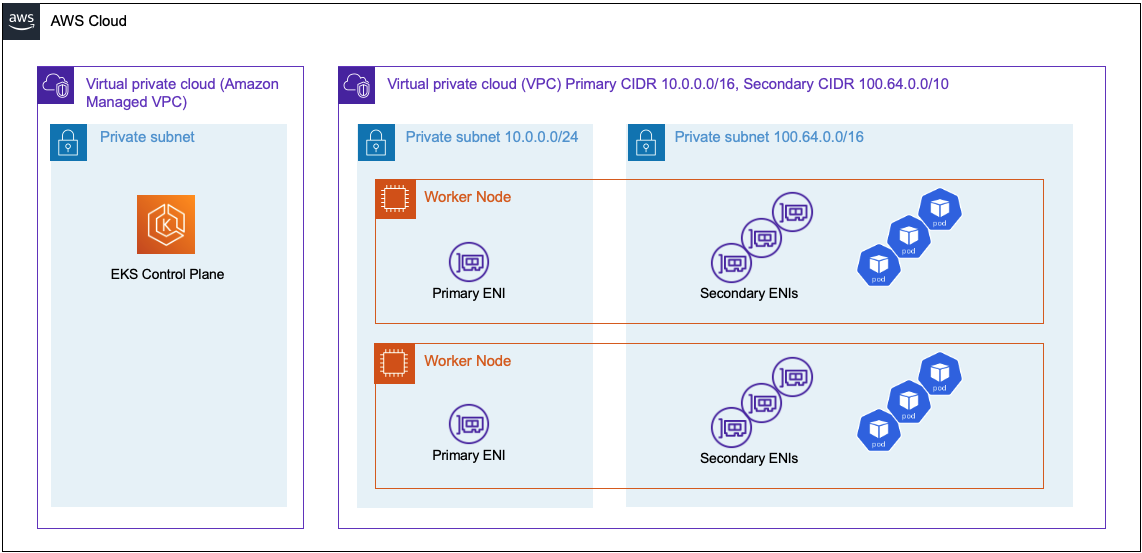

Architecture

In this scenario, the pod network runs in subnets created from the secondary CIDR block. Each worker node's primary Elastic Network Interface (ENI) stays in a subnet from the primary CIDR block, while its secondary ENIs reside in subnets from the secondary CIDR block. Each node attached to the cluster runs its host network on the primary ENI. Only pods that run on a node's host network use the primary ENI, so the primary ENI's secondary IP addresses go unused, which reduces the instance type's maximum pod count. Pod traffic leaving the cluster is routed out through the primary ENI and undergoes source network address translation (SNAT) to the primary ENI's primary IP address.
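The effect on the maximum pod count can be estimated with the commonly cited formula for the VPC CNI in secondary-IP mode. The sketch below is an illustration only: the function name is hypothetical, the ENI and per-ENI IP limits for `t3.large` (3 and 12) are taken from the published EC2 instance limits rather than from this page, and prefix delegation and any platform-level pod caps are ignored.

```python
def max_pods(enis: int, ips_per_eni: int, custom_networking: bool = False) -> int:
    """Estimate the max pod count for the VPC CNI in secondary-IP mode.

    Assumption: no prefix delegation. With custom networking the primary
    ENI is not used for pods, so one ENI is removed from the calculation.
    """
    usable_enis = enis - 1 if custom_networking else enis
    # Each ENI's primary IP is not handed out to pods (hence the -1).
    # The +2 accounts for host-network pods such as aws-node and kube-proxy.
    return usable_enis * (ips_per_eni - 1) + 2


# Assumed EC2 limits for t3.large: 3 ENIs, 12 IPv4 addresses per ENI.
T3_LARGE_ENIS = 3
T3_LARGE_IPS_PER_ENI = 12

default_count = max_pods(T3_LARGE_ENIS, T3_LARGE_IPS_PER_ENI)
custom_count = max_pods(T3_LARGE_ENIS, T3_LARGE_IPS_PER_ENI, custom_networking=True)

print(f"default networking: {default_count} pods")
print(f"custom networking:  {custom_count} pods")
```

Under these assumptions a `t3.large` drops from 35 to 24 schedulable pods, which is worth factoring into node group sizing.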


VPC Configuration

Use the following VPC configuration as a reference for the content below.

| Name | Subnet-Id | CIDR | AZ |
|---|---|---|---|
| SecondarySubnetPrivateUSWEST2A | subnet-081ff5e370607fafa | 100.64.0.0/20 | us-west-2a |
| SecondarySubnetPrivateUSWEST2B | subnet-0d336d3350d55a986 | 100.64.16.0/20 | us-west-2c |
| SecondarySubnetPrivateUSWEST2D | subnet-0a4548dabae4b34cb | 100.64.32.0/20 | us-west-2d |
| PrimarySubnetPrivateUSWEST2A | subnet-083bf5944d5ecb3dd | 10.0.96.0/19 | us-west-2a |
| PrimarySubnetPrivateUSWEST2B | subnet-0bce0fb4a1f682e13 | 10.0.128.0/19 | us-west-2c |
| PrimarySubnetPrivateUSWEST2D | subnet-0f4534f41b98dd7be | 10.0.160.0/19 | us-west-2d |
| PrimarySubnetPublicUSWEST2A | subnet-0ad39284a3ed57cfe | 10.0.0.0/19 | us-west-2a |
| PrimarySubnetPublicUSWEST2B | subnet-0238aec96d29bc809 | 10.0.32.0/19 | us-west-2c |
| PrimarySubnetPublicUSWEST2D | subnet-0fb450e17506bd15d | 10.0.64.0/19 | us-west-2d |
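Before wiring the secondary subnets into a cluster, it can help to sanity-check them. The following is a minimal sketch using only Python's standard `ipaddress` module; the helper names are hypothetical, the subnet IDs and CIDRs are copied from the table above, and the count of five reserved addresses per subnet reflects standard AWS VPC behavior rather than anything stated on this page.

```python
import ipaddress
from itertools import combinations

# Non-routable range that the secondary CIDR block is drawn from.
SECONDARY_RANGE = ipaddress.ip_network("100.64.0.0/10")
# AWS reserves the first four addresses and the last address of every subnet.
AWS_RESERVED_PER_SUBNET = 5

# Secondary subnets from the reference VPC configuration.
secondary_subnets = {
    "subnet-081ff5e370607fafa": ipaddress.ip_network("100.64.0.0/20"),
    "subnet-0d336d3350d55a986": ipaddress.ip_network("100.64.16.0/20"),
    "subnet-0a4548dabae4b34cb": ipaddress.ip_network("100.64.32.0/20"),
}


def check_subnets(subnets, parent):
    """Return a list of human-readable problems (empty if none found)."""
    problems = []
    for subnet_id, net in subnets.items():
        if not net.subnet_of(parent):
            problems.append(f"{subnet_id} ({net}) is outside {parent}")
    for (id_a, net_a), (id_b, net_b) in combinations(subnets.items(), 2):
        if net_a.overlaps(net_b):
            problems.append(f"{id_a} overlaps {id_b}")
    return problems


def usable_addresses(net):
    """Addresses left for pods after the AWS-reserved ones are removed."""
    return net.num_addresses - AWS_RESERVED_PER_SUBNET


problems = check_subnets(secondary_subnets, SECONDARY_RANGE)
print("problems:", problems or "none")
for subnet_id, net in secondary_subnets.items():
    print(subnet_id, net, usable_addresses(net), "usable addresses")
```

Each /20 subnet leaves roughly four thousand addresses for pods in its Availability Zone, which is the headroom custom networking is meant to provide.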

Sample Cluster Specification

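When you create a cluster from a cluster specification, you can define which subnets from the secondary CIDR block the cluster's pod network will use. The ENI configurations are defined under the `network` section, in `customCniCrdSpec`, with one entry per Availability Zone listing the subnet and its security groups.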

```yaml
apiVersion: infra.k8smgmt.io/v3
kind: Cluster
metadata:
  name: my-eks-cluster
  project: my-project
spec:
  blueprintConfig:
    name: default
  cloudCredentials: my-cloud-credential
  config:
    addons:
    - name: aws-ebs-csi-driver
      version: latest
    managedNodeGroups:
    - amiFamily: AmazonLinux2
      desiredCapacity: 1
      iam:
        withAddonPolicies:
          autoScaler: true
      instanceType: t3.large
      maxSize: 6
      minSize: 1
      name: my-ng
      privateNetworking: true
      version: "1.23"
      volumeSize: 80
      volumeType: gp3
    metadata:
      name: my-eks-cluster
      region: us-west-2
      tags:
        email: rafay@rafay.co
        env: dev
      version: "1.23"
    network:
      cni:
        name: aws-cni
        params:
          customCniCrdSpec:
            us-west-2a:
            - securityGroups:
              - sg-0f502b379c12735ce
              subnet: subnet-081ff5e370607fafa
            us-west-2c:
            - securityGroups:
              - sg-0f502b379c12735ce
              subnet: subnet-0d336d3350d55a986
            us-west-2d:
            - securityGroups:
              - sg-0f502b379c12735ce
              subnet: subnet-0a4548dabae4b34cb
    vpc:
      clusterEndpoints:
        privateAccess: true
        publicAccess: false
      nat:
        gateway: Single
      subnets:
        private:
          subnet-083bf5944d5ecb3dd:
            id: subnet-083bf5944d5ecb3dd
          subnet-0bce0fb4a1f682e13:
            id: subnet-0bce0fb4a1f682e13
          subnet-0f4534f41b98dd7be:
            id: subnet-0f4534f41b98dd7be
        public:
          subnet-0238aec96d29bc809:
            id: subnet-0238aec96d29bc809
          subnet-0ad39284a3ed57cfe:
            id: subnet-0ad39284a3ed57cfe
          subnet-0fb450e17506bd15d:
            id: subnet-0fb450e17506bd15d
  proxyConfig: {}
  type: aws-eks
```
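To make the relationship between `customCniCrdSpec` and the ENIConfig custom resource more concrete, the sketch below builds the ENIConfig objects that such a mapping would plausibly correspond to. This is an assumption for illustration: the page does not describe how the platform materializes these resources, and naming each ENIConfig after its Availability Zone follows the usual upstream VPC CNI convention. The `eni_config` helper is hypothetical; the subnet and security group IDs are copied from the specification above.

```python
import json

# Per-AZ entries copied from the customCniCrdSpec section of the cluster spec.
custom_cni_crd_spec = {
    "us-west-2a": [{"securityGroups": ["sg-0f502b379c12735ce"],
                    "subnet": "subnet-081ff5e370607fafa"}],
    "us-west-2c": [{"securityGroups": ["sg-0f502b379c12735ce"],
                    "subnet": "subnet-0d336d3350d55a986"}],
    "us-west-2d": [{"securityGroups": ["sg-0f502b379c12735ce"],
                    "subnet": "subnet-0a4548dabae4b34cb"}],
}


def eni_config(az, entry):
    """Build one ENIConfig object (as a dict) for an Availability Zone."""
    return {
        "apiVersion": "crd.k8s.amazonaws.com/v1alpha1",
        "kind": "ENIConfig",
        # Assumption: the resource is named after the AZ it serves.
        "metadata": {"name": az},
        "spec": {
            "securityGroups": list(entry["securityGroups"]),
            "subnet": entry["subnet"],
        },
    }


# One ENIConfig per (AZ, entry) pair in the specification.
manifests = [
    eni_config(az, entry)
    for az, entries in custom_cni_crd_spec.items()
    for entry in entries
]

print(json.dumps(manifests[0], indent=2))
```

Printing the first object shows the shape of an ENIConfig for `us-west-2a`: a secondary-CIDR subnet plus the security groups applied to the pod ENIs.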

Verify

Pods running on the host network will have IP Addresses from a subnet within the primary CIDR block. All other pods will have an IP Address from subnets defined from the secondary CIDR block.

```
# kubectl get nodes -o custom-columns='HOSTNAME:status.addresses[1].address','IP:status.addresses[0].address'
HOSTNAME                                        IP
ip-192-168-130-168.us-west-2.compute.internal   10.0.130.168
```

```
# kubectl get pods -A -o custom-columns='NAME:.metadata.name','IP:.status.podIP' --sort-by={.status.podIP}
NAME                                                  IP
coredns-686c48cb5c-xb7mn                              100.64.11.5
rafay-prometheus-metrics-server-866bfd9c7f-xzljc      100.64.11.16
rafay-prometheus-kube-state-metrics-c4cc84848-mkt9n   100.64.11.20
log-router-nm2kw                                      100.64.11.28
ebs-csi-controller-85cdfd99df-9thgg                   100.64.11.47
ebs-csi-controller-85cdfd99df-x6k2p                   100.64.11.82
edge-client-555d7dddfc-gj2j2                          100.64.11.94
controller-manager-v3-7bc9f78c8-sjz94                 100.64.11.119
rafay-connector-v3-566596c754-cqvbp                   100.64.11.121
rafay-prometheus-adapter-959579b9c-lpvxf              100.64.11.127
rafay-prometheus-alertmanager-0                       100.64.11.134
coredns-686c48cb5c-gz76b                              100.64.11.147
ingress-controller-v1-controller-pbl7c                100.64.11.164
rafay-prometheus-node-exporter-lcvk6                  100.64.11.167
log-aggregator-5dd6778f8d-vk7d6                       100.64.11.171
rafay-prometheus-server-0                             100.64.11.174
relay-agent-74dc49d464-gv4bk                          100.64.11.185
rafay-prometheus-helm-exporter-d96dfbfd6-gfqnh        100.64.11.201
ebs-csi-node-p4xk9                                    100.64.11.210
aws-node-termination-handler-v3-2p7wj                 10.0.130.168
kube-proxy-bckgr                                      10.0.130.168
aws-node-dnck4                                        10.0.130.168
```
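The same check can be scripted. The sketch below classifies a few of the pod IPs from the output above by whether they fall inside the secondary range; the `classify` helper is hypothetical, and treating every address outside 100.64.0.0/10 as belonging to the primary CIDR block is a simplifying assumption that holds for this example VPC.

```python
import ipaddress

# Range the secondary CIDR block is drawn from in this example.
SECONDARY_RANGE = ipaddress.ip_network("100.64.0.0/10")

# A few (pod name, pod IP) pairs copied from the kubectl output above.
sample_pods = {
    "coredns-686c48cb5c-xb7mn": "100.64.11.5",
    "ebs-csi-node-p4xk9": "100.64.11.210",
    "kube-proxy-bckgr": "10.0.130.168",
    "aws-node-dnck4": "10.0.130.168",
}


def classify(pod_ip):
    """Return 'secondary' for pod-network IPs, 'primary' otherwise.

    Assumption: anything outside SECONDARY_RANGE is on the host network
    and therefore uses an address from the primary CIDR block.
    """
    if ipaddress.ip_address(pod_ip) in SECONDARY_RANGE:
        return "secondary"
    return "primary"


results = {name: classify(ip) for name, ip in sample_pods.items()}
for name, network in results.items():
    print(f"{name}: {network}")
```

Pods reported as `primary` should be limited to those that run on the host network, such as `kube-proxy` and `aws-node`.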