Part 2: Scale

What Will You Do

In part 2, you will:

- Scale the number of nodes in a node pool

- Add a "User" node pool to the cluster

- Remove a "User" node pool from the cluster

Watch a video of this exercise.

Assumptions

This part assumes that you have completed Part 1 of this series and have a successfully provisioned and healthy AKS cluster.

The steps below cover three methods to scale your AKS cluster: the web console, the RCTL CLI, and Terraform. Follow the one you used to provision the cluster.

Step 1: Scale Nodes

In this step, we will scale the number of nodes within the cluster. You can scale the number of nodes up or down, depending on your needs. In this example, we will scale the number of nodes up to 2.

- Navigate to the previously created project in your Org

- Select Infrastructure -> Clusters

- Click on the cluster name of the previously created cluster

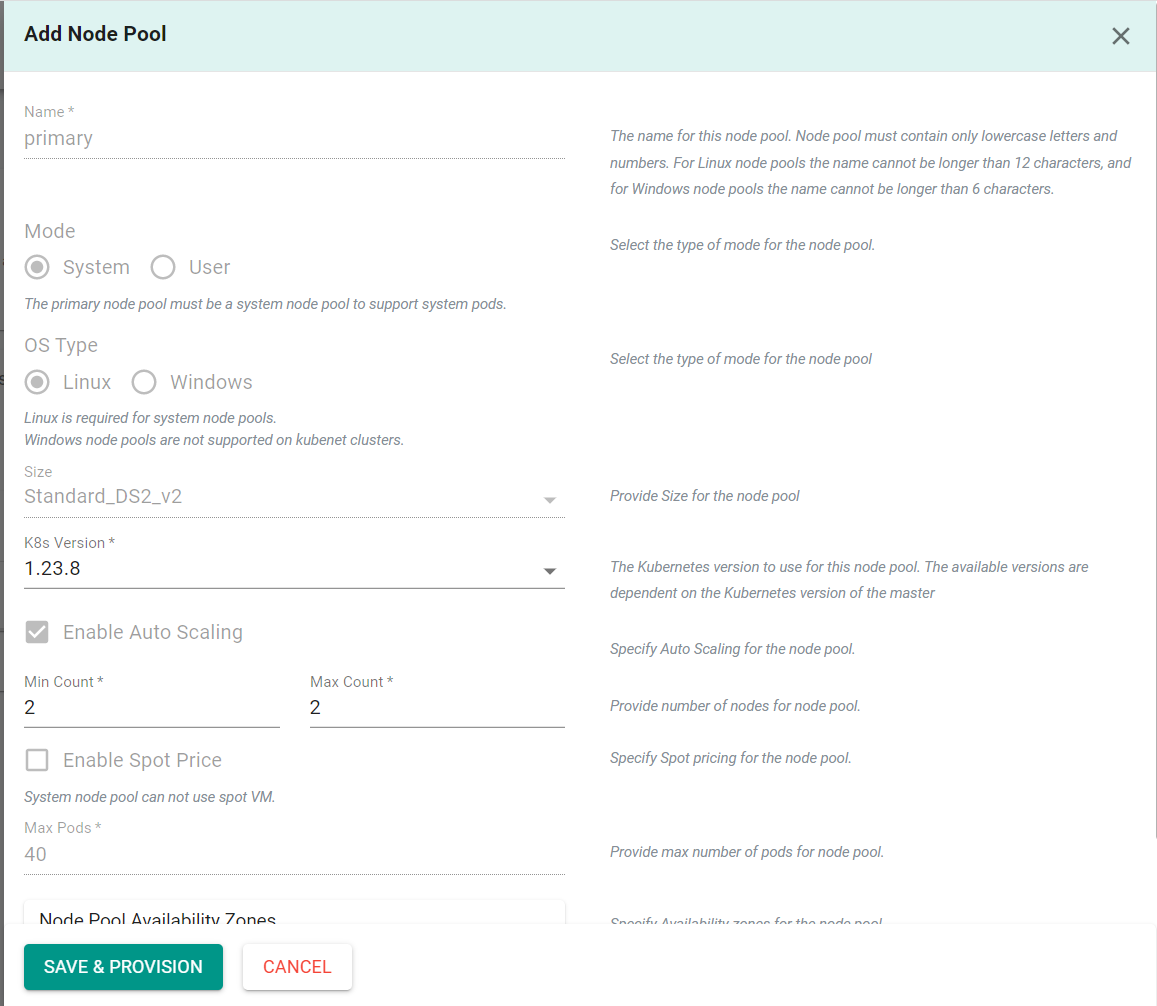

- Click the "Node Pools" tab

- Click the edit button on the existing node pool

- Increase the "Min Count" and "Max Count" to "2"

- Click "Save & Provision"

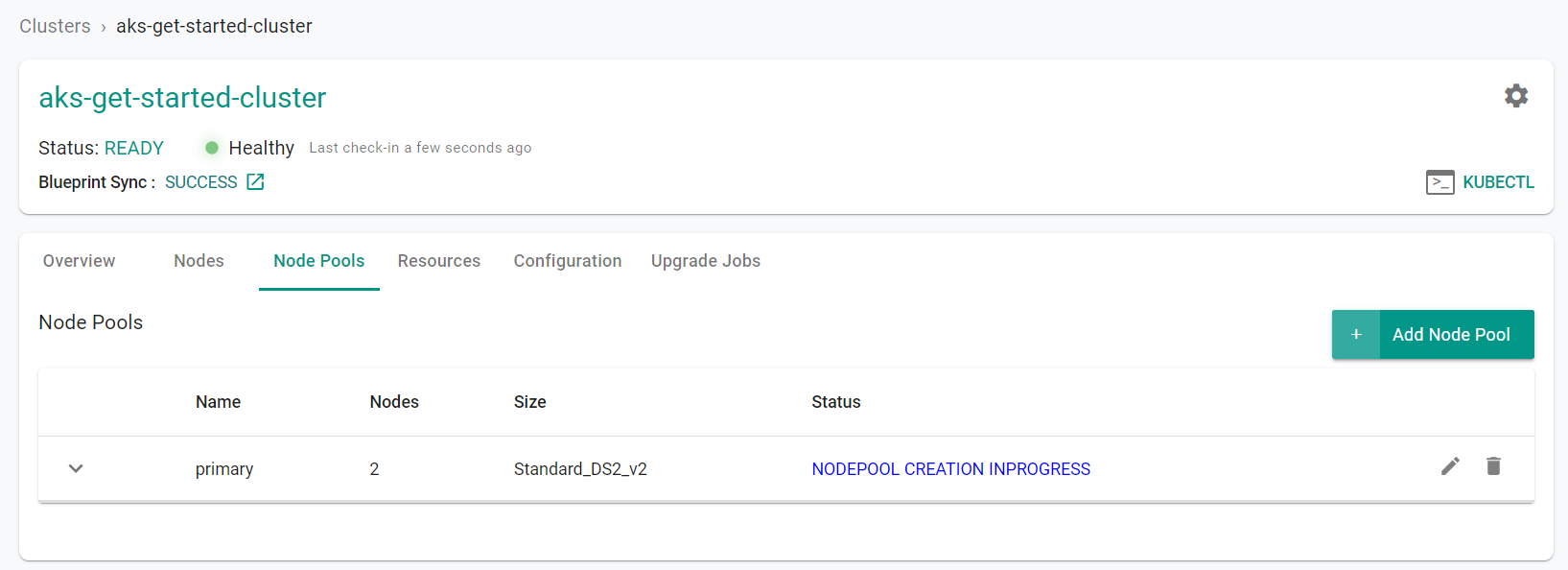

You will see the node pool begin to scale.

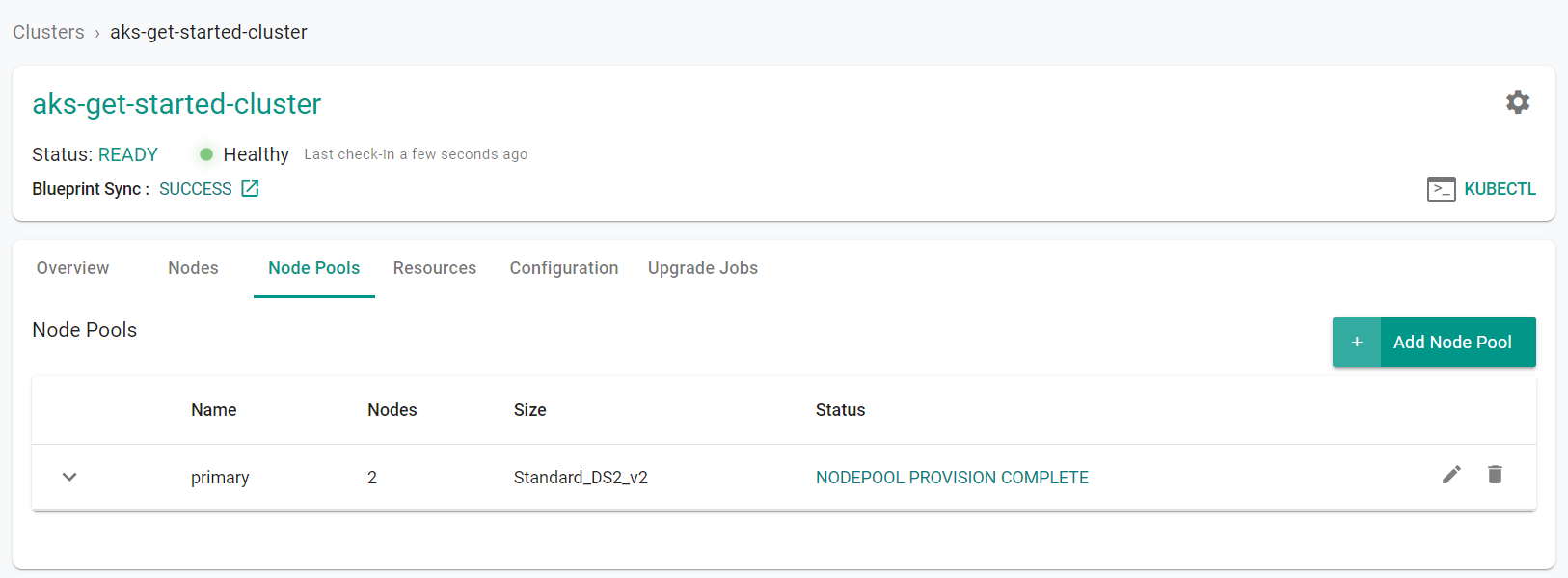

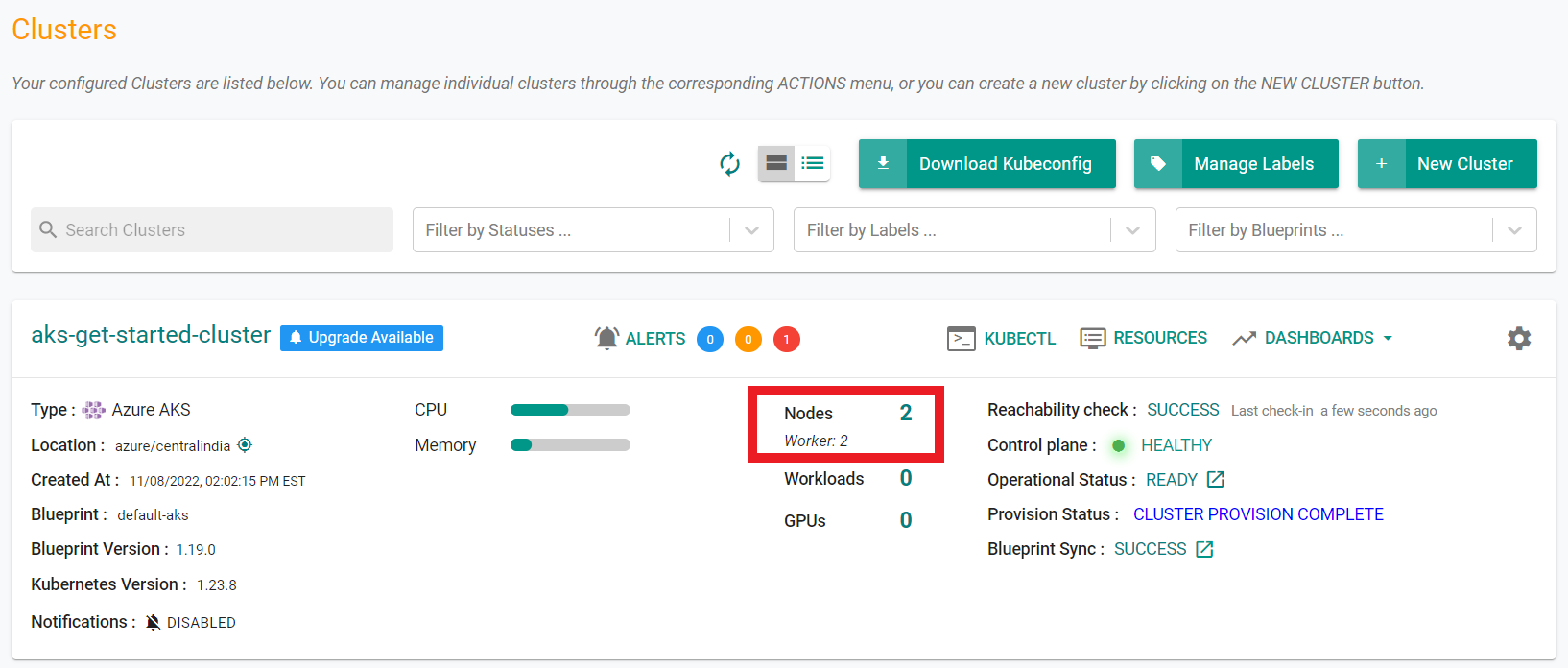

After a few minutes, the web console shows that the number of nodes in the node pool has scaled to 2.

To scale the node pool with the RCTL CLI, first download the cluster config from the existing cluster:

- Go to Infrastructure -> Clusters. Click on the settings icon of the cluster and select "Download Cluster Config"

- Update the "count", "maxCount" and "minCount" fields to "2" in the downloaded specification file

    properties:
      count: 1
      enableAutoScaling: true
      maxCount: 1
      maxPods: 40
      minCount: 1
      mode: System
      orchestratorVersion: 1.23.8
      osType: Linux
      type: VirtualMachineScaleSets
      vmSize: Standard_DS2_v2

The updated YAML file will look like this:

    apiVersion: rafay.io/v1alpha1
    kind: Cluster
    metadata:
      name: aks-get-started-cluster
      project: aks
    spec:
      blueprint: default-aks
      cloudprovider: Azure-CC
      clusterConfig:
        apiVersion: rafay.io/v1alpha1
        kind: aksClusterConfig
        metadata:
          name: aks-get-started-cluster
        spec:
          managedCluster:
            apiVersion: "2022-07-01"
            identity:
              type: SystemAssigned
            location: centralindia
            properties:
              apiServerAccessProfile:
                enablePrivateCluster: true
              dnsPrefix: aks-get-started-cluster-dns
              kubernetesVersion: 1.23.8
              networkProfile:
                loadBalancerSku: standard
                networkPlugin: kubenet
            sku:
              name: Basic
              tier: Free
            type: Microsoft.ContainerService/managedClusters
          nodePools:
          - apiVersion: "2022-07-01"
            location: centralindia
            name: primary
            properties:
              count: 2
              enableAutoScaling: true
              maxCount: 2
              maxPods: 40
              minCount: 2
              mode: System
              orchestratorVersion: 1.23.8
              osType: Linux
              type: VirtualMachineScaleSets
              vmSize: Standard_DS2_v2
            type: Microsoft.ContainerService/managedClusters/agentPools
          resourceGroupName: Rafay-ResourceGroup
      proxyconfig: {}
      type: aks
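The edit to the "count", "minCount" and "maxCount" fields can also be scripted instead of made by hand. The following is a minimal sketch, not part of the Rafay tooling: the function name `set_node_counts` is hypothetical, and it assumes the layout shown in this guide, where each node pool entry lists its `name:` line before its `properties:` block. It rewrites lines textually and does not validate the YAML.

```python
import re

# Matches the three scaling keys named in this step, keeping their indentation.
SCALE_KEY = re.compile(r"^(\s*)(count|minCount|maxCount):\s*\d+\s*$")
# Matches any "name:" line, with or without a leading list dash.
NAME_KEY = re.compile(r"^\s*(?:-\s+)?name:\s*(\S+)\s*$")


def set_node_counts(spec_text, pool_name, nodes):
    """Return spec_text with the scaling keys of one node pool set to `nodes`.

    Assumption: a pool's "name:" line appears before its "properties:" block,
    as in the specification shown in this guide.
    """
    if nodes < 1:
        # The pool in this guide is "mode: System", which needs at least one node.
        raise ValueError("nodes must be at least 1")
    out = []
    in_pool = False
    for line in spec_text.splitlines():
        name = NAME_KEY.match(line)
        if name:
            # Every "name:" line switches the target pool on or off.
            in_pool = name.group(1) == pool_name
        key = SCALE_KEY.match(line)
        if in_pool and key:
            line = f"{key.group(1)}{key.group(2)}: {nodes}"
        out.append(line)
    return "\n".join(out) + "\n"


# Hypothetical excerpt of a downloaded specification, used only for illustration.
sample = """\
nodePools:
- apiVersion: "2022-07-01"
  name: primary
  properties:
    count: 1
    enableAutoScaling: true
    maxCount: 1
    maxPods: 40
    minCount: 1
"""

updated = set_node_counts(sample, "primary", 2)
print(updated)
```

In practice you would read the downloaded specification file, pass its text through the function, write the result back, and review the change before applying it.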

- Execute the following command to scale the number of nodes in the cluster node pool. Replace the file name in the command below with the name of your updated specification file.

    ./rctl apply -f aks-get-started-cluster-config.yaml

Expected output (with a task id):

    {
      "taskset_id": "dkgy47k",
      "operations": [
        {
          "operation": "NodepoolEdit",
          "resource_name": "primary",
          "status": "PROVISION_TASK_STATUS_PENDING"
        }
      ],
      "comments": "The status of the operations can be fetched using taskset_id",
      "status": "PROVISION_TASKSET_STATUS_PENDING"
    }
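If you capture this output in a script, the task set id and per-operation status can be pulled out with a JSON parser. The sketch below uses only the field names visible in the sample output above; the helper name `summarize_taskset` is hypothetical, and the guide does not list the other status values the platform may return, so the code makes no assumption about them.

```python
import json

# The sample response shown in this guide, pasted as a string for illustration.
response_text = """
{
  "taskset_id": "dkgy47k",
  "operations": [
    {
      "operation": "NodepoolEdit",
      "resource_name": "primary",
      "status": "PROVISION_TASK_STATUS_PENDING"
    }
  ],
  "comments": "The status of the operations can be fetched using taskset_id",
  "status": "PROVISION_TASKSET_STATUS_PENDING"
}
"""


def summarize_taskset(text):
    """Return (taskset_id, overall_status, [(operation, resource, status), ...])."""
    data = json.loads(text)
    operations = [
        (op["operation"], op["resource_name"], op["status"])
        for op in data.get("operations", [])
    ]
    return data["taskset_id"], data["status"], operations


taskset_id, overall_status, operations = summarize_taskset(response_text)
print(f"taskset {taskset_id}: {overall_status}")
for operation, resource, status in operations:
    print(f"  {operation} on {resource}: {status}")
```

Keeping the `taskset_id` is useful because, as the "comments" field notes, it is the handle for fetching the status of the operations later.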

After a few minutes, the web console shows that the number of nodes in the node pool has scaled to 2.

To scale the node pool with Terraform:

- Edit the `terraform.tfvars` file. The file location is /terraform/terraform.tfvars.

- For `pool1`, change the `node_count` from `1` to `2`, then save the file.

- Open the terminal or command line.

- Navigate to the `terraform` folder.

- Run `terraform apply`. Enter `yes` when prompted.

It can take 10 minutes to scale up the cluster. Check the console for the scaling status.

Recap

Congratulations! At this point, you have:

- Successfully scaled a node pool to include the desired number of nodes

- Successfully added a "User" node pool to the cluster

- Successfully removed a "User" node pool from the cluster