CLI

For automation, it is strongly recommended that users create and maintain version-controlled "cluster specs" to provision clusters and manage their lifecycle. This approach suits scenarios where cluster lifecycle operations (creation and so on) are embedded in a larger workflow that requires reproducible environments. For example:

- Jenkins or a CI system that needs to provision a cluster as part of a larger workflow

- Reproducible Infrastructure

- Ephemeral clusters for QA/Testing

Credentials

Ensure you have created valid cloud credentials for the controller to manage the lifecycle of Amazon EKS clusters on your behalf in your AWS account.

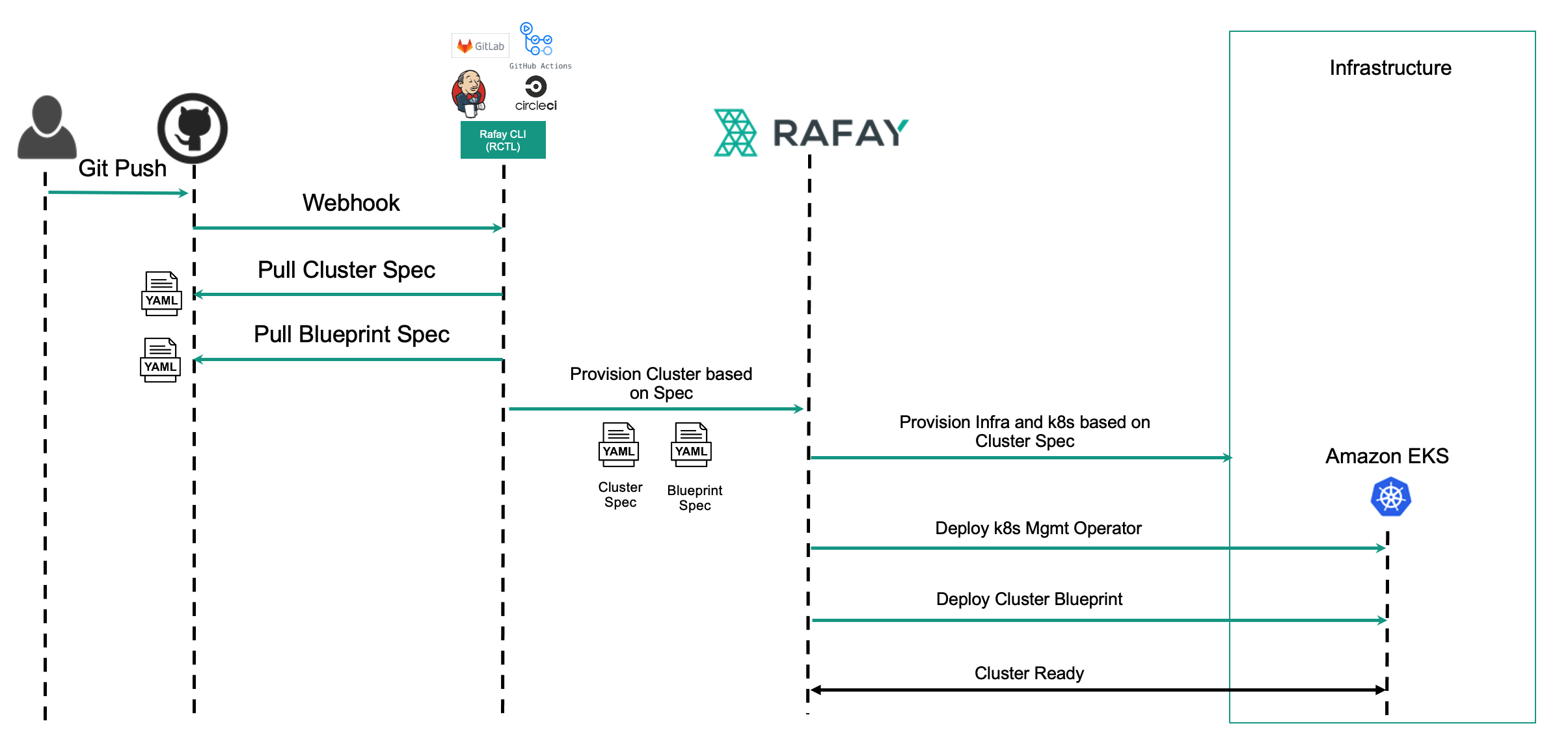

Automation Pipelines

The RCTL CLI can be embedded into your preferred automation platform. Here is an example of a Jenkins-based pipeline that uses RCTL to provision an Amazon EKS cluster from the provided cluster specification.

Examples

Multiple ready-to-use examples of cluster specifications are maintained in this public Git repository.

Declarative

Create Cluster

You can create an EKS cluster from a version-controlled cluster spec that you manage in a Git repository. This enables users to develop automation for reproducible infrastructure.

./rctl apply -f <ekscluster-spec.yml>
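In a pipeline step, the apply command above can be wrapped so that a non-zero exit code fails the build. The following is a minimal Python sketch and not part of RCTL: the helper names `build_apply_command` and `apply_spec` and the default `./rctl` path are assumptions made for illustration.

```python
import subprocess
from pathlib import Path


def build_apply_command(spec_path, rctl_binary="./rctl"):
    """Return the argument list for `rctl apply -f <spec>` (hypothetical helper)."""
    spec = Path(spec_path)
    if spec.suffix not in {".yml", ".yaml"}:
        raise ValueError(f"expected a YAML cluster spec, got: {spec_path}")
    return [rctl_binary, "apply", "-f", str(spec)]


def apply_spec(spec_path, rctl_binary="./rctl"):
    """Run the apply command and return its stdout.

    check=True raises CalledProcessError on a non-zero exit code,
    which is what lets a CI job fail when the apply is rejected.
    """
    command = build_apply_command(spec_path, rctl_binary)
    completed = subprocess.run(command, check=True, capture_output=True, text=True)
    return completed.stdout


if __name__ == "__main__":
    # Only prints the command; call apply_spec() where rctl is installed and configured.
    print(build_apply_command("ekscluster-spec.yml"))
```

Passing the command as a list rather than a shell string avoids quoting problems when the spec path contains spaces.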

EKS config file (v3 API version, recommended)

An illustrative example of the EKS cluster config file (v3 API version) is shown below

apiVersion: infra.k8smgmt.io/v3

kind: Cluster

metadata:

name: eks-cluster

project: default-project

spec:

blueprintConfig:

name: default

cloudCredentials: demo-cred

config:

addons:

- name: kube-proxy

version: latest

- name: vpc-cni

version: latest

- name: coredns

version: latest

- name: aws-ebs-csi-driver

version: latest

managedNodeGroups:

- amiFamily: AmazonLinux2

desiredCapacity: 2

iam:

withAddonPolicies:

autoScaler: true

instanceType: t3.xlarge

labels:

app: infra

dedicated: "true"

maxSize: 2

minSize: 2

name: demo-ng3

ssh:

allow: true

publicKeyName: demokey

taints:

- effect: NoSchedule

key: dedicated

value: "true"

- effect: NoExecute

key: app

value: infra

version: "1.22"

volumeSize: 80

volumeType: gp3

metadata:

name: eks-cluster

region: us-west-2

version: "1.22"

network:

cni:

name: aws-cni

vpc:

cidr: 192.168.0.0/16

clusterEndpoints:

privateAccess: false

publicAccess: true

nat:

gateway: Single

proxyConfig: {}

systemComponentsPlacement:

daemonSetOverride:

nodeSelectionEnabled: false

tolerations:

- effect: NoExecute

key: app_daemon

operator: Equal

value: infra_daemon

nodeSelector:

app: infra

dedicated: "true"

tolerations:

- effect: NoSchedule

key: dedicated

operator: Equal

value: "true"

- effect: NoExecute

key: app

operator: Equal

value: infra

type: aws-eks

Recommendation

Users can add the wildcard toleration below to the daemon set override so that the daemon sets tolerate any taint

tolerations:

- operator: "Exists"
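To see why a toleration with only `operator: "Exists"` covers every taint, the sketch below mirrors the standard Kubernetes matching rules in plain Python: an empty effect matches all effects, and an empty key with `Exists` matches all keys. The function name `tolerates` is hypothetical; the taints are taken from the node groups in the examples above.

```python
def tolerates(toleration, taint):
    """Return True if a toleration matches a taint under Kubernetes matching rules."""
    # An empty effect on the toleration matches every taint effect.
    effect = toleration.get("effect", "")
    if effect and effect != taint.get("effect"):
        return False
    operator = toleration.get("operator", "Equal")  # Equal is the Kubernetes default
    key = toleration.get("key", "")
    if operator == "Exists":
        # An empty key with Exists matches every taint key.
        return key == "" or key == taint.get("key")
    # Equal: both key and value must match.
    return key == taint.get("key") and toleration.get("value", "") == taint.get("value", "")


wildcard = {"operator": "Exists"}
daemon_toleration = {
    "effect": "NoExecute",
    "key": "app_daemon",
    "operator": "Equal",
    "value": "infra_daemon",
}

taints = [
    {"effect": "NoSchedule", "key": "dedicated", "value": "true"},
    {"effect": "NoExecute", "key": "app", "value": "infra"},
    {"effect": "NoExecute", "key": "app_daemon", "value": "infra_daemon"},
]

for taint in taints:
    print(taint["key"], tolerates(wildcard, taint), tolerates(daemon_toleration, taint))
```

The wildcard toleration matches all three taints, while the specific `app_daemon` toleration matches only the last one.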

EKS Cluster Config with IPv6

An illustrative example of the IPv6 cluster config file (v3 API version) for EKS is shown below

apiVersion: infra.k8smgmt.io/v3

kind: Cluster

metadata:

modifiedAt: "2023-11-06T05:12:25.426710Z"

name: shobhit-eks-6nov

project: shobhit

spec:

blueprintConfig:

name: minimal

version: Latest

cloudCredentials: shobhit_aws

config:

addons:

- name: kube-proxy

version: v1.27.1-eksbuild.1

- name: vpc-cni

version: v1.12.6-eksbuild.2

- name: coredns

version: v1.10.1-eksbuild.1

- name: aws-ebs-csi-driver

version: latest

iam:

withOIDC: true

kubernetesNetworkConfig:

ipFamily: IPv6

managedNodeGroups:

- amiFamily: AmazonLinux2

desiredCapacity: 2

iam:

withAddonPolicies:

autoScaler: true

instanceTypes:

- t3.xlarge

maxSize: 2

minSize: 2

name: managed-ng-1

version: "1.27"

volumeSize: 80

volumeType: gp3

metadata:

name: shobhit-eks-6nov

region: us-west-2

tags:

email: shobhit@rafay.co

env: qa

version: "1.27"

network:

cni:

name: aws-cni

vpc:

cidr: 192.168.0.0/16

clusterEndpoints:

privateAccess: true

publicAccess: false

proxyConfig: {}

type: aws-eks

For V3 EKS Config Schema, refer here

EKS Cluster config file (v1 API version)

An illustrative example of the EKS cluster config file (v1 API version) is shown below

kind: Cluster

metadata:

# cluster labels

labels:

env: dev

type: eks-workloads

name: eks-cluster

project: defaultproject

spec:

blueprint: default

cloudprovider: dev-credential # Name of the cloud credential object created on the Controller

proxyconfig: {}

systemComponentsPlacement:

daemonSetOverride:

nodeSelectionEnabled: false

tolerations:

- effect: NoExecute

key: app_daemon

operator: Equal

value: infra_daemon

nodeSelector:

app: infra

dedicated: "true"

tolerations:

- effect: NoExecute

key: app

operator: Equal

value: infra

- effect: NoSchedule

key: dedicated

operator: Equal

value: "true"

type: eks

---

apiVersion: rafay.io/v1alpha5

kind: ClusterConfig

metadata:

name: eks-cluster

region: us-west-1

version: "1.22"

tags:

'demo': 'true'

nodeGroups:

- amiFamily: AmazonLinux2

desiredCapacity: 2

iam:

withAddonPolicies:

autoScaler: true

instanceType: t3.xlarge

labels:

app: infra

dedicated: "true"

maxSize: 2

minSize: 0

name: demo-ng1

ssh:

allow: true

publicKeyName: demo-key

taints:

- effect: NoSchedule

key: dedicated

value: "true"

- effect: NoExecute

key: app

value: infra

version: "1.22"

volumeSize: 80

volumeType: gp3

- amiFamily: AmazonLinux2

desiredCapacity: 2

iam:

withAddonPolicies:

autoScaler: true

instanceType: t3.xlarge

labels:

app: infra

dedicated: "true"

maxSize: 2

minSize: 0

name: demo-ng2

ssh:

allow: true

publicKeyName: demo-key

taints:

- effect: NoExecute

key: app_daemon

value: infra_daemon

version: "1.22"

volumeSize: 80

volumeType: gp3

- amiFamily: AmazonLinux2

availabilityZones:

- us-west-2-wl1-phx-wlz-1

desiredCapacity: 1

iam:

withAddonPolicies:

autoScaler: true

imageBuilder: true

instanceType: t3.medium

maxSize: 2

minSize: 1

name: ng-second-wlz-wed-1

privateNetworking: true

subnetCidr: 192.168.213.0/24

volumeSize: 80

volumeType: gp2

vpc:

cidr: 192.168.0.0/16

clusterEndpoints:

privateAccess: true

publicAccess: false

nat:

gateway: Single

For V1 EKS Config Schema, refer here

EKS Cluster config with managed addon

Below is an illustrative example of the cluster spec YAML file with Managed Addon parameters

kind: Cluster

metadata:

name: demo-cluster

project: default

spec:

blueprint: minimal

blueprintversion: 1.23.0

cloudprovider: provider_aws

cniprovider: aws-cni

proxyconfig: {}

type: eks

---

addons:

- name: vpc-cni

version: v1.16.4-eksbuild.2

- name: coredns

version: v1.10.1-eksbuild.7

- name: kube-proxy

version: v1.28.6-eksbuild.2

- name: aws-efs-csi-driver

version: v1.7.5-eksbuild.2

- name: snapshot-controller

version: v6.3.2-eksbuild.1

- name: amazon-cloudwatch-observability

version: v1.3.0-eksbuild.1

- name: aws-mountpoint-s3-csi-driver

version: v1.3.1-eksbuild.1

- name: adot

version: v0.92.1-eksbuild.1

- name: aws-guardduty-agent

version: v1.5.0-eksbuild.1

- name: aws-ebs-csi-driver

version: latest

apiVersion: rafay.io/v1alpha5

kind: ClusterConfig

managedNodeGroups:

- amiFamily: AmazonLinux2

desiredCapacity: 2

iam:

withAddonPolicies:

autoScaler: true

instanceType: t3.xlarge

maxSize: 2

minSize: 2

name: managed-ng-1

version: "1.24"

volumeSize: 80

volumeType: gp3

metadata:

name: demo-cluster

region: us-west-2

tags:

email: demo@rafay.co

env: qa

version: "1.24"

vpc:

cidr: 192.168.0.0/16

clusterEndpoints:

privateAccess: true

publicAccess: false

nat:

gateway: Single

EKS Cluster Config with IPv6

An illustrative example of the IPv6 cluster config file (v1 API version) for EKS is shown below

kind: Cluster

metadata:

name: ipv6-eks

project: defaultproject

spec:

blueprint: minimal

cloudprovider: aws

cniprovider: aws-cni

type: eks

---

apiVersion: rafay.io/v1alpha5

kind: ClusterConfig

metadata:

name: ipv6-eks

region: us-west-2

version: "1.26"

tags:

email: abc@rafay.co

env: dev

kubernetesNetworkConfig:

ipFamily: IPv6

vpc:

clusterEndpoints:

publicAccess: true

privateAccess: true

iam:

withOIDC: true

addons:

- name: vpc-cni

- name: coredns

- name: kube-proxy

managedNodeGroups:

- amiFamily: AmazonLinux2

desiredCapacity: 2

instanceType: t3.large

tags:

cluster: nodegroupoverride

email: abc@rafay.co

env: dev

nodepool: foo

volumeSize: 80

volumeType: gp3

maxSize: 3

minSize: 1

ssh:

allow: true

publicKeyName: <user's ssh key>

updateConfig:

maxUnavailablePercentage: 33

labels:

os-distribution: amazon-linux-2

name: ng-f554ajat

Get Cluster Details

Once the cluster has been created, use this command to retrieve details about the cluster.

./rctl get cluster <ekscluster-name>

Example output is shown below. Here the PROVISION STATUS column reports that infrastructure creation is still in progress.

./rctl get cluster demo-eks

+----------+------+-----------+---------------------------+
| NAME     | TYPE | OWNERSHIP | PROVISION STATUS          |
+----------+------+-----------+---------------------------+
| demo-eks | eks  | self      | INFRA_CREATION_INPROGRESS |
+----------+------+-----------+---------------------------+
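When a script needs the provision status from the table output, the rows can be split on the `|` separators. The sketch below is an assumption-laden illustration: `parse_rctl_table` is a hypothetical helper, and the table layout is taken only from the sample above. Parsing human-readable tables is brittle, so the `--v3 -o json` output described later in this section is likely the better choice where it is available.

```python
def parse_rctl_table(text):
    """Parse an ASCII table, as printed by `rctl get cluster`, into a list of dicts."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip +----+ border lines and blank lines
        rows.append([cell.strip() for cell in line.strip("|").split("|")])
    if not rows:
        return []
    header, *body = rows  # the first data row holds the column names
    return [dict(zip(header, row)) for row in body]


sample = """
+----------+------+-----------+---------------------------+
| NAME     | TYPE | OWNERSHIP | PROVISION STATUS          |
+----------+------+-----------+---------------------------+
| demo-eks | eks  | self      | INFRA_CREATION_INPROGRESS |
+----------+------+-----------+---------------------------+
"""

clusters = parse_rctl_table(sample)
print(clusters[0]["NAME"], clusters[0]["PROVISION STATUS"])
```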

To retrieve the details of a specific v3 cluster, use the command below

./rctl get cluster demo-ekscluster --v3

Example

./rctl get cluster demo-ekscluster --v3

+-----------------+-------------------------------+-----------+------+-----------+---------------------------+
| NAME            | CREATED AT                    | OWNERSHIP | TYPE | BLUEPRINT | PROVISION STATUS          |
+-----------------+-------------------------------+-----------+------+-----------+---------------------------+
| demo-ekscluster | 2023-06-05 10:54:08 +0000 UTC | self      | eks  | minimal   | INFRA_CREATION_INPROGRESS |
+-----------------+-------------------------------+-----------+------+-----------+---------------------------+

To view the entire v3 cluster config spec, use the below command

./rctl get cluster <ekscluster_name> --v3 -o json

(or)

./rctl get cluster <ekscluster_name> --v3 -o yaml

Download Cluster Configuration

Once the cluster is provisioned, either through the Web Console or the CLI, its configuration can be downloaded and stored in a code repository.

./rctl get cluster config cluster-name

The above command writes the cluster config to stdout. The output can be redirected to a file and stored in the code repository of your choice.

./rctl get cluster config cluster-name > cluster-name-config.yaml

To download a v3 cluster config, use the below command

./rctl get cluster config <cluster-name> --v3

Important

Download the cluster configuration only after the cluster is completely provisioned.

Node Groups

Both managed and self-managed node groups are supported.

Add Node Groups

You can add a new node group (Spot or On-Demand) to an existing EKS cluster. Here is an example YAML file to add a spot node group to an existing EKS cluster.

apiVersion: rafay.io/v1alpha5

kind: ClusterConfig

metadata:

name: eks-cluster

region: us-west-1

nodeGroups:

- name: spot-ng-1

minSize: 2

maxSize: 4

volumeType: gp3

instancesDistribution:

maxPrice: 0.030

instanceTypes: ["t3.large","t2.large"]

onDemandBaseCapacity: 0

onDemandPercentageAboveBaseCapacity: 0

spotInstancePools: 2

To add a spot node group to an existing cluster based on the config shown above, use the command below.

./rctl create node-group -f eks-nodegroup.yaml
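Before submitting a spot node group, a few basic sanity checks can catch obvious mistakes early. The sketch below is illustrative only: `check_spot_node_group` is a hypothetical helper, the checks are generic Auto Scaling constraints rather than RCTL's own validation, and the nesting of `instanceTypes` and the on-demand fields under `instancesDistribution` is an assumption, since the indentation of the YAML above is not preserved.

```python
def check_spot_node_group(node_group):
    """Return a list of human-readable problems found in a spot node group dict."""
    problems = []
    if node_group["minSize"] > node_group["maxSize"]:
        problems.append("minSize must not exceed maxSize")
    dist = node_group.get("instancesDistribution", {})
    if not dist.get("instanceTypes"):
        problems.append("instancesDistribution.instanceTypes must list at least one type")
    percentage = dist.get("onDemandPercentageAboveBaseCapacity", 0)
    if not 0 <= percentage <= 100:
        problems.append("onDemandPercentageAboveBaseCapacity must be between 0 and 100")
    if dist.get("onDemandBaseCapacity", 0) < 0:
        problems.append("onDemandBaseCapacity must not be negative")
    return problems


# Same values as the spot node group example above, expressed as a dict.
spot_ng = {
    "name": "spot-ng-1",
    "minSize": 2,
    "maxSize": 4,
    "volumeType": "gp3",
    "instancesDistribution": {
        "maxPrice": 0.030,
        "instanceTypes": ["t3.large", "t2.large"],
        "onDemandBaseCapacity": 0,
        "onDemandPercentageAboveBaseCapacity": 0,
        "spotInstancePools": 2,
    },
}

print(check_spot_node_group(spot_ng))
```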

Node Groups in Wavelength Zone

Users can also create a node group in a Wavelength Zone using one of the config files below

Manual Network Configuration

apiVersion: rafay.io/v1alpha5

kind: ClusterConfig

metadata:

name: demo-eks-testing

region: us-west-1

nodeGroups:

- amiFamily: AmazonLinux2

desiredCapacity: 4

iam:

withAddonPolicies:

autoScaler: true

imageBuilder: true

instanceType: t3.xlarge

maxSize: 4

minSize: 0

name: ng-2220fc4d

volumeSize: 80

volumeType: gp3

- amiFamily: AmazonLinux2

availabilityZones:

- us-east-1-wl1-atl-wlz-1

desiredCapacity: 2

iam:

withAddonPolicies:

autoScaler: true

imageBuilder: true

instanceType: t3.xlarge

maxSize: 2

minSize: 2

name: demo-wlzone1

privateNetworking: true

securityGroups:

attachIDs:

- test-grpid

subnets:

- 701d1419

volumeSize: 80

volumeType: gp2

Automatic Network Configuration

apiVersion: rafay.io/v1alpha5

kind: ClusterConfig

metadata:

name: demo-eks-autonode

region: us-west-2

nodeGroups:

- amiFamily: AmazonLinux2

desiredCapacity: 4

iam:

withAddonPolicies:

autoScaler: true

imageBuilder: true

instanceType: t3.xlarge

maxSize: 4

minSize: 0

name: ng-2220fc4d

volumeSize: 80

volumeType: gp3

- amiFamily: AmazonLinux2

availabilityZones:

- us-west-2-wl1-phx-wlz-1

desiredCapacity: 2

iam:

withAddonPolicies:

autoScaler: true

imageBuilder: true

instanceType: t3.xlarge

maxSize: 2

minSize: 2

name: ng-rctl-4-new

privateNetworking: true

subnetCidr: 10.51.0.0/20

volumeSize: 80

volumeType: gp2

Node Group in Wavelength Zone

To create the node group from the config file, use the command below

./rctl create -f nodegroup.yaml

Users who prefer to make the required changes in the cluster config file must use the command below to create the Wavelength Zone node group in the cluster

./rctl apply -f <configfile.yaml>

Example:

./rctl apply -f newng.yaml

Output:

{

"taskset_id": "1ky4gkz",

"operations": [

{

"operation": "NodegroupCreation",

"resource_name": "ng-ui-new-ns",

"status": "PROVISION_TASK_STATUS_PENDING"

},

{

"operation": "NodegroupCreation",

"resource_name": "ng-wlz-ui-222",

"status": "PROVISION_TASK_STATUS_PENDING"

},

{

"operation": "NodegroupCreation",

"resource_name": "ng-default-thurs-222",

"status": "PROVISION_TASK_STATUS_PENDING"

},

{

"operation": "ClusterCreation",

"resource_name": "eks-rctl-friday-3",

"status": "PROVISION_TASK_STATUS_PENDING"

}

],

"comments": "The status of the operations can be fetched using taskset_id",

"status": "PROVISION_TASKSET_STATUS_PENDING"

}
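The JSON returned by the apply command can be summarized in a script, for example to log how many operations are still pending. The following Python sketch relies only on the fields visible in the sample output above (`taskset_id`, `operations`, `status`); `summarize_taskset` is a hypothetical helper name, and the sample is shortened to two operations.

```python
import json
from collections import Counter


def summarize_taskset(apply_output):
    """Return (taskset_id, overall status, per-status counts of the operations)."""
    taskset = json.loads(apply_output)
    counts = Counter(op["status"] for op in taskset.get("operations", []))
    return taskset["taskset_id"], taskset["status"], dict(counts)


# Shortened copy of the sample output shown above.
sample_output = """
{
  "taskset_id": "1ky4gkz",
  "operations": [
    {"operation": "NodegroupCreation", "resource_name": "ng-ui-new-ns", "status": "PROVISION_TASK_STATUS_PENDING"},
    {"operation": "ClusterCreation", "resource_name": "eks-rctl-friday-3", "status": "PROVISION_TASK_STATUS_PENDING"}
  ],
  "comments": "The status of the operations can be fetched using taskset_id",
  "status": "PROVISION_TASKSET_STATUS_PENDING"
}
"""

taskset_id, status, counts = summarize_taskset(sample_output)
print(taskset_id, status, counts)
```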

Delete Node Group

Remove the required node group from the config file and use the command below to apply the deletion

./rctl apply -f <configfile.yaml>

Example:

./rctl apply -f newng.yaml

Output:

{

"taskset_id": "6kno42l",

"operations": [

{

"operation": "NodegroupDeletion",

"resource_name": "ng-wlz-thurs",

"status": "PROVISION_TASK_STATUS_PENDING"

}

],

"comments": "The status of the operations can be fetched using taskset_id",

"status": "PROVISION_TASKSET_STATUS_PENDING"

}

Identity Mapping

During cluster provisioning, users can also create a static mapping between IAM users and roles and Kubernetes RBAC groups. This allows those users and roles to access the specified EKS cluster resources.

Below is a cluster config spec YAML with IAM identity mapping objects (the identityMappings block)

kind: Cluster

metadata:

name: demo-eks-cluster

project: defaultproject

spec:

blueprint: minimal

cloudprovider: demo-cp-aws

cniprovider: aws-cni

proxyconfig: {}

type: eks

---

apiVersion: rafay.io/v1alpha5

identityMappings:

accounts:

- "679196758850"

- "656256256267"

arns:

- arn: arn:aws:iam::679196758854:user/demo1@rafay.co

group:

- System Master

username: demo1

- arn: arn:aws:iam::679196758854:user/demo2@rafay.co

group:

- Systemmaster

username: demo2

- arn: arn:aws:iam::679196758854:user/demo5@rafay.co

group:

- Systemmaster

username: demo5

- arn: arn:aws:iam::679196758854:user/demo6@rafay.co

group:

- system:masters

username: demo6

kind: ClusterConfig

metadata:

name: demo-eks-cluster

region: us-west-2

version: "1.22"

nodeGroups:

- amiFamily: AmazonLinux2

desiredCapacity: 1

iam:

withAddonPolicies:

autoScaler: true

imageBuilder: true

instanceType: t3.xlarge

maxSize: 2

minSize: 0

name: demo-node-group

version: "1.22"

volumeSize: 80

volumeType: gp3

vpc:

cidr: 192.168.0.0/16

clusterEndpoints:

privateAccess: true

publicAccess: false

nat:

gateway: Single

After successful cluster provisioning, the mapped users can access the cluster resources via the AWS Console.

To add IAM mappings after cluster provisioning, refer to Identity Mapping.

Pull EKS Cloud Logs & Events

Logs & Events gives users access to detailed event data about the provisioning of resources in an AWS environment, including errors, stack creation, and cluster provisioning failures, so that they can diagnose and debug issues efficiently. Users can pull EKS CloudEvents for a cluster, a node group, an EKS managed add-on, and cluster provisioning, which is particularly useful when an error message is encountered.

Clusters

To extract detailed EKS CloudEvents for a specific cluster, including failure reasons that help with debugging, use the following command:

./rctl cloudevents cluster <cluster-name>

Example Output

./rctl cloudevents cluster demo-cluster

{

"cloudFormationStack": {

"events": [

{

"ClientRequestToken": null,

"EventId": "32120aa0-e13a-11ee-b587-0ada3f4c1df1",

"HookFailureMode": null,

"HookInvocationPoint": null,

"HookStatus": null,

"HookStatusReason": null,

"HookType": null,

"LogicalResourceId": "rafay-demo-cluster-nodegroup-ng-1",

"PhysicalResourceId": "arn:aws:cloudformation:us-west-2:679196758854:stack/rafay-demo-cluster-nodegroup-ng-1/e4d369f0-e139-11ee-8586-02f9fc3bb443",

"ResourceProperties": null,

"ResourceStatus": "CREATE_COMPLETE",

"ResourceStatusReason": null,

"ResourceType": "AWS::CloudFormation::Stack",

"StackId": "arn:aws:cloudformation:us-west-2:679196758854:stack/rafay-demo-cluster-nodegroup-ng-1/e4d369f0-e139-11ee-8586-02f9fc3bb443",

"StackName": "rafay-demo-cluster-nodegroup-ng-1",

"Timestamp": "2024-03-13T13:04:15.935Z"

},

{

"ClientRequestToken": null,

"EventId": "ManagedNodeGroup-CREATE_COMPLETE-2024-03-13T13:04:15.323Z",

"HookFailureMode": null,

"HookInvocationPoint": null,

"HookStatus": null,

"HookStatusReason": null,

"HookType": null,

"LogicalResourceId": "ManagedNodeGroup",

"PhysicalResourceId": "demo-cluster/ng-1",

"ResourceProperties": "{\"NodeRole\":\"arn:aws:iam::679196758854:role/rafay-demo-cluster-nodegroup-ng-1-NodeInstanceRole-ut37QnU4J\",\"NodegroupName\":\"ng-1\",\"Subnets\":[\"subnet-0bea6dbbfaa2d2b71\",\"subnet-08ae2d62d931b0d0d\",\"subnet-05777dd1333480b39\"],\"AmiType\":\"AL2_x86_64\",\"ScalingConfig\":{\"DesiredSize\":\"2\",\"MinSize\":\"2\",\"MaxSize\":\"2\"},\"LaunchTemplate\":{\"Id\":\"lt-05adbe14ef9f823f7\"},\"ClusterName\":\"demo-cluster\",\"Labels\":{\"alpha.rafay.io/cluster-name\":\"demo-cluster\",\"alpha.rafay.io/nodegroup-name\":\"ng-1\"},\"InstanceTypes\":[\"t3.medium\"],\"Tags\":{\"alpha.rafay.io/nodegroup-name\":\"ng-1\",\"alpha.rafay.io/nodegroup-type\":\"managed\",\"env\":\"dev\",\"email\":\"mvsphani@rafay.co\"}}",

"ResourceStatus": "CREATE_COMPLETE",

"ResourceStatusReason": null,

"ResourceType": "AWS::EKS::Nodegroup",

"StackId": "arn:aws:cloudformation:us-west-2:679196758854:stack/rafay-demo-cluster-nodegroup-ng-1/e4d369f0-e139-11ee-8586-02f9fc3bb443",

"StackName": "rafay-demo-cluster-nodegroup-ng-1",

"Timestamp": "2024-03-13T13:04:15.323Z"

}]

}

}

Nodegroup

To retrieve detailed EKS CloudEvents for a specific node group, including failure reasons that help with debugging, use the following command:

./rctl cloudevents nodegroup <ng-name> <cluster-name>

Example Output

./rctl cloudevents nodegroup ng-10 demo-cluster

{

"cloudFormationStack": {

"events": [

{

"ClientRequestToken": null,

"EventId": "32120aa0-e13a-11ee-b587-0ada3f4c1df1",

"HookFailureMode": null,

"HookInvocationPoint": null,

"HookStatus": null,

"HookStatusReason": null,

"HookType": null,

"LogicalResourceId": "rafay-demo-cluster-nodegroup-ng-1",

"PhysicalResourceId": "arn:aws:cloudformation:us-west-2:679196758854:stack/rafay-demo-cluster-nodegroup-ng-1/e4d369f0-e139-11ee-8586-02f9fc3bb443",

"ResourceProperties": null,

"ResourceStatus": "CREATE_COMPLETE",

"ResourceStatusReason": null,

"ResourceType": "AWS::CloudFormation::Stack",

"StackId": "arn:aws:cloudformation:us-west-2:679196758854:stack/rafay-demo-cluster-nodegroup-ng-1/e4d369f0-e139-11ee-8586-02f9fc3bb443",

"StackName": "rafay-demo-cluster-nodegroup-ng-1",

"Timestamp": "2024-03-13T13:04:15.935Z"

},

... ]

},

"autoScalingGroup": {

"events": [

{

"ActivityId": "cd4c4111-0267-4d58-9899-6454238725a7",

"AutoScalingGroupARN": "arn:aws:autoscaling:us-west-2:679196758854:autoScalingGroup:889e564d-a418-4988-b89c-08d7ea218c9d:autoScalingGroupName/eks-ng-1-76c71bf4-1b3c-25e9-fcd7-9f0689df0f60",

"AutoScalingGroupName": "eks-ng-1-76c71bf4-1b3c-25e9-fcd7-9f0689df0f60",

"AutoScalingGroupState": null,

"Cause": "At 2024-03-14T14:31:57Z a user request update of AutoScalingGroup constraints to min: 0, max: 2, desired: 0 changing the desired capacity from 2 to 0. At 2024-03-14T14:32:05Z an instance was taken out of service in response to a difference between desired and actual capacity, shrinking the capacity from 2 to 0. At 2024-03-14T14:32:05Z instance i-05954df4ec04e3db3 was selected for termination. At 2024-03-14T14:32:05Z instance i-0480b708771c63d9d was selected for termination.",

"Description": "Terminating EC2 instance: i-0480b708771c63d9d",

"Details": "{\"Subnet ID\":\"subnet-08ae2d62d931b0d0d\",\"Availability Zone\":\"us-west-2c\"}",

"EndTime": "2024-03-14T14:47:54Z",

"Progress": 100,

"StartTime": "2024-03-14T14:32:05.775Z",

"StatusCode": "Successful",

"StatusMessage": null

},

...

]}

}
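When debugging, it is usually the failed events that matter. The sketch below filters a cloudevents response for CloudFormation events whose `ResourceStatus` ends in `FAILED` and for Auto Scaling activities with a `Failed` or `Cancelled` status code. It is only an illustration based on the fields in the sample output above: the helper names are hypothetical, and the `CREATE_FAILED` event in the sample data is invented for the example.

```python
import json


def failed_stack_events(cloudevents_output):
    """Return CloudFormation events whose ResourceStatus ends in FAILED."""
    data = json.loads(cloudevents_output)
    events = data.get("cloudFormationStack", {}).get("events", [])
    return [e for e in events if str(e.get("ResourceStatus", "")).endswith("FAILED")]


def failed_scaling_activities(cloudevents_output):
    """Return Auto Scaling activities that ended as Failed or Cancelled."""
    data = json.loads(cloudevents_output)
    events = data.get("autoScalingGroup", {}).get("events", [])
    return [e for e in events if e.get("StatusCode") in {"Failed", "Cancelled"}]


sample = json.dumps({
    "cloudFormationStack": {"events": [
        {"LogicalResourceId": "rafay-demo-cluster-nodegroup-ng-1",
         "ResourceStatus": "CREATE_COMPLETE",
         "ResourceStatusReason": None},
        # Hypothetical failure event, invented for this sketch.
        {"LogicalResourceId": "ManagedNodeGroup",
         "ResourceStatus": "CREATE_FAILED",
         "ResourceStatusReason": "example failure reason"},
    ]},
    "autoScalingGroup": {"events": [
        {"Description": "Terminating EC2 instance: i-0480b708771c63d9d",
         "StatusCode": "Successful",
         "StatusMessage": None},
    ]},
})

for event in failed_stack_events(sample):
    print(event["LogicalResourceId"], event["ResourceStatus"], event["ResourceStatusReason"])
print(len(failed_scaling_activities(sample)), "failed scaling activities")
```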

Add-ons

To retrieve detailed EKS CloudEvents for a specific add-on, including the events that occurred and the reasons for any failure, use the following command:

./rctl cloudevents addon <addon-name> <cluster-name>

Example Output

./rctl cloudevents addon aws-ebs-csi-driver demo-cluster

{

"cloudFormationStack": {

"events": [

{

"ClientRequestToken": null,

"EventId": "32120aa0-e13a-11ee-b587-0ada3f4c1df1",

"HookFailureMode": null,

"HookInvocationPoint": null,

"HookStatus": null,

"HookStatusReason": null,

"HookType": null,

"LogicalResourceId": "rafay-demo-cluster-nodegroup-ng-1",

"PhysicalResourceId": "arn:aws:cloudformation:us-west-2:679196758854:stack/rafay-demo-cluster-nodegroup-ng-1/e4d369f0-e139-11ee-8586-02f9fc3bb443",

"ResourceProperties": null,

"ResourceStatus": "CREATE_COMPLETE",

"ResourceStatusReason": null,

"ResourceType": "AWS::CloudFormation::Stack",

"StackId": "arn:aws:cloudformation:us-west-2:679196758854:stack/rafay-demo-cluster-nodegroup-ng-1/e4d369f0-e139-11ee-8586-02f9fc3bb443",

"StackName": "rafay-demo-cluster-nodegroup-ng-1",

"Timestamp": "2024-03-13T13:04:15.935Z"

},

... ]

},

"healthIssue": {

"events": [

{

"Code": "InsufficientNumberOfReplicas",

"Message": "The add-on is unhealthy because it doesn't have the desired number of replicas.",

"ResourceIds": null

}

]

}

}

Cluster Provision

To extract the AWS CloudEvents for the provisioning of a cluster, which give insight into what happened during cluster creation, use the following command:

./rctl cloudevents provision <cluster-name>

Example Output

./rctl cloudevents provision demo-cluster

{

"Cluster": {

"cloudFormationStack": {

"events": [

{

},

{

}

]

}

},

"BootstrapNodegroup": {

"NodegroupName": "bootstrap",

"NodegroupEvents": {

"cloudFormationStack": {

"events": [

{

},

{

}

]

},

"bootstrap": {

"cloudInitLogs": {

"InstanceId": "i-00a0197c76e8d6e24",

"Output": "\u001b[H\u001b[J\u001b[1;1H\u001b[H\u001b[J\u001b[1;1H[ 0.000000] Linux version 5.10.209-198.812.amzn2.x86_64 (mockbuild@ip-10-0-35-124) (gcc10-gcc (GCC) 10.5.0 20230707 (Red Hat 10.5.0-1), GNU ld version 2.35.2-9.amzn2.0.1) #1 SMP Tue Jan 30 20:59:52 UTC 2024\r\n..",

"Timestamp": "2024-02-21T23:37:11Z"

}

}

}

},

"DefaultNodegroup": {

"NodegroupName": "ng-874658f7",

"NodegroupEvents": {

"cloudFormationStack": {

"events": [

{

},

{

}

]

},

"autoScalingGroup": {

"events": [

{

},

{

}

]

}

}

}

}

Refer to the Troubleshooting page for detailed information on EKS Cloud Logs & Events.

Upgrade Insights with RCTL

To use Upgrade Insights with RCTL, use the following commands

Usage:

rctl get upgrade-insights [flags]

Aliases:

upgrade-insights, insights

Examples:

Using command(s):

rctl get upgrade-insights <cluster-name>

rctl get upgrade-insights <cluster-name> --id <id>

Example Output

./rctl get upgrade-insights <cluster-name>

{

"insights": [

{

"Category": "UPGRADE_READINESS",

"Description": "Checks for usage of deprecated APIs that are scheduled for removal in Kubernetes v1.29. Upgrading your cluster before migrating to the updated APIs supported by v1.29 could cause application impact.",

"Id": "6d9fb543-f242-47d4-b784-1e48b26af16a",

"InsightStatus": {

"Reason": "No deprecated API usage detected within the last 30 days.",

"Status": "PASSING"

},

"KubernetesVersion": "1.29",

"LastRefreshTime": "2024-05-17T02:52:50Z",

"LastTransitionTime": "2024-03-27T02:07:47Z",

"Name": "Deprecated APIs removed in Kubernetes v1.29"

},

{

"Category": "UPGRADE_READINESS",

"Description": "Checks for usage of deprecated APIs that are scheduled for removal in Kubernetes v1.26. Upgrading your cluster before migrating to the updated APIs supported by v1.26 could cause application impact.",

"Id": "c6fed4c7-8f1b-4618-9ab2-4ee10f49f63b",

"InsightStatus": {

"Reason": "No deprecated API usage detected within the last 30 days.",

"Status": "PASSING"

},

"KubernetesVersion": "1.26",

"LastRefreshTime": "2024-05-17T02:52:50Z",

"LastTransitionTime": "2024-03-27T02:07:47Z",

"Name": "Deprecated APIs removed in Kubernetes v1.26"

},

{

"Category": "UPGRADE_READINESS",

"Description": "Checks for usage of deprecated APIs that are scheduled for removal in Kubernetes v1.27. Upgrading your cluster before migrating to the updated APIs supported by v1.27 could cause application impact.",

"Id": "1e6232b1-3c97-4c53-aa1b-3a957935ef5e",

"InsightStatus": {

"Reason": "No deprecated API usage detected within the last 30 days.",

"Status": "PASSING"

},

"KubernetesVersion": "1.27",

"LastRefreshTime": "2024-05-17T02:52:50Z",

"LastTransitionTime": "2024-03-27T02:07:47Z",

"Name": "Deprecated APIs removed in Kubernetes v1.27"

},

{

"Category": "UPGRADE_READINESS",

"Description": "Checks for usage of deprecated APIs that are scheduled for removal in Kubernetes v1.25. Upgrading your cluster before migrating to the updated APIs supported by v1.25 could cause application impact.",

"Id": "ca80c795-0e46-4112-af2c-1511e5566e3e",

"InsightStatus": {

"Reason": "Deprecated API usage detected within last 30 days and your cluster is on Kubernetes v1.24.",

"Status": "ERROR"

},

"KubernetesVersion": "1.25",

"LastRefreshTime": "2024-05-17T02:52:50Z",

"LastTransitionTime": "2024-03-27T22:12:47Z",

"Name": "Deprecated APIs removed in Kubernetes v1.25"

},

{

"Category": "UPGRADE_READINESS",

"Description": "Checks for usage of deprecated APIs that are scheduled for removal in Kubernetes v1.32. Upgrading your cluster before migrating to the updated APIs supported by v1.32 could cause application impact.",

"Id": "4293ee72-8919-4a72-9ca8-9b36265f08e9",

"InsightStatus": {

"Reason": "No deprecated API usage detected within the last 30 days.",

"Status": "PASSING"

},

"KubernetesVersion": "1.32",

"LastRefreshTime": "2024-05-17T02:52:50Z",

"LastTransitionTime": "2024-03-27T02:07:47Z",

"Name": "Deprecated APIs removed in Kubernetes v1.32"

}

]

}
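Before an upgrade, a script can list the insights that are not passing. The Python sketch below uses only the fields shown in the sample output above (`insights`, `InsightStatus.Status`, `Name`, `KubernetesVersion`); `non_passing_insights` is a hypothetical helper name, and the sample is shortened to two of the insights.

```python
import json


def non_passing_insights(insights_output):
    """Return the insights whose InsightStatus.Status is anything other than PASSING."""
    data = json.loads(insights_output)
    return [
        insight
        for insight in data.get("insights", [])
        if insight.get("InsightStatus", {}).get("Status") != "PASSING"
    ]


# Shortened copy of the sample output shown above.
sample = json.dumps({
    "insights": [
        {"Name": "Deprecated APIs removed in Kubernetes v1.29",
         "KubernetesVersion": "1.29",
         "InsightStatus": {"Reason": "No deprecated API usage detected within the last 30 days.",
                           "Status": "PASSING"}},
        {"Name": "Deprecated APIs removed in Kubernetes v1.25",
         "KubernetesVersion": "1.25",
         "InsightStatus": {"Reason": "Deprecated API usage detected within last 30 days and your cluster is on Kubernetes v1.24.",
                           "Status": "ERROR"}},
    ]
})

for insight in non_passing_insights(sample):
    print(insight["KubernetesVersion"], insight["InsightStatus"]["Status"], insight["Name"])
```

In the sample, only the v1.25 insight is reported, because the cluster still uses APIs that are removed in that version.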

Imperative Commands for Creating, Upgrading, and Scaling a Cluster and Adding Node Groups

Create Cluster

Create an EKS cluster object in the configured project in the Controller. The region and the cloud credentials name are mandatory. You can optionally specify a cluster blueprint during this step; if none is specified, the default cluster blueprint is used.

./rctl create cluster eks eks-cluster sample-credentials --region us-west-2

To create an EKS cluster with a custom blueprint

./rctl create cluster eks eks-cluster sample-credentials --region us-west-2 -b standard-blueprint

Cluster Upgrade

Use the command below to upgrade a cluster

./rctl upgrade cluster <cluster-name> --version <version>

Below is an example of a cluster version upgrade

./rctl upgrade cluster eks-cluster --version 1.20

Scale Node Group

To scale an existing node group in a cluster

./rctl scale node-group nodegroup-name cluster-name --desired-nodes <node-count>

Drain Node Group

To drain a node group in a cluster

./rctl drain node-group nodegroup-name cluster-name

Node group Labels and Tags

After cluster provisioning, users can update managed node group labels and tags via RCTL

Update Node group labels

To update the node group labels in a cluster

./rctl update nodegroup <node-group-name> <cluster-name> --labels 'k1=v1,k2=v2,k3=v3'

Update Node group tags

To update the node group tags in a cluster

./rctl update nodegroup <node-group-name> <cluster-name> --tags 'k1=v1,k2=v2,k3='
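When labels or tags come from a pipeline variable or a dictionary, they need to be joined into the `k1=v1,k2=v2` form that the commands above accept. The helper below is a small sketch with a hypothetical name, `format_key_values`; rejecting keys or values that contain `,` or `=` is an assumption made to keep the string unambiguous, not a documented RCTL rule.

```python
def format_key_values(pairs):
    """Join a mapping into the 'k1=v1,k2=v2' form used with --labels and --tags."""
    parts = []
    for key, value in pairs.items():
        key, value = str(key), str(value)
        if not key or "," in key or "=" in key or "," in value or "=" in value:
            raise ValueError(f"unsupported key/value pair: {key!r}={value!r}")
        parts.append(f"{key}={value}")
    return ",".join(parts)


# An empty value produces a trailing 'k3=', as in the tags example above.
print(format_key_values({"k1": "v1", "k2": "v2", "k3": ""}))
```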

Delete Node Group

To delete a node group from an existing cluster

./rctl delete node-group nodegroup-name cluster-name

Delete Cluster

This will delete the EKS cluster and all associated resources in AWS.

./rctl delete cluster eks-cluster