GPU Config

Graphics Processing Units (GPUs) accelerate certain types of workloads, particularly in machine learning and data processing tasks. By leveraging GPUs, users can significantly improve the performance and speed of these workloads compared to using traditional central processing units (CPUs) alone. GPU Configuration can be managed via UI, RCTL, Terraform, System Sync and Swagger API (V2 and V3)

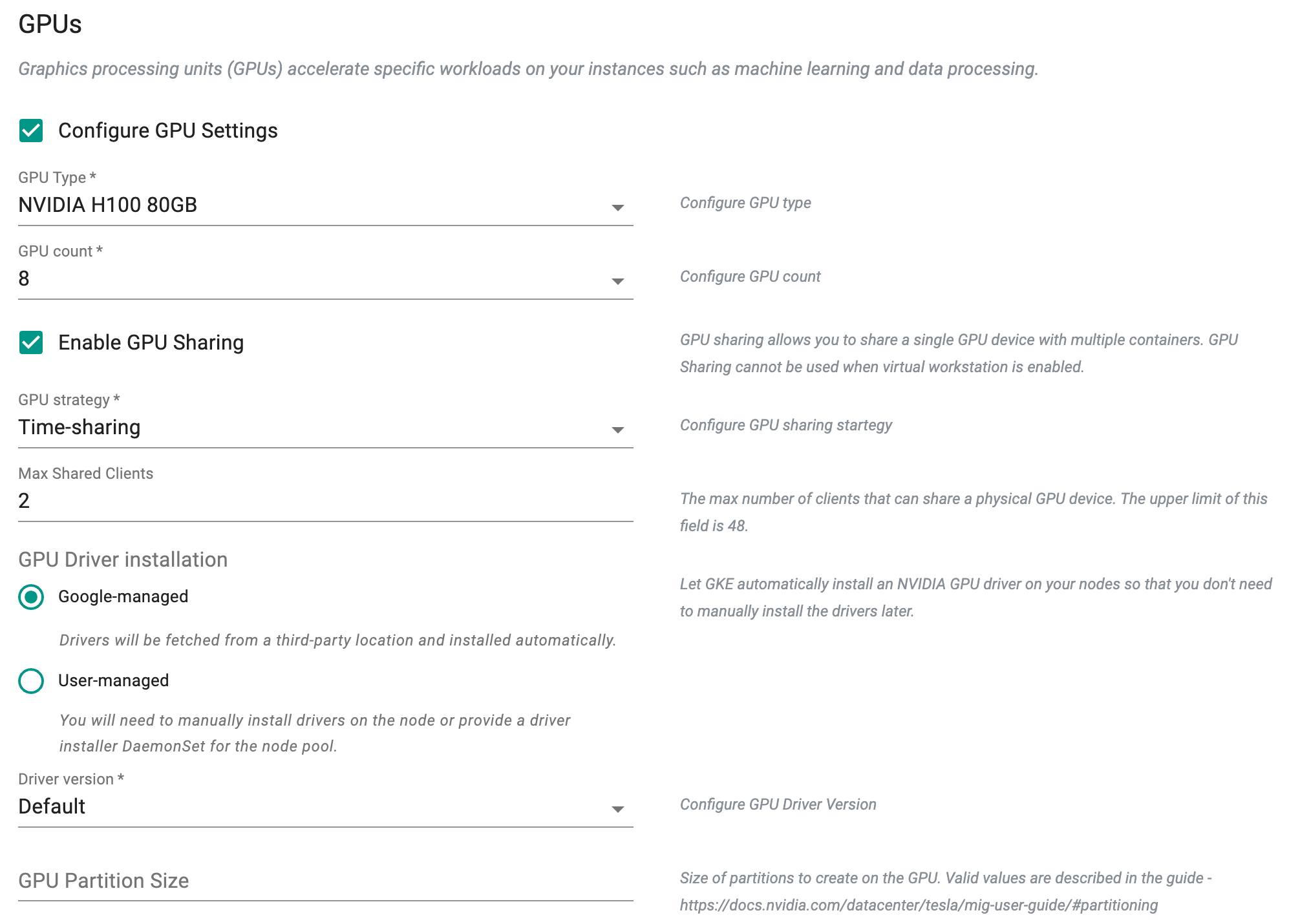

GPU Configuration via UI¶

- Under Node Pools, click Add Node Pool

- Enable Configure GPU Settings

-

Select a GPU Type and GPU Count

-

Optionally, enable Enable GPU Sharing. GPU Accelerator Sharing ensures that GPU resources are utilized effectively across multiple workloads, maximizing the utilization of expensive GPU hardware and reducing costs.

-

Select the GPU strategy and enter the Max Shared Clients

-

GPU Driver Installation: The process of installing the necessary device drivers for GPU hardware on a computing system. GPU driver installation involves configuring how GPU drivers will be installed on the virtual machine (VM) instances that support GPU workloads. The two types of driver installations are Google-managed, and User-managed. By default, User-managed is selected.

- User-managed allows users to manually install drivers on the node or provide a driver installer DaemonSet for the node pool.

- On selecting Google-managed, drivers will be fetched from a third-party location and installed automatically. Select a Driver version.

- Provide GPU Partition Size

Note: When you create a GPU node pool with the DriverInstallationType set as "user-managed," the GPU count will not appear in the cluster card of the console. It will only appear once you manually install the drivers.

| Field Name | Description |

|---|---|

| GKE Node Accelerator | |

| GPU Type* | Allows optimization for specific workload requirements, ensuring efficient performance and cost-effectiveness by leveraging the most suitable hardware accelerators available |

| GPU Count* | Refers to the quantity of Graphics Processing Units (GPUs) assigned to each node, facilitating workload optimization and resource allocation for GPU-accelerated tasks |

| GPU Sharing | |

| GPU Strategy* | Defines how GPUs are allocated and shared among pods within the cluster, with options like time-sharing allowing efficient utilization of GPU resources across multiple workloads based on predefined allocation policies |

| Max Shared Clients | The maximum number of clients permitted to concurrently share a single physical GPU within a GKE cluster |

| GPU Driver Installation | |

| Driver Version* | Represents the specific version of the GPU driver installed on each node, ensuring compatibility with GPU-accelerated workloads |

| GPU Partition Size | Defines the size of partitions to be created on the GPU within a GKE cluster. Valid values are outlined in the NVIDIA documentation, specifying the granularity for allocating GPU resources based on workload requirements and resource availability |

Note

To understand the limitations, please refer to this page.

GPU Configuration via RCTL¶

V3 Config Spec (Recommended)

Below is an example of a v3 spec for creating a GKE cluster with GPU configuration.

apiVersion: infra.k8smgmt.io/v3

kind: Cluster

metadata:

name: new-gpu

project: defaultproject

modifiedAt: "2024-03-12T09:42:58.528168Z"

spec:

cloudCredentials: cred-gke

type: gke

config:

gcpProject: dev-12345

location:

type: zonal

config:

zone: us-central1-a

controlPlaneVersion: "1.27"

network:

name: default

subnetName: default

access:

type: public

config: null

enableVPCNativetraffic: true

maxPodsPerNode: 110

features:

enableComputeEnginePersistentDiskCSIDriver: true

nodePools:

- name: default-nodepool

nodeVersion: "1.27"

size: 3

machineConfig:

imageType: COS_CONTAINERD

machineType: n1-standard-4

bootDiskType: pd-standard

bootDiskSize: 100

accelerators:

- type: nvidia-tesla-t4

count: 1

gpuDriverInstallation:

type: google-managed

config:

version: "LATEST"

upgradeSettings:

strategy: SURGE

config:

maxSurge: 1

blueprint:

name: minimal

version: latest

V2 Config Spec

Below is an example of a v2 spec for creating a GKE cluster with GPU configuration.

apiVersion: infra.k8smgmt.io/v2

kind: Cluster

metadata:

name: demogpu-test

project: defaultproject

spec:

blueprint:

name: minimal

version: latest

cloudCredentials: cred-gke

config:

controlPlaneVersion: "1.27"

feature:

enableComputeEnginePersistentDiskCSIDriver: true

location:

type: zonal

zone: us-central1-a

name: demogpu-test

network:

enableVPCNativeTraffic: true

maxPodsPerNode: 110

name: default

networkAccess:

privacy: public

nodeSubnetName: default

nodePools:

- machineConfig:

accelerators:

- count: 1

driverInstallation:

type: user-managed

type: nvidia-tesla-t4

bootDiskSize: 100

bootDiskType: pd-standard

imageType: COS_CONTAINERD

machineType: n1-standard-4

management: {}

name: default-nodepool

nodeVersion: "1.27"

size: 3

upgradeSettings:

strategy: SURGE

surgeSettings:

maxSurge: 1

maxUnavailable: 0

project: dev-12345

type: Gke