Part 4: Workload

What Will You Do

In this part of the self-paced exercise, you will deploy a test workload to your Amazon EKS cluster. You will use it to change the load on the cluster and trigger Karpenter to scale the cluster up and down.

Step 1: Deploy Workload

In this step, you will create a workload on the cluster using the "inflate-workload.yaml" file, which contains the declarative specification for our test workload.

The following items may need to be updated if you changed them earlier in the exercise or used different names:

- project: "defaultproject"

- clusters: "karpenter-cluster"

name: inflate-workload
namespace: default
project: defaultproject
type: NativeYaml
clusters: karpenter-cluster
payload: inflate.yaml
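If you used a different project or cluster name, a small script can regenerate the spec above with your own values. The sketch below is a hypothetical helper (`render_workload_spec` is not part of rctl or the repository); it reproduces only the six flat fields shown above, assumes your names are plain YAML scalars, and prints the result instead of overwriting any file.

```python
import sys


def render_workload_spec(project: str = "defaultproject",
                         cluster: str = "karpenter-cluster") -> str:
    """Return the inflate workload spec as YAML text with the given names.

    Hypothetical helper: it only reproduces the flat fields shown above.
    """
    fields = {
        "name": "inflate-workload",
        "namespace": "default",
        "project": project,
        "type": "NativeYaml",
        "clusters": cluster,
        "payload": "inflate.yaml",
    }
    # Assumes every value is a plain scalar (no ": " or " #"),
    # so simple "key: value" lines are valid YAML.
    return "".join(f"{key}: {value}\n" for key, value in fields.items())


if __name__ == "__main__":
    # Usage: python render_spec.py [project] [cluster]
    # With no arguments, the default names from this exercise are used.
    sys.stdout.write(render_workload_spec(*sys.argv[1:3]))
```

Redirecting the output to a new file and comparing it with "inflate-workload.yaml" before replacing the original is the safer route.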

- Open Terminal (on macOS/Linux) or Command Prompt (Windows) and navigate to the folder where you cloned your fork of the Git repository

- Navigate to the folder "/getstarted/karpenter/workload"

- Type the command below

rctl create workload inflate-workload.yaml

If there were no errors, you should see a message like the following:

Workload created successfully

Next, publish the newly created workload to the EKS cluster. A workload can be deployed to multiple clusters according to its configured "placement policy". In this case, you are deploying to a single EKS cluster named "karpenter-cluster".

rctl publish workload inflate-workload

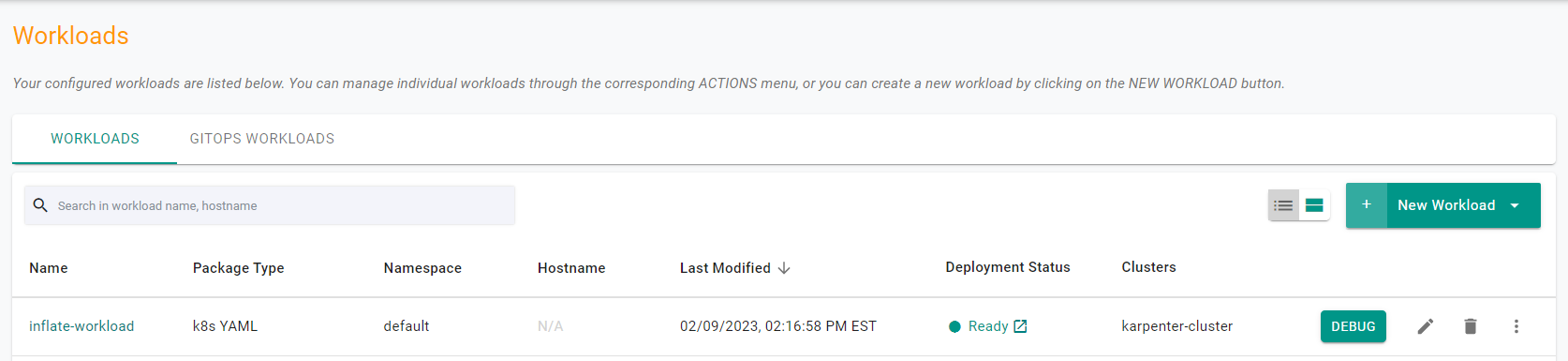

In the web console, click on Applications -> Workloads. You should see something like the following.
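The two rctl commands in this step can also be run from a script. This is a minimal sketch, not part of the exercise: `create_and_publish` and `run_cli` are hypothetical names, and it assumes rctl is on your PATH and exits with a non-zero status when a command fails, so publishing only happens after a successful create.

```python
import subprocess
from typing import Callable, List

# A runner takes an argv list and raises if the command fails.
Runner = Callable[[List[str]], None]


def run_cli(argv: List[str]) -> None:
    """Run a CLI command, letting its output print to the terminal.

    Raises subprocess.CalledProcessError on a non-zero exit status.
    """
    subprocess.run(argv, check=True)


def create_and_publish(spec_file: str = "inflate-workload.yaml",
                       workload: str = "inflate-workload",
                       run: Runner = run_cli) -> None:
    """Create the workload from its spec file, then publish it."""
    # Same commands as the manual steps above; if create raises,
    # publish is never attempted.
    run(["rctl", "create", "workload", spec_file])
    run(["rctl", "publish", "workload", workload])
```

Calling `create_and_publish()` from the "/getstarted/karpenter/workload" folder performs both steps. The `run` parameter exists so the command sequence can be checked with a stand-in runner without touching a real cluster.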

Step 2: Scale Workload

The test workload can be scaled up to consume the cluster's resources. Once the existing nodes no longer have enough capacity to schedule the new pods, Karpenter adds nodes to the cluster as needed.

- Navigate to Infrastructure -> Clusters

- Click on "KUBECTL" in the cluster card

- Verify that the number of "inflate" pods is 0

kubectl get deployments

- You should see a result like the following showing the inflate deployment with 0 pods running.

NAME      READY   UP-TO-DATE   AVAILABLE   AGE
inflate   0/0     0            0           62s
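If you want to check this in a script rather than by eye, you can parse the READY column of the output. This is a sketch with a hypothetical helper name, `deployment_ready`; it assumes the default table layout shown above, where READY is the second column in "ready/desired" form.

```python
from typing import Tuple


def deployment_ready(output: str, name: str = "inflate") -> Tuple[int, int]:
    """Return (ready, desired) for one deployment from `kubectl get deployments` text.

    Parses the READY column, e.g. "0/0" -> (0, 0) and "3/5" -> (3, 5).
    """
    lines = output.strip().splitlines()
    for line in lines[1:]:  # skip the NAME/READY/... header row
        cols = line.split()
        if cols and cols[0] == name:
            ready, desired = cols[1].split("/")
            return int(ready), int(desired)
    raise ValueError(f"deployment {name!r} not found in output")
```

At this point in the exercise you would expect `(0, 0)` for the inflate deployment.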

- Verify the number of nodes

kubectl get nodes

- You should see a result like the following showing 1 node.

NAME                                           STATUS   ROLES    AGE   VERSION
ip-192-168-21-112.us-west-2.compute.internal   Ready    <none>   64m   v1.28.8-eks-ae9a62a
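The node count can be read from the same kind of output. The helper below, `count_ready_nodes`, is a hypothetical sketch; it assumes the default table layout with STATUS as the second column and counts only rows whose status is exactly "Ready".

```python
def count_ready_nodes(output: str) -> int:
    """Count rows of `kubectl get nodes` output whose STATUS is exactly "Ready".

    Nodes reporting e.g. "NotReady" or "Ready,SchedulingDisabled" are not counted.
    """
    count = 0
    for line in output.strip().splitlines()[1:]:  # skip the header row
        cols = line.split()
        if len(cols) >= 2 and cols[1] == "Ready":
            count += 1
    return count
```

Recording this number now gives you a baseline to compare against after scaling.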

- Scale the inflate deployment up to 5 replicas to increase the load, then wait 2-3 minutes for Karpenter to provision new nodes

kubectl scale deployment inflate --replicas 5
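Instead of waiting a fixed 2-3 minutes, you could poll until the number of Ready nodes rises above the count you recorded before scaling. This is a sketch with hypothetical names (`ready_node_count`, `wait_for_scale_up`); it assumes `kubectl` is configured for the cluster, and the 5-minute timeout and 15-second interval are arbitrary choices, not values from the exercise.

```python
import subprocess
import time
from typing import Callable


def ready_node_count() -> int:
    """Count nodes whose STATUS is exactly "Ready" (requires kubectl access)."""
    out = subprocess.run(["kubectl", "get", "nodes", "--no-headers"],
                         capture_output=True, text=True, check=True).stdout
    return sum(1 for line in out.splitlines()
               if len(line.split()) >= 2 and line.split()[1] == "Ready")


def wait_for_scale_up(baseline: int,
                      get_count: Callable[[], int] = ready_node_count,
                      timeout_s: float = 300.0,
                      interval_s: float = 15.0,
                      sleep: Callable[[float], None] = time.sleep) -> int:
    """Poll until more than `baseline` nodes are Ready and return the new count.

    Raises TimeoutError if that does not happen within `timeout_s` seconds.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        current = get_count()
        if current > baseline:
            return current
        if time.monotonic() >= deadline:
            raise TimeoutError(f"still {current} Ready node(s) after {timeout_s}s")
        sleep(interval_s)
```

A typical use is `baseline = ready_node_count()` before running the scale command, then `wait_for_scale_up(baseline)` afterwards. Injecting `get_count` and `sleep` keeps the polling logic testable without a cluster.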

- Verify that the deployment was scaled up successfully

kubectl get pod -n default

- You should see a result like the following showing 5 inflate pods

NAME                       READY   STATUS    RESTARTS   AGE
inflate-56d689d486-kqhj2   1/1     Running   0          3m37s
inflate-56d689d486-nd89d   1/1     Running   0          3m37s
inflate-56d689d486-vv7bv   1/1     Running   0          3m37s
inflate-56d689d486-w4rbp   1/1     Running   0          3m37s
inflate-56d689d486-wzlgt   1/1     Running   0          3m37s
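To confirm all 5 replicas are running without reading each row, you could count them. This is a hypothetical helper, `running_pod_count`; it assumes the default `kubectl get pod` layout (STATUS as the third column) and that the pod names start with "inflate-", as in the output above. Pods still in Pending, which are the ones that prompt Karpenter to add capacity, are not counted.

```python
def running_pod_count(output: str, prefix: str = "inflate-") -> int:
    """Count pods in `kubectl get pod` output whose name starts with `prefix`
    and whose STATUS column is "Running"."""
    count = 0
    for line in output.strip().splitlines()[1:]:  # skip the header row
        cols = line.split()
        if len(cols) >= 3 and cols[0].startswith(prefix) and cols[2] == "Running":
            count += 1
    return count
```

If this returns fewer than 5 shortly after scaling, the remaining pods are likely waiting for new nodes.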

- Verify the number of nodes again

kubectl get nodes

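- You should see a result like the following, showing that Karpenter has added at least one node. The exact number of nodes depends on the instance types Karpenter selects.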

NAME                                            STATUS   ROLES    AGE     VERSION
ip-192-168-185-147.us-west-2.compute.internal   Ready    <none>   8m27s   v1.29.6-eks-1552ad0
ip-192-168-93-53.us-west-2.compute.internal     Ready    <none>   90m     v1.29.6-eks-1552ad0

- Verify that the inflate pods are now running with the additional node capacity

kubectl get pod -n default

- You should see a result like the following showing 5 inflate pods in a running state

NAME                       READY   STATUS    RESTARTS   AGE
inflate-7d57f774d4-92njk   1/1     Running   0          87s
inflate-7d57f774d4-bm8kv   1/1     Running   0          87s
inflate-7d57f774d4-nkxg5   1/1     Running   0          87s
inflate-7d57f774d4-v8tkq   1/1     Running   0          87s
inflate-7d57f774d4-vd4xw   1/1     Running   0          87s

Recap

Congratulations! At this point, you have successfully:

- Deployed and scaled a test workload on the EKS cluster and verified that Karpenter automatically adjusted the number of nodes in the cluster