CLI

Google Kubernetes Engine (GKE) is a fully managed Kubernetes service provided by Google Cloud. The RCTL CLI integrates with GKE so that users can provision GKE clusters in any region and any Google Cloud project.

Create Cluster Via RCTL

Step 1: Cloud Credentials

Use the following command to create a GCP credential via RCTL:

./rctl create credential gcp credentials-name <Location of credentials JSON File>

After the credential is created, reference it in the cluster config file to create a GKE cluster.

Step 2: Create Cluster

Create the cluster from a version-controlled cluster spec that is stored in a Git repository. This makes it possible to build automation for reproducible infrastructure.

./rctl apply -f cluster-spec.yml
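For automation, the apply step can be wrapped in a small script. The following Python sketch is illustrative only: the helper names `build_apply_command` and `apply_cluster_spec` and the `runner` parameter are hypothetical, and the sketch assumes the `rctl` binary is in the current directory, as in the command above. It uses only the `apply -f` invocation shown in this section.

```python
import subprocess
from typing import Callable, List, Sequence


def build_apply_command(spec_path: str, rctl_path: str = "./rctl") -> List[str]:
    """Return the argument list for `rctl apply -f <spec_path>`."""
    if not spec_path:
        raise ValueError("spec_path must not be empty")
    return [rctl_path, "apply", "-f", spec_path]


def apply_cluster_spec(
    spec_path: str,
    runner: Callable[[Sequence[str]], int] = subprocess.call,
) -> int:
    """Run the apply command and return the process exit code.

    `runner` is injectable so the helper can be exercised without rctl installed.
    """
    return runner(build_apply_command(spec_path))
```

A CI job could call `apply_cluster_spec("cluster-spec.yml")` after a merge to the repository that holds the spec, and fail the job on a non-zero return value.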

GKE config (v3 API version and Recommended)

Here's an illustrative example of a GKE Cluster v3 YAML file, featuring the following components:

- One (1) node pool configured

- The Kubernetes control plane version is set to 1.29

- The cluster location type is regional, with region us-central1 and zone us-central1-c

- Maximum pods per node is set to 110

- Compute Engine Persistent Disk CSI driver is enabled

- Private nodes are enabled (enablePrivateNodes: true)

- Reservation Affinity is not explicitly configured in this spec

apiVersion: infra.k8smgmt.io/v3
kind: Cluster
metadata:
  name: my-gke-cluster
  project: defaultproject
spec:
  blueprint:
    name: minimal
    version: latest
  cloudCredentials: dev-cred
  config:
    controlPlaneVersion: "1.29"
    features:
      enableComputeEnginePersistentDiskCSIDriver: true
    gcpProject: gcpproj-1
    location:
      config:
        region: us-central1
        zone: us-central1-c
      type: regional
    network:
      access:
        config:
          disableSNAT: true
          enableAccessControlPlaneExternalIP: true
          enableAccessControlPlaneGlobal: true
          enablePrivateNodes: true
          privateEndpointSubnetworkName: user1-subnetwork
        type: private
      enableVPCNativetraffic: true
      maxPodsPerNode: 110
      name: cloudengg-qe-vpc
      subnetName: default-subnet
    nodePools:
    - machineConfig:
        bootDiskSize: 100
        bootDiskType: pd-standard
        imageType: COS_CONTAINERD
        machineType: e2-standard-4
      name: gkepool1
      nodeVersion: "1.29"
      size: 1
      upgradeSettings:
        config:
          maxSurge: 1
        strategy: SURGE
  type: gke

To configure DataPlane V2, include the dataPlaneV2 parameter and set enableDataPlaneV2Observability and enableDataPlaneV2Metrics to true. To configure Calico Kubernetes Network Policy instead, set networkPolicyConfig to true and networkPolicy to CALICO. The following network configuration enables Calico and leaves the DataPlane V2 parameters commented out:

network:
  name: default
  subnetName: default
  access:
    type: public
    config:
  enableVPCNativetraffic: true
  maxPodsPerNode: 110
  # dataPlaneV2: "ADVANCED_DATAPATH"
  # enableDataPlaneV2Observability: true
  # enableDataPlaneV2Metrics: true
  networkPolicyConfig: true
  networkPolicy: "CALICO"

Important

Users are allowed to enable either DataPlane V2 or Calico Kubernetes Network Policy during GKE cluster creation, but not both simultaneously.
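The either/or rule in the note above can be checked before a spec is applied. The following is a minimal Python sketch, assuming the `network` block of a v3 spec has already been loaded into a dictionary (for example with a YAML parser). The function name `check_network_policy_choice` is hypothetical and not part of RCTL.

```python
from typing import Any, Dict, List


def check_network_policy_choice(network: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the choice is consistent."""
    problems: List[str] = []
    # DataPlane V2 counts as selected when the dataPlaneV2 key has a value.
    dataplane_v2 = bool(network.get("dataPlaneV2"))
    # Calico counts as selected when networkPolicyConfig is true
    # or a networkPolicy value is present.
    calico = bool(network.get("networkPolicyConfig")) or bool(network.get("networkPolicy"))
    if dataplane_v2 and calico:
        problems.append(
            "DataPlane V2 and Calico network policy are both enabled; choose one"
        )
    return problems
```

Running the check on the network block shown earlier returns an empty list, because the DataPlane V2 keys are commented out and only Calico is enabled.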

For the V3 Config Schema, refer here

GKE config (v2 API version)

Here's an illustrative example of a YAML file for a regional GKE cluster, featuring the following components:

- two (2) node pools

- auto-upgrade enablement

- node pool upgrade strategy set to Surge

apiVersion: infra.k8smgmt.io/v2
kind: Cluster
metadata:
  name: gke-cluster
  project: default-project
spec:
  blueprint:
    name: default
    version: latest
  cloudCredentials: gke-cred
  config:
    controlPlaneVersion: "1.22"
    location:
      region:
        region: us-east1
        zone: us-east1-b
      type: regional
    name: gke-cluster
    network:
      enableVPCNativeTraffic: true
      maxPodsPerNode: 75
      name: default
      networkAccess:
        privacy: public
      nodeSubnetName: default
    nodePools:
    - machineConfig:
        bootDiskSize: 100
        bootDiskType: pd-standard
        imageType: COS_CONTAINERD
        machineType: e2-medium
        reservationAffinity:
          consumeReservationType: specific
          reservationName: reservation-name
      name: default-nodepool
      nodeMetadata:
        gceInstanceMetadata:
        - key: org-team
          value: qe-cloud
        kubernetesLabels:
        - key: nodepool-type
          value: default-np
      nodeVersion: "1.22"
      size: 2
      management:
        autoUpgrade: true
      upgradeSettings:
        strategy: SURGE
        surgeSettings:
          maxSurge: 0
          maxUnavailable: 1
    - machineConfig:
        bootDiskSize: 60
        bootDiskType: pd-standard
        imageType: COS_CONTAINERD
        machineType: e2-medium
        reservationAffinity:
          consumeReservationType: specific
          reservationName: reservation-name
      name: pool2
      nodeMetadata:
        gceInstanceMetadata:
        - key: org-team
          value: qe-cloud
        kubernetesLabels:
        - key: nodepool-type
          value: nodepool2
      nodeVersion: "1.22"
      size: 2
    project: project1
    security:
      enableLegacyAuthorization: true
      enableWorkloadIdentity: true
  type: Gke

To configure a shared VPC network, specify the full resource paths of the network and the node subnet, as in the following network configuration. The podSecondaryRangeName and serviceSecondaryRangeName parameters are mandatory.

network:
  enableVPCNativeTraffic: true
  maxPodsPerNode: 110
  name: projects/kr-test-200723/global/networks/km-1
  networkAccess:
    privacy: public
  nodeSubnetName: projects/kr-test-200723/regions/us-central1/subnetworks/km-1
  podSecondaryRangeName: pod
  serviceSecondaryRangeName: service
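The mandatory-field rule for shared VPC networks can be checked in a pre-apply script. The Python sketch below is an assumption-laden illustration: it treats a network `name` that starts with `projects/` as the marker of a shared VPC, which is a heuristic inferred from the example above and not a documented rule, and the function names are hypothetical.

```python
from typing import Any, Dict, List

# The two parameters the text above describes as mandatory for a shared VPC.
REQUIRED_SHARED_VPC_KEYS = ("podSecondaryRangeName", "serviceSecondaryRangeName")


def is_shared_vpc(network: Dict[str, Any]) -> bool:
    """Heuristic (an assumption): a full `projects/...` path marks a shared VPC."""
    return str(network.get("name", "")).startswith("projects/")


def missing_shared_vpc_keys(network: Dict[str, Any]) -> List[str]:
    """Return the mandatory keys that are absent or empty for a shared VPC network."""
    if not is_shared_vpc(network):
        return []
    return [key for key in REQUIRED_SHARED_VPC_KEYS if not network.get(key)]
```

For the network block above the function returns an empty list. Removing `podSecondaryRangeName` from that block would make it return `["podSecondaryRangeName"]`.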

To configure a private cluster with Firewall Rules, specify the cluster privacy as demonstrated in the following configuration

network:
  enableVPCNativeTraffic: true
  maxPodsPerNode: 110
  name: default
  networkAccess:
    privacy: private
    privateCluster:
      controlPlaneIPRange: 172.16.8.0/28
      disableSNAT: true
      enableAccessControlPlaneExternalIP: true
      enableAccessControlPlaneGlobal: true
      firewallRules:
      - action: ALLOW
        rules:
        - protocol: tcp
          ports:
          - "22284"
          - "9447"
        description: Allow Webhook Connections
        direction: EGRESS
        name: test-allow-webhook
        # network: default /
        priority: 1555
        destinationRanges:
        - 172.16.8.0/28
      - action: DENY
        rules:
        - protocol: tcp
          ports:
          - "22281"
          - "9443"
        description: Allow Webhook Connections
        direction: INGRESS
        name: deny-demo-test-allow-webhook-1
        priority: 1220
        sourceRanges:
        - 172.16.8.0/28

DataPlane V2 and Network Policy Config

To configure DataPlane V2, include the dataPlaneV2 parameter and set enableDataPlaneV2Observability and enableDataPlaneV2Metrics to true. To configure Calico Kubernetes Network Policy instead, set networkPolicyConfig to true and networkPolicy to CALICO. The following network configuration enables DataPlane V2 and leaves the Calico parameters commented out:

network:
  dataPlaneV2: "ADVANCED_DATAPATH"
  enableDataPlaneV2Observability: true
  enableDataPlaneV2Metrics: true
  # networkPolicyConfig: true
  # networkPolicy: "CALICO"
  enableVPCNativeTraffic: true
  maxPodsPerNode: 110
  disableDefaultSNAT: true
  name: default
  networkAccess:
    privacy: public
  nodeSubnetName: default

Important

- To configure Calico network policy for nodes, networkPolicyConfig must be set to true.

- The parameters enableDataPlaneV2Observability and enableDataPlaneV2Metrics can be set to false as a Day-2 operation.

- Day-2 operation is not supported for dataPlaneV2, but the parameters enableDataPlaneV2Observability and enableDataPlaneV2Metrics can be modified as a Day-2 operation.
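The Day-2 rules in this note can be expressed as a comparison between the network block of the running cluster and the block about to be applied. The following Python sketch covers only the three parameters named in the note. The function name `day2_violations` and the two sets are hypothetical, and the sketch makes no claim about any other parameter.

```python
from typing import Any, Dict, List

# Parameters the note says may be changed after provisioning.
DAY2_MUTABLE = {"enableDataPlaneV2Observability", "enableDataPlaneV2Metrics"}
# Parameters the note says cannot be changed after provisioning.
DAY2_IMMUTABLE = {"dataPlaneV2"}


def day2_violations(current: Dict[str, Any], desired: Dict[str, Any]) -> List[str]:
    """Return the immutable keys whose value differs between the two network blocks."""
    return sorted(
        key for key in DAY2_IMMUTABLE if current.get(key) != desired.get(key)
    )
```

Turning off `enableDataPlaneV2Metrics` yields no violations, while removing or changing `dataPlaneV2` is reported.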

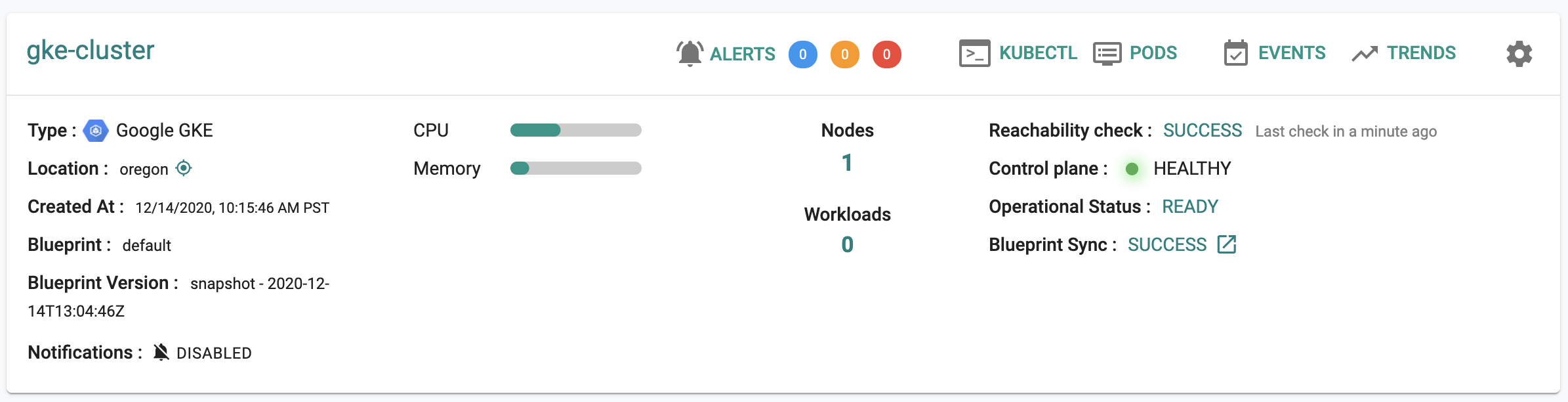

On successful provisioning, you can view the cluster details as shown below

For more GKE cluster spec examples, refer here

For the V2 Config Schema, refer here

Cluster Sharing

To share a cluster with other projects, add a sharing block to the cluster config (Rafay Spec), as in the following config file:

apiVersion: infra.k8smgmt.io/v2
kind: Cluster
metadata:
  labels:
    clusterName: demo-gke-cluster
    clusterType: gke
  name: demo-gke-cluster
  project: defaultproject
spec:
  blueprint:
    name: minimal
    version: latest
  cloudCredentials: demo-cred
  config:
    controlPlaneVersion: "1.24"
    location:
      type: zonal
      zone: us-west1-c
    name: demo-gke-cluster
    network:
      enableVPCNativeTraffic: true
      maxPodsPerNode: 110
      name: default
      networkAccess:
        privacy: public
      nodeSubnetName: default
    nodePools:
    - machineConfig:
        bootDiskSize: 100
        bootDiskType: pd-standard
        imageType: COS_CONTAINERD
        machineType: e2-standard-4
      name: default-nodepool
      nodeMetadata:
        nodeTaints:
        - effect: NoSchedule
          key: k1
      nodeVersion: "1.24"
      size: 3
    - machineConfig:
        bootDiskSize: 100
        bootDiskType: pd-standard
        imageType: COS_CONTAINERD
        machineType: e2-standard-4
      name: pool2
      nodeVersion: "1.24"
      size: 3
    project: dev-382813
  sharing:
    enabled: true
    projects:
    - name: "demoproject1"
    - name: "demoproject2"
  type: Gke

You can also use the wildcard operator "*" to share the cluster across projects

sharing:
  enabled: true
  projects:
  - name: "*"

Note: When the wildcard operator is used, no other project names can be listed.

To stop sharing the cluster with one or more projects, remove those project names from the sharing block and run the apply command.
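The wildcard rule can be checked before the spec is applied. The following is a minimal Python sketch, assuming the `sharing` block has been loaded into a dictionary with the shape shown in the examples above. The function name `sharing_problems` is hypothetical.

```python
from typing import Any, Dict, List


def sharing_problems(sharing: Dict[str, Any]) -> List[str]:
    """Return a list of problems found in a `sharing` block (empty if none)."""
    names = [project.get("name") for project in sharing.get("projects", [])]
    # The wildcard must be the only entry when it is used.
    if "*" in names and len(names) > 1:
        return ["wildcard '*' cannot be combined with other project names"]
    return []
```

A block that lists only `"*"` or only named projects passes. A block that mixes `"*"` with `"demoproject1"` is reported.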

List Clusters

To retrieve a specific GKE cluster, use the below command

./rctl get cluster <gkecluster_name>

Output

./rctl get cluster demo-gkecluster

+------------------------+-----------+-----------+---------------------------+
| NAME                   | TYPE      | OWNERSHIP | PROVISION STATUS          |
+------------------------+-----------+-----------+---------------------------+
| demo-gkecluster        | gke       | self      | INFRA_CREATION_INPROGRESS |
+------------------------+-----------+-----------+---------------------------+

To retrieve the details of a specific v3 cluster, use the below command

./rctl get cluster demo-gkecluster --v3

Example

./rctl get cluster demo-gkecluster --v3

+------------------------+-------------------------------+-----------+----------+-----------+---------------------------+
| NAME                   | CREATED AT                    | OWNERSHIP | TYPE     | BLUEPRINT | PROVISION STATUS          |
+------------------------+-------------------------------+-----------+----------+-----------+---------------------------+
| demo-gkecluster        | 2023-06-05 10:54:08 +0000 UTC | self      | gke      | minimal   | INFRA_CREATION_INPROGRESS |
+------------------------+-------------------------------+-----------+----------+-----------+---------------------------+
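Scripts that track provisioning may need to read this tabular output. The Python sketch below parses a table of the shape shown above into a list of dictionaries keyed by column header. The function name `parse_rctl_table` is hypothetical, and the parser assumes that cell values never contain a `|` character.

```python
from typing import Dict, List


def parse_rctl_table(text: str) -> List[Dict[str, str]]:
    """Parse an ASCII table with `|`-delimited rows into one dict per data row."""
    rows: List[List[str]] = []
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip border lines, blank lines, and the echoed command
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        rows.append(cells)
    if not rows:
        return []
    header, *data = rows  # the first `|` row holds the column names
    return [dict(zip(header, row)) for row in data]
```

For the example output above, the first parsed row maps `PROVISION STATUS` to `INFRA_CREATION_INPROGRESS`, which a script can compare against the status it is waiting for.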

To view the entire v3 cluster config spec, use the below command

./rctl get cluster <gkecluster_name> --v3 -o json

(or)

./rctl get cluster <gkecluster_name> --v3 -o yaml

Download Cluster Config

Use the below command to download the v3 Cluster Config file

./rctl get cluster config <cluster-name> --v3

Important

Download the cluster configuration only after the cluster is completely provisioned

Node Pool Management

To add/edit/scale/upgrade/delete node pool(s), make the required changes in the GKE Cluster config spec and run the apply command

Delete Cluster

Deleting a cluster also cleans up the associated resources in Google Cloud.

./rctl delete cluster <cluster_name>

Dry Run

The dry run command previews the changes that an operation would make, without applying them. It can be used when provisioning a new cluster and for operations such as control plane upgrades, blueprint upgrades, and node pool operations. Reviewing the preview lets users catch problems early and confirm that the intended changes match their infrastructure requirements before anything is modified.

./rctl apply -f <clusterfile.yaml> --dry-run

Examples

- Control plane upgrade

Below is an example of the dry run output for a successful control plane upgrade request.

./rctl apply -f demo_cluster.yaml --dry-run

{
  "operations": [
    {
      "operation": "ControlPlane upgrade",
      "resourceName": "ControlPlane version: 1.26",
      "comment": "ControlPlane will be upgraded. Diff shown in json patch"
    }
  ],
  "diffJsonPatch": {
    "patch": [
      {
        "op": "replace",
        "path": "/spec/config/controlPlaneVersion",
        "value": {
          "Kind": {
            "string_value": "1.26"
          }
        }
      }
    ]
  }
}

- New update request while cluster provisioning is in progress

Below is an example of the dry run output when a user sends an update request to a cluster whose provisioning is still IN PROGRESS:

./rctl apply -f demo_cluster.yaml --dry-run

{
  "operations": [
    {
      "operation": "Cluster Update",
      "resourceName": "gke-np-upgradesetting",
      "comment": "Cluster object exists. But provision is IN-PROGRESS.",
      "error": ["Cannot run dry-run on the cluster now. Try again later.."]
    }
  ],
  "diffJsonPatch": {
    "patch": [
      {
        "op": "replace",
        "path": "/spec/config/controlPlaneVersion",
        "value": {
          "Kind": {
            "string_value": "1.27"
          }
        }
      }
    ]
  }
}
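In a pipeline, the dry run output can be inspected before the real apply is allowed to run. The Python sketch below reads a report of the shape shown in the two examples above and collects any `error` entries along with the JSON patch paths that would change. The function names are hypothetical, and the sketch assumes the report is a single JSON document with the `operations` and `diffJsonPatch` keys seen here.

```python
import json
from typing import List


def dry_run_errors(output: str) -> List[str]:
    """Collect every message from the optional `error` list of each operation."""
    report = json.loads(output)
    errors: List[str] = []
    for operation in report.get("operations", []):
        errors.extend(operation.get("error", []))
    return errors


def planned_paths(output: str) -> List[str]:
    """Return the JSON patch paths that the dry run reports as changing."""
    report = json.loads(output)
    patch = report.get("diffJsonPatch", {}).get("patch", [])
    return [entry["path"] for entry in patch]
```

A pipeline step could refuse to continue when `dry_run_errors` returns a non-empty list, and log the result of `planned_paths` for review otherwise.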