Kubernetes Upgrades

The Controller provides workflows that help customers manage the lifecycle of Kubernetes clusters, including keeping the Kubernetes version current.

Kubernetes Versions

Kubernetes versions are expressed as vMajor.vMinor.vPatch. The Kubernetes project currently releases a new vMinor version roughly every four months (three minor releases per year). New vPatch releases are made available to address security issues and bug fixes.
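Because each part of vMajor.vMinor.vPatch is a number, versions should be compared numerically rather than as strings (as strings, "v1.9.0" sorts after "v1.10.0"). The following is a minimal Python sketch that assumes plain release versions without pre-release or build suffixes. The `K8sVersion` name is hypothetical and is not part of the Controller.

```python
from typing import NamedTuple


class K8sVersion(NamedTuple):
    """A Kubernetes release version in vMajor.vMinor.vPatch form."""

    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "K8sVersion":
        # Accept an optional leading "v", e.g. "v1.26.5" or "1.26.5".
        parts = text.strip().lstrip("v").split(".")
        if len(parts) != 3:
            raise ValueError(f"expected vMajor.vMinor.vPatch, got {text!r}")
        major, minor, patch = (int(p) for p in parts)
        return cls(major, minor, patch)

    def __str__(self) -> str:
        return f"v{self.major}.{self.minor}.{self.patch}"


# NamedTuple ordering compares fields left to right, i.e. numerically.
assert K8sVersion.parse("v1.9.0") < K8sVersion.parse("v1.10.0")
```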

Supported Versions

The Kubernetes project maintains release branches for the three most recent minor releases. Applicable fixes, including security fixes, are typically made available only on these three release branches.
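The three-branch support window can be expressed as a simple check. This sketch assumes that every version shares the same major version, which holds for all Kubernetes 1.x releases, and that the latest available release is already known. `is_supported` is a hypothetical helper, not a Controller API.

```python
from typing import Tuple


def minor_of(version: str) -> Tuple[int, int]:
    """Return (major, minor) from a version such as "v1.26.5"."""
    major, minor, _patch = version.strip().lstrip("v").split(".")
    return int(major), int(minor)


def is_supported(version: str, latest: str, window: int = 3) -> bool:
    """True if `version` is on one of the `window` most recent minor branches."""
    v_major, v_minor = minor_of(version)
    l_major, l_minor = minor_of(latest)
    if v_major != l_major:
        return False
    # 0 means "same minor as latest"; window - 1 is the oldest supported minor.
    return 0 <= l_minor - v_minor < window
```

With v1.27.2 as the latest release, `is_supported("v1.25.10", "v1.27.2")` is `True` and `is_supported("v1.24.14", "v1.27.2")` is `False`.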

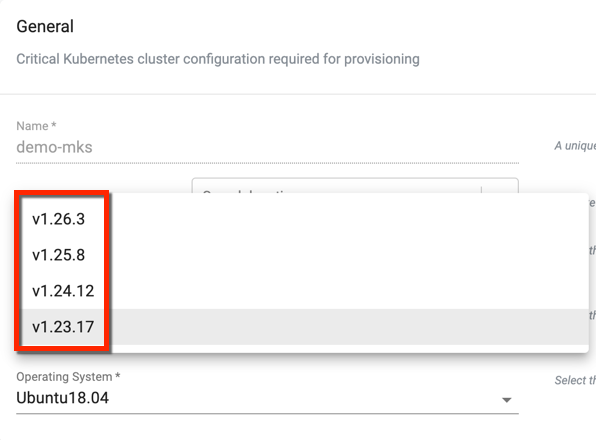

We actively track the Kubernetes project for new patch, minor, and major versions. Each new version is immediately put through a round of testing and qualification before it is made available to customers. By default, the latest Kubernetes version is used for cluster provisioning. During provisioning, customers can optionally select an older minor version from the Kubernetes support matrix. For example, the screenshot below shows the K8s version selection dropdown for provisioning on bare metal/VMs.

Users must complete the following before upgrading an existing cluster to k8s v1.24:

- Update the cluster's Blueprint (both custom and default).

- Upgrade Helm and YAML workloads to the new API versions.

Important

Upgrading a workload fails if the workload was not migrated to the new API versions before the cluster was upgraded to k8s v1.24. To update a workload that uses deprecated YAML or Helm charts on a k8s v1.24 cluster, users must Unpublish (delete) the workload and then Publish (create) it again to apply the changes.
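Before upgrading, manifests can be scanned for apiVersion/kind pairs that the target version no longer serves. The following is a minimal sketch that works on already-parsed manifests (plain dicts, so no YAML library is needed). The `REMOVED_APIS` table is illustrative and deliberately incomplete. Its two entries were removed in Kubernetes v1.22 and are therefore also unavailable on v1.24. A real check should be populated from the upstream deprecated API migration guide.

```python
from typing import Dict, List, Tuple

# Illustrative, incomplete map: (apiVersion, kind) -> replacement apiVersion.
REMOVED_APIS: Dict[Tuple[str, str], str] = {
    ("extensions/v1beta1", "Ingress"): "networking.k8s.io/v1",
    ("networking.k8s.io/v1beta1", "Ingress"): "networking.k8s.io/v1",
}


def find_removed_apis(manifests: List[dict]) -> List[Tuple[str, str, str, str]]:
    """Return (kind, name, old apiVersion, replacement) for each offending manifest."""
    findings = []
    for manifest in manifests:
        key = (manifest.get("apiVersion"), manifest.get("kind"))
        if key in REMOVED_APIS:
            name = manifest.get("metadata", {}).get("name", "<unnamed>")
            findings.append((key[1], name, key[0], REMOVED_APIS[key]))
    return findings
```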

In-Place k8s Upgrades

Design Goals

- Customer applications should not lose orchestration capabilities (e.g., autoscaling) during the k8s upgrade process.

- Kubernetes upgrades can be scheduled and performed in the customer's preferred maintenance windows.

- Kubernetes upgrades can be performed with a canary approach, i.e., one canary cluster first, followed by the remaining clusters.

- Customer applications should be able to operate in a heterogeneous k8s environment for extended periods of time, i.e., with some clusters on the latest version and the rest on a prior version.

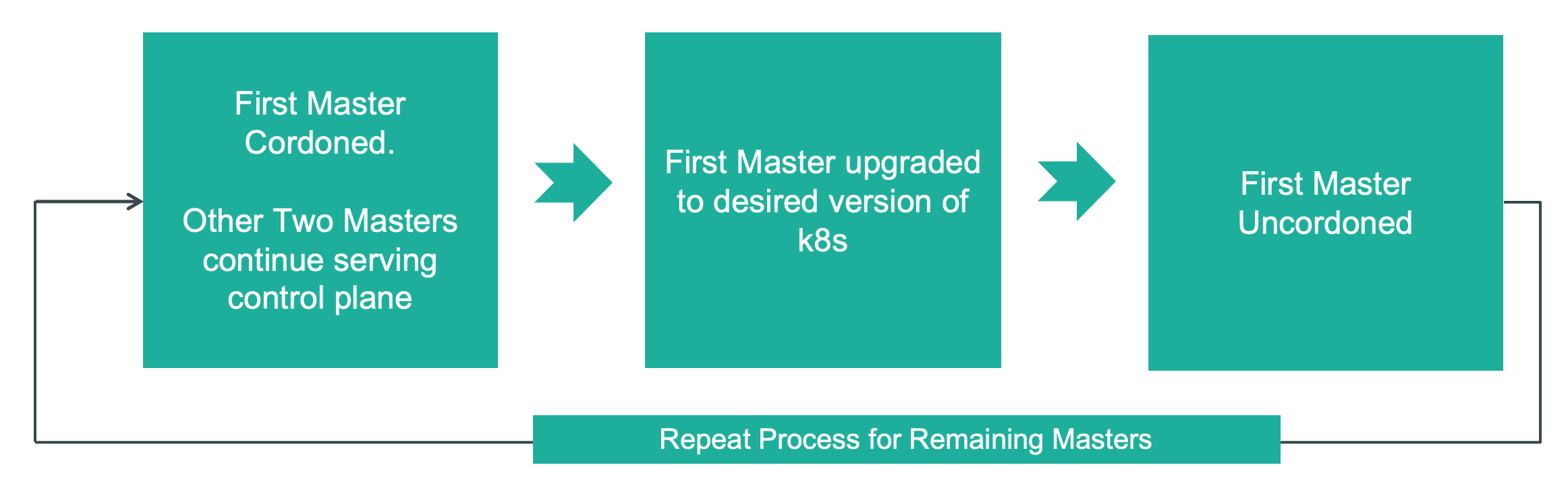

Master Nodes

HA clusters have multiple Kubernetes masters deployed on three separate nodes. The master nodes are upgraded one at a time, so there is no disruption to either customer containers or core control/management functions.

Non-HA, single-node systems have one Kubernetes master. When Kubernetes is upgraded on these systems, control functions are paused briefly until the upgrade is complete. There is no impact on the customer's containers on the system while Kubernetes is being upgraded.

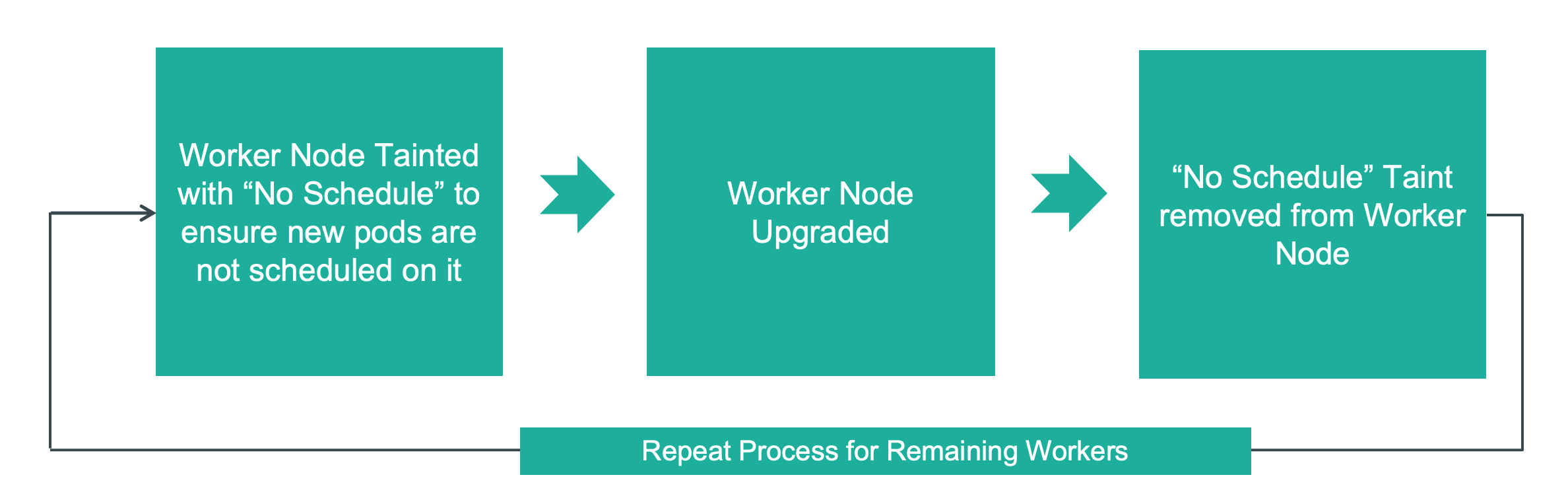
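The one-master-at-a-time rule for HA clusters can be sketched as a loop that does not touch the next master until the previous one is healthy again. `upgrade` and `is_healthy` are hypothetical hooks rather than Controller APIs. Halting on the first unhealthy master is an assumption about how a failure is handled.

```python
from typing import Callable, Iterable, List


def upgrade_masters_one_at_a_time(
    masters: Iterable[str],
    upgrade: Callable[[str], None],
    is_healthy: Callable[[str], bool],
) -> List[str]:
    """Upgrade control-plane nodes strictly one after another.

    The next master is touched only after the previous one reports healthy,
    so in a three-master HA cluster at most one master is down at any time.
    """
    upgraded = []
    for node in masters:
        upgrade(node)
        if not is_healthy(node):
            # Halt instead of taking a second master out of service.
            raise RuntimeError(f"{node} is unhealthy after upgrade; halting")
        upgraded.append(node)
    return upgraded
```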

Worker Nodes

Before the upgrade, each worker node is tainted with NoSchedule so that new pods are not scheduled on it. Once the upgrade is complete, the taint is removed.

Note: During the worker node upgrade process, there is no disruption to the data path to the customer applications.
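The taint lifecycle for a worker node can be modeled as a context manager around the upgrade step. The following is an in-memory sketch, not the Controller's implementation. The taint key is hypothetical; only the NoSchedule effect comes from the text above. Leaving the taint in place when the upgrade fails is an assumption, because the text only describes the successful path.

```python
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Dict, Iterator, List


@dataclass
class Node:
    name: str
    taints: List[Dict[str, str]] = field(default_factory=list)


# Hypothetical taint key; only the NoSchedule effect comes from the docs.
UPGRADE_TAINT = {"key": "example.com/k8s-upgrade", "effect": "NoSchedule"}


@contextmanager
def no_schedule_during_upgrade(node: Node) -> Iterator[Node]:
    # Taint first so the scheduler stops placing new pods on this node.
    # NoSchedule does not evict pods that are already running here.
    node.taints.append(UPGRADE_TAINT)
    yield node
    # Reached only if the upgrade body finished without raising. On failure
    # the taint stays, so no new pods land on a half-upgraded node.
    node.taints.remove(UPGRADE_TAINT)
```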

Typical Process

Upgrade Notifications

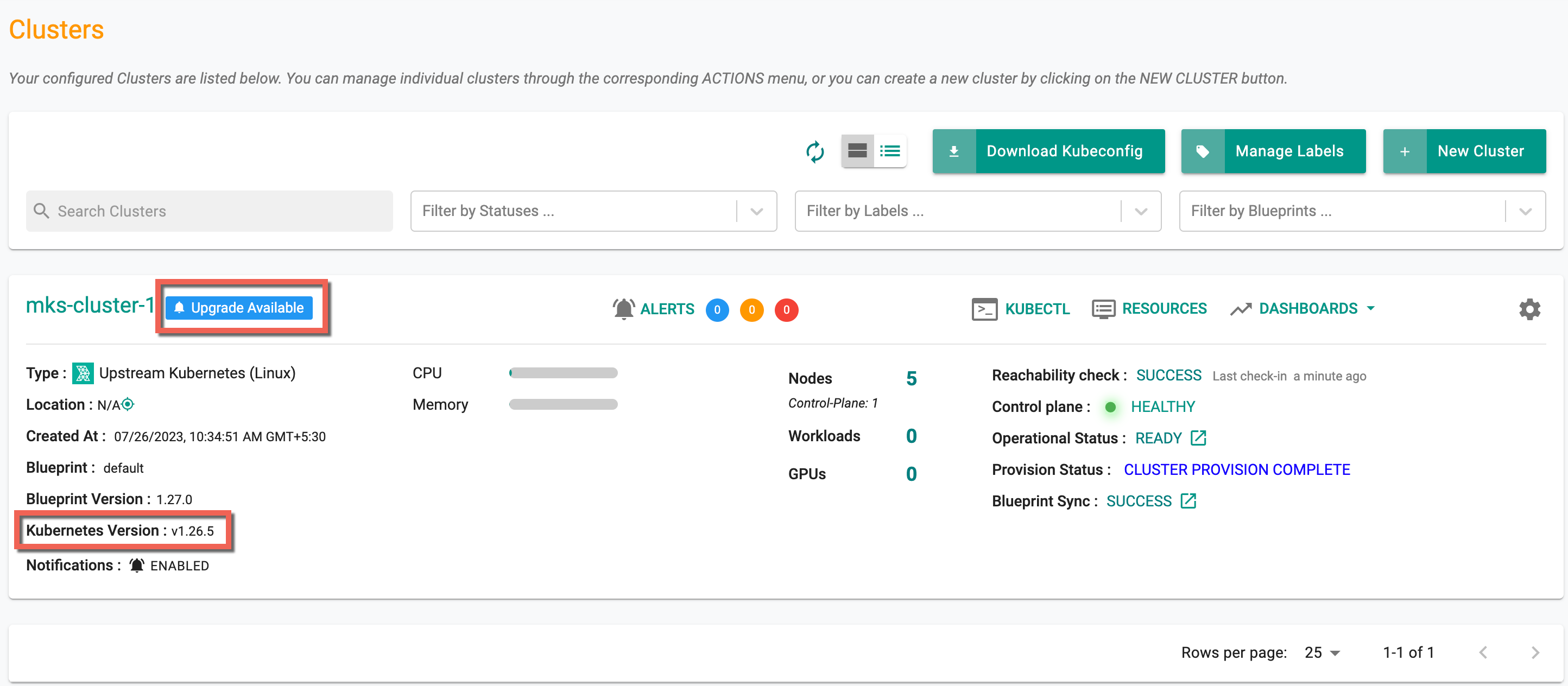

When new Kubernetes versions (vMinor or vPatch) are made available, cluster administrators are provided a notification.

- A red Upgrade Available banner indicates that the cluster is multiple versions behind the latest version.

- A blue Upgrade Available banner indicates that the cluster is one version behind the latest version.

For example, the cluster shown below is running an older version of Kubernetes (v1.26.5) and displays a blue "Upgrade Available" notification badge.

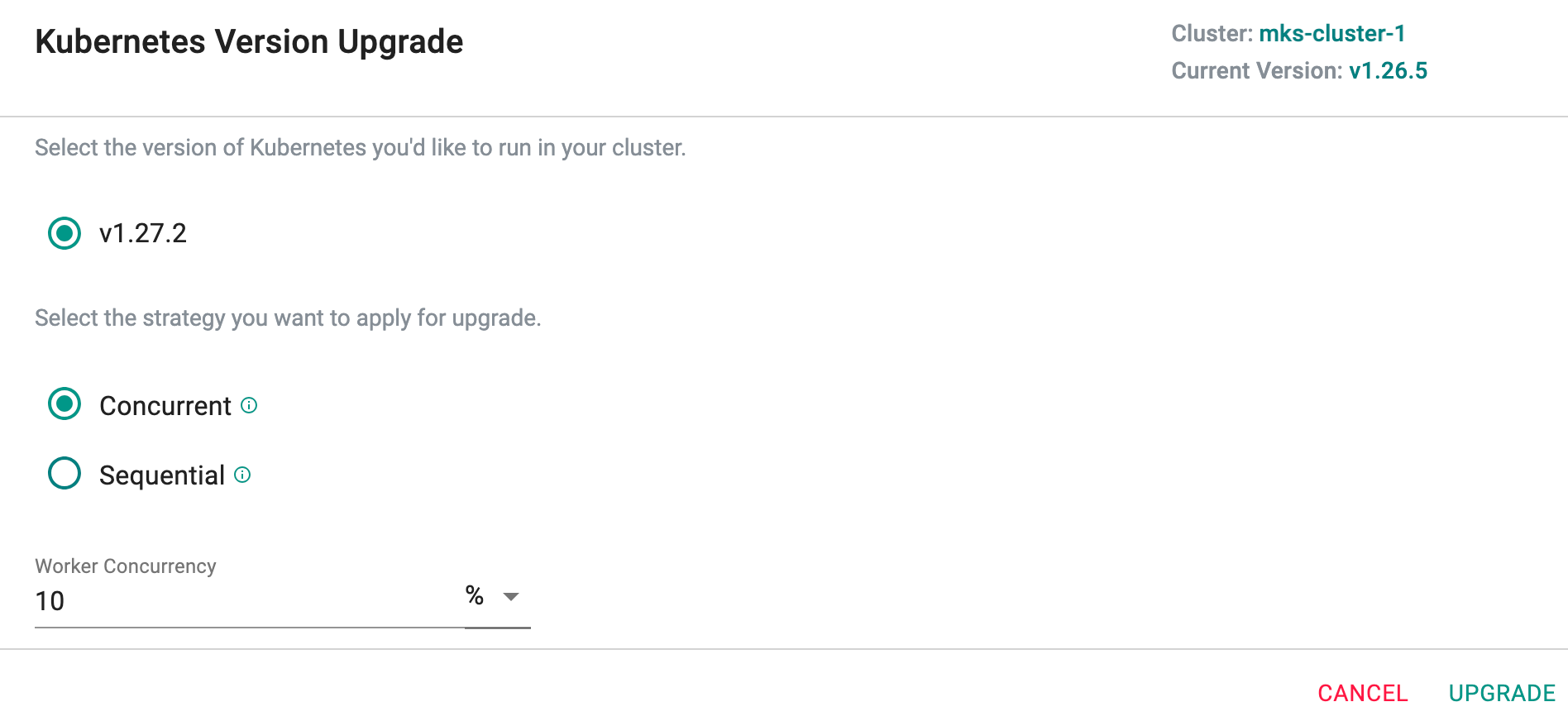
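The banner rule can be sketched as a small function. This sketch assumes that "versions behind" counts minor versions and that a patch-only lag counts as one version behind. Both assumptions are consistent with the v1.26.5 to v1.27.2 example but are not confirmed by the text. `banner_color` is a hypothetical name.

```python
from typing import Optional, Tuple


def parse(version: str) -> Tuple[int, int, int]:
    major, minor, patch = version.strip().lstrip("v").split(".")
    return int(major), int(minor), int(patch)


def banner_color(current: str, latest: str) -> Optional[str]:
    """Return "red", "blue", or None (no banner) for a cluster version."""
    cur, new = parse(current), parse(latest)
    if cur >= new:
        return None  # already on the latest version
    # Assumes both versions share major version 1. A patch-only lag
    # (same minor, older patch) is treated as one version behind.
    minors_behind = new[1] - cur[1]
    return "red" if minors_behind > 1 else "blue"
```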

Clicking the notification badge presents the available upgrade options. The user can upgrade to the following versions:

- The latest K8s version (v1.27.2)

- The next possible vMinor (v1.26.5 to v1.27.2)

When upgrading worker nodes in a batch, users have two options to choose from: Concurrent and Sequential.

- Concurrent: If the Concurrent option is selected, all worker nodes within a batch are upgraded simultaneously, providing a faster upgrade process. This approach is particularly beneficial for larger clusters with multiple nodes to upgrade.

With this option, users can set the worker concurrency either as a percentage of the total nodes in the cluster or as a node count, which determines how many worker nodes are upgraded simultaneously. This lets users tune the upgrade speed to their cluster size and requirements.

- Sequential: The Sequential option upgrades the worker nodes one by one. This method takes longer, but it offers greater control and allows users to monitor each node's upgrade individually.

Users can choose the most suitable option based on their cluster size, upgrade requirements, and the level of control they prefer during the upgrade process.
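The Concurrent and Sequential options can be sketched as a batch-planning function. Sequential produces batches of one node. Concurrent takes either an absolute count or a percentage of all worker nodes. Rounding a percentage up to the next whole node is an assumption, as is the `plan_worker_batches` name. The Controller's exact rounding is not documented here.

```python
import math
from typing import List, Optional


def plan_worker_batches(
    workers: List[str],
    concurrent: bool = False,
    count: Optional[int] = None,
    percent: Optional[float] = None,
) -> List[List[str]]:
    """Split worker nodes into upgrade batches.

    Sequential: one node per batch. Concurrent: batch size comes from either
    an absolute node count or a percentage of all worker nodes.
    """
    if not concurrent:
        size = 1
    elif (count is None) == (percent is None):
        raise ValueError("concurrent mode needs exactly one of count or percent")
    elif count is not None:
        size = count
    else:
        # Round up so that a small percentage still upgrades at least one node.
        size = math.ceil(len(workers) * percent / 100)
    size = max(1, size)
    return [workers[i : i + size] for i in range(0, len(workers), size)]
```

For example, with 10 workers and a concurrency of 25%, this sketch upgrades batches of 3, 3, 3, and 1 nodes.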

In a cluster with multiple worker nodes, some nodes may be running the latest Kubernetes (K8s) version while others are still on older versions. Using the Upgrade Available banner, any worker nodes running outdated K8s versions can be upgraded to the latest version.
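Finding the outdated workers reduces to a numeric version comparison per node. In a live cluster, each node reports its version in `status.nodeInfo.kubeletVersion`. This sketch assumes those values have already been collected into a plain dict of vMajor.vMinor.vPatch strings. Vendor build suffixes would need to be stripped first.

```python
from typing import Dict, List, Tuple


def version_key(version: str) -> Tuple[int, int, int]:
    major, minor, patch = version.strip().lstrip("v").split(".")
    return int(major), int(minor), int(patch)


def outdated_nodes(node_versions: Dict[str, str], target: str) -> List[str]:
    """Names of nodes whose version is older than `target`, sorted by name."""
    wanted = version_key(target)
    return sorted(n for n, v in node_versions.items() if version_key(v) < wanted)
```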

Important

When the k8s version is upgraded from 1.25 to 1.26, containerd is upgraded to version 1.6.10.

Start Upgrade

Only authorized users with appropriate RBAC are allowed to perform Kubernetes upgrades.

The controller performs an "in-place" upgrade of Kubernetes. During the upgrade, nodes are cordoned (not drained) before they are upgraded. As a result, pods already running on a node stay where they are, with no loss of transient data in local volumes.

Important

Cordoning a node prevents new pods from being scheduled on it, while existing pods keep running. Draining evicts the pods currently on the node so that they are rescheduled onto other nodes, which can disrupt the affected workloads.
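The difference between cordoning and draining can be shown with a toy in-memory model. This is an illustration only. A real `kubectl drain` also has to deal with details such as DaemonSet pods and PodDisruptionBudgets, which this sketch ignores. The `Node`, `cordon`, and `drain` definitions here are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    name: str
    pods: List[str] = field(default_factory=list)
    unschedulable: bool = False


def cordon(node: Node) -> None:
    # Only marks the node unschedulable; running pods are not touched.
    node.unschedulable = True


def drain(node: Node, pending: List[str]) -> None:
    # Cordon, then evict every pod so the scheduler must place it elsewhere.
    cordon(node)
    pending.extend(node.pods)
    node.pods.clear()
```

Cordoning, which is the approach used for in-place upgrades, leaves `pods` untouched. Draining empties the node and hands every pod back to the scheduler.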

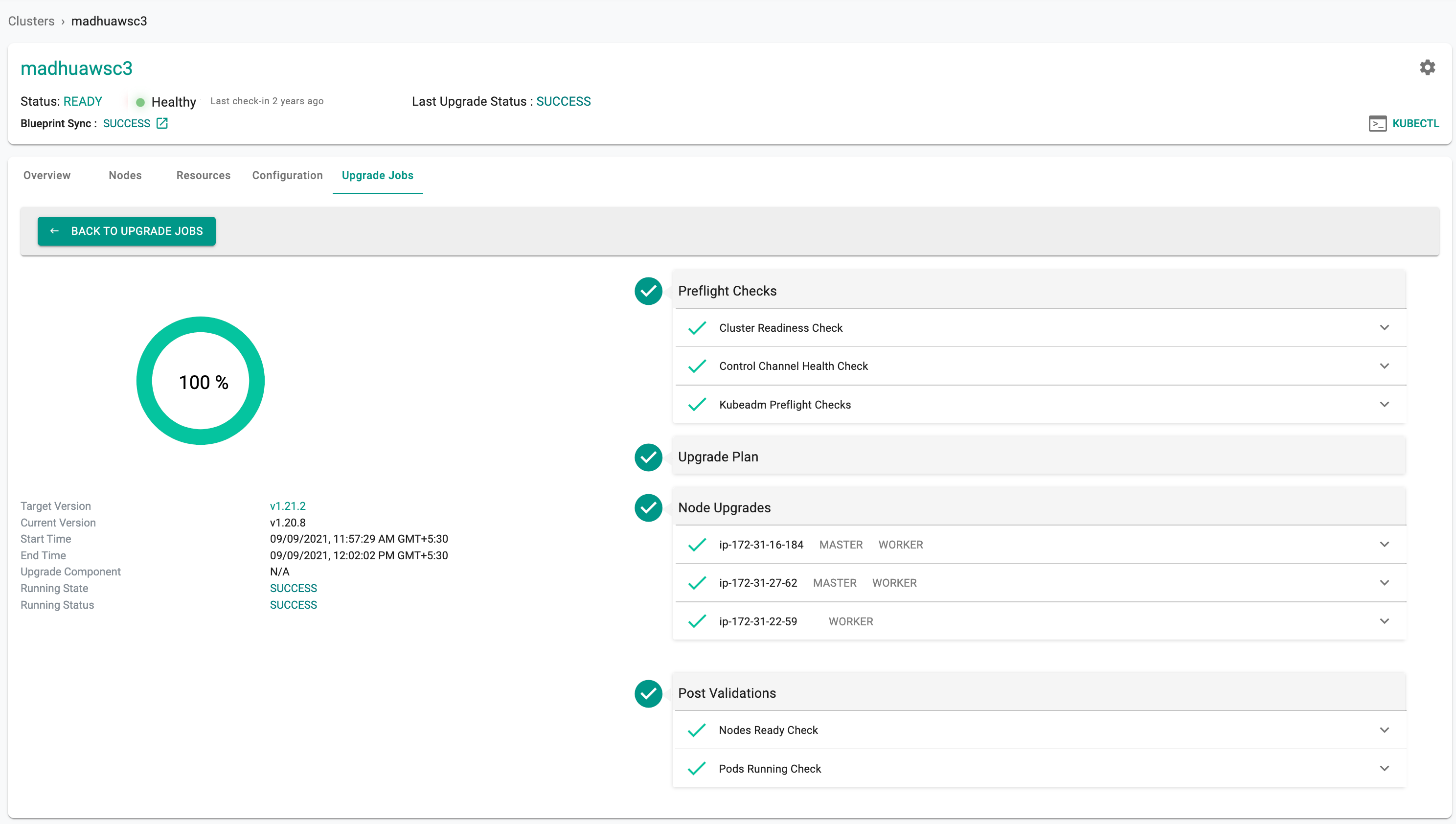

Preflight Checks

Preflight checks are automatically performed before the cluster is upgraded/downgraded to the new Kubernetes version. The process is terminated if preflight checks do not pass.

The following preflight checks are performed and have to pass before the upgrade process is allowed to proceed.

- Cluster Readiness (i.e., is the cluster actually provisioned and in a READY state?)

- Control Channel Health (i.e., is the OS-level control channel to the Controller active?)

- Kubeadm Internal Preflight Checks (i.e., verifies cluster health, node health, etc.)
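The gate described above, where every check must pass before the upgrade proceeds, can be sketched as an ordered runner that stops at the first failure. The check callables below are hypothetical placeholders for the three listed checks. Stopping at the first failure, rather than running all checks and reporting them together, is an assumed design choice.

```python
from typing import Callable, Dict, List


def run_preflight(checks: Dict[str, Callable[[], bool]]) -> List[str]:
    """Run checks in order and abort on the first failure.

    Returns the names of the checks that passed. Raises RuntimeError as soon
    as one check fails, so later checks and the upgrade itself never run.
    """
    passed = []
    for name, check in checks.items():
        if not check():
            raise RuntimeError(f"preflight check {name!r} failed; upgrade aborted")
        passed.append(name)
    return passed


# Hypothetical placeholders for the three checks listed above.
checks = {
    "cluster_ready": lambda: True,            # provisioned and READY
    "control_channel_healthy": lambda: True,  # OS-level channel to Controller
    "kubeadm_preflight": lambda: True,        # kubeadm's own checks
}
```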

Node Upgrades

The software binaries for the target Kubernetes version are downloaded from the Controller as a single TAR file, which is typically about 40 MB.

Post-Upgrade Validation

Post upgrade validation checks are automatically performed after the cluster is upgraded/downgraded to the new Kubernetes version. The following checks are performed and have to pass before the upgrade is deemed successful.

- Node Ready Check (i.e. did all the cluster nodes report back as READY after upgrade?)

- Pods Running Check (i.e. are all the pods in the critical namespaces running after upgrade?)

Important

Performing k8s upgrades on unhealthy clusters can result in long validation windows, because the validation step keeps retrying for 10 minutes to give the cluster and pods time to settle.
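The 10-minute retry behavior can be sketched as a deadline-bounded polling loop. The 600-second timeout comes from the note above. The 10-second polling interval is an assumption. `clock` and `sleep` are injectable so that the loop can be exercised without real waiting. `check` stands for any of the post-upgrade checks, such as "all nodes READY".

```python
import time
from typing import Callable


def wait_until_settled(
    check: Callable[[], bool],
    timeout_s: float = 600.0,  # 10 minutes
    interval_s: float = 10.0,  # assumed polling interval
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll `check` until it passes or `timeout_s` elapses.

    Returns True as soon as the check passes, False once the deadline is hit.
    """
    deadline = clock() + timeout_s
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)
```

A healthy cluster returns on the first successful poll. Only a cluster that never settles consumes the full 10 minutes.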

Successful Upgrade

The upgrade process typically takes a few minutes (3-5 minutes), depending on the number of nodes in the cluster and the network bandwidth available for software downloads. The time taken by each step is measured and displayed to the user.

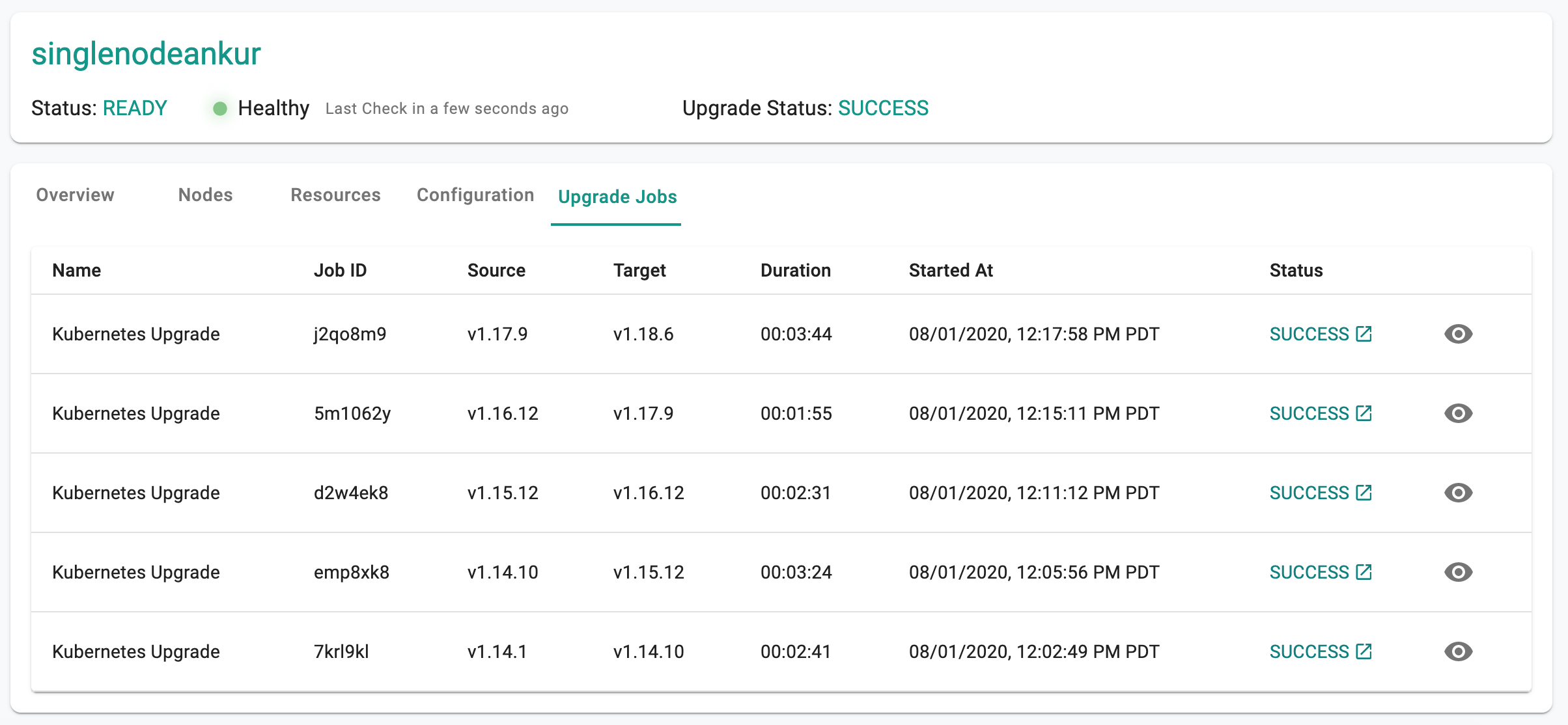
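Per-step timing like this can be sketched with a context manager that records each step's duration. The following is a minimal example using `time.perf_counter`. The step names and the `timed_step` helper are hypothetical. Recording the duration in a `finally` block means that a step that fails still shows how long it ran.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator


@contextmanager
def timed_step(name: str, timings: Dict[str, float]) -> Iterator[None]:
    """Record the wall-clock duration (seconds) of one upgrade step."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # Record the duration even if the step raised.
        timings[name] = time.perf_counter() - start


timings: Dict[str, float] = {}
with timed_step("preflight", timings):
    pass  # stand-in for the real preflight work
```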

Upgrade History

The Controller maintains a history of all successful and unsuccessful upgrades. To view it:

- Navigate to the cluster

- Click Activity

- Click the "eye" icon to display detailed information about a particular upgrade job

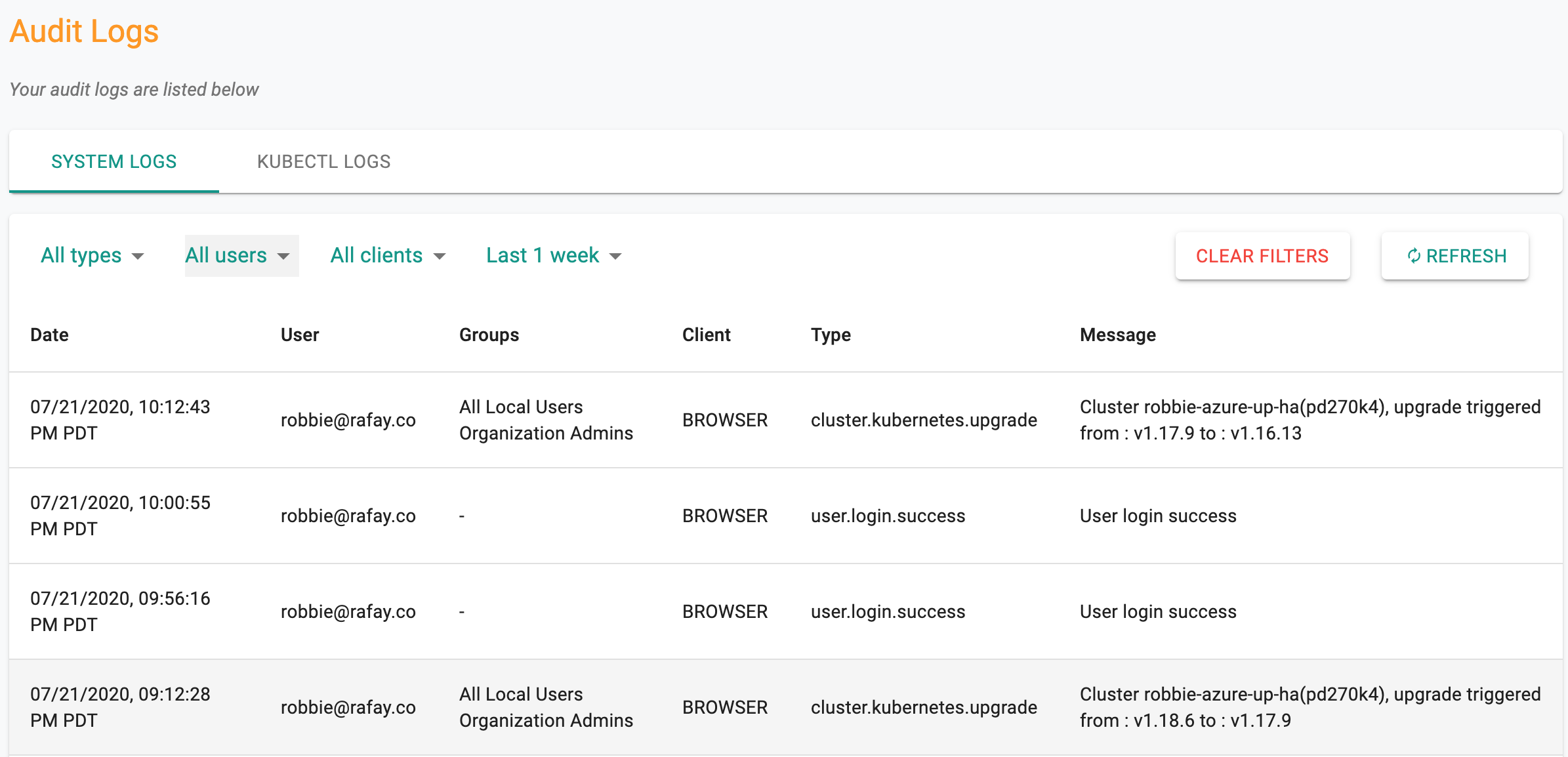

Audit Trail

An audit entry is generated whenever a Kubernetes upgrade is performed. This makes it possible to determine retroactively who performed the upgrade, when, and from which version to which version.
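The information an audit entry captures (who, when, from which version, and to which version) maps naturally onto an immutable record. The following is a sketch only. The field names and the `UpgradeAuditEntry` type are hypothetical and do not describe the Controller's actual audit schema.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class UpgradeAuditEntry:
    """Who upgraded which cluster, when, and between which versions."""

    user: str
    cluster: str
    from_version: str
    to_version: str
    timestamp: datetime
    succeeded: bool

    def summary(self) -> str:
        outcome = "upgraded" if self.succeeded else "failed to upgrade"
        return (
            f"{self.user} {outcome} {self.cluster} from {self.from_version} "
            f"to {self.to_version} at {self.timestamp.isoformat()}"
        )


entry = UpgradeAuditEntry(
    "alice@example.com", "demo-cluster", "v1.26.5", "v1.27.2",
    datetime(2023, 6, 1, 12, 0, tzinfo=timezone.utc), True,
)
```

Making the record frozen reflects the purpose of an audit trail: entries are written once and never edited.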

Understanding Component Upgrades in an Upstream Rafay MKS Cluster

Upgrading a Kubernetes cluster is a crucial process that keeps your infrastructure up to date with the latest features, bug fixes, and security patches. As part of this process, several components within the cluster are upgraded.

Components Upgraded During MKS Cluster Upgrade

The specific components that are upgraded may vary depending on the Kubernetes version and the cluster's configuration. However, the following core components are commonly upgraded during the cluster upgrade process:

- kube-apiserver

- kube-controller-manager

- kube-scheduler

- kubelet

- kube-proxy

- coredns

Periodic Upgrades on MKS Cluster Type

In addition to the upgrades performed during the standard cluster upgrade process, both CSPs and Rafay periodically upgrade specific components to maintain the cluster's overall health, performance, and security. Commonly upgraded components include:

- CNI versions

- Containerd

- Consul

- ETCD

- CNI Plugins

- Crictl

Conclusion

Keeping your MKS cluster up-to-date with component upgrades is essential to leverage the latest Kubernetes features, bug fixes, and security enhancements.

Explore our blog for deeper insights on component upgrades in an upstream MKS cluster.