Part 2: Blueprint

What Will You Do

This is Part 2 of a multi-part, self-paced quick start exercise. In this part, you will apply the "default-upstream" blueprint, which contains the managed storage add-on, to the cluster. Applying the blueprint installs Rook-Ceph into the cluster.

Apply Blueprint

First, you will apply the "default-upstream" blueprint to the cluster.

- In the console, navigate to your project

- Select Infrastructure -> Clusters

- Click the gear icon on the cluster card

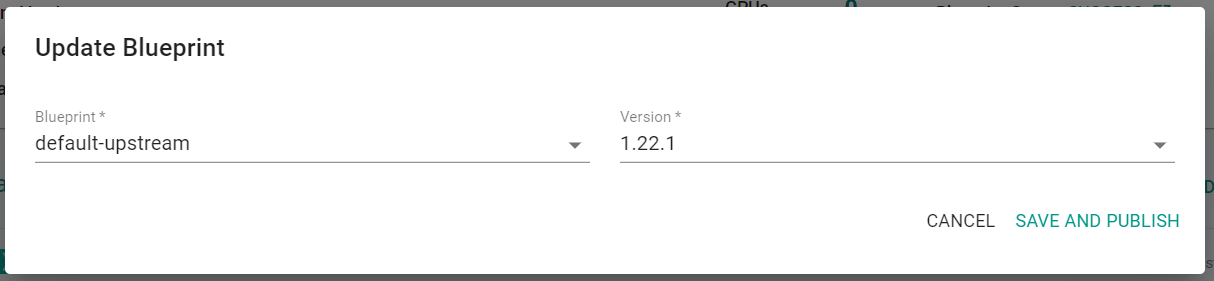

- Select "Update Blueprint"

- Select "default-upstream" for the blueprint

- Select the latest version

- Click "Save and Publish"

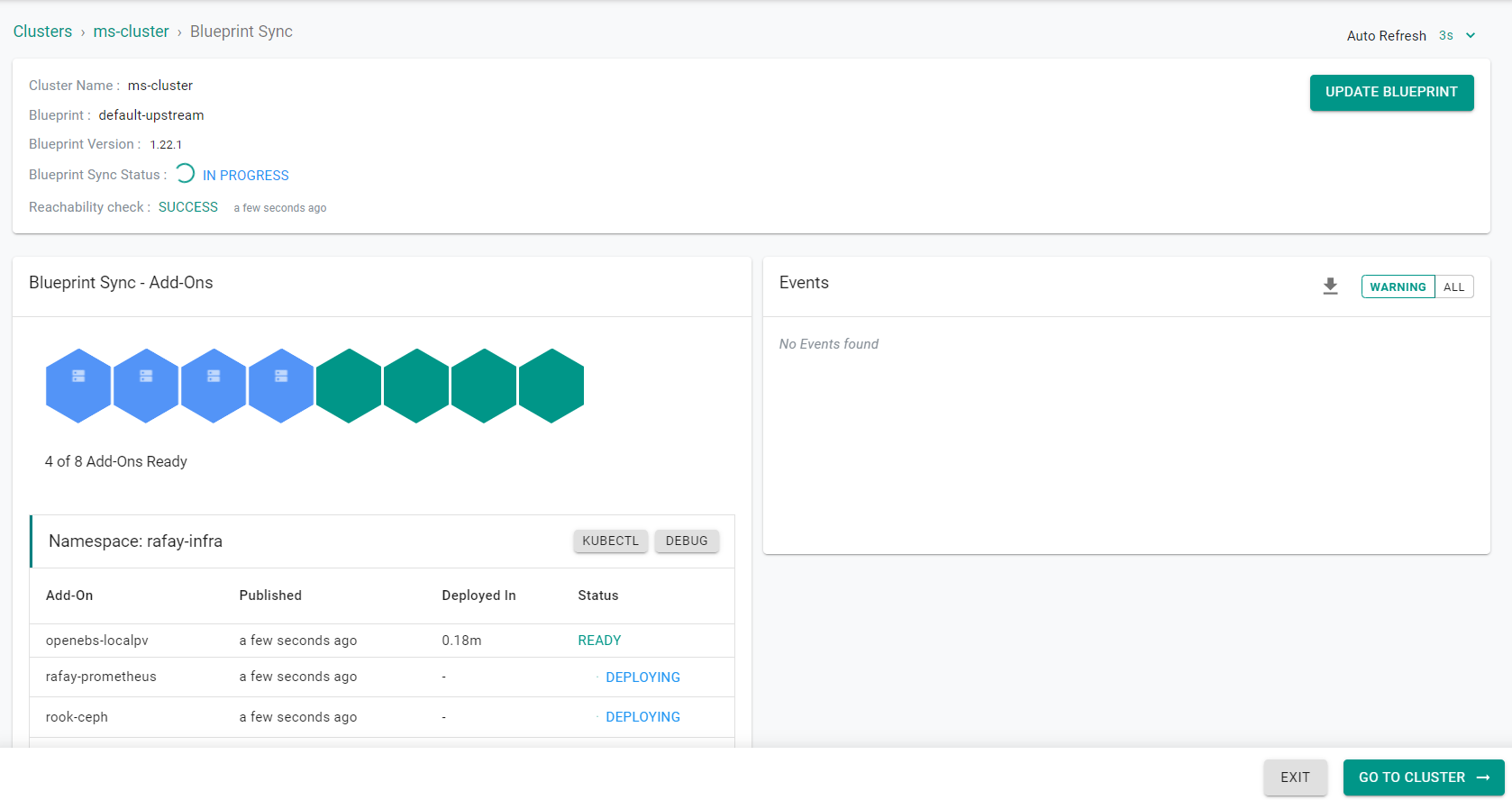

The blueprint will begin applying to the cluster.

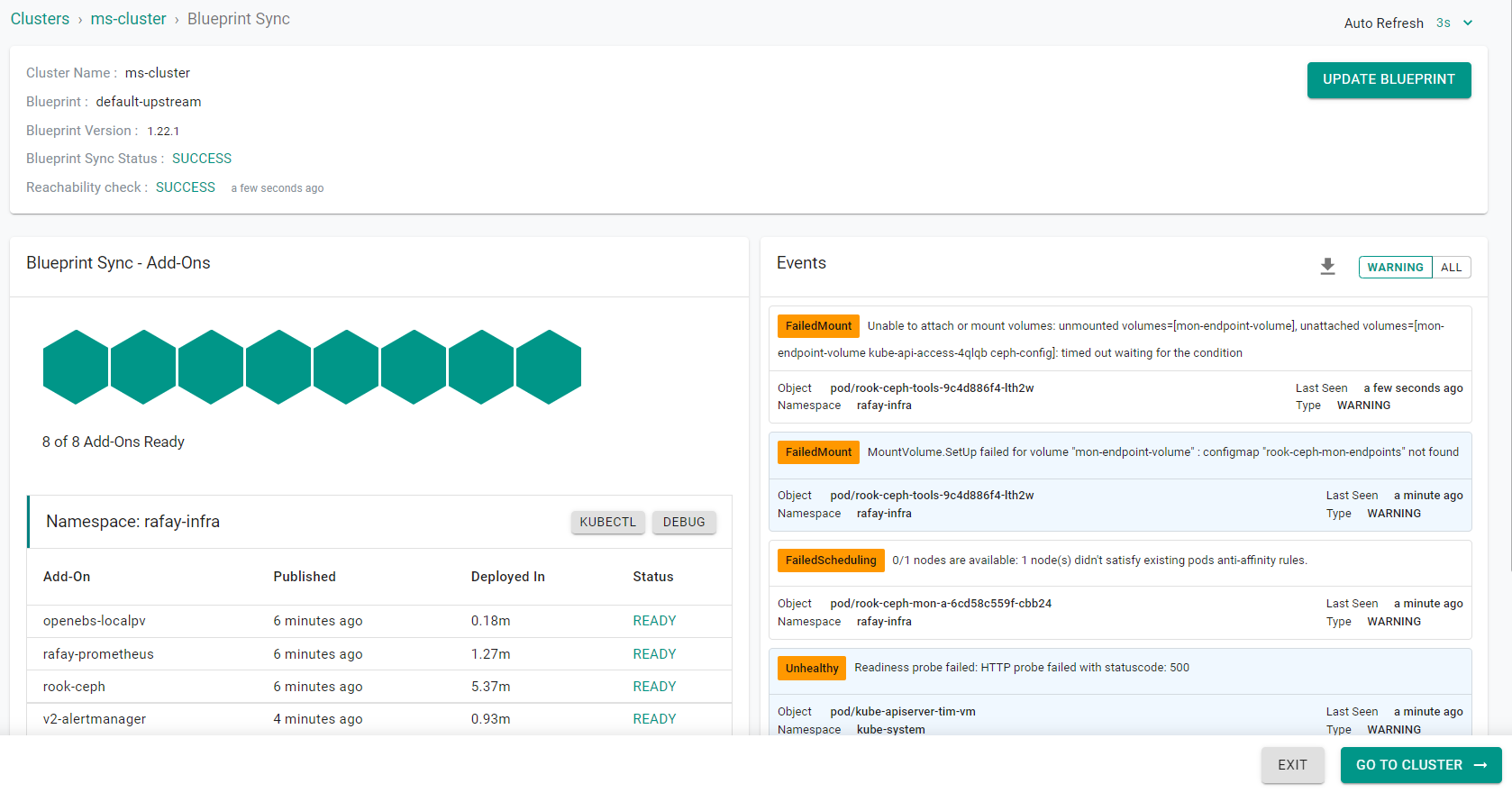

After a few minutes, the cluster will be updated to the new blueprint.

- Click "Exit"
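If you would rather confirm from the command line that the add-on pods have settled after the blueprint update, the helper below is a minimal sketch. It reads the output of `kubectl get pods --no-headers` and succeeds only when every pod whose name starts with `rook-ceph` is either `Completed` or `Running` with all containers ready. The function name `rook_pods_ready` is hypothetical, and the assumption that all relevant pods are named `rook-ceph*` in the `rafay-infra` namespace is ours, not something the console guarantees; CSI plugin pods with other names are ignored.

```shell
# Hypothetical helper: exit 0 only if all "rook-ceph*" pods are Completed,
# or Running with READY x/y where x == y. Exit 1 if none are found.
# Input: output of `kubectl get pods --no-headers`
# (columns: NAME READY STATUS RESTARTS AGE).
rook_pods_ready() {
  awk '
    $1 ~ /^rook-ceph/ {
      seen = 1
      if ($3 == "Completed") next          # one-shot jobs such as OSD prepare
      if ($3 != "Running") { bad = 1; next }
      split($2, r, "/")                    # READY column, e.g. "1/1"
      if (r[1] != r[2]) bad = 1
    }
    END { if (seen && !bad) exit 0; exit 1 }
  '
}

# Example (requires cluster access; poll every 15 seconds):
#   until kubectl -n rafay-infra get pods --no-headers | rook_pods_ready; do sleep 15; done
```

Checking the READY column as well as STATUS avoids treating a pod as healthy while its containers are still starting.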

Validate Add-On

Now, you will validate that the Rook Ceph managed system add-on is running on the cluster.

- In the console, navigate to your project

- Select Infrastructure -> Clusters

- Click the cluster name on the cluster card

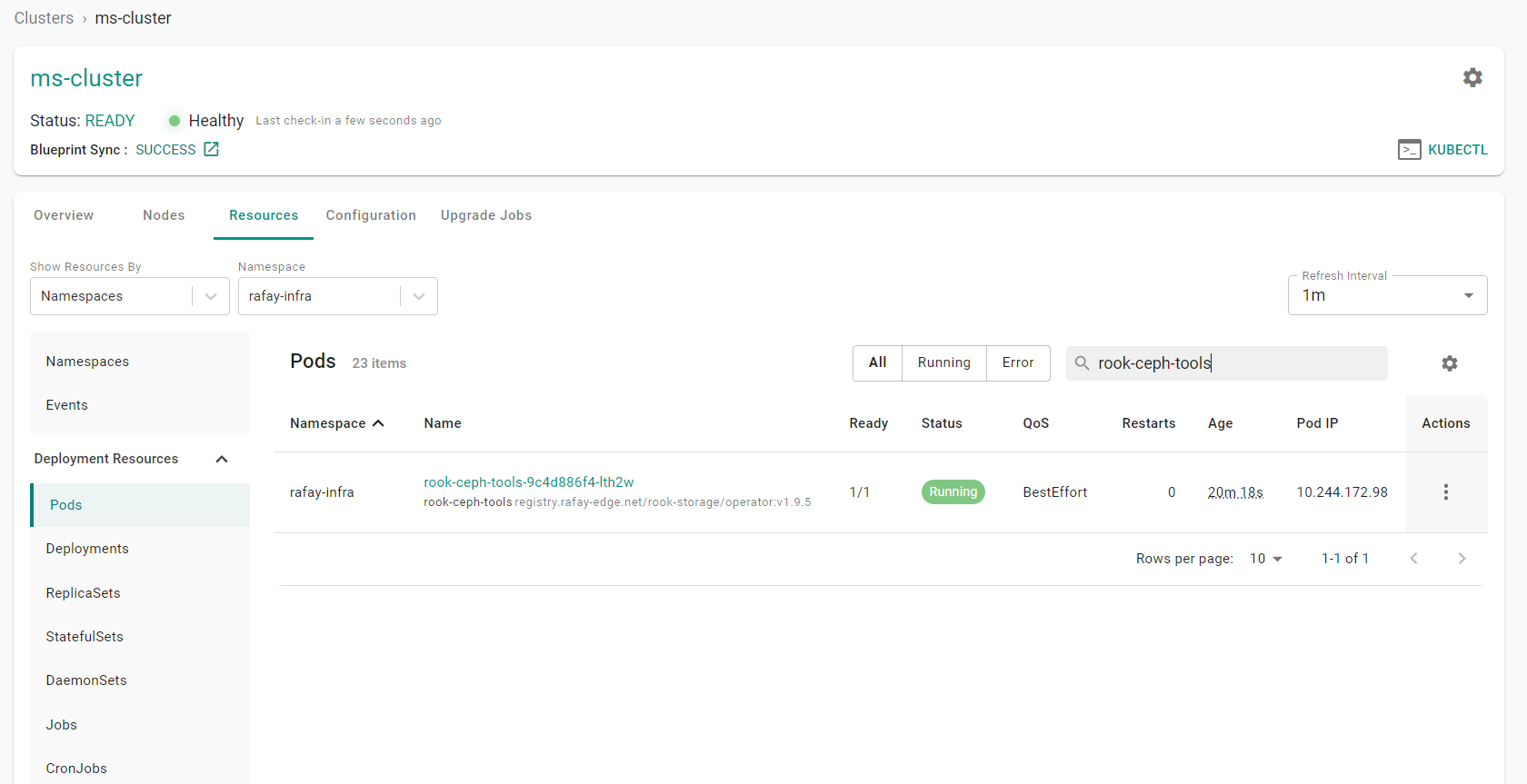

- Click the "Resources" tab

- Select "Pods" in the left-hand pane

- Select "rafay-infra" from the "Namespace" dropdown

- Enter "rook-ceph-tools" into the search box

- Click the "Actions" button

- Select "Shell and Logs"

- Click the "Exec" icon to open a shell into the container

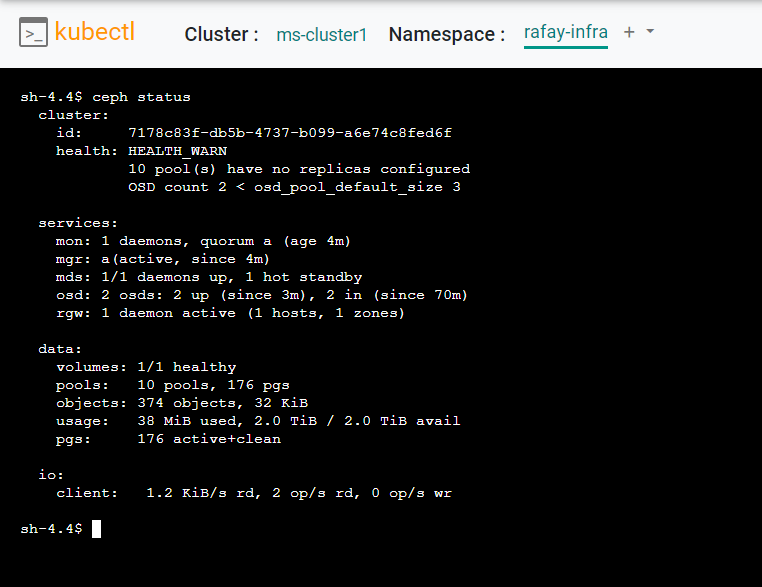
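The same check can be run from a kubectl session instead of the console's "Shell and Logs" view. A minimal sketch follows, assuming the toolbox pod's name begins with `rook-ceph-tools` (as the search in the steps above suggests) and that you can reach the `rafay-infra` namespace; `toolbox_pod` is a hypothetical helper name.

```shell
# Hypothetical helper: print the name of the first pod whose name starts
# with "rook-ceph-tools".
# Input: output of `kubectl -n rafay-infra get pods --no-headers`.
toolbox_pod() {
  awk '$1 ~ /^rook-ceph-tools/ { print $1; exit }'
}

# Example (requires cluster access; runs one command non-interactively):
#   pod=$(kubectl -n rafay-infra get pods --no-headers | toolbox_pod)
#   kubectl -n rafay-infra exec "$pod" -- ceph status
```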

- Enter the following command in the shell to check the status of the Ceph cluster:

```shell
ceph status
```
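To reduce the `ceph status` report to the two values most relevant here, the overall health and how many OSDs are up, the sketch below parses the plain-text output. It assumes the usual layout in which the cluster section has a `health:` line and the services section has a line such as `osd: 3 osds: 3 up ...`; `ceph_summary` is a hypothetical helper name. A healthy cluster typically reports `HEALTH_OK` with every OSD up.

```shell
# Hypothetical helper: summarize `ceph status` text as
# "health=<STATUS> osds=<up>/<total>".
# Assumes lines like "health: HEALTH_OK" and
# "osd: 3 osds: 3 up (since 2h), 3 in (since 2h)".
ceph_summary() {
  awk '
    $1 == "health:" { health = $2 }
    $1 == "osd:"    { total = $2; up = $4 }   # $3 is the literal "osds:"
    END { printf "health=%s osds=%s/%s\n", health, up, total }
  '
}

# Example (inside the toolbox shell):
#   ceph status | ceph_summary
```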

Now, you will confirm that the storage devices on the cluster nodes are controlled by Ceph.

- Open a shell within each node in the cluster

- Execute the following command to list all block devices:

```shell
lsblk -a
```

The output should show the raw devices you viewed previously (sdb and sdc in the example below), each now backing a Ceph OSD logical volume.

```
NAME        MAJ:MIN RM   SIZE RO TYPE  MOUNTPOINTS
loop0         7:0    0  55.6M  1 loop  /snap/core18/2667
loop1         7:1    0  55.6M  1 loop  /snap/core18/2697
loop2         7:2    0  63.3M  1 loop  /snap/core20/1778
loop3         7:3    0  63.3M  1 loop  /snap/core20/1822
loop4         7:4    0 111.9M  1 loop  /snap/lxd/24322
loop5         7:5    0  50.6M  1 loop  /snap/oracle-cloud-agent/48
loop6         7:6    0  52.2M  1 loop  /snap/oracle-cloud-agent/50
loop7         7:7    0  49.6M  1 loop  /snap/snapd/17883
loop8         7:8    0  49.8M  1 loop  /snap/snapd/18357
loop9         7:9    0     0B  0 loop
sda           8:0    0  46.6G  0 disk
├─sda1        8:1    0  46.5G  0 part  /var/lib/kubelet/pods/1270387a-9525-4286-854d-0ab5bca5df87/volume-subpaths/kubernetesconf/reloader/1
│                                      /var/lib/kubelet/pods/1270387a-9525-4286-854d-0ab5bca5df87/volume-subpaths/kubernetesconf/fluentd/4
│                                      /
├─sda14       8:14   0     4M  0 part
└─sda15       8:15   0   106M  0 part  /boot/efi
sdb           8:16   0     1T  0 disk
└─ceph--ba1c0a57--d96e--467a--a3b9--1b61ec70ab7c-osd--block--c3ab1b1d--cd3f--470b--ad2e--1da594a0e424
            253:2    0  1024G  0 lvm
  └─GLCh4v-svKt-SuOc-5r2n-ft8V-Q1ef-lqdD9P
            253:3    0  1024G  0 crypt
sdc           8:32   0     1T  0 disk
└─ceph--3bf147eb--98c4--4c7c--b1ff--b4598b55d195-osd--block--c2262605--d314--4cce--9cac--ef3d0131d98c
            253:0    0  1024G  0 lvm
  └─qDlkR4-qRbD-UncQ-Ytcs-EMDS-rOjG-rQIKHb
            253:1    0  1024G  0 crypt
nbd0         43:0    0     0B  0 disk
nbd1         43:32   0     0B  0 disk
nbd2         43:64   0     0B  0 disk
nbd3         43:96   0     0B  0 disk
nbd4         43:128  0     0B  0 disk
nbd5         43:160  0     0B  0 disk
nbd6         43:192  0     0B  0 disk
nbd7         43:224  0     0B  0 disk
nbd8         43:256  0     0B  0 disk
nbd9         43:288  0     0B  0 disk
nbd10        43:320  0     0B  0 disk
nbd11        43:352  0     0B  0 disk
nbd12        43:384  0     0B  0 disk
nbd13        43:416  0     0B  0 disk
nbd14        43:448  0     0B  0 disk
nbd15        43:480  0     0B  0 disk
```
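On nodes with many devices it can be easier to ask `lsblk` directly which disks sit under a Ceph OSD volume. The sketch below is one way to do that, assuming the OSD logical volumes keep the `ceph--...-osd--block--...` naming seen in the output above; `ceph_osd_disks` is a hypothetical helper name. It uses `lsblk`'s raw, header-less mode with the `NAME`, `PKNAME` (parent kernel name) and `TYPE` columns, so each LVM row names the disk it lives on.

```shell
# Hypothetical helper: print each disk that directly backs a Ceph OSD LVM volume.
# Input: output of `lsblk -rno NAME,PKNAME,TYPE`.
# Assumes OSD volumes are named "ceph--<vg>-osd--block--<lv>".
ceph_osd_disks() {
  awk '$3 == "lvm" && $1 ~ /^ceph--.*-osd--block--/ { print $2 }' | sort -u
}

# Example (on a cluster node):
#   lsblk -rno NAME,PKNAME,TYPE | ceph_osd_disks
```

For the node shown above, this would be expected to list `sdb` and `sdc`.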

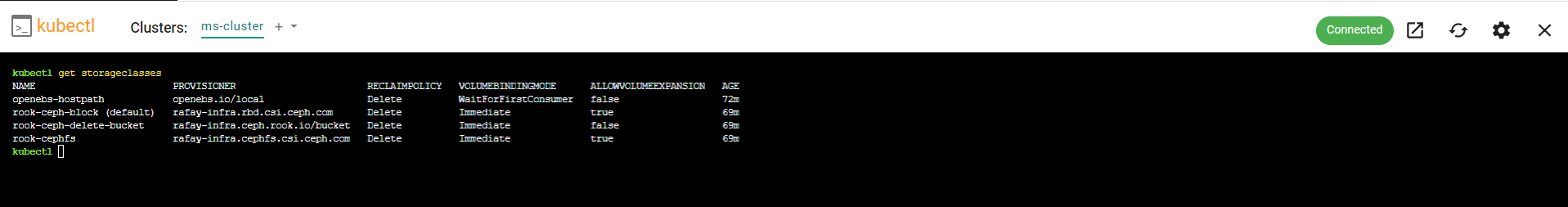

Now, you will view the storage classes created by Rook Ceph in the cluster.

- In the console, navigate to your project

- Select Infrastructure -> Clusters

- Click "kubectl" on the cluster card

- Enter the following command:

```shell
kubectl get storageclasses
```

You will see the three storage classes created by Rook Ceph. Each class maps to a particular type of storage being exposed: block, file, and object.
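To see which class serves which storage type, you can look at each class's provisioner. The sketch below assumes Rook's usual provisioner naming, where the prefix is the operator namespace: `<namespace>.rbd.csi.ceph.com` for block, `<namespace>.cephfs.csi.ceph.com` for file, and `<namespace>.ceph.rook.io/bucket` for object. `classify_storageclasses` is a hypothetical helper name, and the class names in any output depend on your blueprint. It reads `custom-columns` output rather than the default table, because the default table appends `(default)` to the default class's name.

```shell
# Hypothetical helper: label each Rook Ceph storage class as block, file or object
# by its provisioner suffix. Classes from other provisioners are skipped.
# Input: output of
#   kubectl get storageclasses -o custom-columns=NAME:.metadata.name,PROVISIONER:.provisioner --no-headers
classify_storageclasses() {
  awk '
    $2 ~ /\.rbd\.csi\.ceph\.com$/    { print $1, "block";  next }
    $2 ~ /\.cephfs\.csi\.ceph\.com$/ { print $1, "file";   next }
    $2 ~ /\.ceph\.rook\.io\/bucket$/ { print $1, "object"; next }
  '
}
```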

Recap

At this point, you have applied the blueprint containing the Rook Ceph managed system add-on to the cluster and verified that the add-on is deployed and running.