Test

In this section, you will configure and deploy a deliberately "problematic" workload to the cluster that uses the K8sGPT blueprint. You will then verify whether K8sGPT identifies the issue and recommends a resolution.

Step 1: Create Namespace

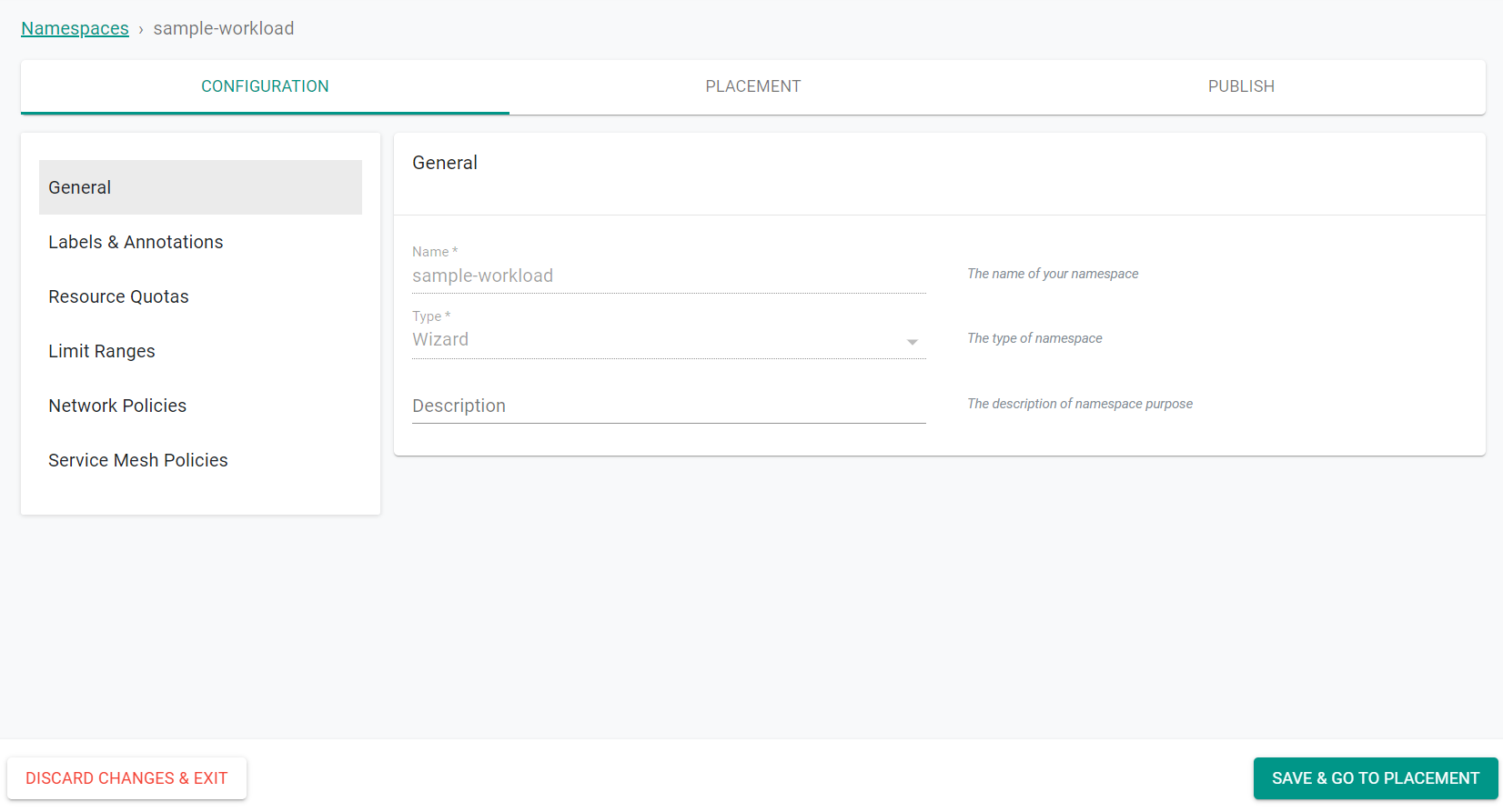

In this step, you will create a namespace for a sample workload that will be published to the cluster. The sample workload will be used to see how K8sGPT scans for and identifies issues.

- Select Infrastructure -> Namespaces

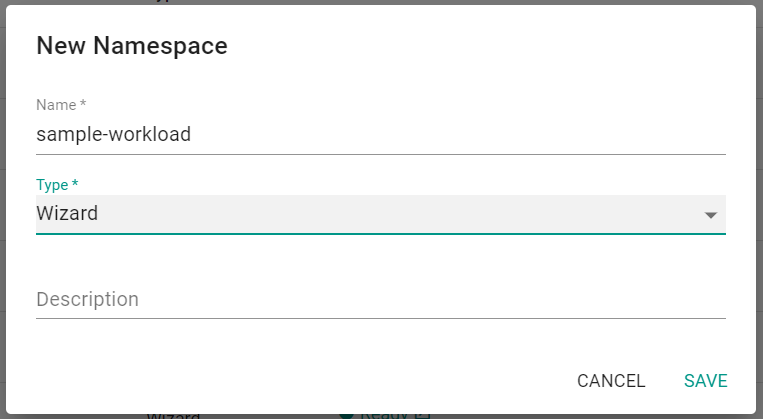

- Click New Namespace

- Enter the name sample-workload

- Select wizard for the type

- Click Save

- Click Save & Go to Placement

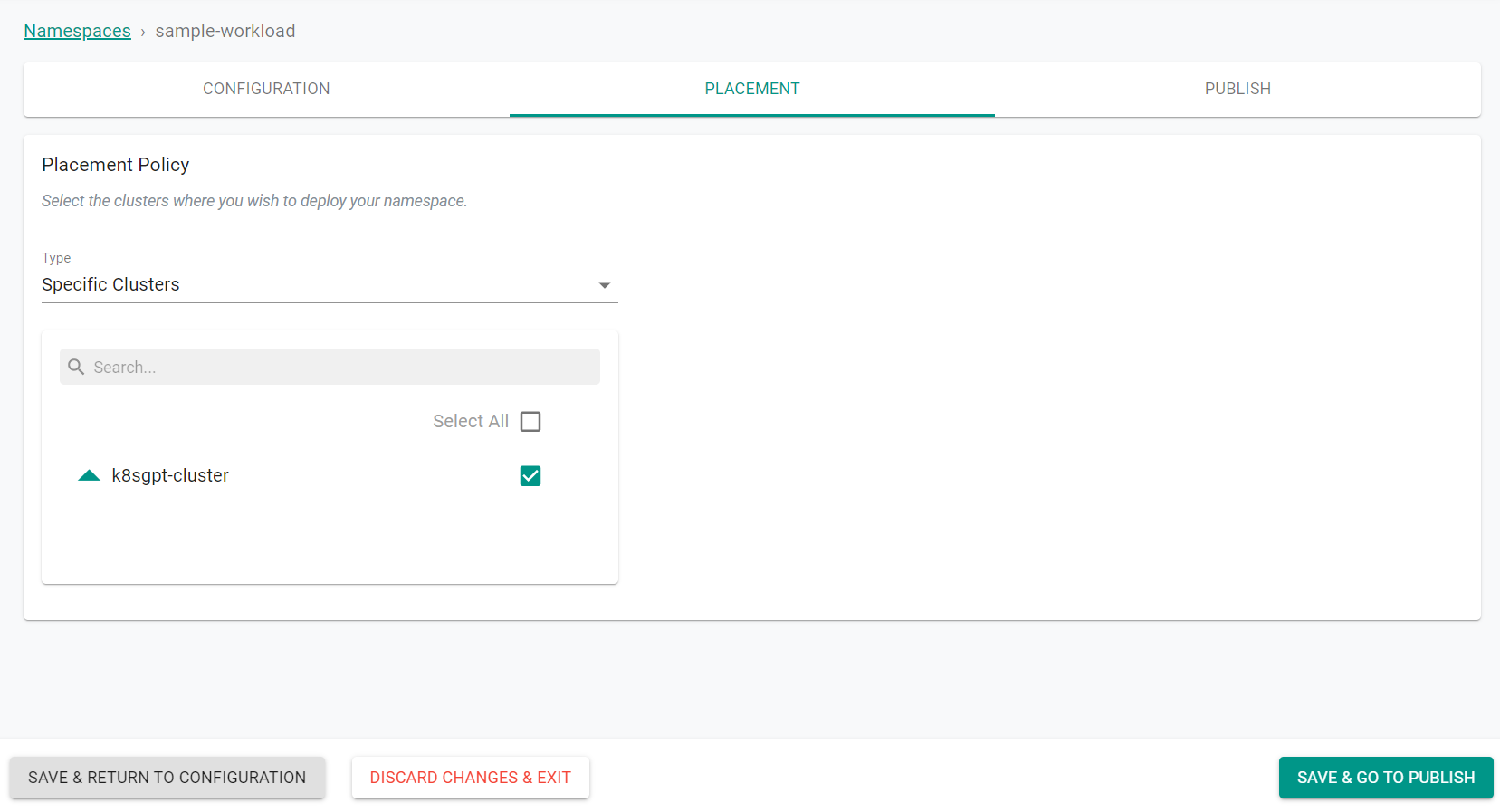

- Select the cluster where the K8sGPT operator is installed

- Click Save & Go to Publish

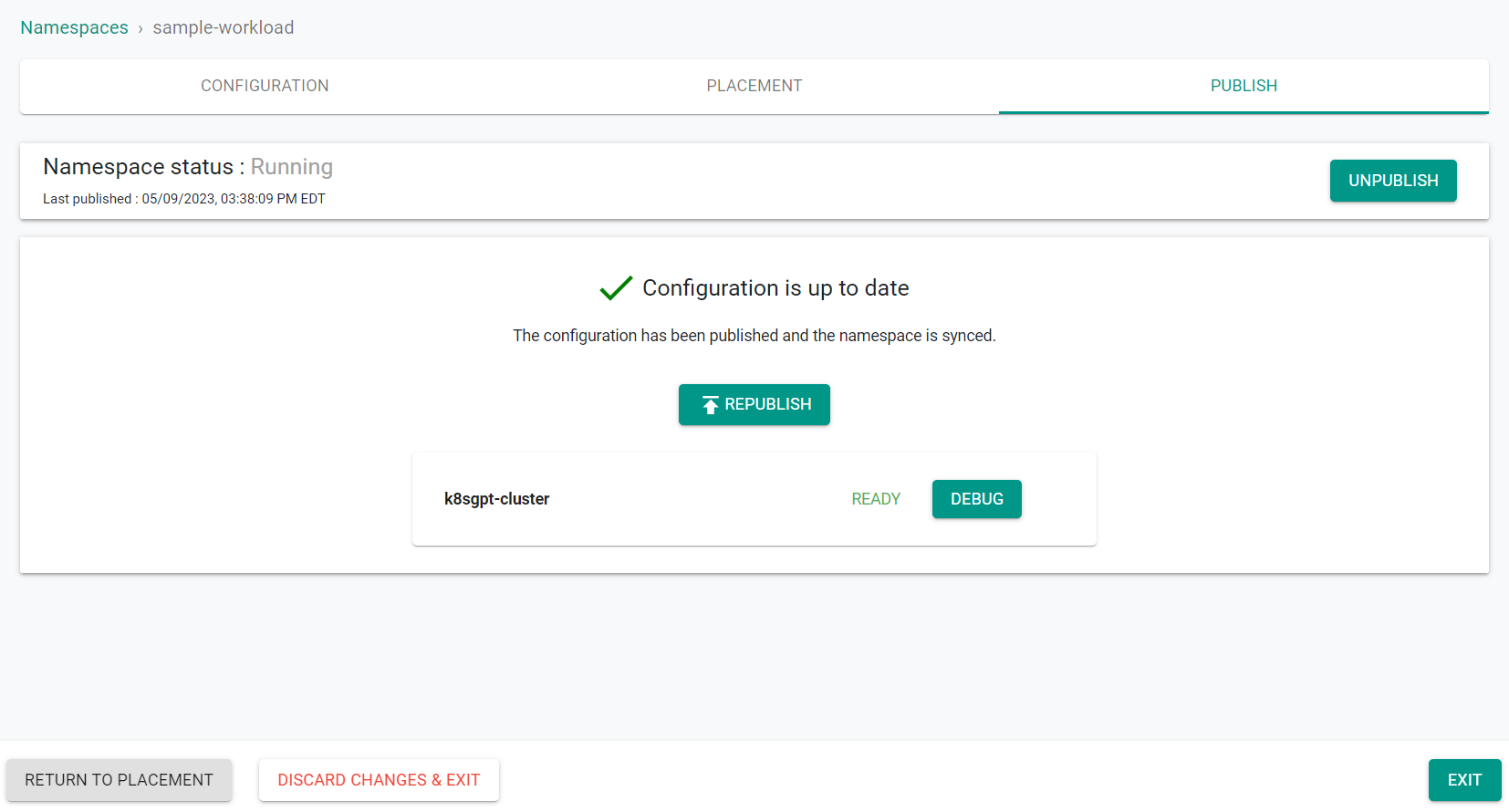

- Click Publish

- Click Exit

Step 2: Create Workload

In this step, you will create a workload that will be used to test the K8sGPT operator.

- Save the below YAML to a file named sample-workload.yaml

Note

This Service contains a selector that does not match any existing pod, so the Service has no endpoints. K8sGPT will detect this.

    apiVersion: v1
    kind: Service
    metadata:
      name: myapp
    spec:
      type: ClusterIP
      ports:
      - name: myapp
        protocol: TCP
        port: 80
      selector:
        app: nonexistentapp
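To illustrate why this Service ends up without endpoints, the following minimal Python sketch mimics equality-based label selection. It is an illustration only, not the Kubernetes implementation; the helper functions and the pod names and labels are hypothetical and exist only for this example.

```python
# Illustrative sketch only: mimics equality-based label selection,
# it is not the actual Kubernetes implementation.


def selector_matches(selector: dict, pod_labels: dict) -> bool:
    """Return True when every key/value pair in the selector is present on the pod."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


def select_pods(selector: dict, pods: dict) -> list:
    """Return the names of the pods whose labels satisfy the selector."""
    return [name for name, labels in pods.items() if selector_matches(selector, labels)]


# Selector copied from sample-workload.yaml.
service_selector = {"app": "nonexistentapp"}

# Hypothetical pods; these names and labels are made up for the example.
pods = {
    "frontend-1": {"app": "frontend"},
    "backend-1": {"app": "backend", "tier": "api"},
}

matching = select_pods(service_selector, pods)
print(matching)  # no pod carries app=nonexistentapp, so the list is empty
```

Because no pod carries the label app=nonexistentapp, nothing backs the Service, which is the condition K8sGPT is expected to report.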

- Select Applications -> Workloads

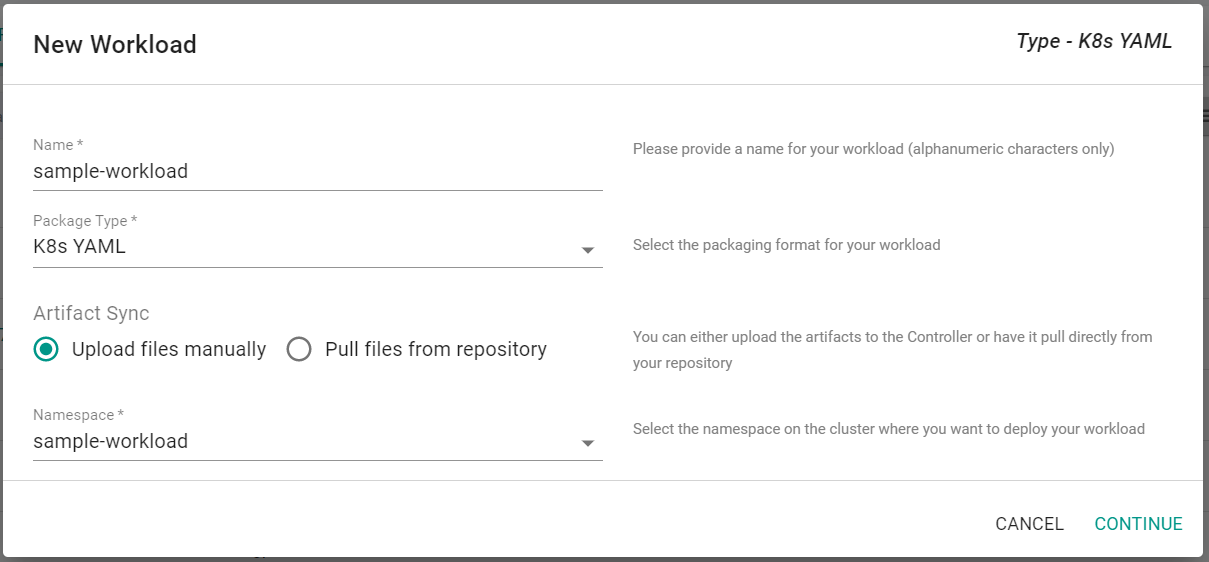

- Click New Workload -> Create New Workload

- Enter a name for the workload

- Select K8s YAML for the package type

- Select Upload files manually

- Select sample-workload for the namespace

- Click Continue

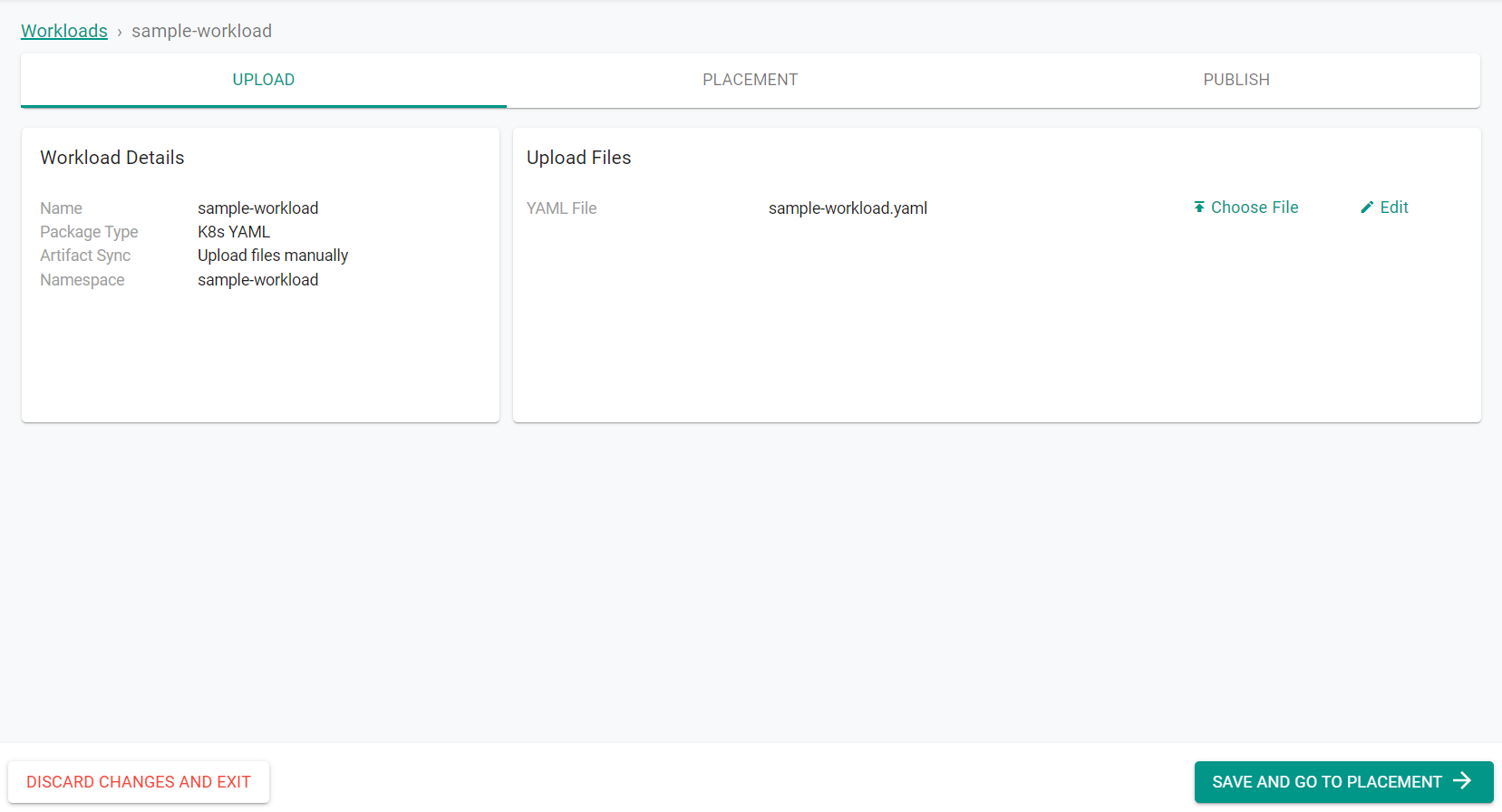

- Click Choose File and select the previously saved sample-workload.yaml file

- Click Save & Go to Placement

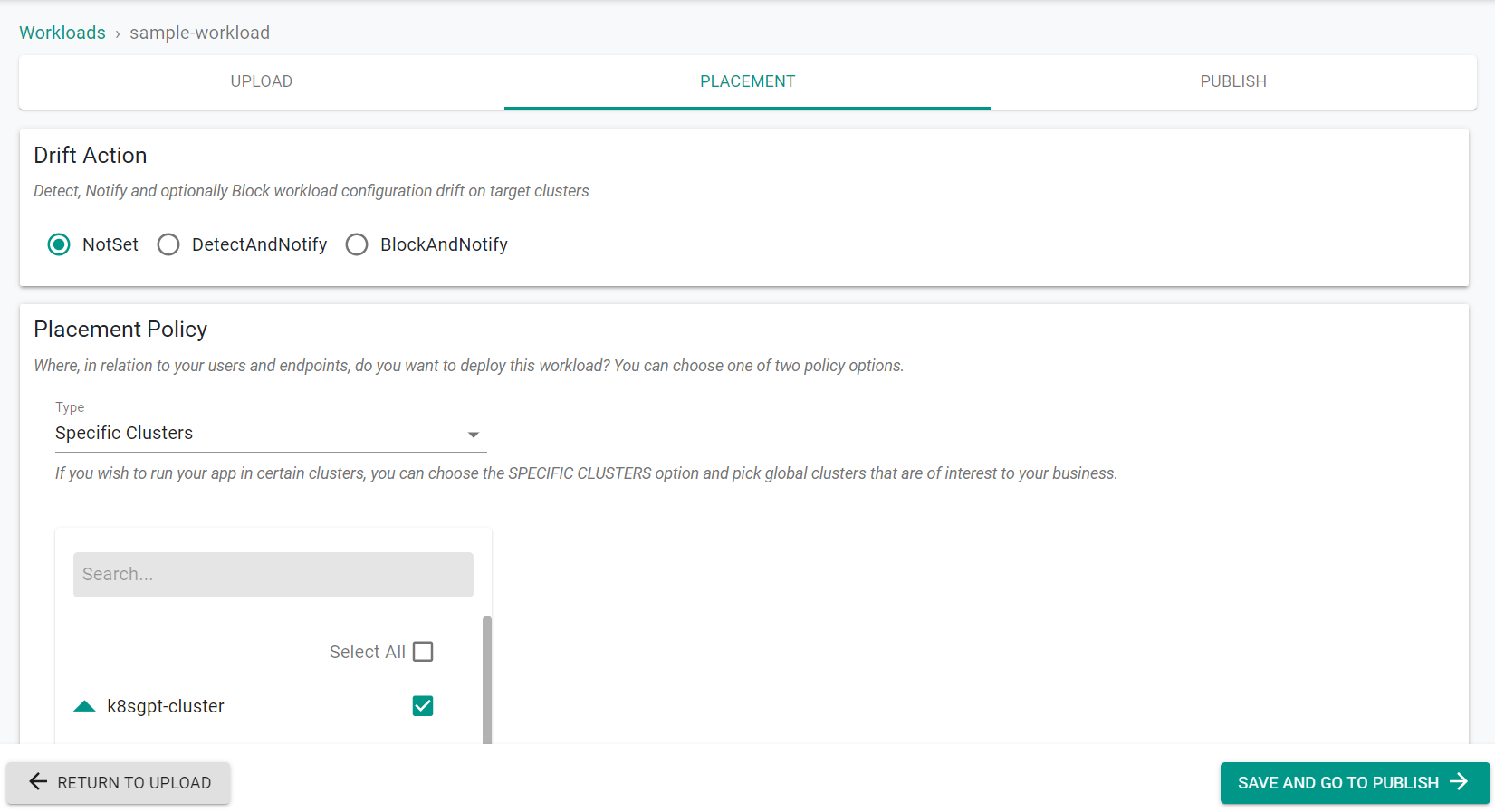

- Select the cluster where the K8sGPT operator is installed

- Click Save & Go to Publish

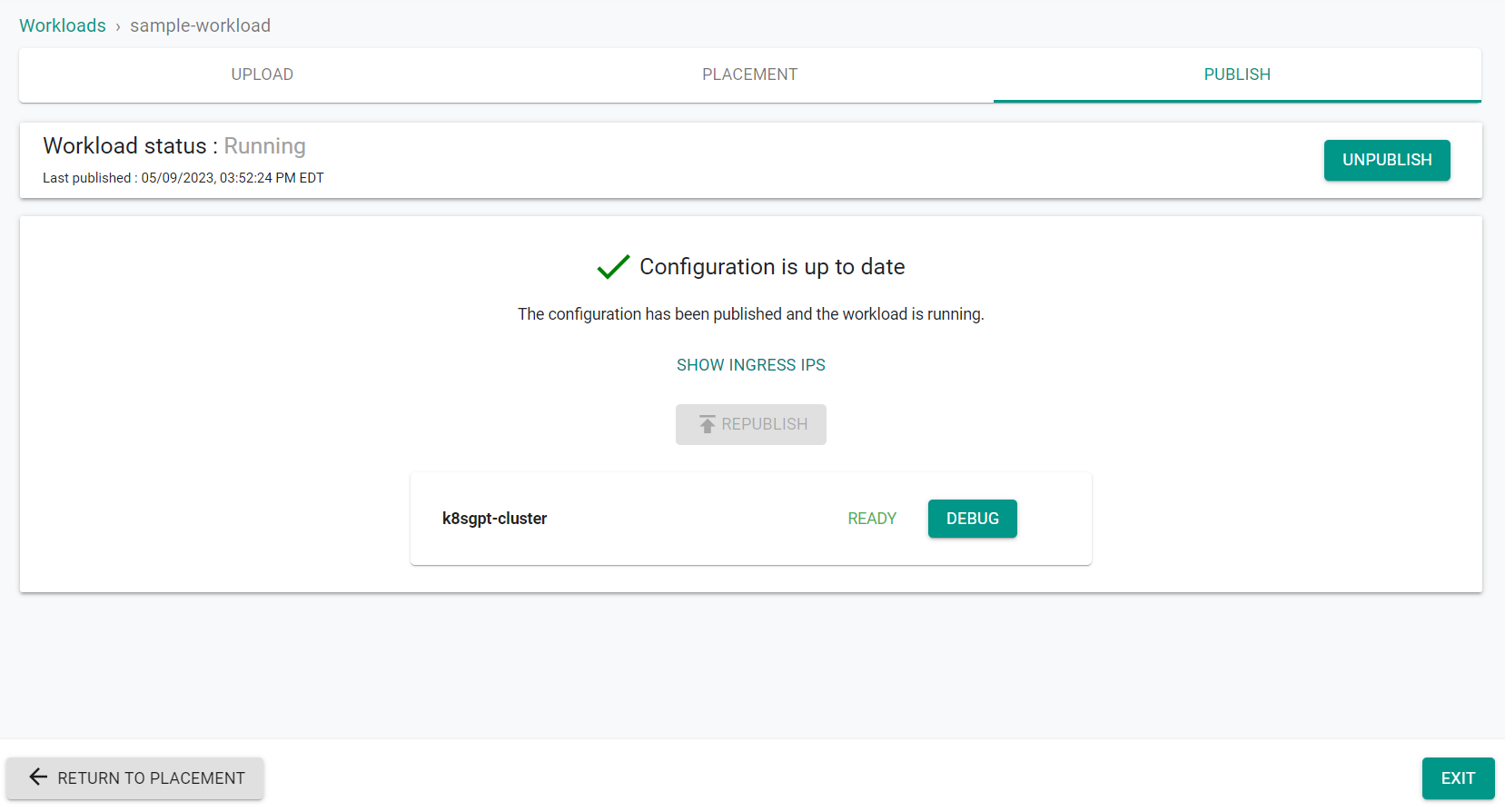

- Click Publish

- Click Exit

Step 3: View K8sGPT Results

In this step, you will view the results produced by the K8sGPT operator.

- Execute the following command

kubectl get results -A -o json

You should see output similar to the following, which describes the issue and a possible resolution in plain language.

    {
        "apiVersion": "v1",
        "items": [
            {
                "apiVersion": "core.k8sgpt.ai/v1alpha1",
                "kind": "Result",
                "metadata": {
                    "creationTimestamp": "2023-05-09T19:55:28Z",
                    "generation": 1,
                    "name": "sampleworkloadmyapp",
                    "namespace": "kube-system",
                    "resourceVersion": "54316",
                    "uid": "76646698-a0f1-4632-9aad-2b0de48b2b44"
                },
                "spec": {
                    "details": "The service is looking for endpoints with the label \"app=nonexistentapp\", but it cannot find any. \n\nThe solution is to make sure that the label \"app=nonexistentapp\" is correctly assigned to one or more pods that are running the application. This can be done by checking the labels of the pods using the \"kubectl get pods --show-labels\" command and verifying that the \"app=nonexistentapp\" label is present. If it is not present, add it using the \"kubectl label pods \u003cpod-name\u003e app=nonexistentapp\" command. Once the label is correctly assigned, the endpoints should be discovered by the service and the error should be resolved.",
                    "error": [
                        {
                            "sensitive": [
                                {
                                    "masked": "XUBj",
                                    "unmasked": "app"
                                },
                                {
                                    "masked": "azUvWklzT0pEUU1lMjA=",
                                    "unmasked": "nonexistentapp"
                                }
                            ],
                            "text": "Service has no endpoints, expected label app=nonexistentapp"
                        }
                    ],
                    "kind": "Service",
                    "name": "sample-workload/myapp",
                    "parentObject": ""
                }
            }
        ],
        "kind": "List",
        "metadata": {
            "resourceVersion": ""
        }
    }
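When several results are returned, the raw JSON can be tedious to read. The following is a minimal Python sketch, assuming the output has the same shape as the sample above, that extracts the affected resource, the error text, and the suggested resolution from each item. The `summarize` function is a hypothetical helper written for this example, and the embedded sample is a trimmed copy of the output with the `details` text shortened.

```python
import json

# Trimmed copy of the sample output above; "details" is shortened for brevity.
raw = r"""
{
  "apiVersion": "v1",
  "kind": "List",
  "items": [
    {
      "apiVersion": "core.k8sgpt.ai/v1alpha1",
      "kind": "Result",
      "metadata": {"name": "sampleworkloadmyapp", "namespace": "kube-system"},
      "spec": {
        "details": "The service is looking for endpoints with the label \"app=nonexistentapp\", but it cannot find any.",
        "error": [
          {"text": "Service has no endpoints, expected label app=nonexistentapp"}
        ],
        "kind": "Service",
        "name": "sample-workload/myapp"
      }
    }
  ]
}
"""


def summarize(result_list: dict) -> list:
    """Hypothetical helper: reduce each Result item to the fields a reader needs."""
    summaries = []
    for item in result_list.get("items", []):
        spec = item.get("spec", {})
        summaries.append({
            "resource": f'{spec.get("kind")} {spec.get("name")}',
            "errors": [err.get("text", "") for err in spec.get("error", [])],
            "details": spec.get("details", ""),
        })
    return summaries


summaries = summarize(json.loads(raw))
for summary in summaries:
    print(summary["resource"])
    for error_text in summary["errors"]:
        print("  error:", error_text)
    print("  details:", summary["details"])
```

On a live cluster, the same function could be applied to the JSON produced by `kubectl get results -A -o json` instead of the embedded sample.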

Recap

Congratulations! You have successfully deployed the K8sGPT operator on your managed Kubernetes cluster as an add-on in a custom cluster blueprint. You then tested the K8sGPT operator against a deliberately misconfigured workload.