Vclusters

Overview¶

Virtual Clusters are fully working Kubernetes clusters that run on top of other Kubernetes clusters. Compared to fully separate "real" clusters, virtual clusters reuse worker nodes and networking of the host cluster. They have their own control plane and schedule all workloads into a single namespace of the host cluster. Like virtual machines, virtual clusters partition a single physical cluster into multiple separate ones.

As part of the development process, engineers often still need access to cluster-scoped resources such as cluster roles, shared CRDs, or persistent volumes. Because virtual clusters can be configured independently of the physical cluster, they are well suited for engineering teams that want to run experiments, run continuous integration, or set up sandbox environments.

What Will You Do¶

In this exercise,

- You will assign a workspace admin role to a user

- You will then create a namespace and provision a K3s based virtual cluster (vcluster) in that namespace

Important

This tutorial describes the steps to create a virtual cluster using the Web Console. The entire workflow can also be fully automated and embedded into an automation pipeline.

Assumptions¶

- You have already provisioned or imported a Kubernetes cluster using the controller

Enforcing Governance¶

There are two primary ways to enforce governance, especially in shared cluster environments today:

- Platform teams can leverage the Workspace Admin role to enable a self-service model for application teams. This ensures that application teams can only create new namespaces and manage resources in those specific namespaces (including creating vclusters) but do not have cluster-wide privileges for the host cluster

- Platform teams can also create multiple projects in the platform (one for each application team), share the host cluster or host cluster groups across those projects and enforce resource quotas

More information on the "workspace as a service" capability is available here

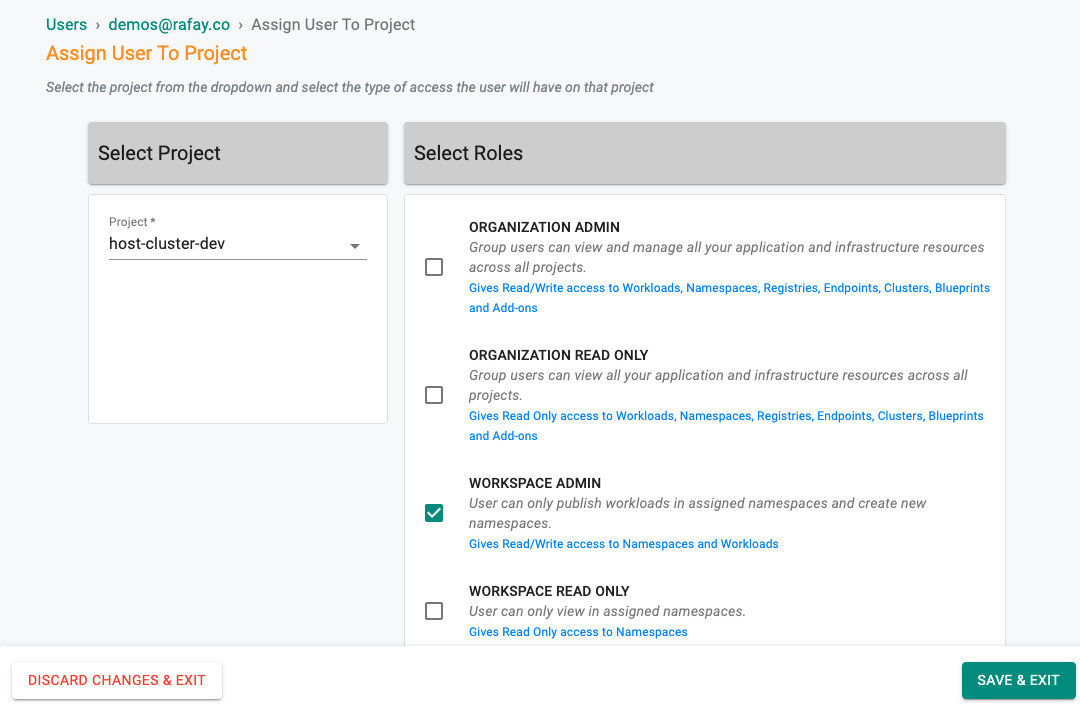

Step 1: Assign Workspace Admin role to user¶

- Log in to the Web Console as an Org Admin

- Select System -> Users

- Search and click the desired user

- Select the Projects Tab

- Click Assign User to Project

- Select the project from the dropdown

- Assign the Workspace Admin role to the user

- Click Save & Exit

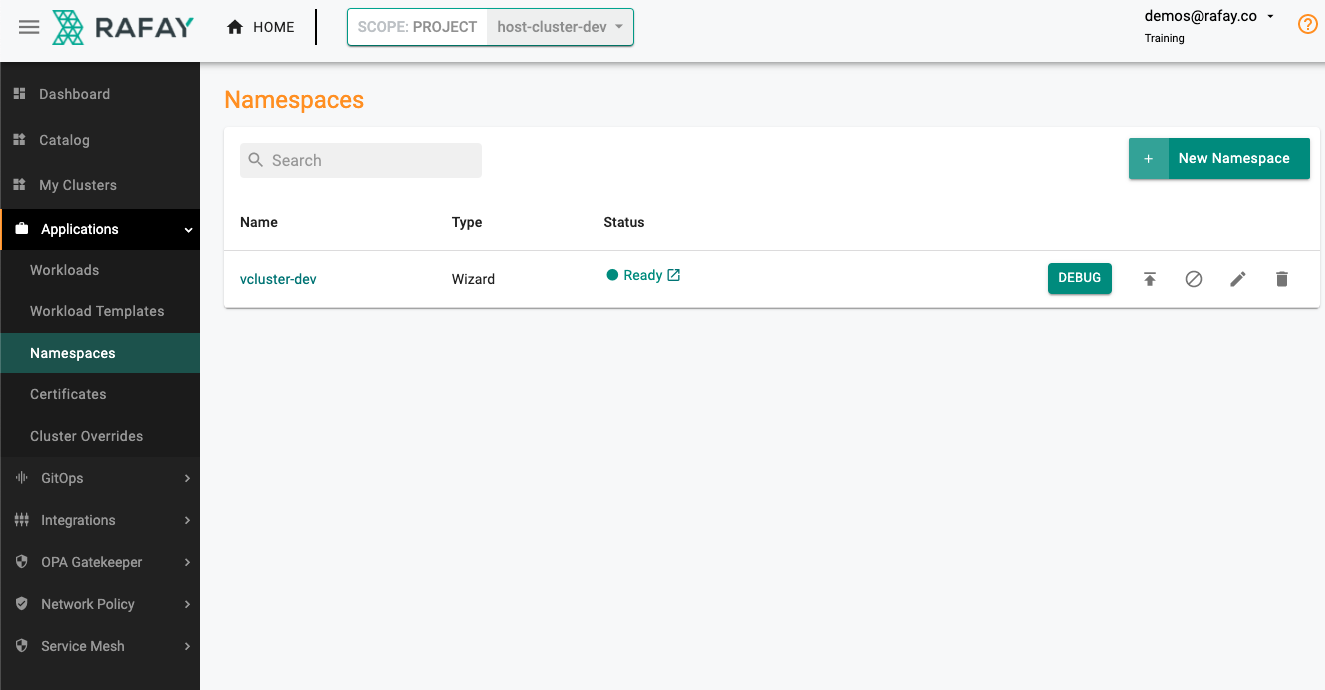

Step 2: Create the host namespace for the vcluster¶

- Log in to the Web Console as the Workspace Admin user

- Navigate to the Namespaces page

- Create a new namespace, specify the name (e.g. vcluster-dev) and select type as Wizard

- In the placement section, select the host cluster (e.g. host-cluster-dev)

- Click Save & Go to Publish

- Publish the namespace

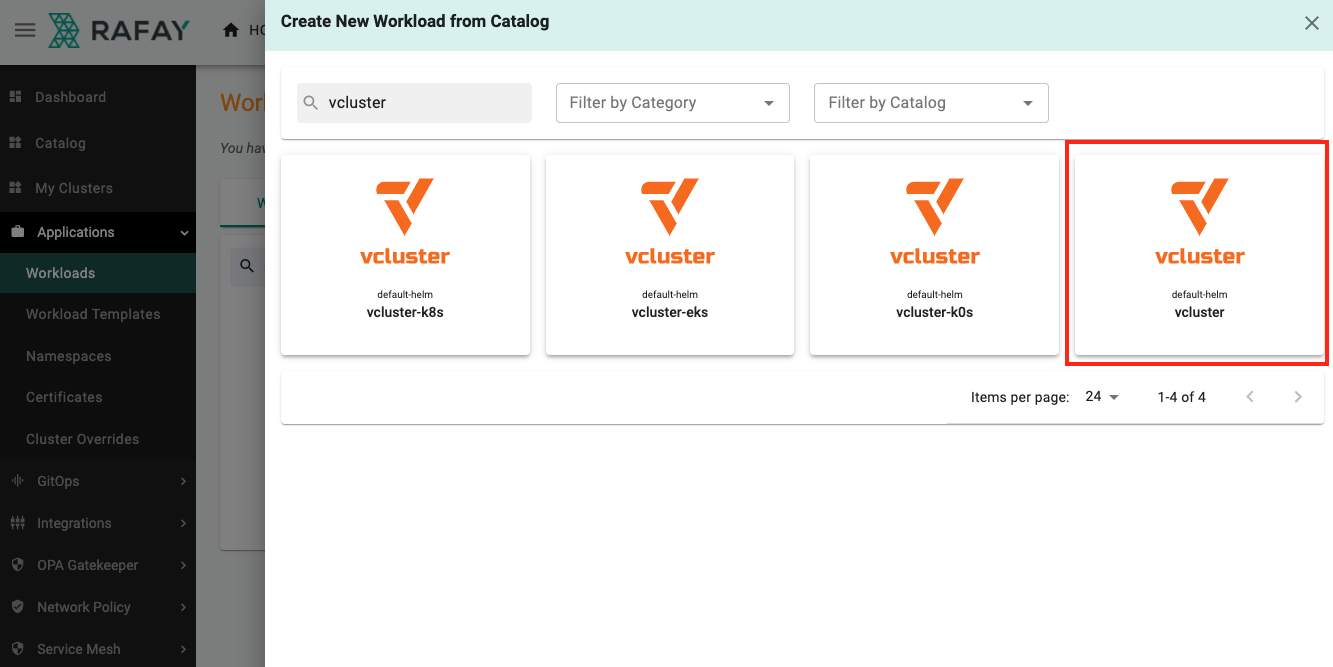

Step 3: Create a vcluster¶

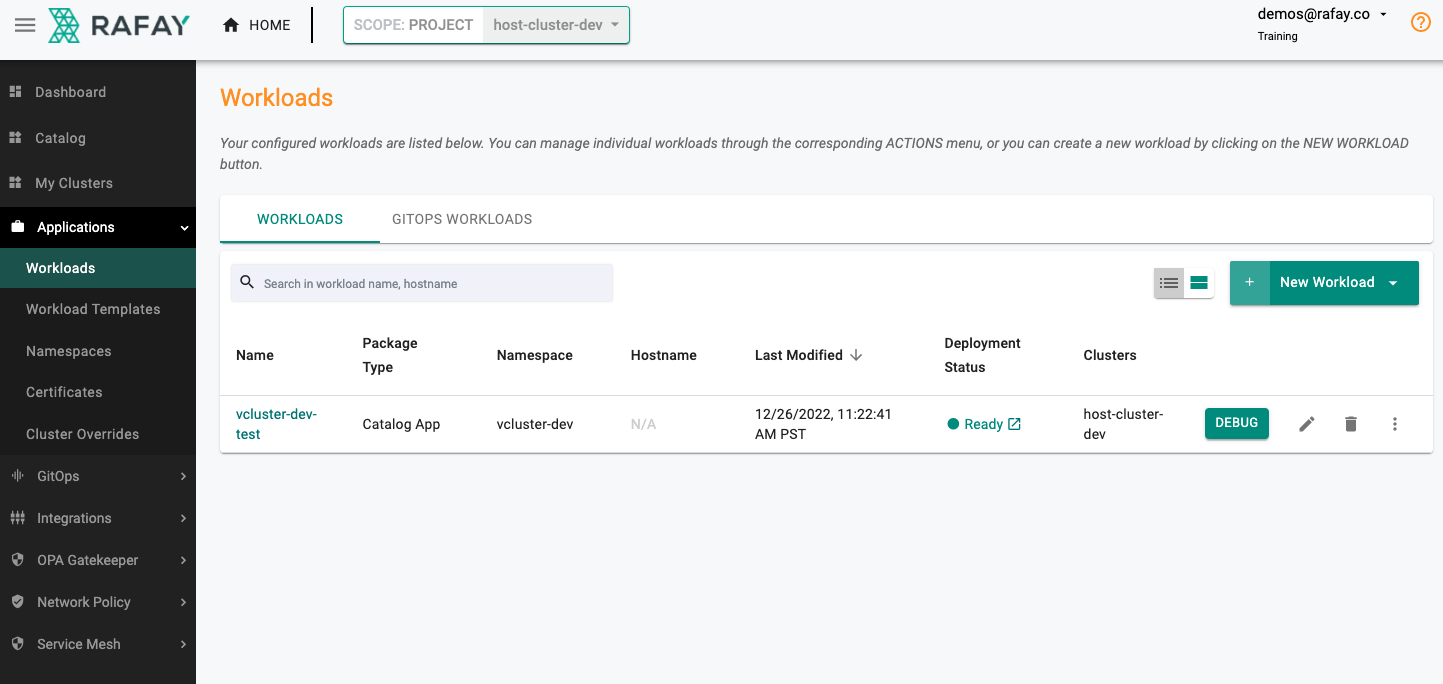

- Navigate to the Workloads page

- Create a new Workload from Catalog

- Search for vcluster

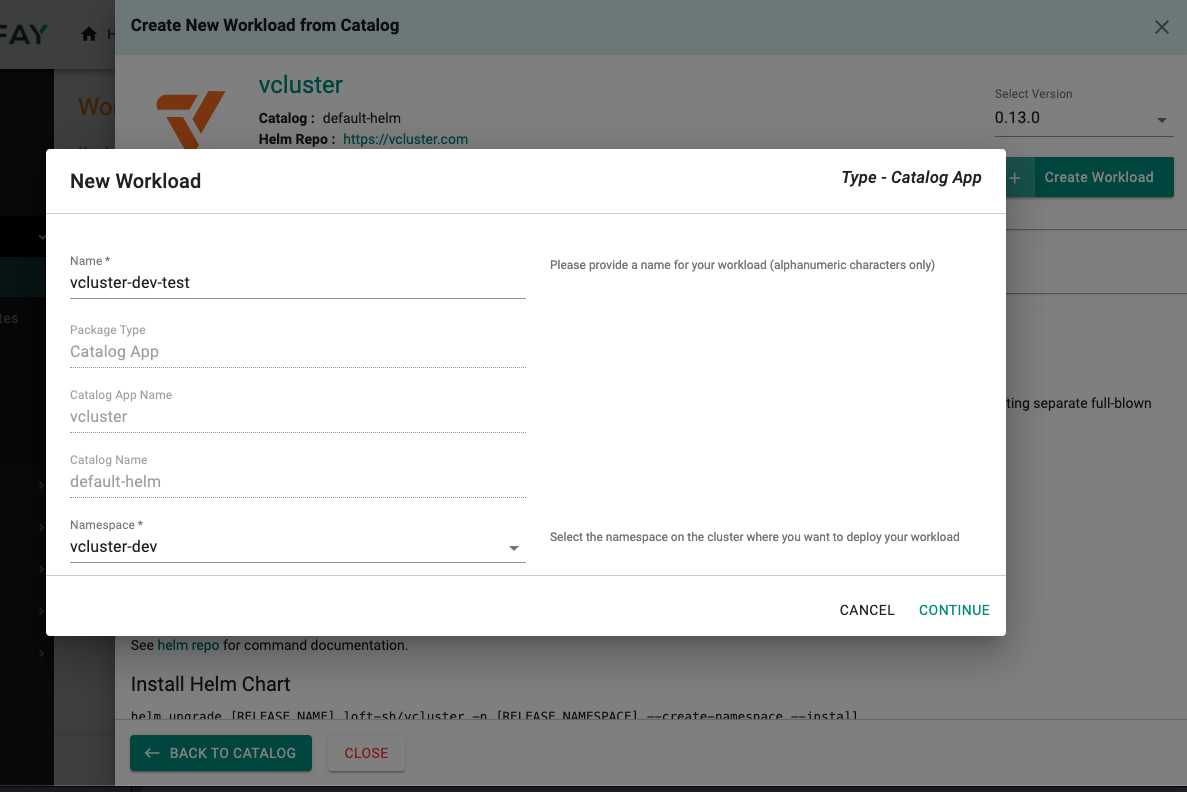

- In this example, we will create a K3s based vcluster

- Select Create Workload

- Specify the workload name (e.g. vcluster-dev-test) and select the host namespace created as part of the previous step

- Click Continue

- Click Save and Go to Placement

- Select the cluster that you want to provision the vcluster in (e.g. host-cluster-dev)

- Click Save and Go to Publish

- Click Publish

Step 4: Verify deployment¶

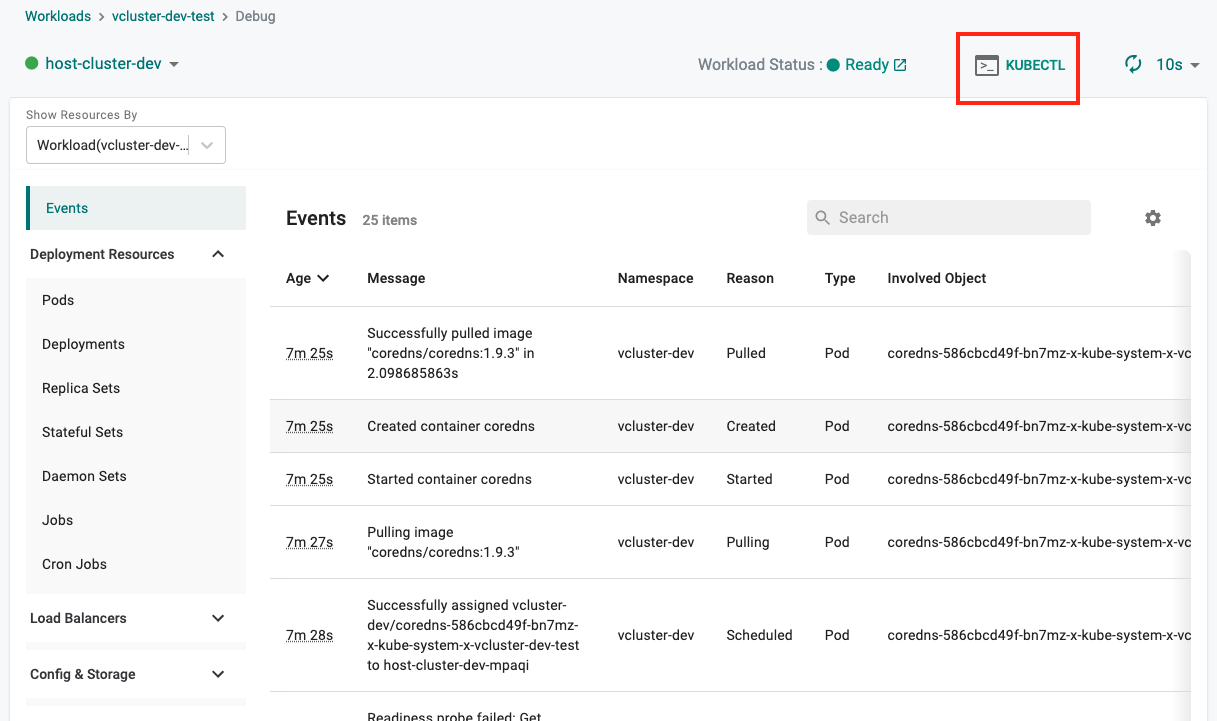

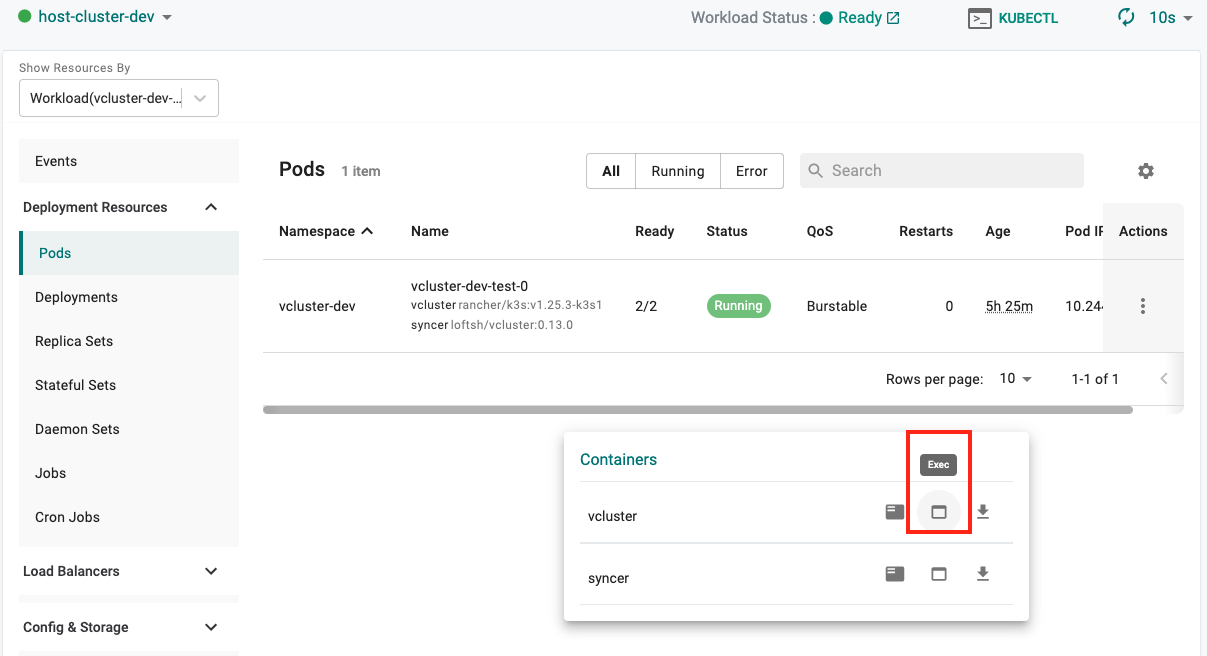

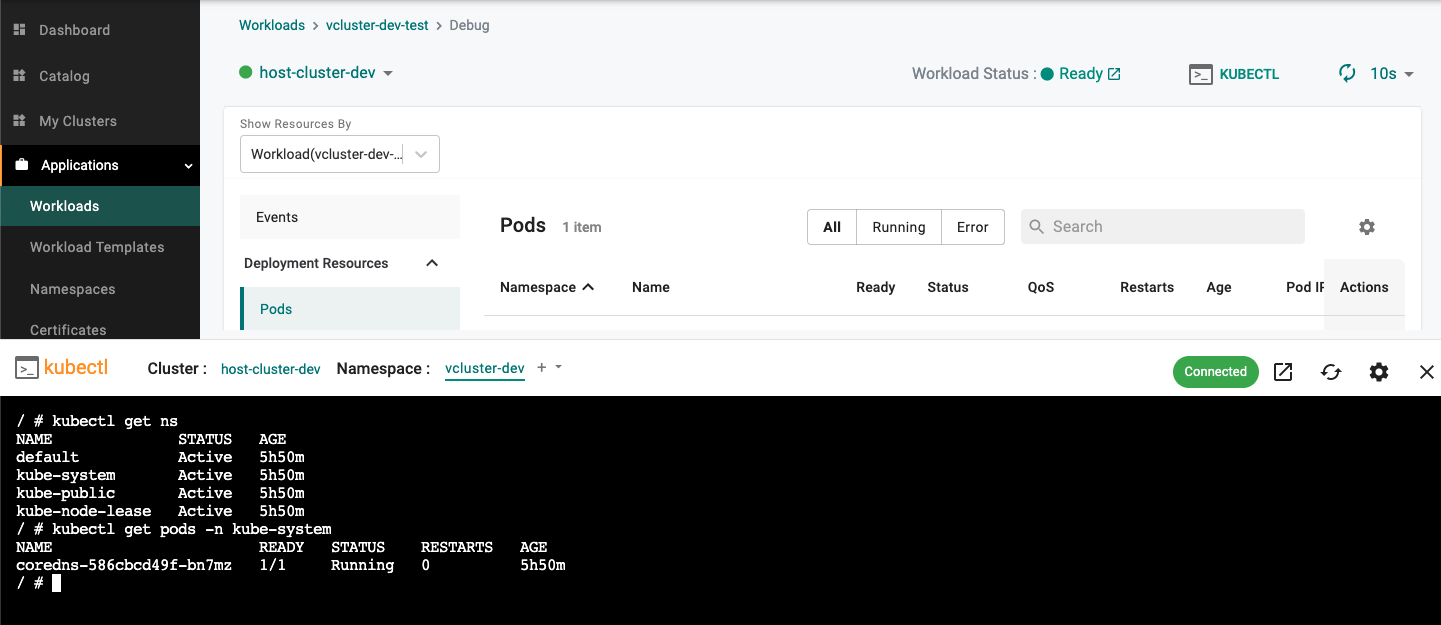

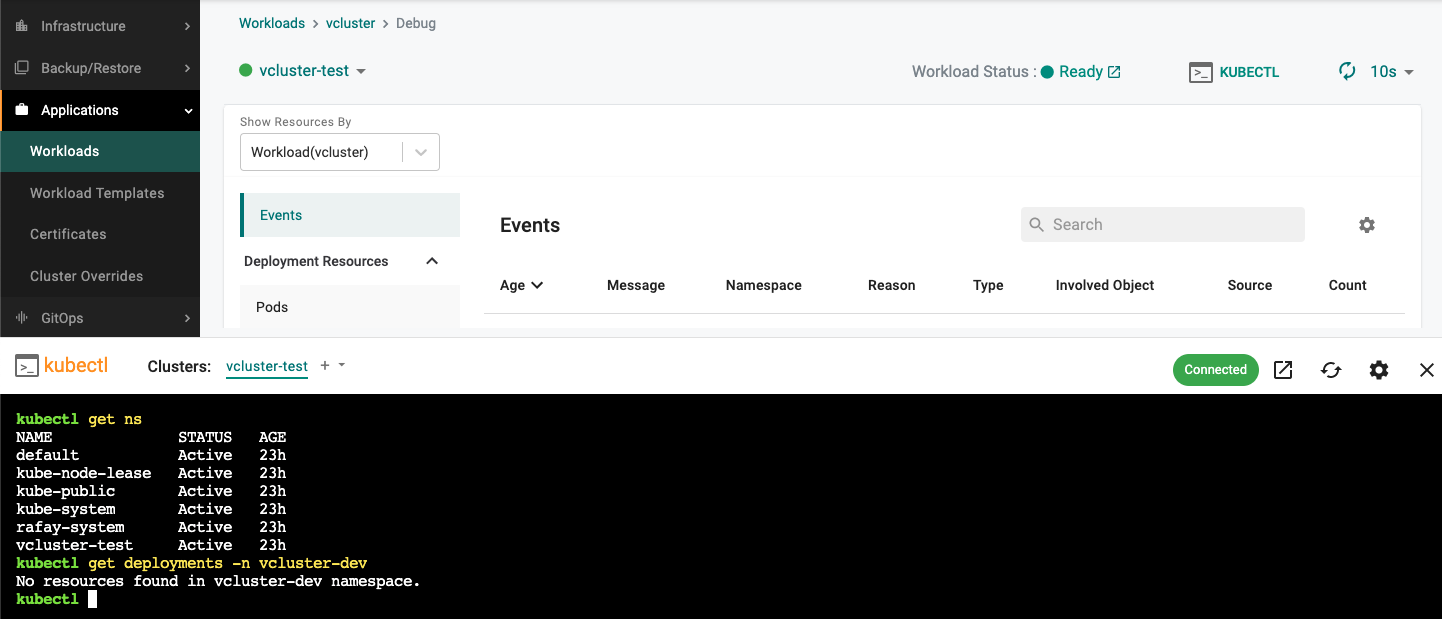

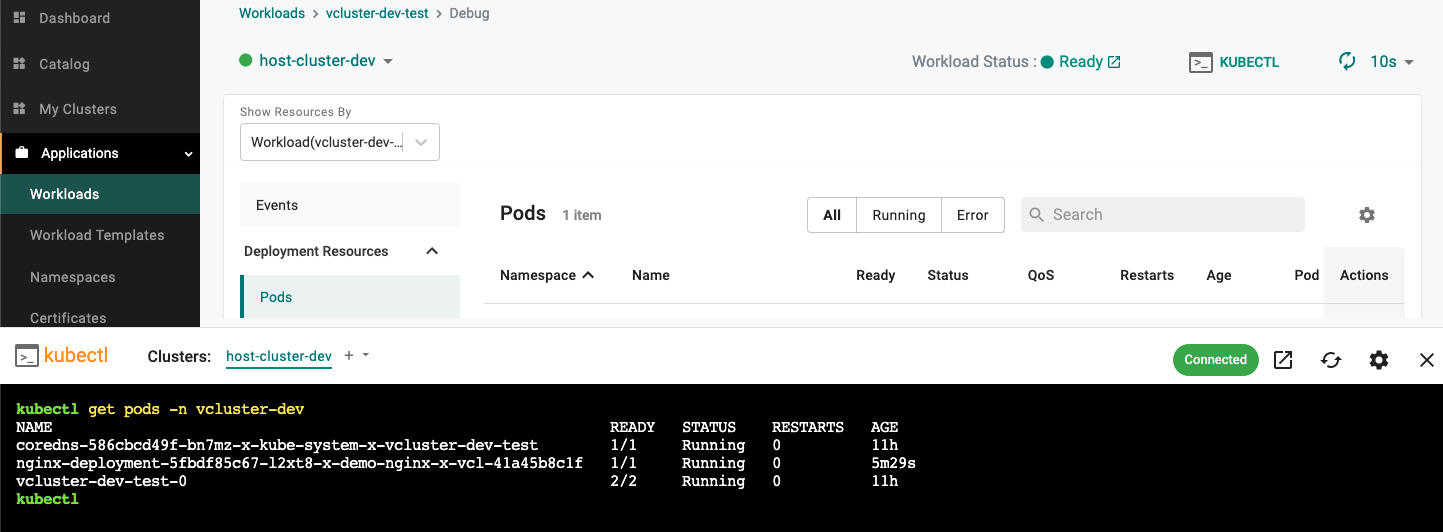

- Click on the DEBUG option available on the workloads listing page

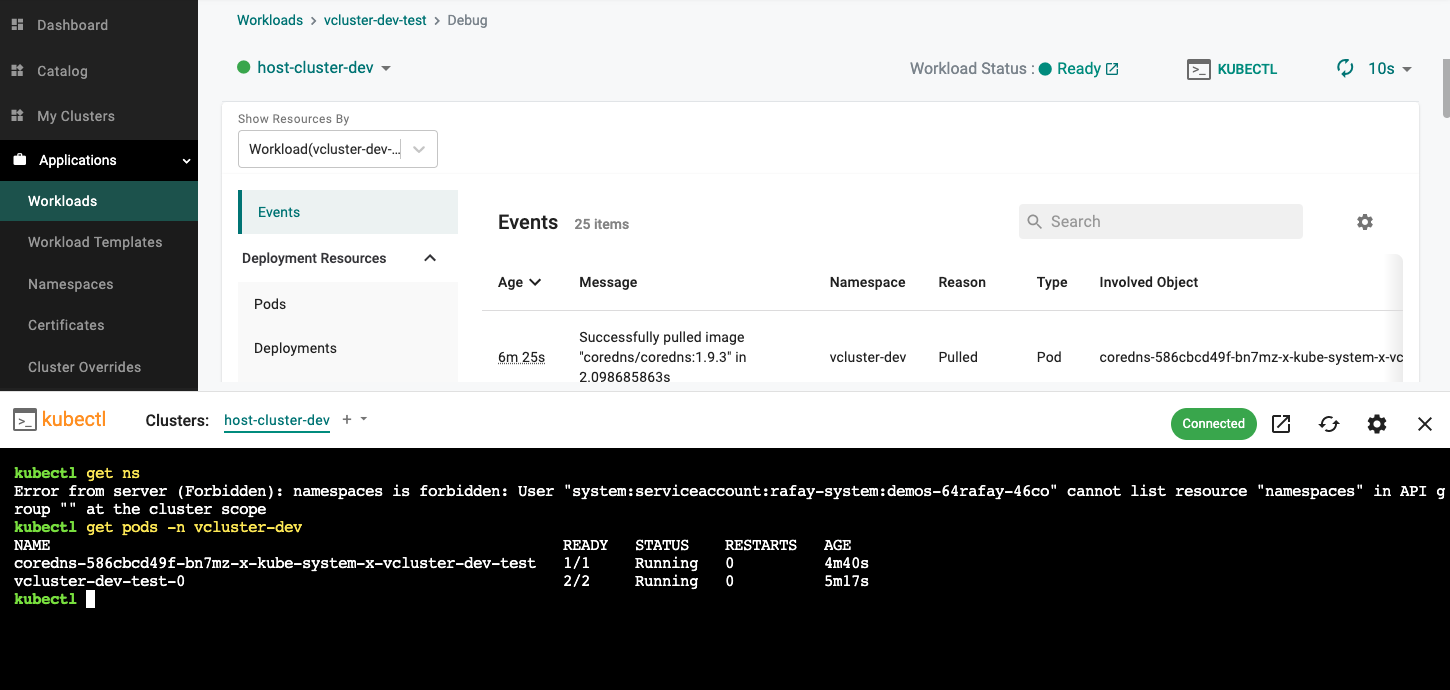

- Click on the KUBECTL option

- You can see that kubectl commands requiring cluster-wide privileges are blocked (e.g. kubectl get ns), while namespace-specific operations are allowed (e.g. kubectl get pods -n vcluster-dev)

The vcluster-dev-test-0 pod runs the vcluster itself, including the K3s control plane. The coredns-586cbcd49f-bn7mz-x-kube-system-x-vcluster-dev-test pod is the CoreDNS deployment that serves DNS inside the virtual cluster.
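The access check described above could be scripted roughly as follows. This is a minimal sketch, not part of the platform: the function name check_workspace_access is hypothetical, and the namespace defaults to the tutorial's example, vcluster-dev.

```shell
# Hypothetical helper: compare a cluster-scoped request with a namespaced one.
# The first argument is the workspace namespace (defaults to vcluster-dev).
check_workspace_access() {
  # Cluster-scoped request: expected to be rejected for a Workspace Admin.
  if kubectl get namespaces >/dev/null 2>&1; then
    echo "cluster-wide: allowed"
  else
    echo "cluster-wide: denied"
  fi

  # Namespaced request: expected to succeed inside the workspace namespace.
  if kubectl get pods -n "${1:-vcluster-dev}" >/dev/null 2>&1; then
    echo "namespace ${1:-vcluster-dev}: allowed"
  else
    echo "namespace ${1:-vcluster-dev}: denied"
  fi
}
```

Calling check_workspace_access vcluster-dev as the Workspace Admin user should report the cluster-wide request as denied and the namespaced request as allowed, provided the role is set up as described in Step 1.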

Step 5: Secure kubectl access to the vcluster¶

The platform's Zero Trust Kubectl Access (ZTKA) capability provides a secure way to perform kubectl operations on clusters deployed in public cloud or on-premise environments.

To access the vcluster, we will perform a kubectl exec to the container running the vcluster. This can be done either via UI or Terminal.

For the UI-based option:

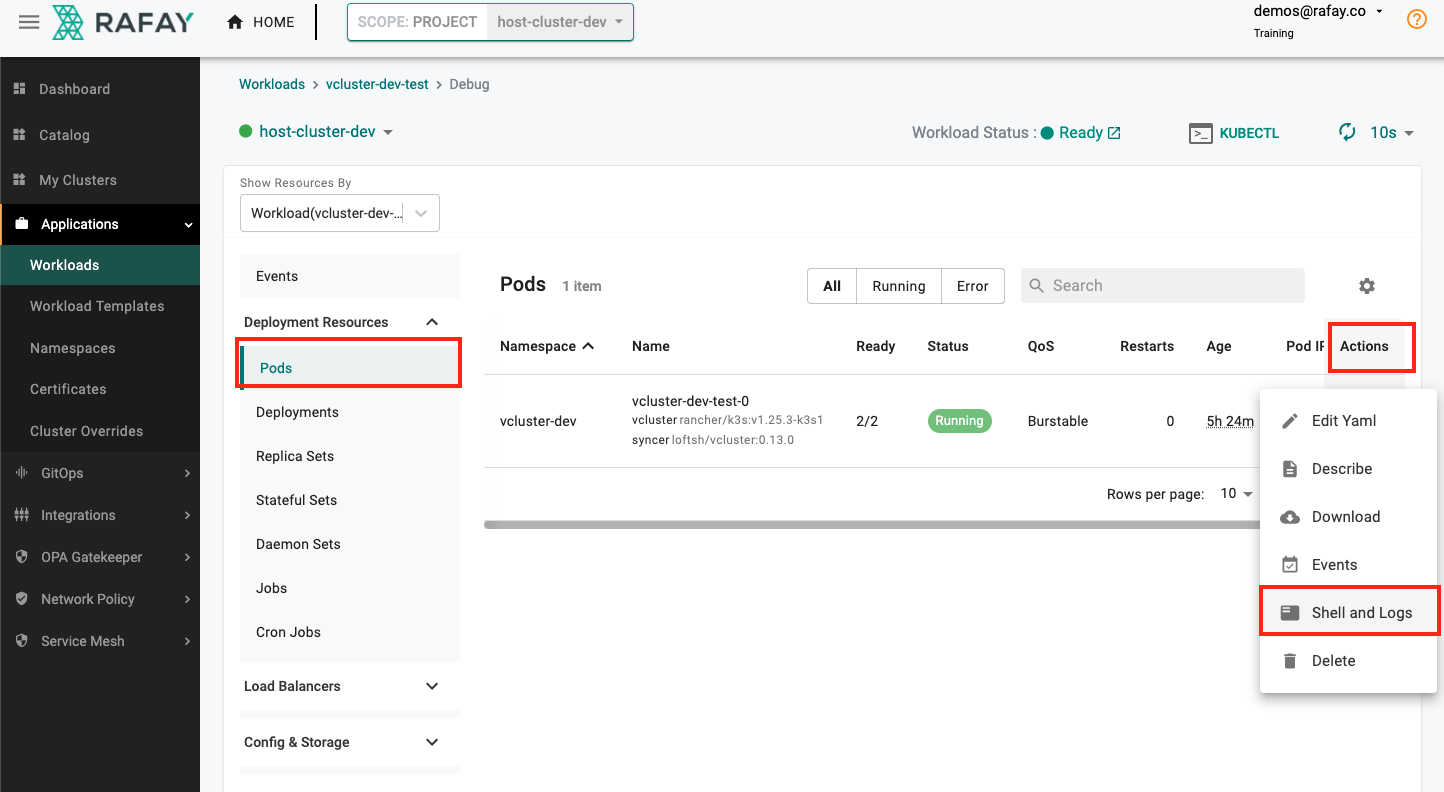

- Click on the DEBUG option available on the workloads listing page

- Click on Pods, click on Action (for vcluster-dev-test-0) and select Shell and Logs

- Initiate an exec to the vcluster container

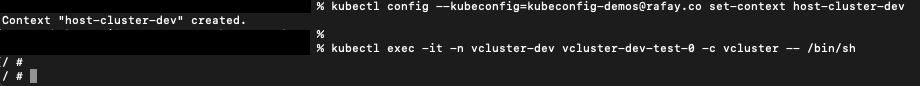

For the terminal-based option:

- Download the kubeconfig file from the console (Home -> My Tools)

- Run the commands below to initiate an exec to the vcluster container

kubectl config --kubeconfig=kubeconfig-demos@rafay.co use-context <context name>

kubectl --kubeconfig=kubeconfig-demos@rafay.co exec -it -n <namespace> <pod name> -c vcluster -- /bin/sh

A virtual cluster behaves the same way as a regular Kubernetes cluster.

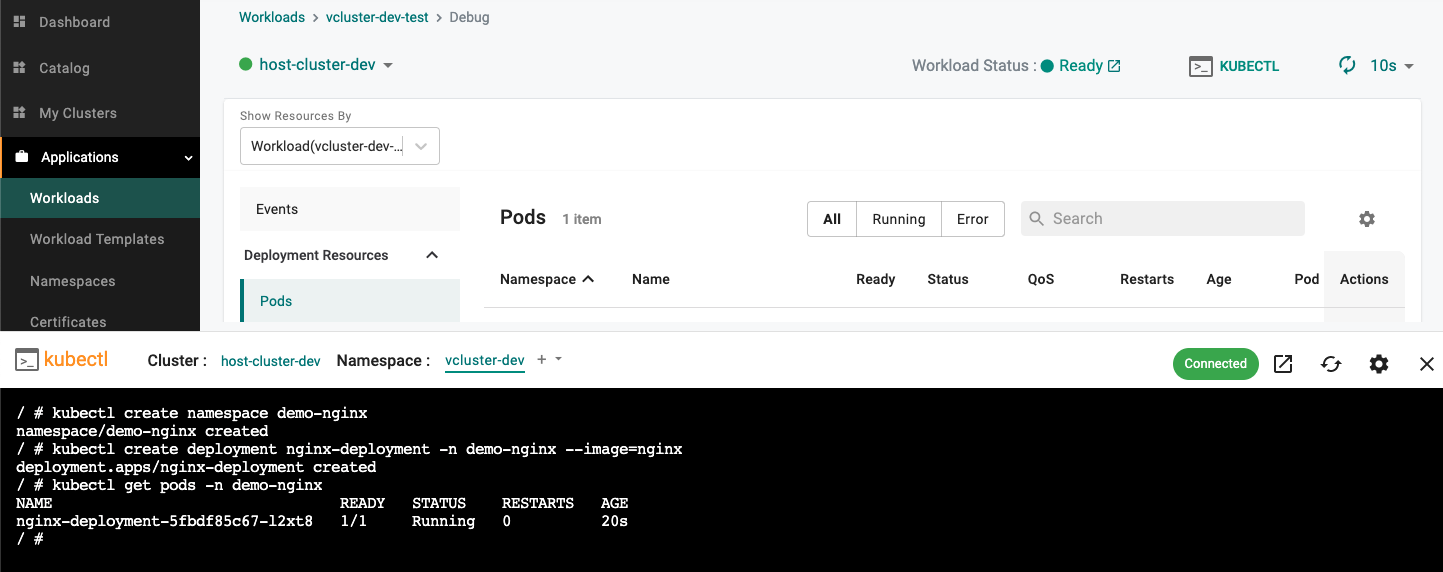

Let's create a namespace and a demo nginx deployment. You can check that this demo deployment will create pods inside the vcluster.
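A minimal sketch of these steps, meant to be run from the shell opened inside the vcluster container. The names demo-nginx and nginx-deployment are the ones used in this tutorial. The nginx image and the wrapper function create_demo_nginx are assumptions made for illustration, and the sketch also assumes kubectl is on the path in that shell.

```shell
# Hypothetical wrapper so the three commands can be pasted and run as one unit.
# Each step only runs if the previous one succeeded.
create_demo_nginx() {
  # 1. Create the namespace inside the vcluster.
  # 2. Create the demo deployment (the nginx image is an assumption).
  # 3. List the pods that the deployment created.
  kubectl create namespace demo-nginx &&
    kubectl create deployment nginx-deployment -n demo-nginx --image=nginx &&
    kubectl get pods -n demo-nginx
}
```

Run create_demo_nginx once inside the vcluster. The final command lists the pods from the vcluster's point of view, where they live in the demo-nginx namespace.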

Here are some key things to notice about this deployment:

- On the host cluster, there is no namespace "demo-nginx" because this namespace only exists inside the vcluster.

- Everything that belongs to the vcluster will always remain inside the vcluster's host namespace vcluster-dev.

- You will also see that there is no deployment nginx-deployment because it also just lives inside the virtual cluster.

Note that checking these points on the host cluster requires a user who has cluster-wide privileges.
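The host-side checks could look like the sketch below. It assumes the host namespace is vcluster-dev, as in this tutorial. The function name show_host_view is hypothetical.

```shell
# Hypothetical helper: inspect the host cluster from a privileged context.
# The first argument is the vcluster's host namespace (defaults to vcluster-dev).
show_host_view() {
  # The demo-nginx namespace should be absent from this list.
  kubectl get namespaces

  # nginx-deployment should not be listed here; it exists only in the vcluster.
  kubectl get deployments -n "${1:-vcluster-dev}"

  # Pods belonging to the vcluster are listed in its host namespace.
  kubectl get pods -n "${1:-vcluster-dev}"
}
```

Run show_host_view vcluster-dev against the host cluster, not from inside the vcluster shell.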

You will notice that the pod scheduled for our nginx-deployment does appear on the underlying host cluster. This is because vclusters do not have separate nodes. Instead, each vcluster has a syncer that copies resources from the vcluster into the underlying host namespace, so that the vcluster's pods are scheduled on the host cluster's nodes and their containers start inside the host namespace.
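To find a synced pod in the host namespace, it helps to know how its name is built. The sketch below reproduces the pattern visible in the CoreDNS pod name from Step 4: pod name, then the namespace inside the vcluster, then the vcluster name, joined by -x-. The function host_pod_name is hypothetical and the pattern is inferred from that one example. Very long names may be shortened by the syncer, which this sketch does not model.

```shell
# Hypothetical helper: build the host-side name of a pod synced from a vcluster.
# Arguments: <pod name> <namespace inside the vcluster> <vcluster name>
host_pod_name() {
  printf '%s-x-%s-x-%s\n' "$1" "$2" "$3"
}

# Reproduces the CoreDNS pod name shown in Step 4.
host_pod_name coredns-586cbcd49f-bn7mz kube-system vcluster-dev-test
# prints: coredns-586cbcd49f-bn7mz-x-kube-system-x-vcluster-dev-test
```

By the same pattern, a pod of nginx-deployment in the demo-nginx namespace would show up in vcluster-dev with the suffix -x-demo-nginx-x-vcluster-dev-test.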

Recap¶

Congratulations! You have successfully created a virtual cluster (vcluster) and securely performed kubectl operations on a remote cluster.