Part 5: Cluster Blueprints

This is Part 5 of a multi-part, self-paced exercise.

What Will You Do

In this part, you will:

- Create a custom cluster blueprint with selected "managed addons"

- Apply the blueprint to your cluster

Estimated Time

The estimated time for this part is 10 minutes.

Important

The monitoring addon is incompatible with Docker Desktop. Ensure that it is not selected in this environment.

Step 1: Create Blueprint

- Log in to your Org

- Navigate to the "desktop" project, then to Infrastructure -> Blueprints

- Click New Blueprint and provide a name (e.g., monitoring)

- Click New Version

We imported our cluster in Part 1 with the minimal blueprint (i.e., only the base Kubernetes management operator components are installed on the cluster). We will now extend the minimal blueprint into a custom blueprint that adds a few of the "managed addons".

Note that you can also bring your own "custom addons". This will be covered in a different exercise.

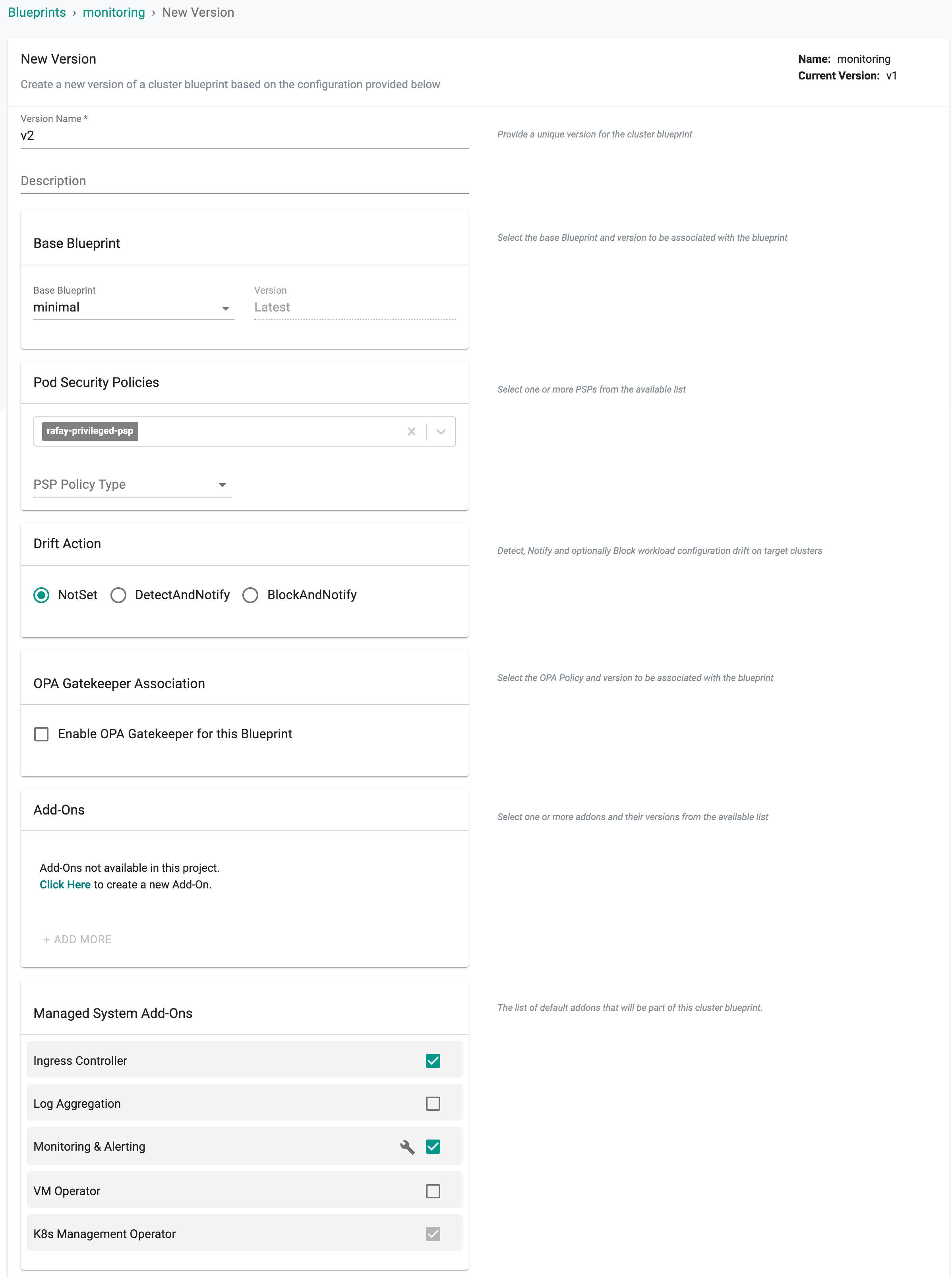

- Provide a version (e.g., v2) and an optional description for the custom blueprint

- Ensure that the selected base blueprint is "minimal"

- In the "managed system addons" section, select "Monitoring" and "Ingress Controller", then click Save

This will create a new version of the custom blueprint.

Note

You can also create and add custom addons to your custom blueprint. This is out of scope for this exercise.

Step 2: Publish Blueprint

You will now apply the custom blueprint to your cluster.

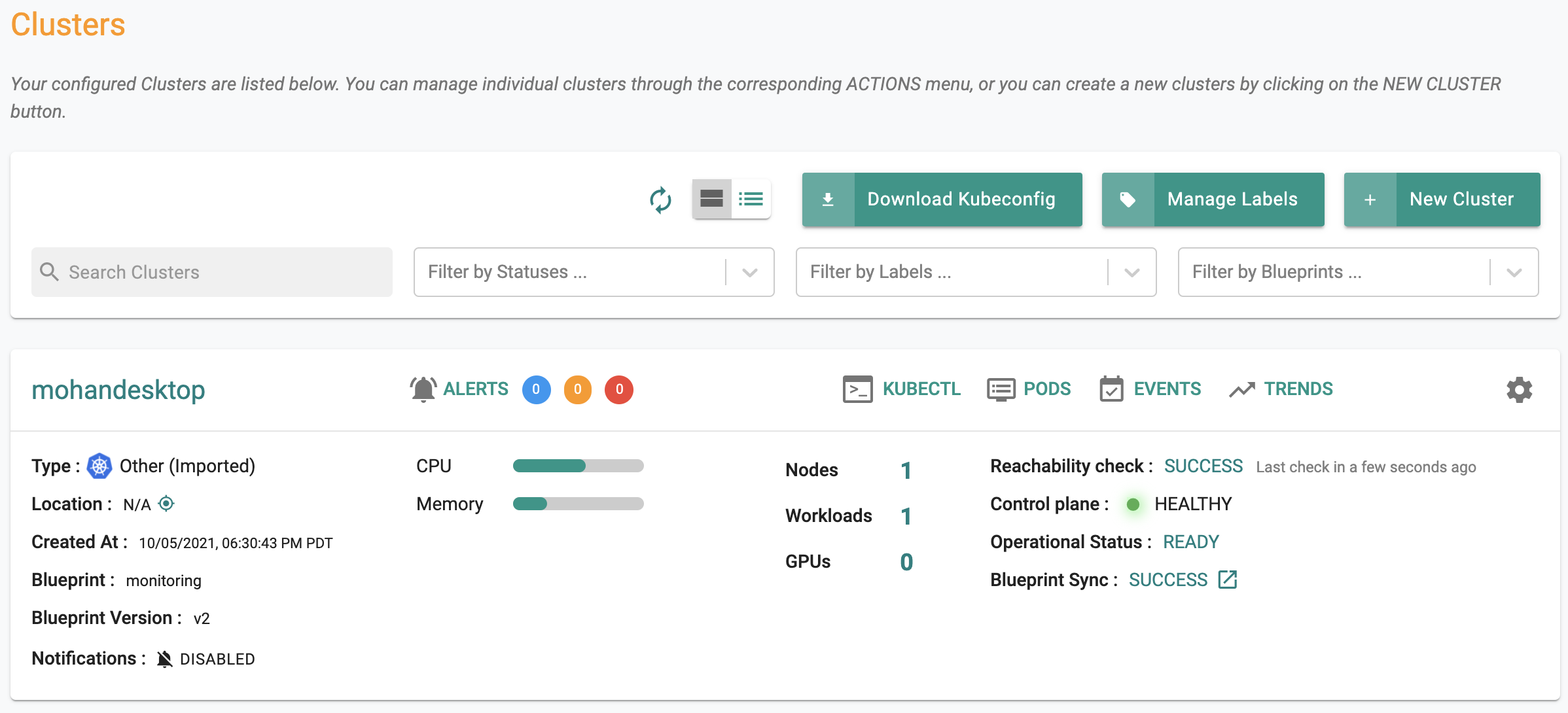

- Navigate to Infrastructure -> Clusters

- Click the "gear" icon on the far right and select "Update Blueprint"

- Select "monitoring" for the blueprint name and "v2" for the version (i.e., the version you created in the previous step)

- Click Save and Publish

This triggers reconciliation of your cluster against the custom blueprint. Reconciliation can take a few minutes, especially if images have to be downloaded for the new addons. Once it completes, the cluster should report the blueprint as "monitoring" and the version as "v2". This is exactly what we wanted to apply to the cluster.
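Because reconciliation can take a few minutes, any script that checks the cluster afterwards should poll rather than check once. The following is a minimal Python sketch of a generic polling loop. `wait_until` and its parameters are hypothetical names chosen for illustration. The condition you pass in, such as a function that runs `kubectl` and inspects the result, is up to you.

```python
import time
from typing import Callable


def wait_until(
    condition: Callable[[], bool],
    timeout_s: float = 600.0,
    interval_s: float = 10.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call `condition` repeatedly until it returns True or `timeout_s` elapses.

    Returns True if the condition was met and False on timeout. `clock` and
    `sleep` are injectable so the loop can be tested without real waiting.
    """
    deadline = clock() + timeout_s
    while True:
        if condition():
            return True
        remaining = deadline - clock()
        if remaining <= 0:
            return False
        # Sleep for one interval, but never past the deadline.
        sleep(min(interval_s, remaining))
```

For example, `condition` could run `kubectl get po -n rafay-infra` and return True once every pod reports Running. Injecting the clock and sleep functions keeps the loop deterministic under test.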

Step 3: Verify

Optionally, you can use kubectl to verify that the pods for the "Ingress Controller" and "Monitoring" addons are running on your cluster. The output should look similar to the following.

The managed Ingress Controller addon's pods are deployed to the "rafay-system" namespace.

    kubectl get po -n rafay-system

    NAME                                   READY   STATUS      RESTARTS   AGE
    relay-agent-55b85c79c9-l7x6f           1/1     Running     0          2d
    edge-client-6b7d568fc8-dmck9           1/1     Running     0          2d
    rafay-connector-fd44db794-dsl6f        1/1     Running     23         2d
    controller-manager-85685db5b5-25nhs    1/1     Running     21         2d
    ingress-nginx-admission-create-c79k9   0/1     Completed   0          7m56s
    ingress-nginx-admission-patch-pkf77    0/1     Completed   1          7m56s
    l4err-65468fd8c4-f4qc6                 1/1     Running     0          7m44s
    ingress-nginx-controller-sf8fn         1/1     Running     0          7m44s
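Reading the READY and STATUS columns by eye works for a handful of pods. The following minimal Python sketch automates that check on the default tabular output of `kubectl get po`. `find_unhealthy` and `unhealthy_in` are hypothetical helpers. The sketch assumes the default whitespace-separated columns (NAME, READY, STATUS, RESTARTS, AGE) with a header row. Pods in the Completed state, such as the one-shot `ingress-nginx-admission-*` pods above, are treated as healthy even though they show 0/1 ready.

```python
import subprocess
from typing import List


def find_unhealthy(table: str) -> List[str]:
    """Return names of pods that are neither fully ready nor Completed.

    `table` is the text printed by `kubectl get po`, header row included.
    Only the first three columns (NAME, READY, STATUS) are read, so extra
    text in RESTARTS such as "3 (2d ago)" does not affect parsing.
    """
    unhealthy = []
    lines = [line for line in table.splitlines() if line.strip()]
    for line in lines[1:]:  # skip the NAME/READY/STATUS header row
        name, ready, status = line.split()[:3]
        ready_count, total = (int(n) for n in ready.split("/"))
        if status == "Completed":
            continue  # finished job pods are expected to show 0/N ready
        if status != "Running" or ready_count != total:
            unhealthy.append(name)
    return unhealthy


def unhealthy_in(namespace: str) -> List[str]:
    """Run `kubectl get po -n <namespace>` and check its output.

    Assumes kubectl is on PATH and points at this exercise's cluster;
    raises CalledProcessError if kubectl exits with an error.
    """
    out = subprocess.run(
        ["kubectl", "get", "po", "-n", namespace],
        capture_output=True, text=True, check=True,
    ).stdout
    return find_unhealthy(out)
```

Once the Ingress Controller addon has finished deploying, `unhealthy_in("rafay-system")` should return an empty list.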

The monitoring addon's pods are deployed to the "rafay-infra" namespace.

    kubectl get po -n rafay-infra

    NAME                                                  READY   STATUS    RESTARTS   AGE
    rafay-prometheus-node-exporter-lkcj6                  1/1     Running   0          2d
    rafay-prometheus-metrics-server-77dc957b6c-w4n4c      1/1     Running   0          2d
    rafay-prometheus-adapter-6bbd764b64-bb7x4             1/1     Running   0          2d
    rafay-prometheus-alertmanager-0                       2/2     Running   0          2d
    rafay-prometheus-kube-state-metrics-6478bbc5d-7cf58   1/1     Running   0          2d
    rafay-prometheus-helm-exporter-5dc79dbc5d-4rsrg       1/1     Running   0          2d
    rafay-prometheus-server-0                             2/2     Running   0          2d
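The same verification can also be done against structured output instead of the printed table. The sketch below reads `kubectl get pods -n rafay-infra -o json`. It then confirms that each expected monitoring component has at least one pod in the Running phase whose containers all report ready. The prefix list is an assumption copied from the sample listing above and may differ between addon versions. `EXPECTED_PREFIXES`, `missing_or_not_ready`, and `check_namespace` are hypothetical names.

```python
import json
import subprocess
from typing import Dict, List

# Assumed component name prefixes, taken from the sample rafay-infra output.
EXPECTED_PREFIXES = [
    "rafay-prometheus-node-exporter",
    "rafay-prometheus-metrics-server",
    "rafay-prometheus-adapter",
    "rafay-prometheus-alertmanager",
    "rafay-prometheus-kube-state-metrics",
    "rafay-prometheus-helm-exporter",
    "rafay-prometheus-server",
]


def pod_is_ready(pod: Dict) -> bool:
    """True if the pod is Running and has containers that all report ready."""
    status = pod.get("status", {})
    containers = status.get("containerStatuses", [])
    return (
        status.get("phase") == "Running"
        and bool(containers)
        and all(c.get("ready", False) for c in containers)
    )


def missing_or_not_ready(pod_list: Dict, prefixes: List[str]) -> List[str]:
    """Return the prefixes that have no ready pod in a `-o json` pod list."""
    ready_names = [
        p["metadata"]["name"] for p in pod_list.get("items", []) if pod_is_ready(p)
    ]
    return [
        prefix for prefix in prefixes
        if not any(name.startswith(prefix) for name in ready_names)
    ]


def check_namespace(namespace: str, prefixes: List[str]) -> List[str]:
    """Run kubectl (assumed to be on PATH) and return components not yet ready."""
    out = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return missing_or_not_ready(json.loads(out), prefixes)
```

`check_namespace("rafay-infra", EXPECTED_PREFIXES)` should return an empty list once the monitoring addon is fully deployed. Parsing JSON avoids depending on column widths, at the cost of needing the pod field names (`status.phase`, `status.containerStatuses[].ready`).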

Recap

Congratulations! At this point, you have successfully created a custom cluster blueprint and published it to your cluster.