Configure

In this section, you will create a standardized cluster blueprint with the kuberay-operator add-on. You can then reuse this blueprint with all your clusters.

Step 1: Create Namespace

In this step, you will create a namespace for the KubeRay operator.

- Navigate to a project in your Org

- Select Infrastructure -> Namespaces

- Click New Namespace

- Enter the name kuberay

- Select wizard for the type

- Click Save

- Click Discard Changes & Exit

Step 2: Create Repository

In this step, you will create a repository in your project so that the controller can retrieve the KubeRay operator Helm chart automatically.

- Select Integrations -> Repositories

- Click New Repository

- Enter the name kuberay

- Select Helm for the type

- Click Create

- Enter https://ray-project.github.io/kuberay-helm/ for the endpoint

- Click Save

Optionally, you can click on the validate button on the repo to confirm connectivity.
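Connectivity can also be checked outside the console. A Helm chart repository serves an index.yaml file at its root, so one quick check is to request that file from the endpoint entered above. The following is a minimal sketch using only the Python standard library; the helper names `helm_index_url` and `repo_is_reachable` are illustrative and are not part of the controller.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def helm_index_url(endpoint: str) -> str:
    """Return the URL of the index.yaml that a Helm repository serves at its root."""
    # Ensure a trailing slash so urljoin appends instead of replacing the last path segment.
    base = endpoint if endpoint.endswith("/") else endpoint + "/"
    return urljoin(base, "index.yaml")


def repo_is_reachable(endpoint: str, timeout: float = 10.0) -> bool:
    """Hypothetical helper: True if index.yaml can be fetched with HTTP 200."""
    try:
        with urlopen(helm_index_url(endpoint), timeout=timeout) as response:
            return response.status == 200
    except OSError:  # URLError and HTTPError are subclasses of OSError
        return False
```

Calling `repo_is_reachable("https://ray-project.github.io/kuberay-helm/")` from a machine with outbound internet access should return `True` when the repository is available.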

Step 3: Create kuberay-operator Add-On

In this step, you will create a custom add-on for the kuberay-operator that will pull the Helm chart from the previously created repository. This add-on will be added to a custom cluster blueprint in a later step.

- Select Infrastructure -> Add-Ons

- Click New Add-On -> Create New Add-On

- Enter the name kuberay-operator

- Select Helm 3 for the type

- Select Pull files from repository

- Select Helm for the repository type

- Select kuberay for the namespace

- Click Create

- Click New Version

- Enter a version name

- Select the previously created repository

- Enter kuberay-operator for the chart name

- Click Save Changes

Step 4: Create Blueprint

In this step, you will create a custom cluster blueprint that contains the kuberay-operator add-on created in the previous step. The cluster blueprint can be applied to one or more clusters.

- Select Infrastructure -> Blueprints

- Click New Blueprint

- Enter the name kuberay

- Click Save

- Enter a version name

- Select Minimal for the base blueprint

- In the add-ons section, click Configure Add-Ons

- Click the + symbol next to the previously created kuberay-operator add-on to add it to the blueprint

- Click Save Changes

Step 5: Apply Blueprint

In this step, you will apply the previously created cluster blueprint to an existing cluster. The blueprint will deploy the kuberay-operator add-on to the cluster.

- Select Infrastructure -> Clusters

- Click the gear icon on the cluster card -> Update Blueprint

- Select the previously created kuberay blueprint and version

- Click Save and Publish

The controller will publish and reconcile the blueprint on the target cluster. This can take a few seconds to complete.

Step 6: Create RayLLM Workload

In this step, you will create a workload for RayLLM.

- Save the following YAML to a file named ray-service-llm.yaml

apiVersion: ray.io/v1
kind: RayService
metadata:
  name: rayllm
spec:
  serviceUnhealthySecondThreshold: 1200
  deploymentUnhealthySecondThreshold: 1200
  serveConfigV2: |
    applications:
    - name: router
      import_path: rayllm.backend:router_application
      route_prefix: /
      args:
        models:
          - ./models/continuous_batching/amazon--LightGPT.yaml
          - ./models/continuous_batching/OpenAssistant--falcon-7b-sft-top1-696.yaml
  rayClusterConfig:
    headGroupSpec:
      rayStartParams:
        resources: '"{\"accelerator_type_cpu\": 2}"'
        dashboard-host: '0.0.0.0'
      template:
        spec:
          containers:
          - name: ray-head
            image: anyscale/ray-llm:latest
            resources:
              limits:
                cpu: 2
                memory: 8Gi
              requests:
                cpu: 2
                memory: 8Gi
            ports:
            - containerPort: 6379
              name: gcs-server
            - containerPort: 8265
              name: dashboard
            - containerPort: 10001
              name: client
            - containerPort: 8000
              name: serve
    workerGroupSpecs:
    - replicas: 1
      minReplicas: 0
      maxReplicas: 1
      groupName: gpu-group
      rayStartParams:
        resources: '"{\"accelerator_type_cpu\": 1, \"accelerator_type_a10\": 1, \"accelerator_type_a100_80g\": 1}"'
      template:
        spec:
          containers:
          - name: llm
            image: anyscale/ray-llm:latest
            lifecycle:
              preStop:
                exec:
                  command: ["/bin/sh","-c","ray stop"]
            resources:
              limits:
                cpu: "48"
                memory: "192G"
                nvidia.com/gpu: 1
              requests:
                cpu: "1"
                memory: "1G"
                nvidia.com/gpu: 1
            ports:
            - containerPort: 8000
              name: serve
          tolerations:
            - key: "ray.io/node-type"
              operator: "Equal"
              value: "gpu"
              effect: "NoSchedule"
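The `resources` values under `rayStartParams` look unusual because they are quoted twice: the YAML single-quoted scalar holds a double-quoted string, which in turn holds a JSON object with escaped quotes. As a rough illustration (one way to read the value, not a description of how KubeRay handles it internally), decoding the string twice with Python's `json` module recovers the mapping of custom resource names to quantities. The function name `decode_resources` is hypothetical.

```python
import json

# The scalars exactly as YAML yields them after stripping the outer single quotes.
head_resources = r'"{\"accelerator_type_cpu\": 2}"'
worker_resources = (
    r'"{\"accelerator_type_cpu\": 1, \"accelerator_type_a10\": 1, '
    r'\"accelerator_type_a100_80g\": 1}"'
)


def decode_resources(raw: str) -> dict:
    """Decode a doubly quoted resources string into a dict."""
    inner = json.loads(raw)   # first pass: quoted JSON string -> plain JSON text
    return json.loads(inner)  # second pass: JSON object text -> dict


if __name__ == "__main__":
    print(decode_resources(head_resources))
    print(decode_resources(worker_resources))
```

Read this way, the head node advertises two units of `accelerator_type_cpu`, and each worker advertises one unit each of `accelerator_type_cpu`, `accelerator_type_a10`, and `accelerator_type_a100_80g`.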

Note

Make sure the GPU nodes in the cluster carry a taint with key ray.io/node-type, value gpu, and effect NoSchedule, which the toleration in the YAML above matches.
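To see why the taint on the GPU nodes and the toleration in the manifest must agree, here is a simplified sketch of the matching rule Kubernetes applies when deciding whether a pod may be scheduled onto a tainted node. It is an illustration only: `tolerates` is a hypothetical helper, it omits details such as `tolerationSeconds`, and the taint shown is assumed from the toleration in the manifest.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Simplified check of whether a pod toleration matches a node taint."""
    # An empty effect on the toleration matches every taint effect.
    if toleration.get("effect") and toleration["effect"] != taint.get("effect"):
        return False
    operator = toleration.get("operator", "Equal")  # Equal is the Kubernetes default
    if operator == "Exists":
        # Exists ignores the value; an empty key with Exists matches every taint key.
        return not toleration.get("key") or toleration["key"] == taint.get("key")
    # Equal requires both key and value to match.
    return (
        toleration.get("key") == taint.get("key")
        and toleration.get("value", "") == taint.get("value", "")
    )


# Toleration copied from the worker group in ray-service-llm.yaml.
worker_toleration = {
    "key": "ray.io/node-type",
    "operator": "Equal",
    "value": "gpu",
    "effect": "NoSchedule",
}
# Taint assumed to be present on the GPU nodes.
gpu_taint = {"key": "ray.io/node-type", "value": "gpu", "effect": "NoSchedule"}
```

With these values, `tolerates(worker_toleration, gpu_taint)` is true, while a taint with a different value or effect would not be tolerated by the worker pods.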

- Select Applications -> Workloads

- Click New Workload -> Create New Workload

- Enter the name kuberay-llm

- Select K8s YAML for the type

- Select Upload files manually

- Select kuberay for the namespace

- Click Create

- Click Upload and select the previously saved ray-service-llm.yaml file

- Click Save And Goto Placement

- Select the cluster in the placement page

- Click Save And Goto Publish

- Click Publish

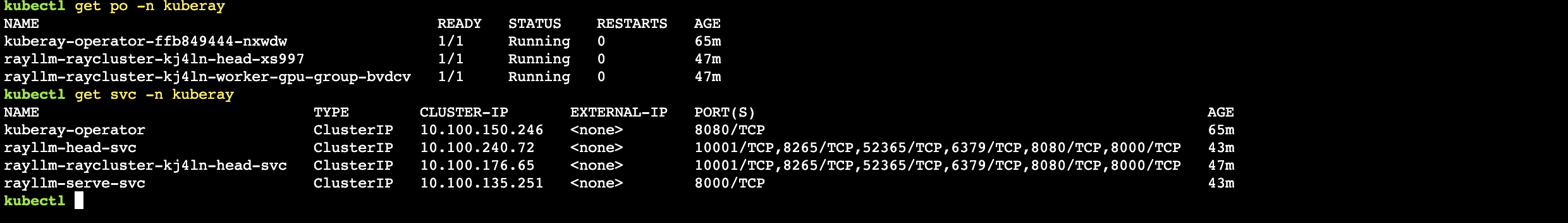

- Wait for the pods to come up and rayllm-serve-svc to be created
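If you prefer to script the wait rather than watch the console, the sketch below polls for the service with `kubectl`. It assumes that `kubectl` is installed and configured against the target cluster and that the workload was published to the kuberay namespace; the function names, attempt count, and delay are illustrative choices.

```python
import subprocess
import time


def kubectl_get_args(kind: str, name: str, namespace: str) -> list[str]:
    """Build the argument list for `kubectl get <kind> <name> -n <namespace>`."""
    return ["kubectl", "get", kind, name, "-n", namespace]


def wait_for_service(name: str, namespace: str,
                     attempts: int = 60, delay: float = 10.0) -> bool:
    """Poll until the service exists; kubectl exits non-zero while it is missing."""
    for _ in range(attempts):
        result = subprocess.run(
            kubectl_get_args("service", name, namespace),
            capture_output=True,
        )
        if result.returncode == 0:
            return True
        time.sleep(delay)
    return False
```

For example, `wait_for_service("rayllm-serve-svc", "kuberay")` returns `True` once the service has been created, or `False` if it does not appear within the allotted attempts.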

Next Step

At this point, you have done everything required to get kuberay-operator installed and operational on your cluster along with RayLLM. In the next step, you will query the models deployed using RayLLM.