Canary

What Will You Do

In this part, you will try out a "canary" deployment pattern. Like a Rolling Update, a canary creates new pods alongside the existing ones, but it gives you more control over how the update proceeds.

Background

Like the Blue-Green strategy in the previous exercise, Canary is not one of the values natively supported by ".spec.strategy.type". However, it can still be implemented without installing and managing additional controllers in your Kubernetes cluster.

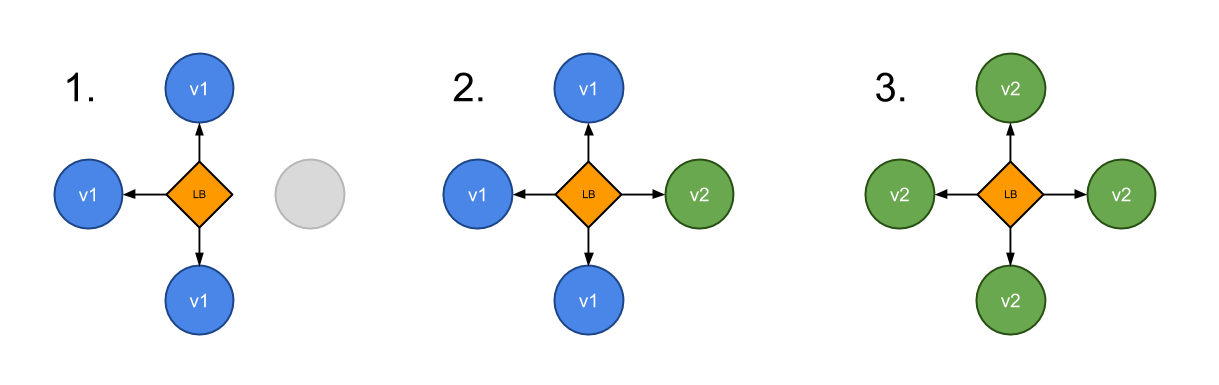

In this exercise, we will achieve this by creating two Deployments whose pods carry the same label, plus a Service whose selector matches that label. Once the new version is running, a portion of incoming requests is routed to it while the rest continue to be served by the old version. If the new version works well, the remaining users are switched over to it and the old pods are deleted.

Advantages

- Little or no application downtime

- The new version is released gradually and can be rolled back if its quality is not acceptable

Disadvantages

- Rollout/rollback can take time

- Supporting multiple API versions at the same time is hard

Estimated Time

This part should take about 5 minutes.

Step 1: Baseline

This assumes that you have already completed at least "Part-1" and have an operational GitOps pipeline syncing artifacts from your Git repository.

- In your Git repo, open the echo.yaml file for editing

- Copy the following YAML into the file

- Commit the changes in your Git repository

Note that the YAML defines two Deployments. The first, "echo", is active and has two replicas. The second, "echo-canary", has zero replicas.

    apiVersion: v1
    kind: Service
    metadata:
      name: echo
    spec:
      type: LoadBalancer
      ports:
      - port: 80
        targetPort: 5678
        protocol: TCP
      selector:
        app: echo
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: echo
    spec:
      selector:
        matchLabels:
          app: echo
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxSurge: 2
          maxUnavailable: 1
      replicas: 2
      template:
        metadata:
          labels:
            app: echo
            version: "1.0"
        spec:
          containers:
          - name: echo
            image: hashicorp/http-echo
            args:
            - "-text=Prod"
            ports:
            - containerPort: 5678
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: echo-canary
    spec:
      selector:
        matchLabels:
          app: echo
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxSurge: 2
          maxUnavailable: 1
      replicas: 0
      template:
        metadata:
          labels:
            app: echo
            version: "1.0"
        spec:
          containers:
          - name: echo
            image: hashicorp/http-echo
            args:
            - "-text=Canary"
            ports:
            - containerPort: 5678

Once the GitOps pipeline has reconciled, you should see something like the following via the zero trust kubectl:

    kubectl get po -n echo

    NAME                    READY   STATUS    RESTARTS   AGE
    echo-84dc79598c-dm5dp   1/1     Running   0          8s
    echo-84dc79598c-jcsdt   1/1     Running   0          8s

Step 2: Setup

To test the addition of a Canary, we will execute cURL against the configured IP of the Service every second.
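The address to query is the external address of the "echo" Service. As a minimal sketch, assuming the Service lives in the "echo" namespace (as the kubectl commands in this exercise suggest) and that your kubectl session points at the right cluster, a helper such as the hypothetical `external_address` below reads it from the Service status. Some load balancers publish a hostname rather than an IP, so the sketch falls back to that field.

```shell
# Hypothetical helper (not part of the exercise): print the external
# address of the "echo" Service in the "echo" namespace.
external_address() {
  # Try the IP field of the first load balancer ingress entry.
  addr=$(kubectl get svc echo -n echo \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
  # Fall back to the hostname field if no IP is published.
  if [ -z "$addr" ]; then
    addr=$(kubectl get svc echo -n echo \
      -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
  fi
  printf '%s\n' "$addr"
}

# Usage (requires cluster access):
#   EXTERNAL_IP=$(external_address)
```

An empty result usually means the load balancer has not been provisioned yet, in which case it is worth retrying after a short wait.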

- Open Terminal

- Copy the following command, replace the placeholder with the External-IP of your Load Balancer, and run it.

    while true; do sleep 1; curl http://<External-IP from above>;done

You should see something like the following:

    Prod
    Prod
    Prod
    ...

At this point, all traffic is being routed to the "Prod" replicas.

Step 3: Add Canary

- In your Git repo, open the echo.yaml file for editing

- Change replicas for "echo-canary" from "0" to "1"

- Commit the changes in your Git repository
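After the commit, it can take a little while before the change reaches the cluster. The following is a minimal sketch for waiting until the canary pod is ready, assuming the "echo" namespace used by the kubectl commands in this exercise. The helper name `wait_for_canary` and its polling interval are illustrative choices, not part of the exercise. It polls the Deployment's `.status.readyReplicas` field, which is absent while no replica is ready.

```shell
# Hypothetical helper: wait until "echo-canary" reports enough ready replicas.
#   $1 = ready replicas to wait for (default 1)
#   $2 = number of polling attempts, 2 seconds apart (default 60)
wait_for_canary() {
  want=${1:-1}
  tries=${2:-60}
  i=0
  while [ "$i" -lt "$tries" ]; do
    ready=$(kubectl get deployment echo-canary -n echo \
      -o jsonpath='{.status.readyReplicas}')
    # An empty value means no replica is ready yet; treat it as 0.
    if [ "${ready:-0}" -ge "$want" ]; then
      echo "canary ready: ${ready}"
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  echo "canary not ready after ${tries} attempts" >&2
  return 1
}

# Usage (requires cluster access):
#   wait_for_canary 1
```

Polling the ready count is used here instead of a one-shot status check because the Deployment still has zero replicas until the GitOps pipeline applies the commit.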

Step 4: View Behavior

The Git commit will trigger the GitOps pipeline, which automatically updates the workload on the cluster and adds the replica from the "echo-canary" Deployment into the mix. Within a few minutes, and with zero downtime, you should start seeing "Canary" responses from the canary replica alongside the "Prod" responses, like the following:

    Prod
    Prod
    Prod
    Prod
    Canary
    Prod
    Canary
    Prod
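The mix of responses can be counted rather than eyeballed. The sketch below assumes each response arrives as one line of text, as in the output above. The helper name `tally_responses` is hypothetical; it only reads lines from standard input and prints how often each one occurred.

```shell
# Hypothetical helper: count identical response lines read from stdin.
# Prints "<response> <count>", one line per distinct response.
tally_responses() {
  sort | uniq -c | awk '{ print $2, $1 }'
}

# Usage (requires a reachable Service; EXTERNAL_IP is a variable you set):
#   for i in $(seq 1 30); do curl -s "http://$EXTERNAL_IP"; sleep 1; done | tally_responses
```

A bounded loop is used in the usage line so that the tally is printed once the requests finish, unlike the endless loop from Step 2.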

If you check the pods via the zero trust kubectl, you should see something like the following:

    kubectl get po -n echo

    NAME                           READY   STATUS    RESTARTS   AGE
    echo-84dc79598c-dm5dp          1/1     Running   0          3m47s
    echo-84dc79598c-jcsdt          1/1     Running   0          3m47s
    echo-canary-66849577f8-424km   1/1     Running   0          32s

At this point, users are being served by both the existing replicas and the canary replica. Depending on how the canary behaves, you can scale it up or down simply by changing its replica count in the Git repo.
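How much traffic the canary receives follows from the replica counts, assuming the Service spreads requests roughly evenly across ready pods. This is an approximation, since the exact split depends on how the cluster balances connections. The hypothetical helper `canary_share` below is plain arithmetic for planning replica counts and does not talk to the cluster.

```shell
# Hypothetical helper: approximate percentage of requests served by the canary.
#   $1 = ready "echo" (prod) replicas
#   $2 = ready "echo-canary" replicas
canary_share() {
  prod=$1
  canary=$2
  total=$((prod + canary))
  # Avoid dividing by zero when nothing is running.
  if [ "$total" -eq 0 ]; then
    echo 0
    return 0
  fi
  # Integer percentage, rounded down.
  echo $(( (canary * 100) / total ))
}

# Usage:
#   canary_share 2 1   # the setup in this exercise: prints 33
#   canary_share 2 2   # canary scaled to two replicas: prints 50
```

With only replica counts to adjust, the share moves in coarse steps, so a small canary percentage requires a correspondingly large number of "echo" replicas.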

Recap

In this part, you saw how a "Canary" deployment pattern can be implemented on Kubernetes using two Deployments behind a single Service.