Overview

An EKS cluster consists of two VPCs:

- The first VPC, managed by AWS, hosts the Kubernetes control plane.

- The second VPC, managed by the customer, hosts the Kubernetes worker nodes (EC2 instances) where containers run, as well as other AWS infrastructure (such as load balancers) used by the cluster.

All worker nodes need to be able to connect to the managed API server endpoint. This connection allows a worker node to register itself with the Kubernetes control plane and to receive requests to run application pods. The worker nodes connect through the EKS-managed elastic network interfaces (ENIs) that are placed in the subnets you provide when you create the cluster.
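As a hedged illustration, in an eksctl-style ClusterConfig (an assumption: the controller's own cluster spec may name these fields differently), the subnets that receive the EKS-managed ENIs and the API endpoint access mode are declared under `vpc`. The cluster name, region, and subnet IDs below are hypothetical placeholders.

```yaml
# eksctl-style ClusterConfig sketch; all names and IDs are hypothetical placeholders
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: demo-cluster
  region: us-west-2
vpc:
  subnets:
    # Customer-managed subnets; EKS places its managed ENIs here
    private:
      us-west-2a: { id: subnet-0aaaaaaaaaaaaaaaa }
      us-west-2b: { id: subnet-0bbbbbbbbbbbbbbbb }
  clusterEndpoints:
    publicAccess: true    # endpoint also reachable from outside the VPC
    privateAccess: true   # node traffic to the API server stays inside the VPC
```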

Overview

Amazon EKS node groups are immutable by design: once a node group is created, you cannot change its type (managed or self-managed), its AMI, or its instance type. Node groups can be scaled up or down at any time. The same EKS cluster can have multiple node groups to accommodate different types of workloads. A node group can use mixed instance types when configured to use Spot Instances.

Users can use the Controller to provision Amazon EKS Clusters with either "Self Managed" or "AWS Managed" node groups.
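A minimal sketch of one cluster carrying both node group types, written in eksctl's ClusterConfig format on the assumption that the controller's spec follows a similar layout. The node group names and sizes are hypothetical.

```yaml
# eksctl-style sketch: one cluster with both node group types (names are hypothetical)
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: demo-cluster
  region: us-west-2
nodeGroups:               # self-managed node groups
  - name: self-managed-ng
    instanceType: m5.large
    desiredCapacity: 2
managedNodeGroups:        # AWS-managed node groups
  - name: managed-ng
    instanceType: m5.large
    desiredCapacity: 2
```

Because node groups are immutable, changing `instanceType` in a spec like this means creating a new node group and removing the old one rather than editing it in place.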

Comparing Node Group Types

| Feature | Self Managed | AWS Managed |
|---|---|---|
| Automated Updates | No | Limited |
| Graceful Scaling | No | Yes |
| Custom AMI | Yes | Yes |
| Custom Security Group Rules | Yes | Limited |
| Custom SSH Auth | Yes | Limited |
| Windows | Yes | Yes |

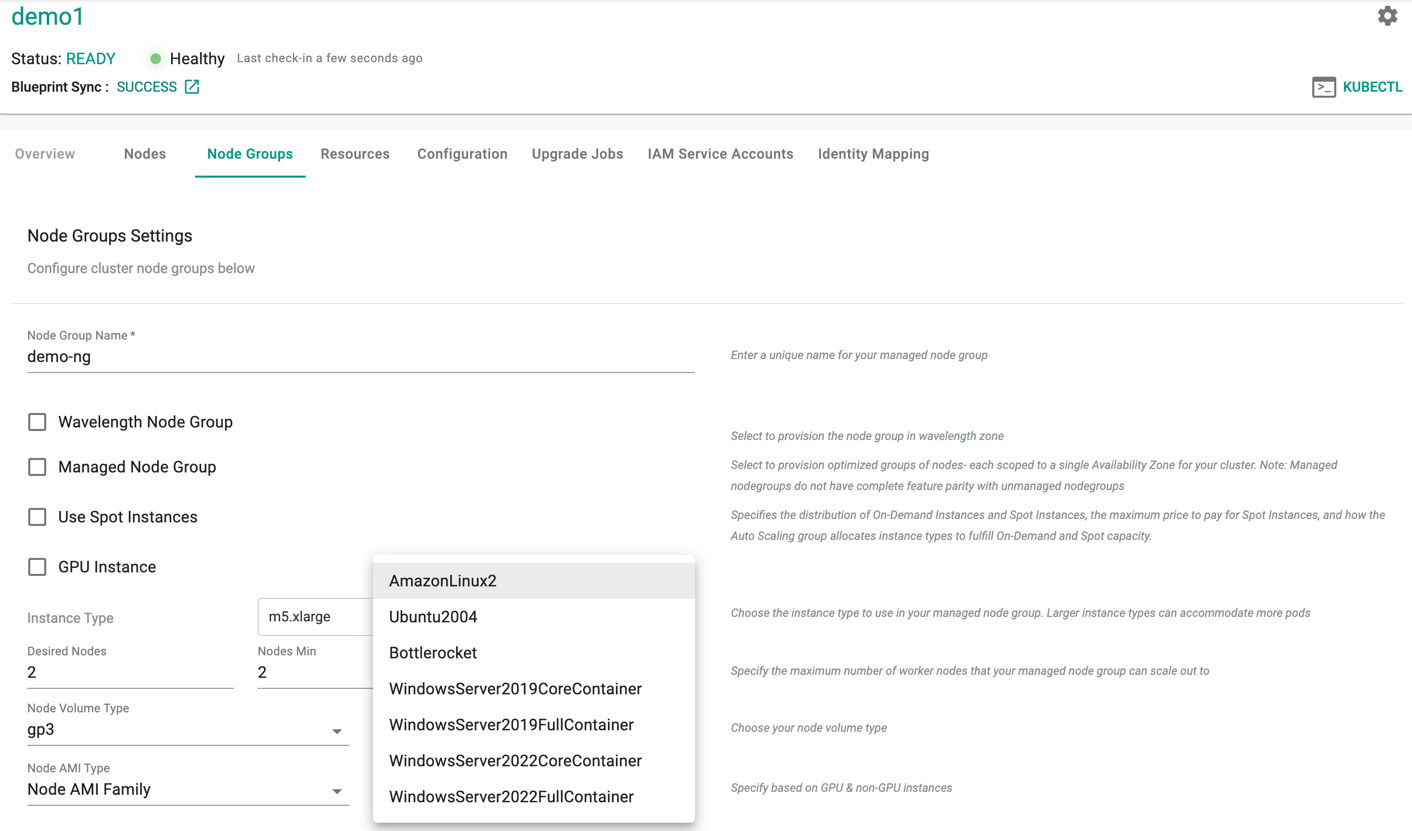

Node AMIs

Users can select from multiple Node AMI family types for the node group. In addition, users can also bring their own "Custom AMI".

| OS | Node AMI Family |
|---|---|
| Linux | Amazon Linux 2023, Amazon Linux 2, Ubuntu 18.04, Ubuntu 20.04, Bottlerocket |
| Windows | Windows Server 2019 Full, Windows Server 2019 Core, Windows Server 1909 Core, Windows Server 2004 Core |
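As a sketch, the AMI family is typically chosen per node group. The snippet below uses eksctl's `amiFamily` values, assuming the controller's spec accepts the same identifiers; the node group names are hypothetical.

```yaml
# eksctl-style sketch: selecting an AMI family per node group (names are hypothetical)
nodeGroups:                       # self-managed
  - name: ubuntu-ng
    amiFamily: Ubuntu2004
    instanceType: m5.large
managedNodeGroups:                # AWS-managed
  - name: al2023-ng
    amiFamily: AmazonLinux2023
    instanceType: m5.large
  - name: bottlerocket-ng
    amiFamily: Bottlerocket
    instanceType: m5.large
```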

Important

- Bottlerocket node groups support proxy configuration.

- Amazon Linux 2 nodes are bootstrapped only with bootstrap.sh, while Amazon Linux 2023 nodes are bootstrapped with a NodeConfig.
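For Amazon Linux 2023, a minimal NodeConfig (read on the node by `nodeadm`) might look like the sketch below. It is usually delivered in the instance user data as a MIME multipart section with content type `application/node.eks.aws`. The cluster name, endpoint, CA data, service CIDR, and node label are hypothetical placeholders to replace with your cluster's values.

```yaml
# Amazon Linux 2023 NodeConfig sketch; all values are hypothetical placeholders
apiVersion: node.eks.aws/v1alpha1
kind: NodeConfig
spec:
  cluster:
    name: demo-cluster
    apiServerEndpoint: https://EXAMPLE0123456789.gr7.us-west-2.eks.amazonaws.com
    certificateAuthority: BASE64_ENCODED_CLUSTER_CA
    cidr: 10.100.0.0/16              # the cluster's Kubernetes service CIDR
  kubelet:
    flags:
      - --node-labels=role=worker    # extra kubelet flag (hypothetical label)
```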

Self Managed Node Groups

Self Managed node groups are essentially user-provisioned EC2 instances or Auto Scaling Groups that are registered as worker nodes with the EKS control plane. To provision EC2 instances as EKS workers, you need to ensure that the following criteria are satisfied:

- The AMI has all the components required to act as a Kubernetes node (at a minimum, the kubelet and a container runtime).

- The associated security group allows communication with the control plane and other worker nodes in the cluster.

- The instances' user data or boot scripts include a step to register with the EKS control plane.

- The IAM role used by the worker nodes is registered as a user in the cluster.
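For the last criterion, clusters that authenticate through the `aws-auth` ConfigMap map the node IAM role into the `system:nodes` group roughly as sketched below (clusters using EKS access entries register the role through the EKS API instead). The account ID and role name are hypothetical.

```yaml
# aws-auth ConfigMap sketch; the role ARN is a hypothetical placeholder
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-auth
  namespace: kube-system
data:
  mapRoles: |
    - rolearn: arn:aws:iam::111122223333:role/demo-node-role
      username: system:node:{{EC2PrivateDNSName}}
      groups:
        - system:bootstrappers
        - system:nodes
```

If the ConfigMap already exists, add the entry to it rather than replacing the object, so existing role mappings are not lost.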

Note

On EKS optimized AMIs, the user data is handled by the bootstrap.sh script installed on the AMI.
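When a custom AMI based on Amazon Linux 2 is used, the boot script can call bootstrap.sh explicitly. A sketch in eksctl's ClusterConfig format, assuming the custom image keeps the script at its default path `/etc/eks/bootstrap.sh`; the AMI ID, cluster name, and label are hypothetical.

```yaml
# eksctl-style sketch: self-managed node group on a custom AL2-based AMI
nodeGroups:
  - name: custom-ami-ng
    ami: ami-0123456789abcdef0        # hypothetical custom AMI ID
    amiFamily: AmazonLinux2
    instanceType: m5.large
    overrideBootstrapCommand: |
      #!/bin/bash
      # Register this instance with the control plane via the AMI's bootstrap script
      /etc/eks/bootstrap.sh demo-cluster --kubelet-extra-args '--node-labels=role=worker'
```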

The Controller streamlines and automates all of these steps as part of the provisioning process, essentially providing a custom, managed experience for users.

Considerations

Self managed node groups do not benefit from any managed services provided by AWS. The user needs to configure everything including the AMI to use, Kubernetes API access on the node, registering nodes to EKS, graceful termination, etc. The Controller helps streamline and automate the entire workflow. On the flip side, self managed node groups give users the most flexibility in configuring their worker nodes. Users have complete control over the underlying infrastructure and can customize all the nodes to suit their preference.

Managed Node Groups

Managed Node Groups automate the provisioning and lifecycle management of the EKS cluster's worker nodes. With this configuration, AWS takes on the operational burden for the following items:

- Running the latest EKS optimized AMI.

- Gracefully draining nodes before termination during a scale down event.

- Gracefully rotating nodes to update the underlying AMI.

- Applying labels to the resulting Kubernetes Node resources.

While Managed Node Groups provide a managed experience for the provisioning and lifecycle of EC2 instances, they do not configure horizontal or vertical autoscaling. Managed Node Groups also do not automatically update the underlying AMI to pick up OS patches or Kubernetes version updates; the user still needs to trigger a Managed Node Group update manually.
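Because managed node groups do not autoscale on their own, `minSize` and `maxSize` only bound the Auto Scaling Group; a separately deployed autoscaler (for example, the Kubernetes Cluster Autoscaler) is what moves the size within those bounds. A hedged eksctl-style sketch with hypothetical names:

```yaml
# eksctl-style sketch: managed node group bounds, labels, and rolling-update settings
managedNodeGroups:
  - name: managed-ng
    instanceType: m5.large
    minSize: 2              # ASG lower bound
    maxSize: 6              # ASG upper bound; an autoscaler is still needed to use it
    desiredCapacity: 3
    labels:
      workload: general     # applied to the resulting Kubernetes Node objects
    updateConfig:
      maxUnavailable: 1     # nodes replaced at a time when an update is triggered
```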

Considerations

With Managed Node Groups:

- SSH access is possible only with an EC2 key pair, so a single, shared key pair must be used for all SSH access.

- Users have limited control over the security group rules for remote access. When you specify an EC2 key pair on a Managed Node Group, the security group by default opens port 22 to the whole world (0.0.0.0/0). You can restrict access further by specifying source security group IDs, but you cannot restrict access by CIDR block. This makes it hard to expose access over a peered VPC connection or Direct Connect, where the source security group may not live in the same account.

- Using managed node groups with Spot Instances requires significantly less operational effort than using self-managed nodes.
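The SSH and Spot considerations above can be sketched in eksctl's ClusterConfig format as follows. The key pair name and security group ID are hypothetical, and the source security group is what narrows port 22 from 0.0.0.0/0.

```yaml
# eksctl-style sketch: Spot-backed managed node group with restricted SSH
managedNodeGroups:
  - name: spot-ng
    spot: true
    instanceTypes: ["m5.large", "m5a.large", "m4.large"]   # mixed types improve Spot availability
    desiredCapacity: 2
    ssh:
      allow: true
      publicKeyName: shared-ops-key                        # hypothetical shared EC2 key pair
      sourceSecurityGroupIds: ["sg-0123456789abcdef0"]     # hypothetical; limits port 22 to this group
```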

Windows Node Groups

Amazon EKS supports Windows nodes, which allow you to run Windows containers.

Prerequisites

- A Linux node group with active Linux nodes is required to run the VPC resource controller and CoreDNS, because Microsoft Windows does not support host-networking mode. Since the Linux node group is critical to the functioning of the cluster, it is recommended to have at least two t2.large Linux nodes to ensure high availability.

- Ensure that you have disabled "managed ingress" in the cluster blueprint, because it collides with the port used by the VPC controller.

Add Windows Node Group

Users can add a Windows node group the same way they add a Linux node group. The Windows AMI family supports both managed and self-managed node groups.
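A sketch of the prerequisite Linux node group alongside a Windows node group, in eksctl's ClusterConfig format (an assumption about the spec layout). The node group names and the Windows instance type are hypothetical; the Linux sizing follows the two-node t2.large recommendation above.

```yaml
# eksctl-style sketch: Linux node group (prerequisite) plus a Windows node group
managedNodeGroups:
  - name: linux-ng
    instanceType: t2.large
    minSize: 2            # at least two Linux nodes for high availability
    maxSize: 3
    desiredCapacity: 2
  - name: windows-ng
    amiFamily: WindowsServer2019FullContainer
    instanceType: m5.large
    desiredCapacity: 2
```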

Note

The self-service wizard does not show or allow adding a Windows node group until at least one Linux-based node group is attached to the EKS cluster.

Windows AMIs

The Amazon EKS optimized AMIs for Windows are built on top of Windows Server 2019 and are configured to serve as the base image for Amazon EKS nodes. The AMI includes Docker and the kubelet out of the box. Windows Server has two primary release channels.

Long-Term Servicing Channel (LTSC)

- Currently Windows Server 2019.

- A new major version of Windows Server is released every 2-3 years

- 5 years of mainstream support and 5 years of extended support.

Semi-Annual Channel

- Currently Windows Server 2004.

- New releases available twice a year, in spring and fall.

- Each release in this channel will be supported for 18 months from the initial release.

VPC Resource Controller

The controller automatically installs and configures the VPC resource controller as part of the cluster provisioning process.

Visibility and Monitoring

Users can use the console to view details about their Windows node groups and scale them up or down as required.

Scale Node Group

The process to scale a Windows node group using the controller is identical to the process for Linux node groups.

Considerations

There are a number of considerations to take into account when using Windows worker nodes on Amazon EKS.

Node Selectors

Make sure that workloads use the correct node selectors so that they are scheduled on the correct nodes (Windows or Linux).

For Windows workloads:

```yaml
nodeSelector:
  kubernetes.io/os: windows
  kubernetes.io/arch: amd64
```

For Linux workloads:

```yaml
nodeSelector:
  kubernetes.io/os: linux
  kubernetes.io/arch: amd64
```
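For context, the node selector sits under `spec.template.spec` of a workload. A hedged sketch of a Windows Deployment; the name and the IIS image are illustrative choices, and the container image's Windows version must be compatible with the node's Windows Server version (LTSC 2019 here).

```yaml
# Windows Deployment sketch; name and image are illustrative
apiVersion: apps/v1
kind: Deployment
metadata:
  name: windows-iis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: windows-iis
  template:
    metadata:
      labels:
        app: windows-iis
    spec:
      nodeSelector:               # keeps the pods off Linux nodes
        kubernetes.io/os: windows
        kubernetes.io/arch: amd64
      containers:
        - name: iis
          image: mcr.microsoft.com/windows/servercore/iis:windowsservercore-ltsc2019
          ports:
            - containerPort: 80
```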

Fargate

AWS Fargate is a managed serverless compute engine for containers that works with Amazon EKS. Fargate removes the need to provision and manage servers. Fargate allows developers to specify and pay for resources per application. The use of Fargate can also improve security because applications are isolated by design.

To run pods on Fargate, an EKS cluster requires a Fargate profile, which contains the information needed to instantiate pods on Fargate:

- Pod Execution Role and Subnets: The pod execution role grants the permissions needed to run the pod (for example, pulling container images), and the subnets define the networking location in which the pod runs. This allows the same networking and security permissions to be applied to multiple Fargate pods and makes it easier to migrate existing pods on a cluster to Fargate.

- Selectors: Define which pods should run on Fargate (by namespace and labels).

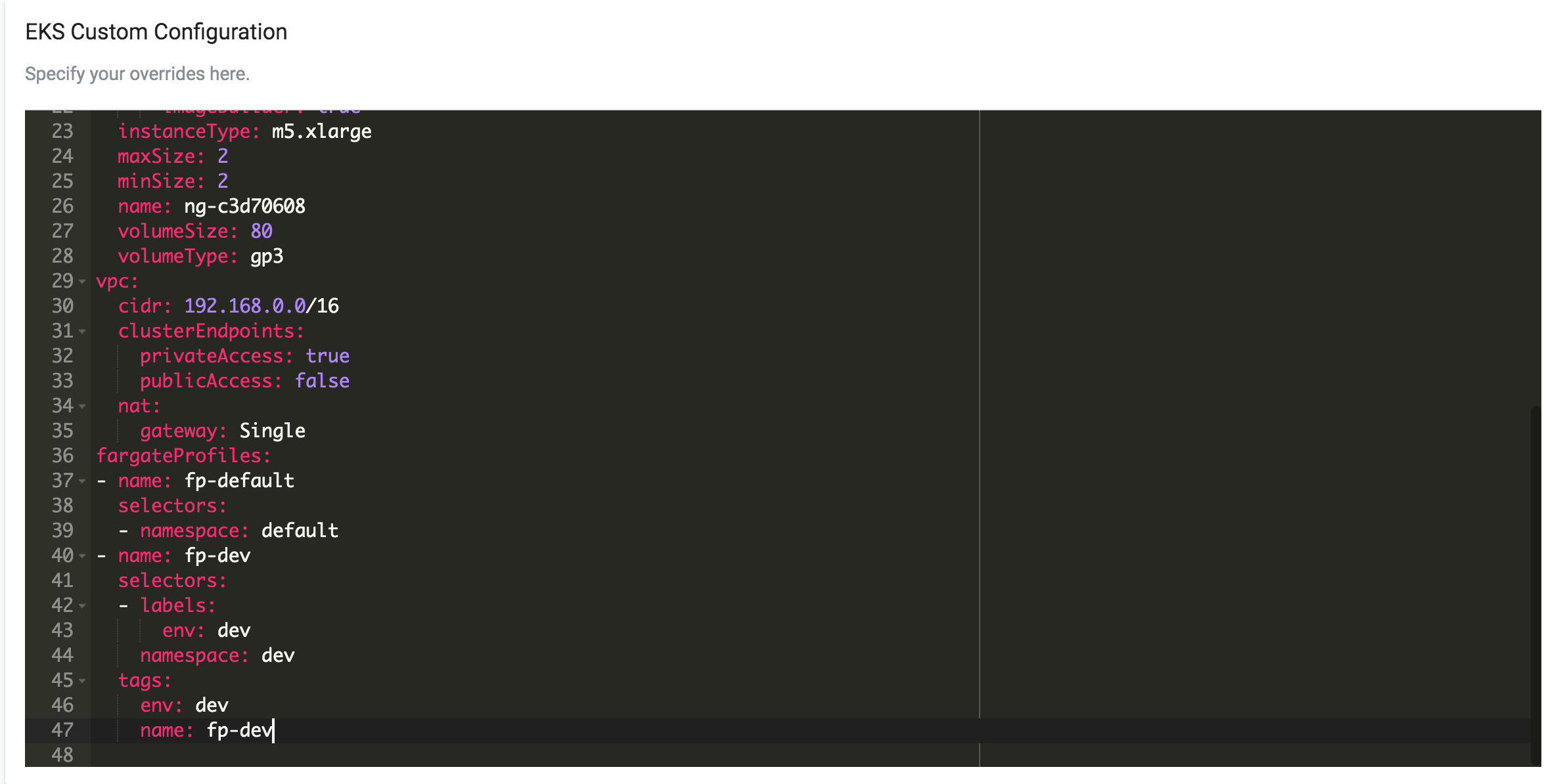

To create a Fargate profile, follow these steps:

- Click on "Clusters" on the left panel

- Click on the "New Cluster" button

- Select "Create a New Cluster" and click Continue

- Select "Environment" as "Public Cloud"

- Select "Cloud Provider" as "AWS"

- Select "Kubernetes Distribution" as "Amazon EKS"

- Give it a name and click Continue

- Configure the required settings for VPC, nodegroup, IAM, etc.

- Click "SAVE & CUSTOMIZE"

You will see a cluster YAML spec with all the configuration details based on the information provided on the previous screen. Add the following snippet to the end of the YAML spec and customize it according to your needs.

```yaml
fargateProfiles:
  - name: fp-default
    selectors:
      - namespace: default
  - name: fp-dev
    selectors:
      - labels:
          env: dev
        namespace: dev
    tags:
      env: dev
      name: fp-dev
```

- Save the changes and provision the cluster

In the above example, workloads that meet the following criteria will be scheduled onto Fargate:

- all workloads in the "default" namespace

- all workloads in the "dev" namespace whose labels match the specified label selector (env: dev)
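A hedged sketch of a workload that the `fp-dev` profile above would match. Fargate matches the labels on the pods, so `env: dev` must appear in the pod template, not only on the Deployment object. The name and image are hypothetical choices.

```yaml
# Deployment whose pods match the fp-dev Fargate profile (name and image are hypothetical)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dev-web
  namespace: dev            # matches the profile's namespace selector
spec:
  replicas: 2
  selector:
    matchLabels:
      app: dev-web
  template:
    metadata:
      labels:
        app: dev-web
        env: dev            # matches the profile's label selector
    spec:
      containers:
        - name: web
          image: nginx:1.25
          ports:
            - containerPort: 80
```

A pod in the `dev` namespace without the `env: dev` label does not match `fp-dev` and is scheduled onto the cluster's EC2 node groups instead.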

Note

Amazon EKS clusters can run managed node groups, self-managed node groups, and Fargate at the same time.

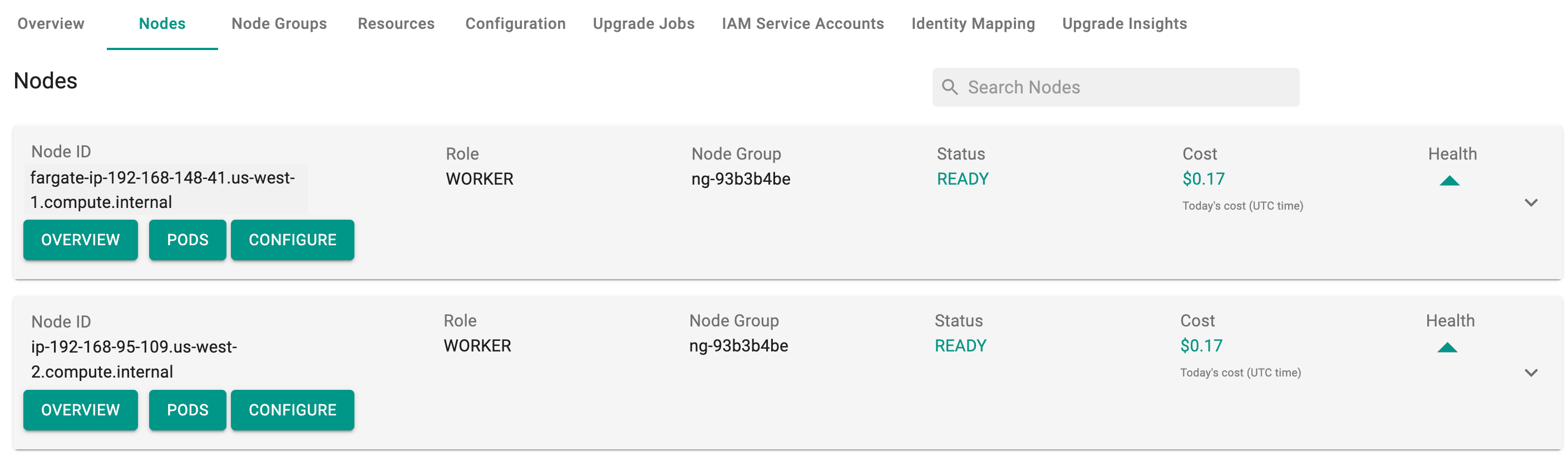

Once the cluster is up and a workload matching the above Fargate profile is deployed, Fargate nodes are created and can be seen under Cluster -> Nodes.

To view the cost details of each node, click the available cost link. This navigates you to the Cost Explorer.

Node Group Lifecycle

Amazon EKS clusters provisioned by the Controller start with one node group. Additional node groups can be added after initial provisioning. Users can also use the Controller to perform actions on node groups.

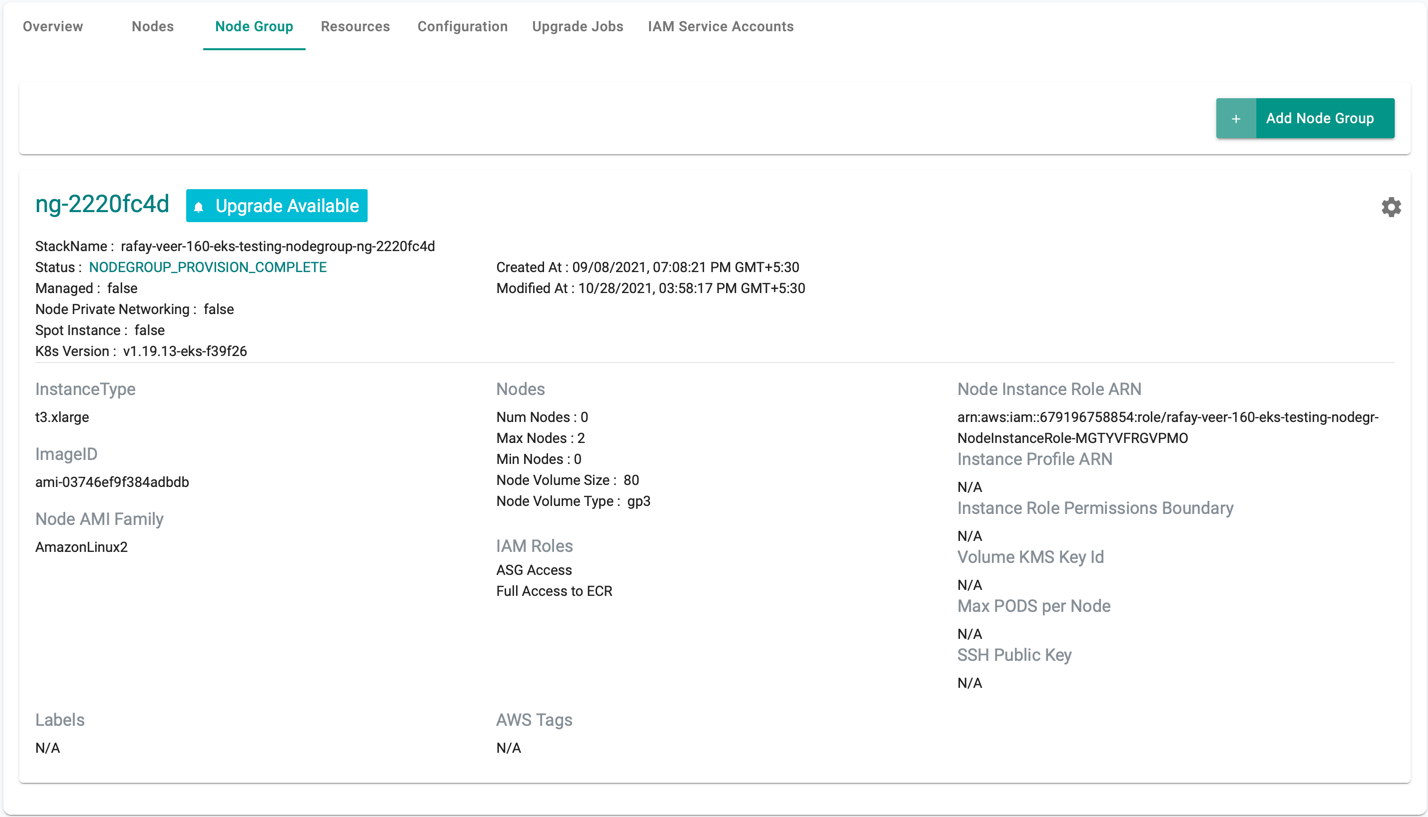

View Node Group Details

Click on Node Groups to view all the node groups and their details. In the example below, the EKS cluster has one node group.

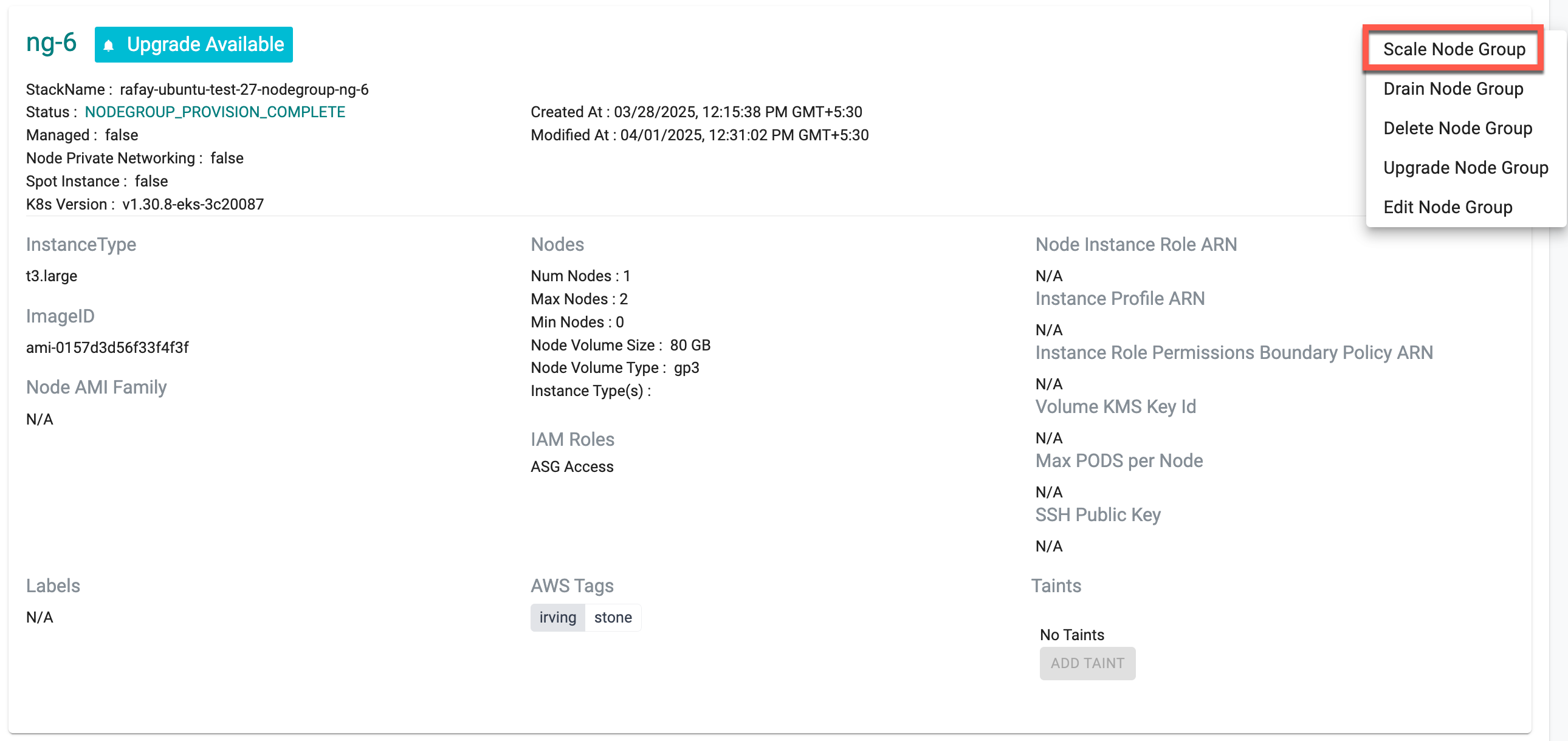

Scale Node Group¶

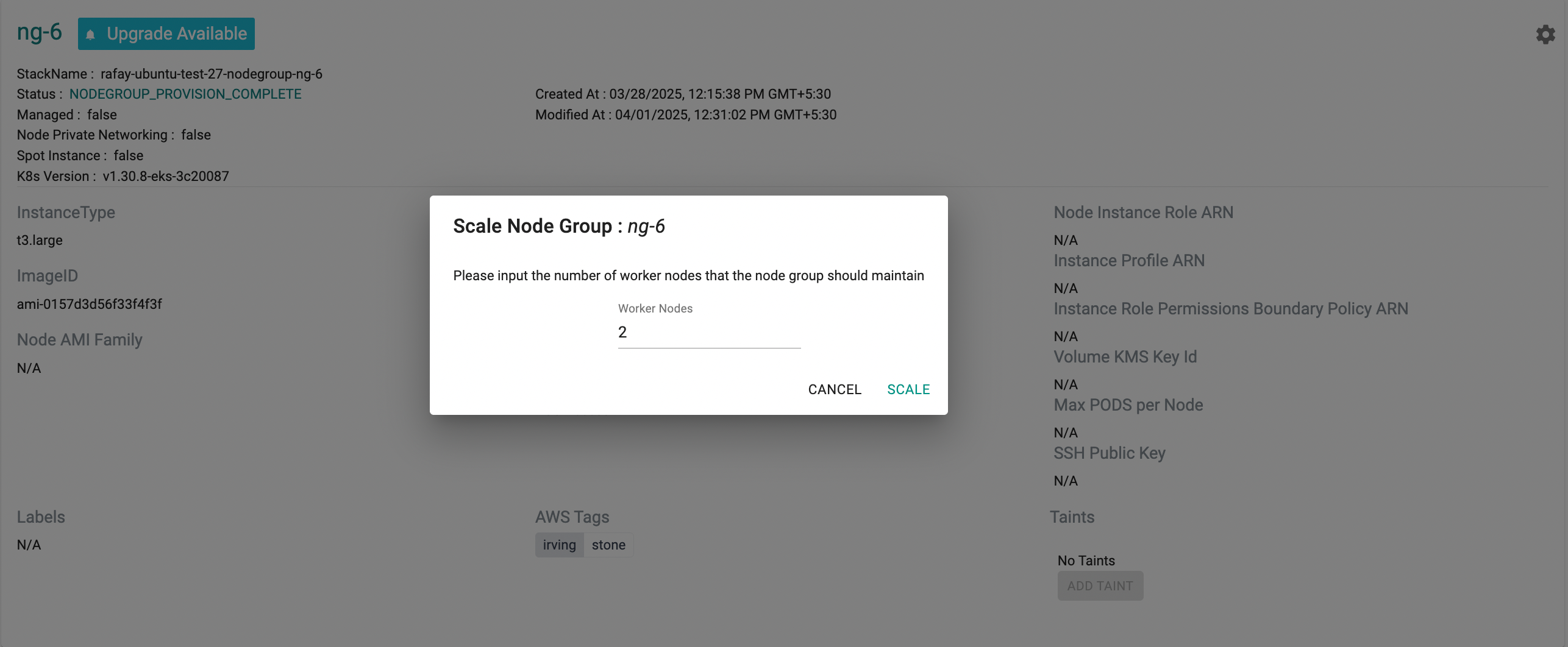

Click on the gear on the far right on a node group to view available actions for a node group.

This will present the user with a prompt for "desired" number of worker nodes. Depending on what is entered, the node group will be either "Scaled Up" or "Scaled Down"

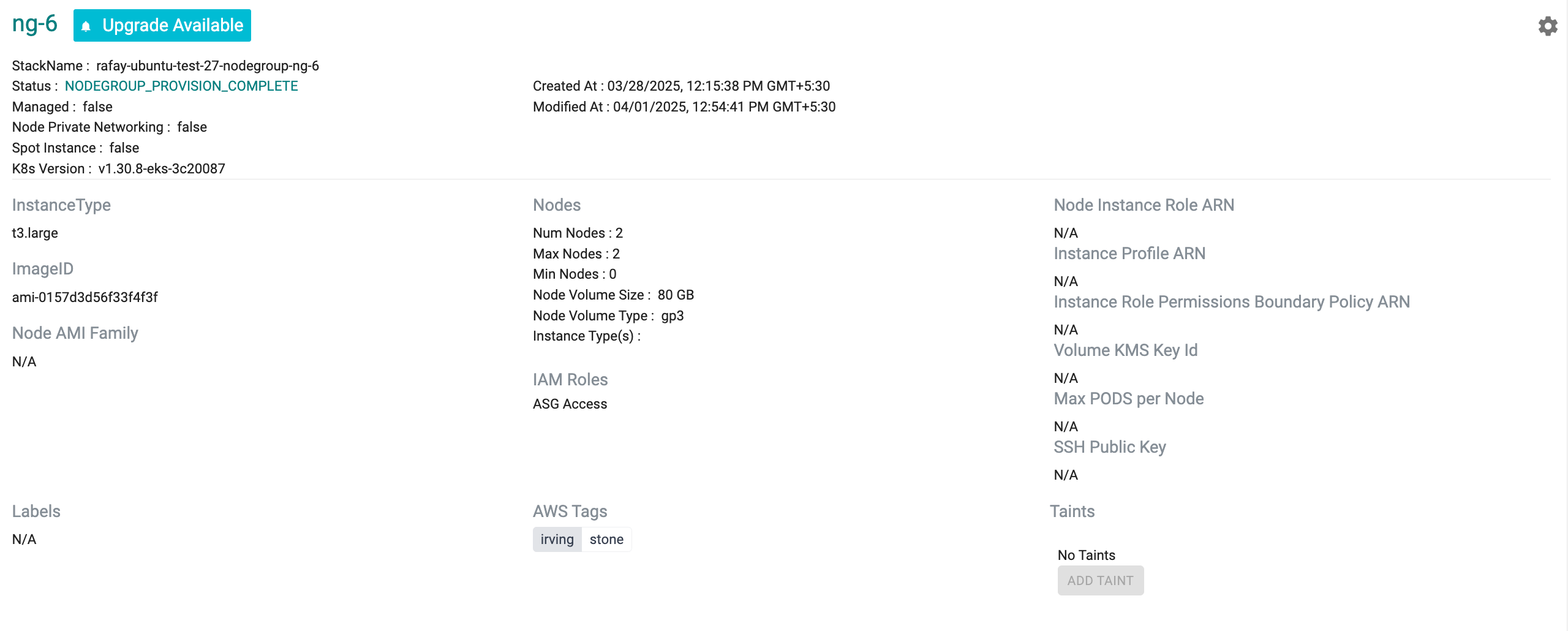

Scaling a node group can take approximately 5 minutes while the EC2 instances are provisioned, become fully operational, and are attached to the cluster. The user is provided with feedback and status. Illustrative screenshot below.

Important

Scaling down a node group does not explicitly drain the nodes before removing them from the Auto Scaling Group (ASG). Pods running on those nodes are terminated and rescheduled by Kubernetes onto available nodes.

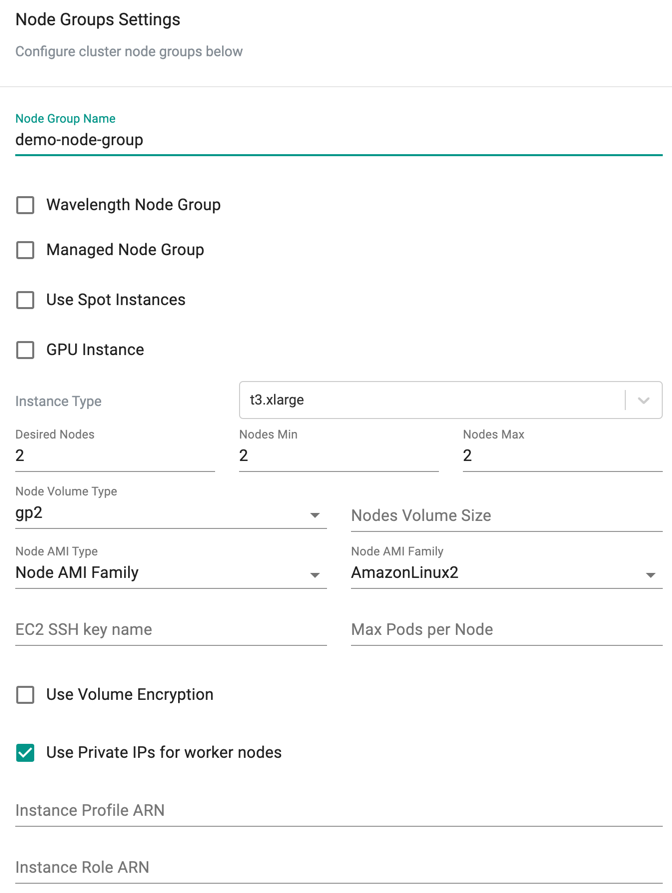

Add Node Group

Perform the following steps to add a node group:

- Click Add Node Group on the far right. The Node Group Settings screen appears.

- Select the required node group type and provide the required details.

- Click Provision Node Group.

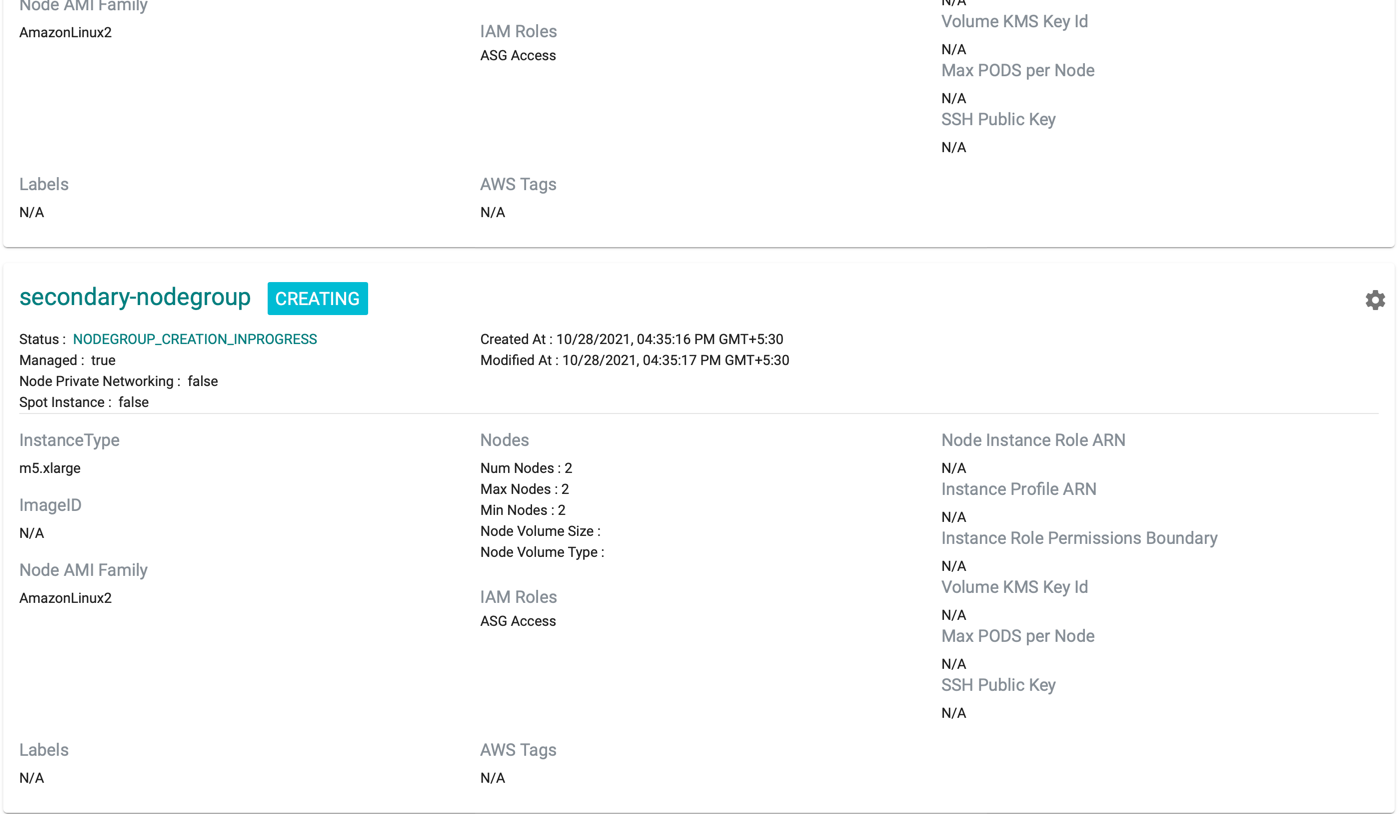

Adding a new node group can take approximately 5 minutes while the EC2 instances are provisioned, become fully operational, and are attached to the cluster. The user is provided with feedback and status. Illustrative screenshot below.

Drain Node Group

When the user drains a node group, its nodes are cordoned so that no new pods can be scheduled on them, and the existing pods are evicted so that they are relocated to other nodes.

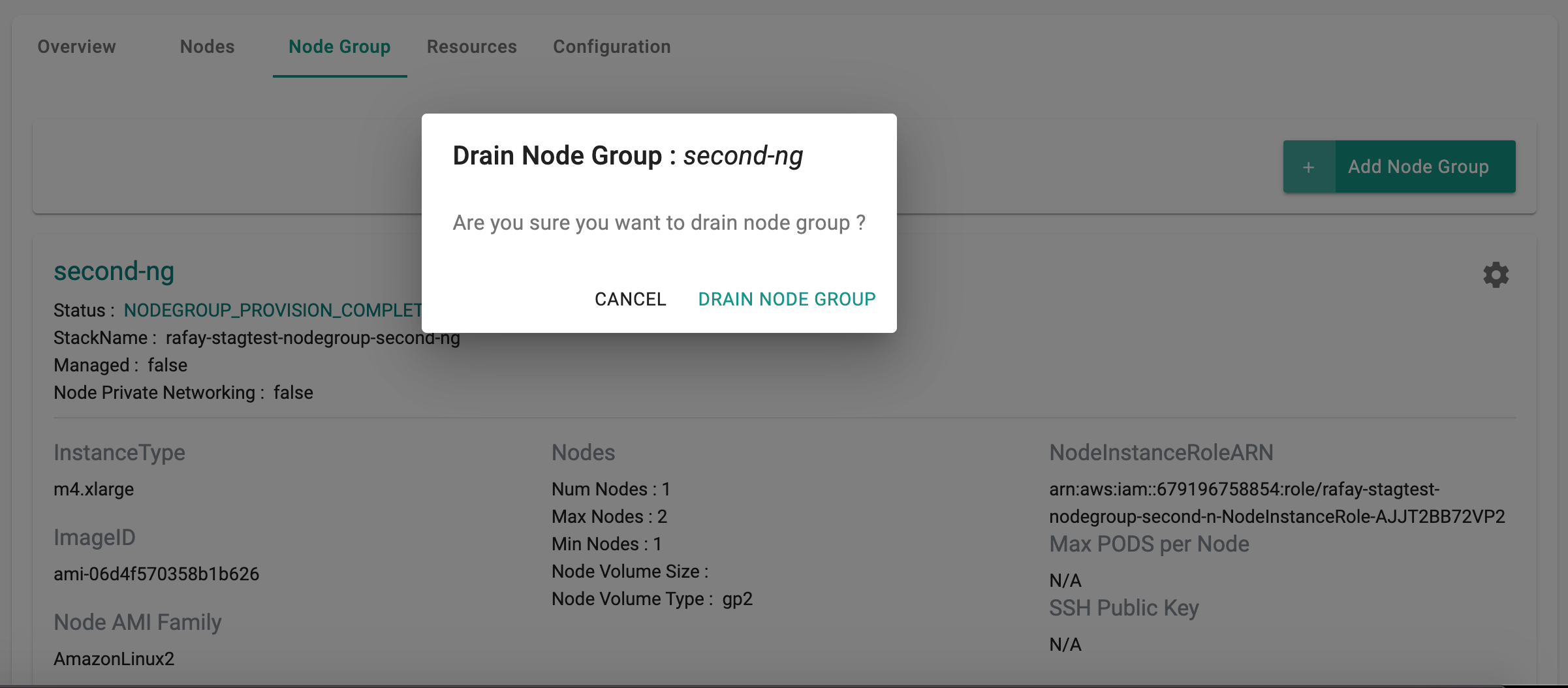

The user is provided a warning before the node group is drained.

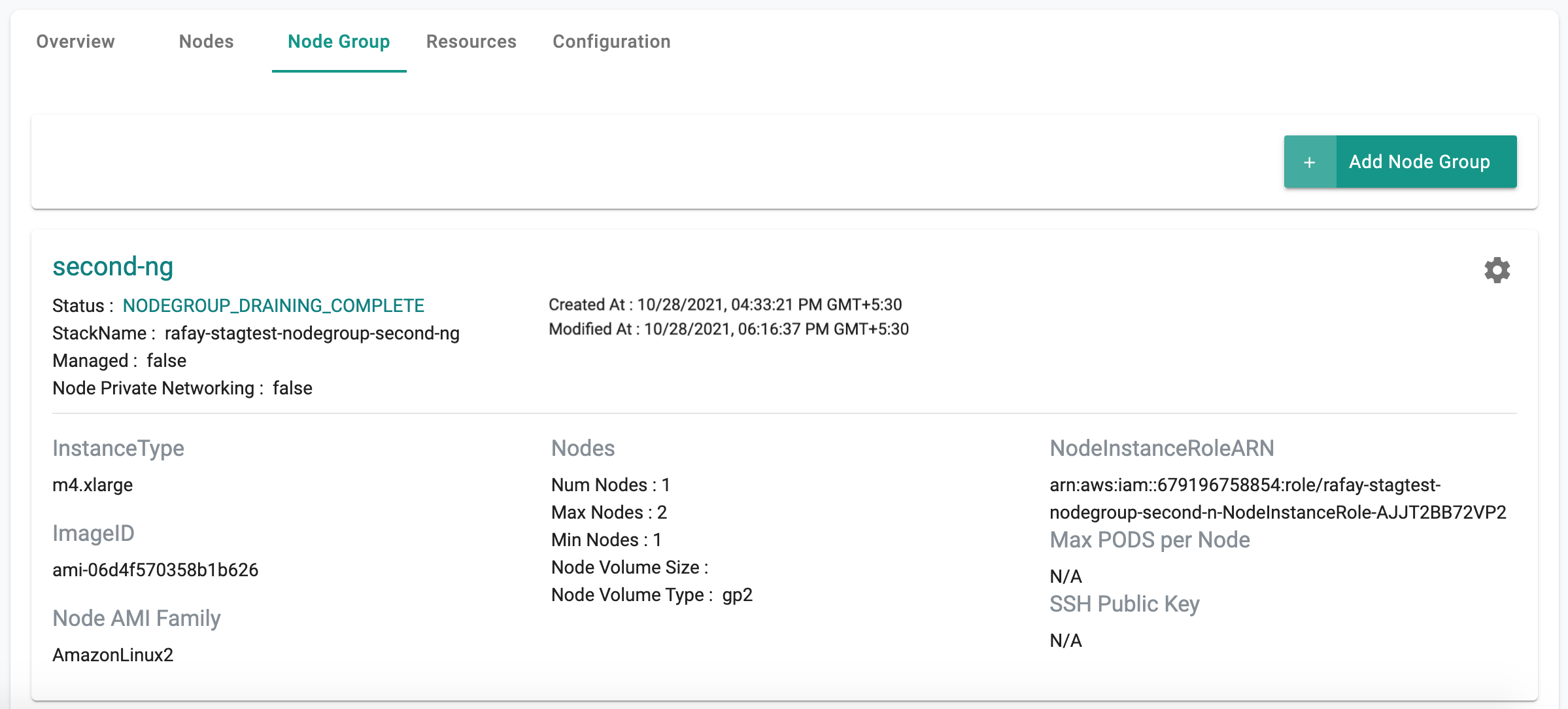

Draining a node group can take a few minutes. The user is provided with feedback and status once this is completed. Illustrative screenshot below

Users can leave a node group in a "drained" state for extended periods of time.
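Drains that evict pods through the Kubernetes Eviction API (as `kubectl drain` does) respect PodDisruptionBudgets, so a budget can keep a minimum number of replicas running while a node group is drained. Whether the controller's drain goes through the Eviction API is an assumption here, and the names and `app: web` label are hypothetical.

```yaml
# PodDisruptionBudget sketch; names and labels are hypothetical
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: web-pdb
  namespace: default
spec:
  minAvailable: 1          # evictions that would drop below one ready pod are refused
  selector:
    matchLabels:
      app: web
```

A budget like this does not help during a scale-down, because scaling down removes nodes without draining them first.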

Delete Node Group

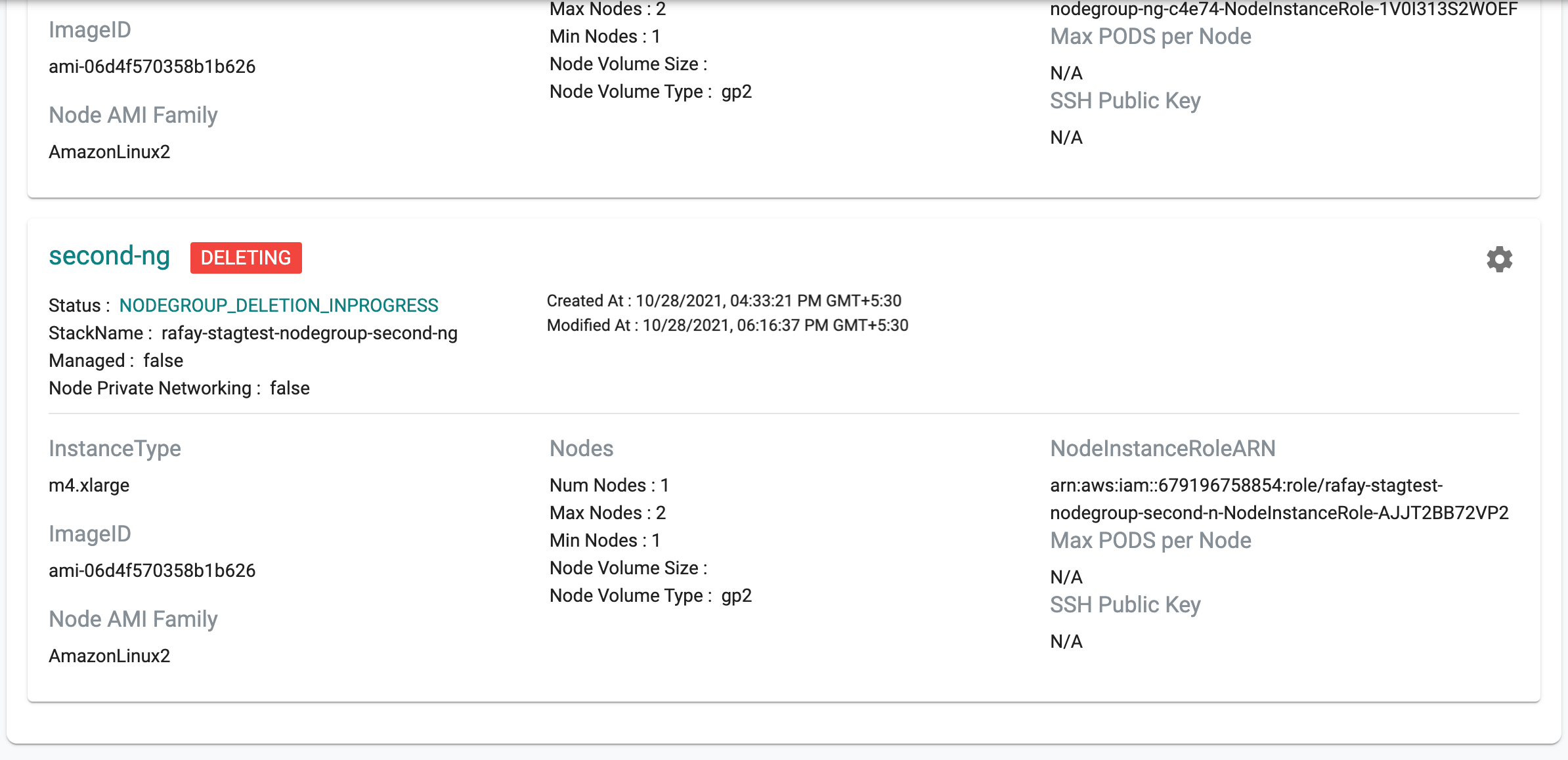

When the user deletes a node group, the Controller ensures that the node group is drained first before it is deleted.

Deleting a node group can take approximately 5 minutes while the EC2 instances are deprovisioned and the CloudFormation (CF) templates are reconciled. The user is provided with feedback and status during this process. Illustrative screenshot below.

Upgrade Node Groups¶

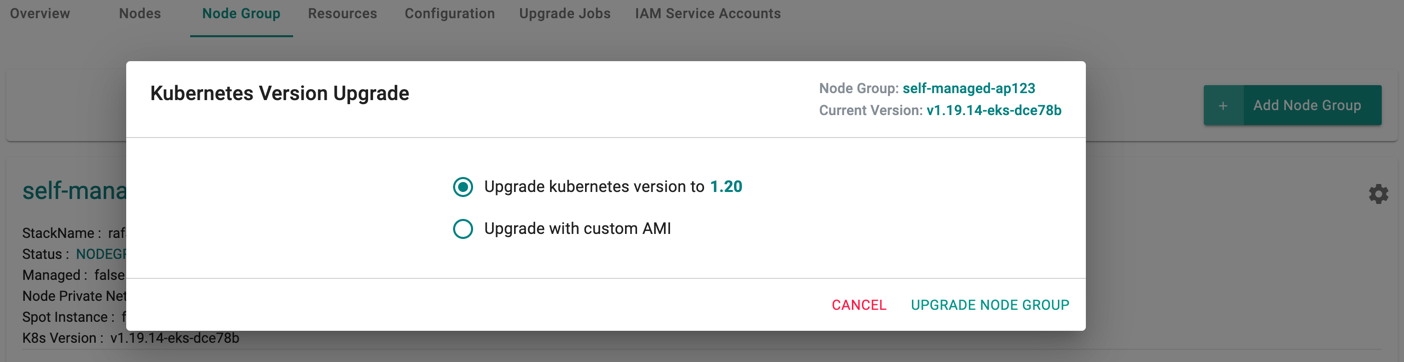

When the user upgrades a node group, the controller ensures that the node group is upgraded to the chosen Kubernetes version

The user can select either Upgrade to the latest kubernetes version or Upgrade with custom AMI and click Upgrade Node Group

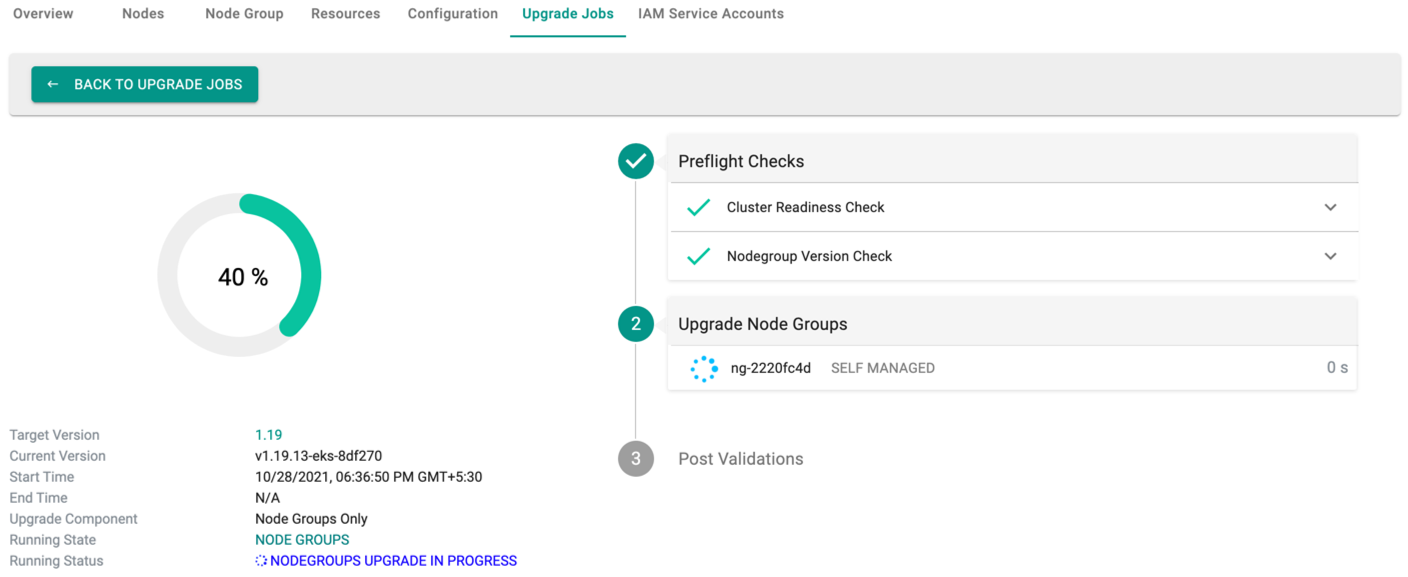

Upgrading a node group can take approximately 5 minutes, and the user is provided with the upgrade status as shown below.

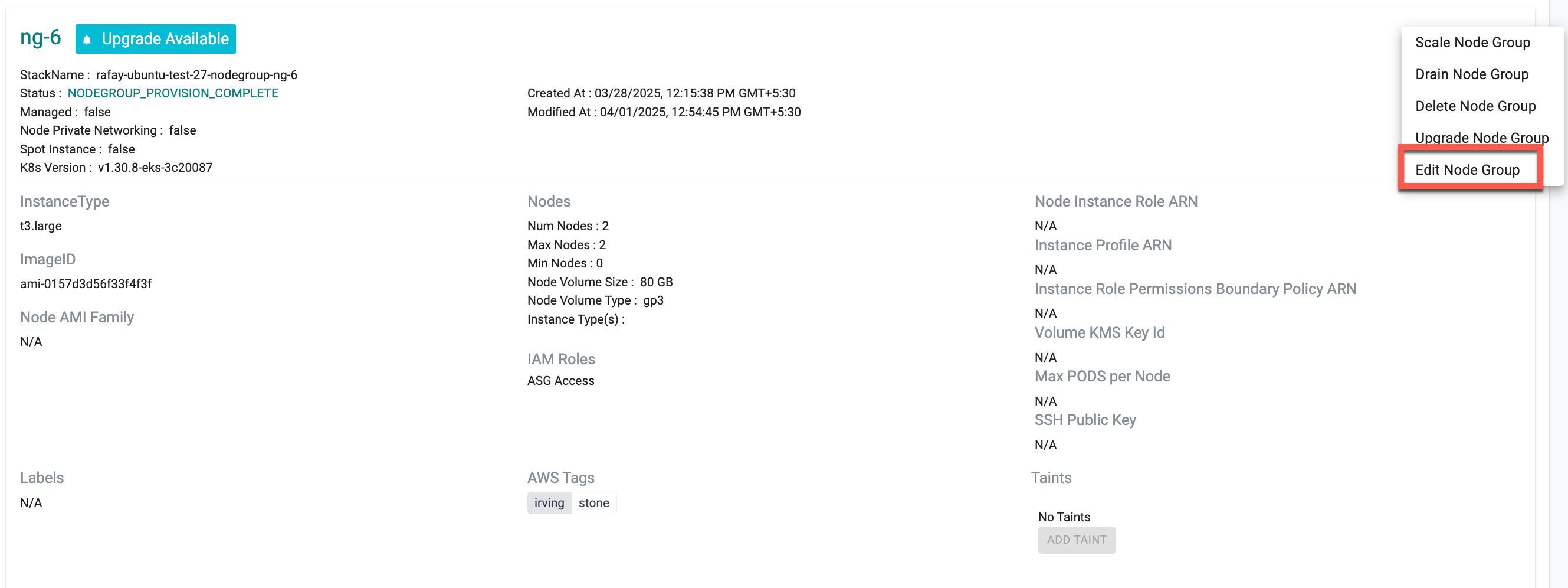

Edit Node Group

Users can add tags to both managed and self-managed node groups by using the Edit Node Group option.

-

To edit a self-managed node group:

- Provide the desired tags

-

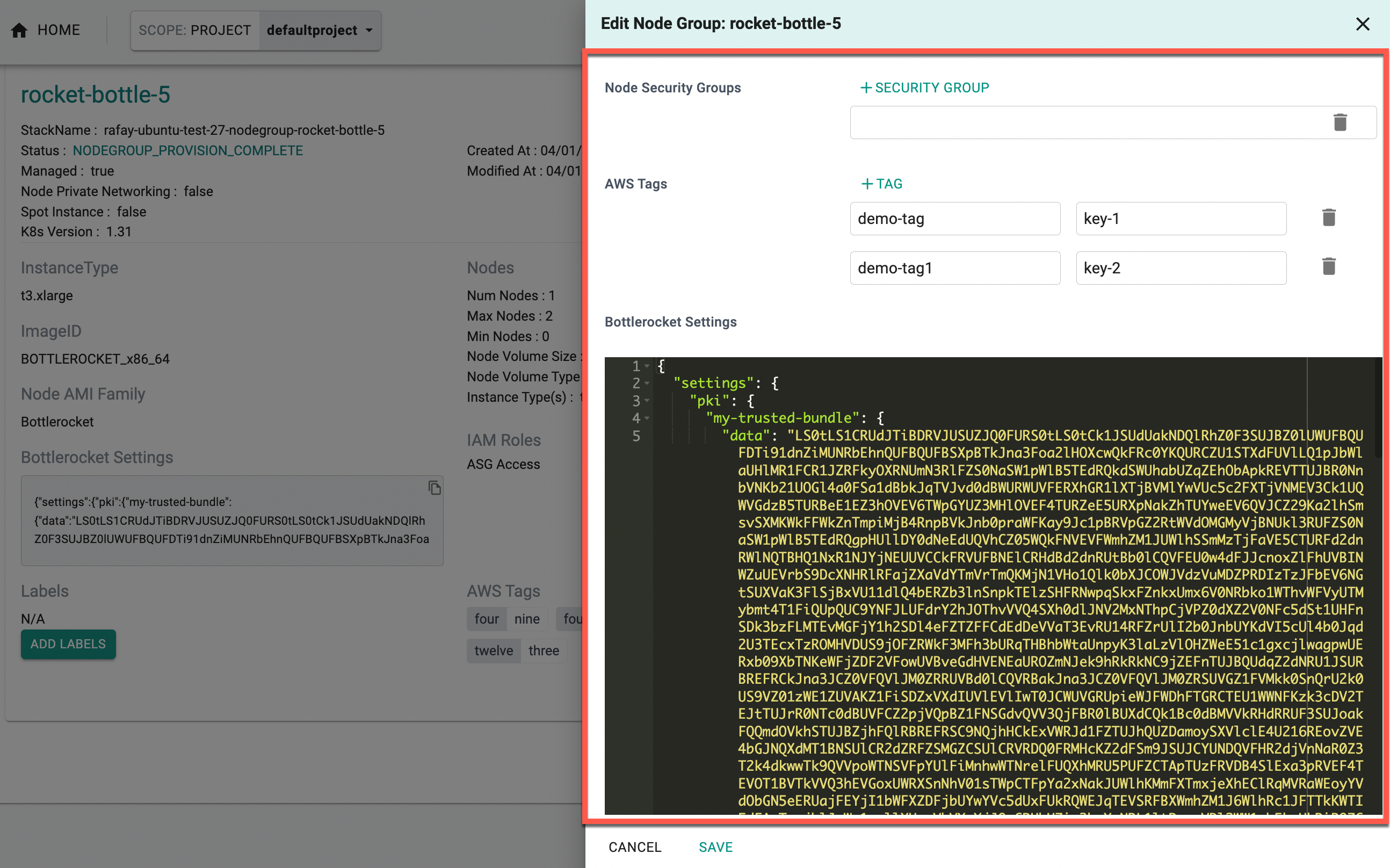

To edit a managed node group:

- Specify the Node Security Groups and AWS Tags

-

To edit a node group using the Bottlerocket AMI family:

- Specify the Node Security Groups, AWS Tags, and Bottlerocket Settings

Important

- At least one field must be specified to edit a node group, but not all fields are required.

- Updating tags recycles the nodes, because it also updates the launch template.
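The editable fields roughly correspond to the eksctl-style keys sketched below; whether the Edit Node Group form maps onto exactly these keys is an assumption. The Bottlerocket `network` settings show the proxy support mentioned earlier; the security group ID, tag, and proxy address are hypothetical.

```yaml
# eksctl-style sketch: fields touched by Edit Node Group (values are hypothetical)
managedNodeGroups:
  - name: bottlerocket-ng
    amiFamily: Bottlerocket
    securityGroups:
      attachIDs: ["sg-0123456789abcdef0"]     # Node Security Groups
    tags:
      team: platform                          # AWS Tags; changing them recycles the nodes
    bottlerocket:
      settings:                               # Bottlerocket Settings
        network:
          https-proxy: "http://proxy.example.internal:3128"
          no-proxy: ["localhost", "127.0.0.1", "169.254.169.254"]
```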