Spot Instances

Rafay makes it easy for cluster administrators to save costs significantly by simplifying the use of EC2 Spot Instances on Amazon EKS clusters. Specifically, Rafay allows users to implement and codify several best practices for using Spot Instances, such as diversification, automated interruption handling, and using Auto Scaling groups to acquire capacity.

Overview

Spot Instances are spare Amazon EC2 capacity offered at significantly lower prices than On-Demand Instances. Spot capacity is divided into pools determined by instance type, Availability Zone (AZ), and AWS Region.

Spot Instance prices change based on the supply and demand of each Spot capacity pool. A Spot capacity pool is a set of unused EC2 instances with the same instance type (e.g., m5.xlarge), operating system, and AZ.

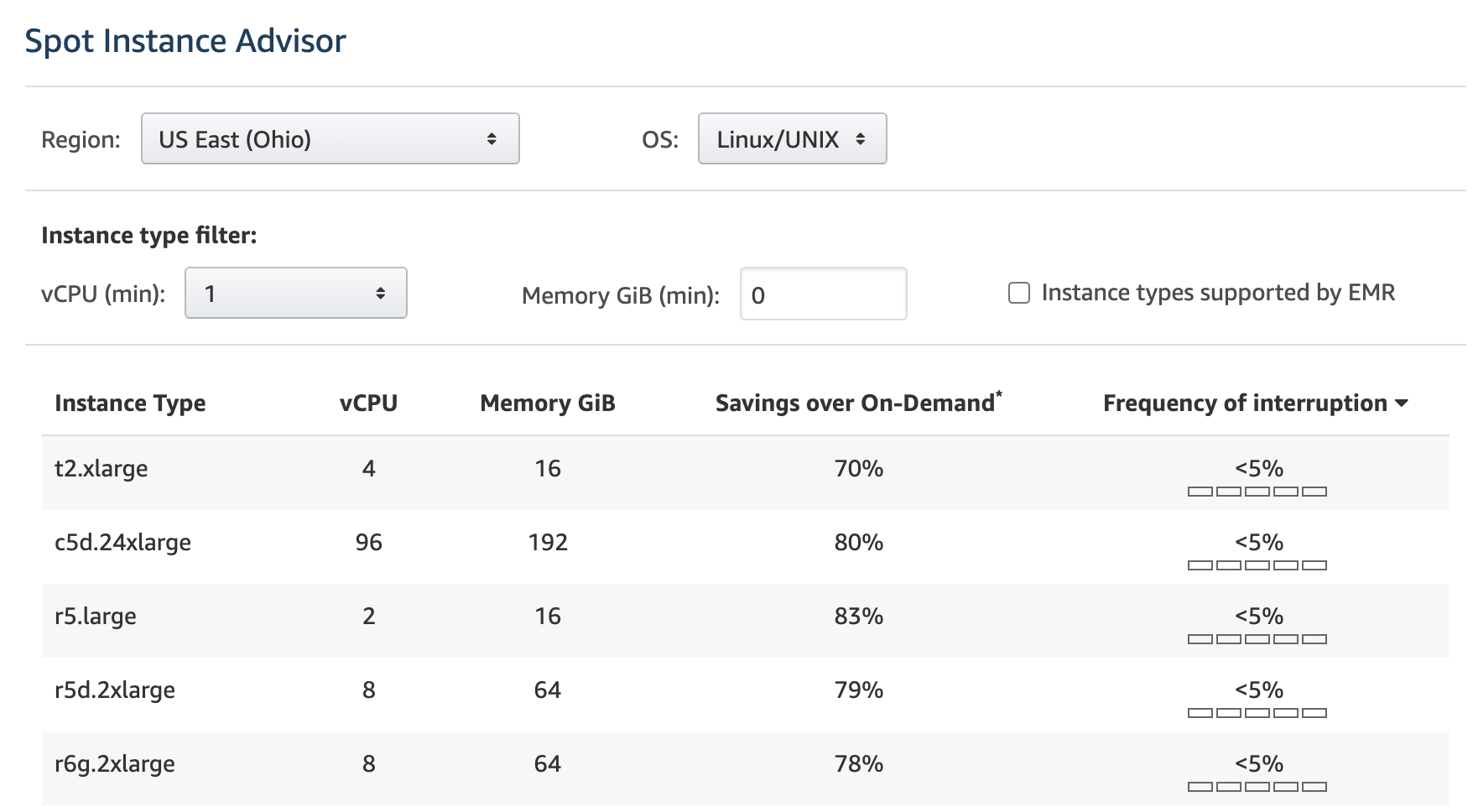

Spot Instances are available at discounts of up to 90% compared to On-Demand prices. AWS publishes the Spot Instance Advisor, which shows savings trends and the frequency of interruption for various instance types. An illustrative example is shown below.

Spot Policy

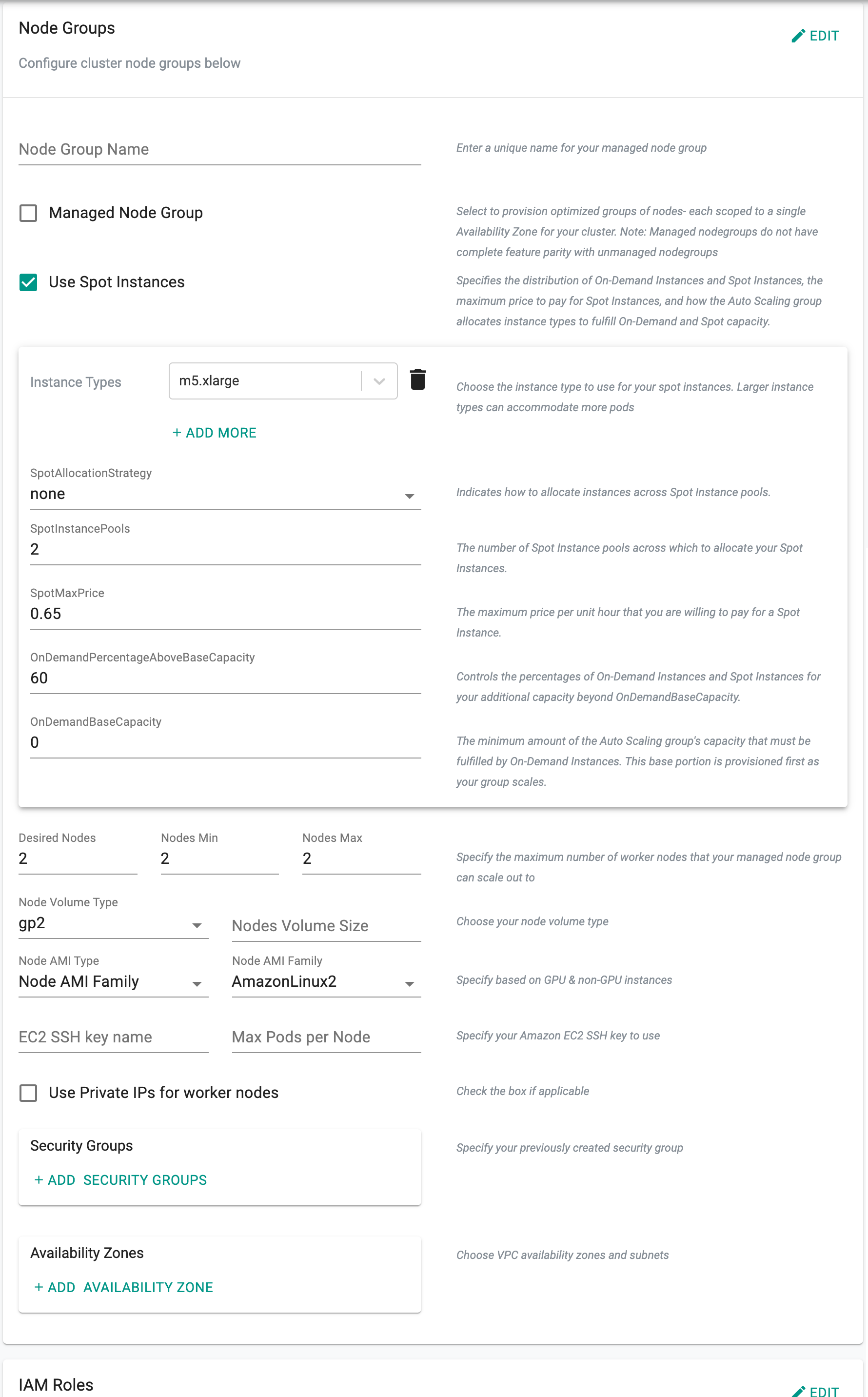

To use Spot Instances, enable "Use Spot Instances" in the Node Group configuration.

Instance Types

Specify multiple instance types for the node group.

Spot Allocation Strategy

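- Select the desired SpotAllocationStrategy (none, lowest-price, or capacity-optimized)

- Specify SpotInstancePools, the number of Spot pools to spread instances across (this applies only to the lowest-price strategy)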
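The difference between the lowest-price and capacity-optimized strategies can be illustrated with a deliberately simplified model. This is not how AWS implements Spot allocation: the pool data, prices, and function names below are hypothetical, and the model assumes that lowest-price spreads instances evenly across the SpotInstancePools cheapest pools, while capacity-optimized always picks the pool with the most spare capacity.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SpotPool:
    """One Spot capacity pool: an instance type in one Availability Zone."""
    instance_type: str
    az: str
    price: float         # hypothetical current Spot price, USD per hour
    spare_capacity: int  # hypothetical number of spare instances in the pool


def lowest_price(pools: List[SpotPool], spot_instance_pools: int, count: int) -> List[SpotPool]:
    """Toy model: spread `count` instances round-robin across the N cheapest pools."""
    cheapest = sorted(pools, key=lambda p: p.price)[:spot_instance_pools]
    return [cheapest[i % len(cheapest)] for i in range(count)]


def capacity_optimized(pools: List[SpotPool], count: int) -> List[SpotPool]:
    """Toy model: place each instance in the pool with the most spare capacity left."""
    remaining = {p: p.spare_capacity for p in pools}
    placed = []
    for _ in range(count):
        best = max(remaining, key=remaining.get)
        placed.append(best)
        remaining[best] -= 1
    return placed


# Hypothetical pools in one region.
pools = [
    SpotPool("m5.large", "us-west-2a", price=0.035, spare_capacity=40),
    SpotPool("m5.large", "us-west-2b", price=0.030, spare_capacity=5),
    SpotPool("m5.xlarge", "us-west-2a", price=0.070, spare_capacity=120),
]
print([p.az for p in lowest_price(pools, spot_instance_pools=2, count=4)])
print([p.instance_type for p in capacity_optimized(pools, count=3)])
```

In this toy data the cheapest pool is also the shallowest, so lowest-price keeps placing instances in a pool with only 5 spare instances, while capacity-optimized avoids it. That trade-off is why capacity-optimized tends to see fewer interruptions.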

Spot Max Price

Users can optionally set a maxPrice to cap the maximum price they are willing to pay for a Spot Instance. By default, maxPrice is set to the On-Demand price. Note that regardless of maxPrice, Spot Instances are charged at the current Spot market price.
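The rule that maxPrice is a ceiling, not the price you pay, can be sketched as a small function. This is a simplified model with a hypothetical function name and hypothetical prices: if the current Spot price is above maxPrice, no instance runs at that price; otherwise you pay the Spot price.

```python
from typing import Optional


def spot_hourly_charge(spot_price: float, on_demand_price: float,
                       max_price: Optional[float] = None) -> Optional[float]:
    """Return the hourly charge for one Spot Instance, or None when the
    current Spot price exceeds maxPrice (no capacity at this price)."""
    # maxPrice defaults to the On-Demand price when it is not set.
    cap = on_demand_price if max_price is None else max_price
    if spot_price > cap:
        return None
    # The instance is billed at the current Spot price, never at maxPrice itself.
    return spot_price


# Hypothetical prices in USD per hour.
print(spot_hourly_charge(0.035, on_demand_price=0.096))                  # default cap
print(spot_hourly_charge(0.035, on_demand_price=0.096, max_price=0.05))  # still billed at Spot price
print(spot_hourly_charge(0.035, on_demand_price=0.096, max_price=0.03))  # above cap
```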

On-Demand Capacity

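- OnDemandBaseCapacity: the minimum number of instances in the node group that are always On-Demand

- OnDemandPercentageAboveBaseCapacity: the percentage of capacity above the base that is On-Demand; the remainder is Spot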

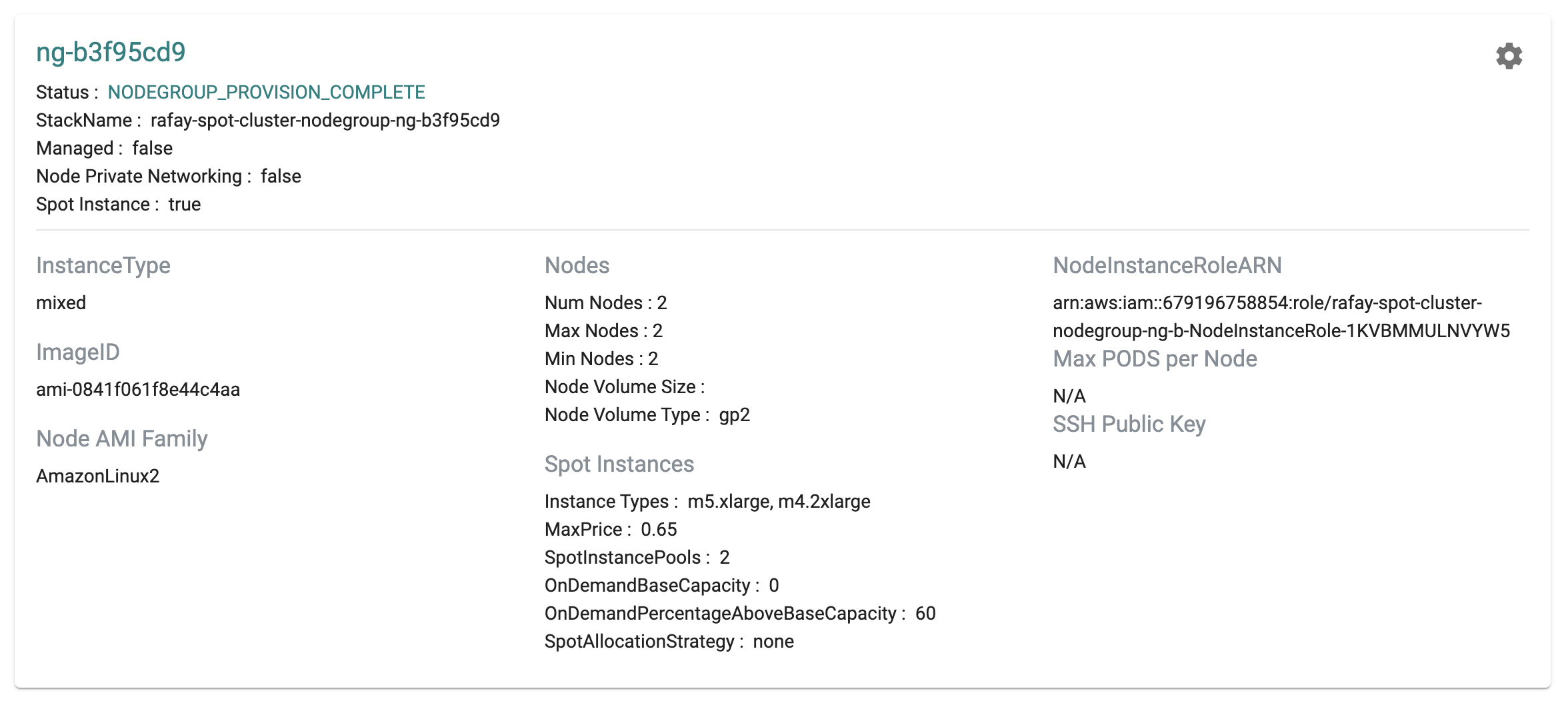
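The two On-Demand settings combine as follows: the first OnDemandBaseCapacity instances are On-Demand, and OnDemandPercentageAboveBaseCapacity sets the On-Demand share of everything above that base. A minimal sketch of the arithmetic; the function name is hypothetical, and it assumes that a fractional On-Demand count is rounded up in favor of On-Demand, as the AWS Auto Scaling documentation describes.

```python
from typing import Tuple


def capacity_split(total: int, on_demand_base: int, on_demand_pct_above_base: int) -> Tuple[int, int]:
    """Return (on_demand, spot) instance counts for a node group of `total` instances."""
    base = min(on_demand_base, total)
    above = total - base
    # Assumption: fractions are rounded up in favor of On-Demand (integer ceiling).
    on_demand_above = (above * on_demand_pct_above_base + 99) // 100
    on_demand = base + on_demand_above
    return on_demand, total - on_demand


print(capacity_split(2, on_demand_base=0, on_demand_pct_above_base=50))  # 50% Spot
print(capacity_split(2, on_demand_base=0, on_demand_pct_above_base=0))   # 100% Spot
print(capacity_split(5, on_demand_base=0, on_demand_pct_above_base=50))  # odd count
```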

View Current Spot Policy

For Spot-enabled node groups, users can view the current Spot policy in the section called "Spot Instances".

Update Spot Policy

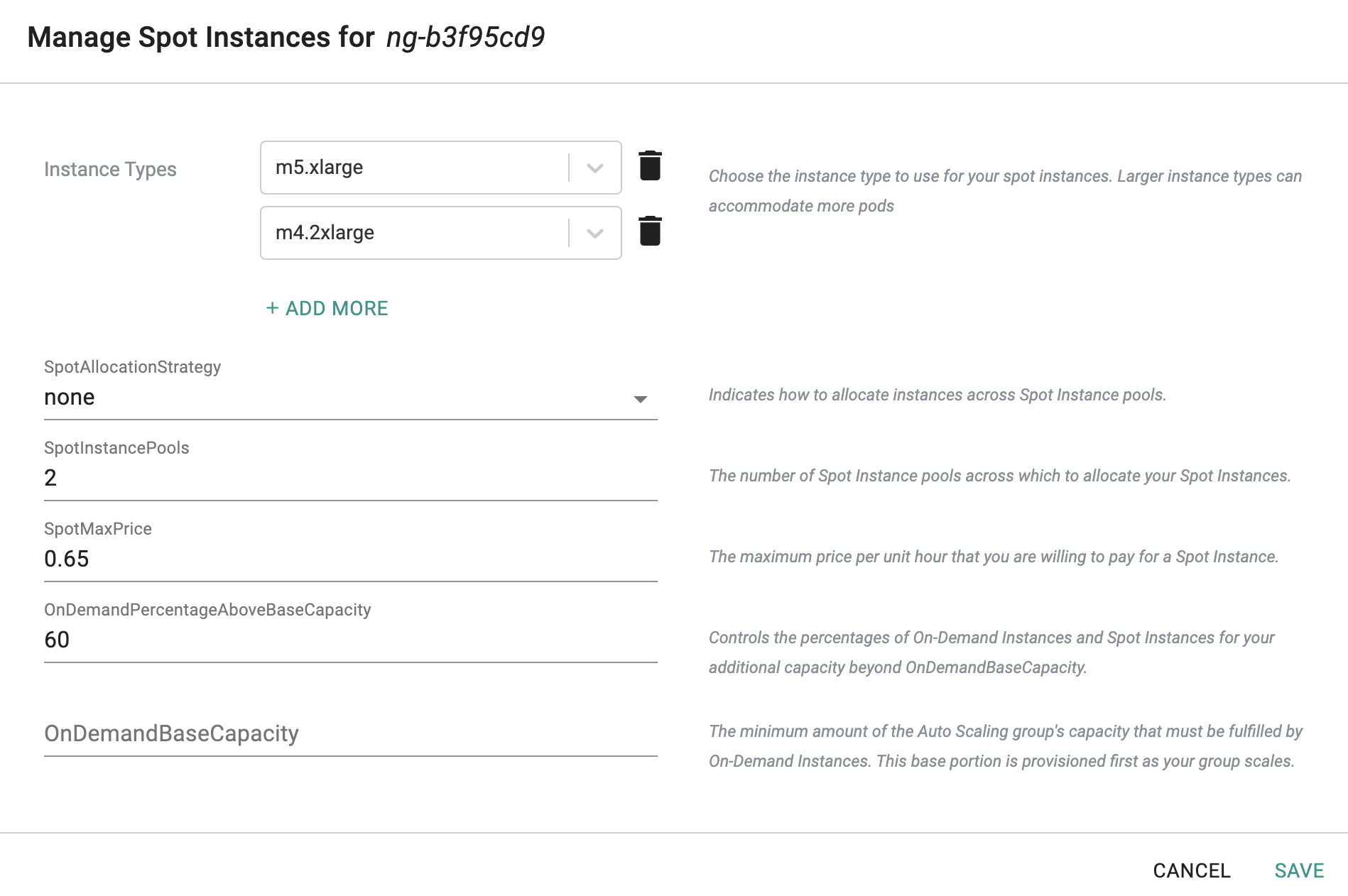

For existing Spot-enabled node groups, users can update the Spot policy at any time.

- Navigate to the Node Group for the EKS Cluster

- Click the gear icon next to the Node Group

- Select "Manage Spot Instances"

- Make the necessary changes in the provided menu and save

Spot Requests

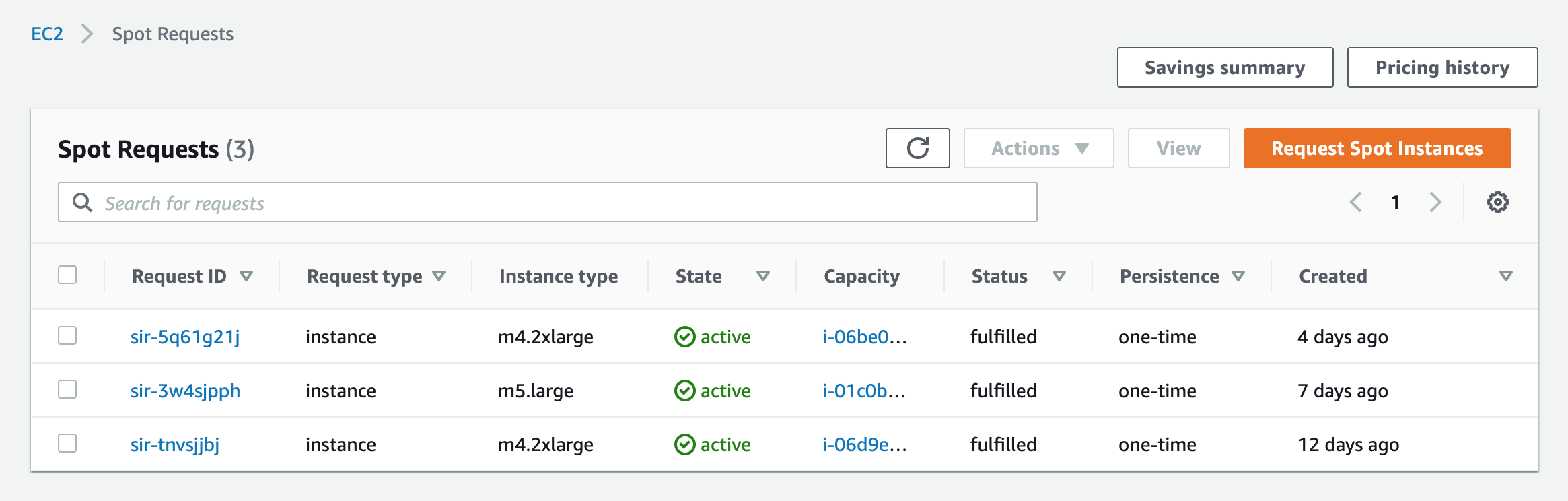

Users can view and monitor their Spot requests in the AWS Management Console.

- Log in to the AWS Management Console and navigate to the region where the Spot requests were made

- Open the EC2 service and select "Spot Requests"

An illustrative example of Spot requests and their status in this region is shown below.

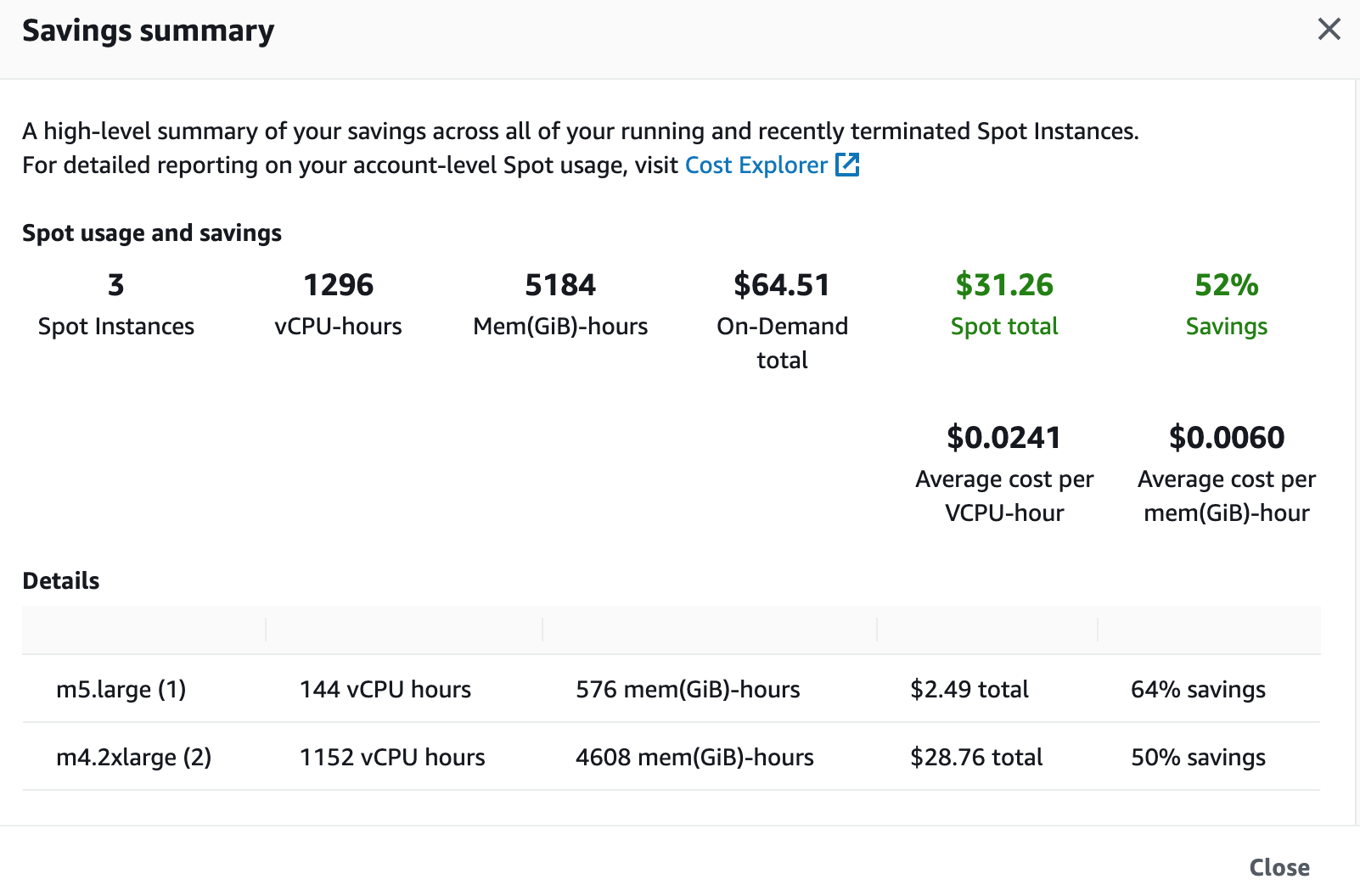

Spot Savings

- In the Spot Requests section, click on "Savings Summary"

An illustrative example of Spot savings is shown below. In this example, the user saved 64% compared to On-Demand instances by using Spot Instances for the EKS cluster worker nodes.
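A savings percentage like the one in the Savings Summary is the relative difference between what the capacity would have cost On-Demand and what it actually cost as Spot. A minimal sketch of that arithmetic; the function name is hypothetical and the costs are made up to reproduce a 64% saving.

```python
def savings_percent(on_demand_cost: float, spot_cost: float) -> float:
    """Percentage saved by paying spot_cost instead of on_demand_cost."""
    if on_demand_cost <= 0:
        raise ValueError("on_demand_cost must be positive")
    # Multiply before dividing to keep the result exact for round inputs.
    return (on_demand_cost - spot_cost) * 100 / on_demand_cost


# Hypothetical monthly costs in USD.
print(savings_percent(on_demand_cost=100.0, spot_cost=36.0))
```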

Examples

50% Spot

In this example, the node group is configured so that 50% of its capacity runs on On-Demand Instances and 50% on Spot Instances.

Instance Types: Select "m5.large" and "m5.xlarge" as the instance types.

Spot Allocation Strategy: Select "Capacity Optimized" for "spotAllocationStrategy".

This option launches instances from the Spot pools with the most available capacity, which lowers the probability of interruption.

On Demand: Set "onDemandBaseCapacity" to "0". Set "onDemandPercentageAboveBaseCapacity" to "50".

Node Groups: Set Desired Nodes to 2, Nodes Min to 0, and Nodes Max to 5.

This configures the Auto Scaling group for the node group to run 2 worker nodes (the desired count), scaling between 0 and 5. One of the two worker nodes (50% of capacity) will be Spot and the other will be On-Demand.

100% Spot

In this example, the node group is configured to use 100% Spot Instances.

Instance Types: Select "m5.large" and "m5.xlarge" as the instance types.

Spot Allocation Strategy: Select "Capacity Optimized" for "spotAllocationStrategy".

This option launches instances from the Spot pools with the most available capacity, which lowers the probability of interruption.

On Demand: Set "onDemandBaseCapacity" to "0". Set "onDemandPercentageAboveBaseCapacity" to "0".

Node Groups: Set Desired Nodes to 2, Nodes Min to 0, and Nodes Max to 5.

This configures the Auto Scaling group for the node group to run 2 worker nodes (the desired count), scaling between 0 and 5. Both worker nodes (100% of capacity) will be Spot.

Best Practices

Spot Allocation Strategy

The AWS Auto Scaling groups (ASGs) backing the worker node groups support mixed instance types and automatically replace instances that are terminated due to Spot interruptions.

To decrease the chance of interruption, use the capacity-optimized Spot allocation strategy. It uses real-time capacity data to launch Spot Instances into the pools with the most available capacity.

Interruptions

When EC2 needs the capacity back, it sends an interruption notice to the affected Spot Instances. Each instance then gets a two-minute warning, after which it is reclaimed.

Configure the EKS cluster so that it responds to this notice automatically, for example by draining pods and ELB connections from the affected node.
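For illustration, the notice can be observed from the instance itself: the EC2 instance metadata service exposes it at `/latest/meta-data/spot/instance-action`, which returns 404 until an interruption is scheduled and then returns a small JSON document with the action and time. The sketch below checks it once using IMDSv2. It is a simplified illustration of what a handler such as the AWS Node Termination Handler does in its instance-metadata mode, not a replacement for it; the function names are hypothetical, and `check_for_interruption` only works when run on an EC2 instance.

```python
import json
import urllib.error
import urllib.request
from datetime import datetime, timezone
from typing import Optional

IMDS = "http://169.254.169.254/latest"


def parse_instance_action(body: str, now: datetime) -> dict:
    """Parse the instance-action JSON and compute the seconds left before reclaim."""
    notice = json.loads(body)
    # Times are UTC, e.g. "2017-09-18T08:22:00Z".
    when = datetime.strptime(notice["time"], "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return {"action": notice["action"], "seconds_left": (when - now).total_seconds()}


def check_for_interruption(timeout: float = 2.0) -> Optional[dict]:
    """Return the pending Spot interruption notice, or None if none is scheduled."""
    # IMDSv2: obtain a short-lived session token first.
    token_req = urllib.request.Request(
        f"{IMDS}/api/token", method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": "60"})
    token = urllib.request.urlopen(token_req, timeout=timeout).read().decode()

    action_req = urllib.request.Request(
        f"{IMDS}/meta-data/spot/instance-action",
        headers={"X-aws-ec2-metadata-token": token})
    try:
        body = urllib.request.urlopen(action_req, timeout=timeout).read().decode()
    except urllib.error.HTTPError as err:
        if err.code == 404:  # no interruption scheduled
            return None
        raise
    return parse_instance_action(body, datetime.now(timezone.utc))
```

A real handler polls this endpoint every few seconds and starts its response as soon as a notice appears, since the whole window is only two minutes.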

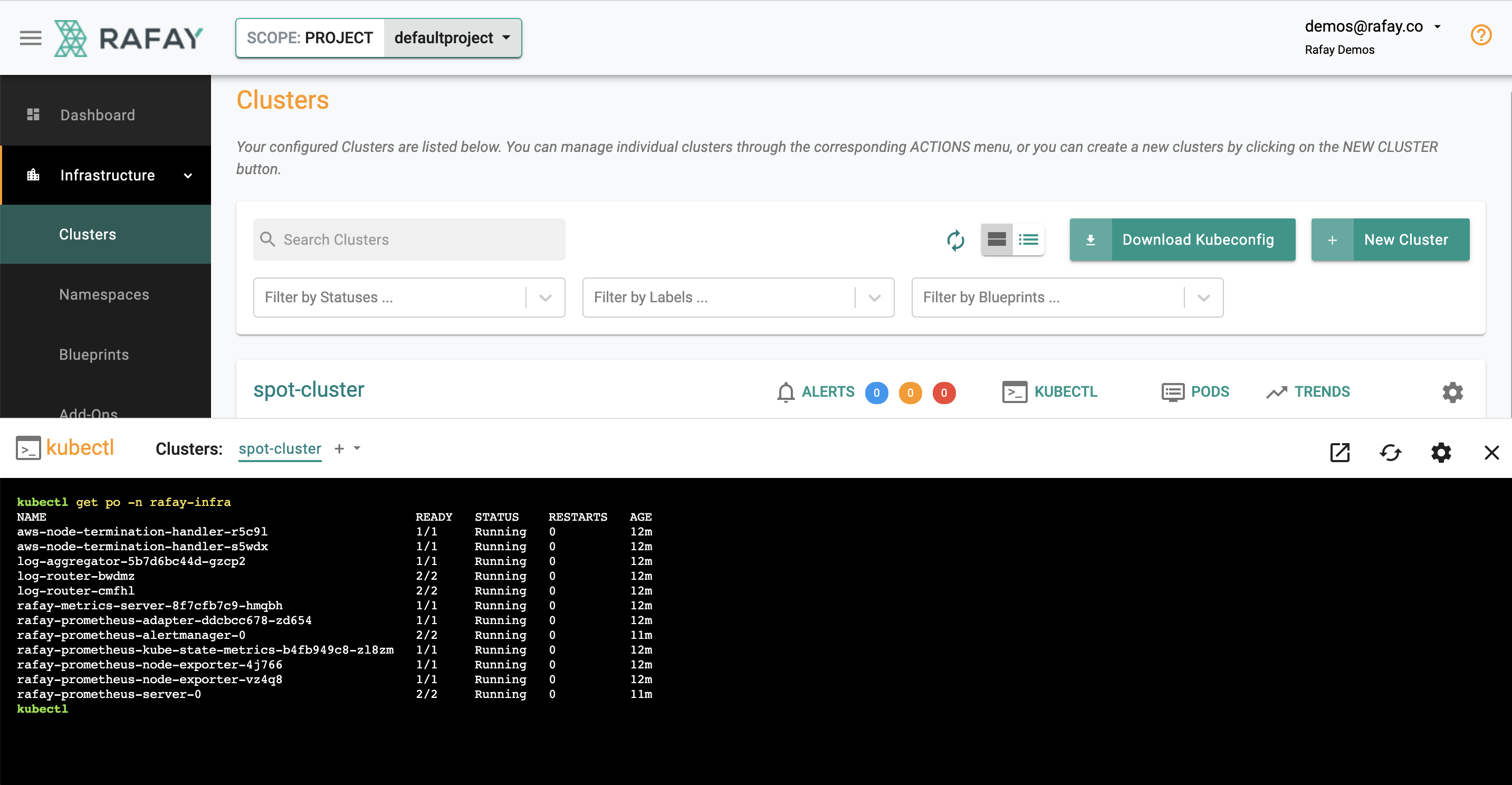

On Rafay provisioned EKS clusters, the default cluster blueprint automatically deploys the AWS Node Termination Handler.

The AWS Node Termination Handler ensures that the Kubernetes control plane responds appropriately to EC2 events such as maintenance events and Spot interruptions. The handler detects instances that are going down and uses the Kubernetes API to cordon the node so that no new pods are scheduled on it. The node is then drained to remove any existing pods.
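The cordon-then-drain response can also be reproduced by hand with `kubectl`. The sketch below only builds, and optionally runs, the two commands; it is not how the AWS Node Termination Handler is implemented. The function names, node name, grace period, and timeout are hypothetical choices, picked to fit inside the two-minute interruption window.

```python
import subprocess
from typing import List


def drain_commands(node: str, grace_period_s: int = 60) -> List[List[str]]:
    """Build the kubectl commands that cordon a node and then drain it."""
    return [
        # Mark the node unschedulable so no new pods land on it.
        ["kubectl", "cordon", node],
        # Evict existing pods. DaemonSet pods are skipped because their controller
        # ignores the unschedulable mark; emptyDir data on the node is discarded.
        ["kubectl", "drain", node,
         "--ignore-daemonsets",
         "--delete-emptydir-data",
         f"--grace-period={grace_period_s}",
         "--timeout=100s"],
    ]


def cordon_and_drain(node: str, dry_run: bool = True) -> None:
    """Print the commands (dry run) or execute them in order, stopping on failure."""
    for cmd in drain_commands(node):
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)


cordon_and_drain("ip-10-0-1-23.ec2.internal")  # hypothetical node name
```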

Without this, workload pods may not have enough time to stop gracefully, and the workload may suffer downtime before it fully recovers. The output below shows the DaemonSet for the AWS Node Termination Handler on a Rafay provisioned EKS cluster.

    kubectl get ds -A
    NAMESPACE      NAME                             DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
    kube-system    aws-node                         2         2         2       2            2           <none>                   13m
    kube-system    kube-proxy                       2         2         2       2            2           <none>                   13m
    rafay-infra    aws-node-termination-handler     2         2         2       2            2           <none>                   2m30s
    rafay-infra    log-router                       2         2         2       2            2           <none>                   2m48s
    rafay-infra    rafay-prometheus-node-exporter   2         2         2       2            2           <none>                   2m9s
    rafay-system   nginx-ingress-controller         2         2         2       2            2           kubernetes.io/os=linux   2m8s
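To confirm that the handler runs on every worker node, compare the DESIRED and READY columns for its DaemonSet. The helper below is a hypothetical sketch that does this against the plain-text output of `kubectl get ds -A`; it relies on the column order shown in that output, so parsing `kubectl get ds -A -o json` would be the more robust choice in practice.

```python
def daemonset_ready(output: str, name: str = "aws-node-termination-handler") -> bool:
    """Return True if the named DaemonSet reports READY == DESIRED (and DESIRED > 0)."""
    lines = [ln for ln in output.strip().splitlines() if ln.strip()]
    header = lines[0].split()
    # DESIRED and READY come before the two-word "NODE SELECTOR" header,
    # so their header positions match the data columns.
    desired_col, ready_col = header.index("DESIRED"), header.index("READY")
    for line in lines[1:]:
        cols = line.split()
        if len(cols) > ready_col and cols[1] == name:
            desired, ready = int(cols[desired_col]), int(cols[ready_col])
            return desired > 0 and desired == ready
    return False


sample = """
NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE
kube-system aws-node 2 2 2 2 2 <none> 13m
rafay-infra aws-node-termination-handler 2 2 2 2 2 <none> 2m30s
"""
print(daemonset_ready(sample))
```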

Instance Flexibility/Diversification

As a best practice for Spot, users should treat instance flexibility as a key tool. Being flexible across instance types and AZs lets users acquire instances from many different Spot capacity pools, which reduces the likelihood of interruption.

In short, tap into multiple Spot capacity pools across instance types and AZs to achieve your desired scale.

For example, if your EKS cluster worker nodes are deployed across two AZs and use only the m5.xlarge instance type, you are technically using only two (2 x 1) Spot capacity pools.

A more robust alternative is to use six AZs and multiple instance types (e.g., four). This gives you 24 (6 x 4) Spot capacity pools.
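Since a pool is one instance type in one AZ (for a fixed operating system and Region), the pool count is simply the number of instance types times the number of AZs. A minimal sketch that enumerates the pools; the function name, instance types, and AZ names are hypothetical examples.

```python
from itertools import product
from typing import List, Tuple


def spot_pools(instance_types: List[str], azs: List[str]) -> List[Tuple[str, str]]:
    """Enumerate the (instance type, AZ) Spot capacity pools a node group can draw from."""
    return list(product(instance_types, azs))


two_az_one_type = spot_pools(["m5.xlarge"], ["us-east-1a", "us-east-1b"])
six_az_four_types = spot_pools(
    ["m5.xlarge", "m5a.xlarge", "m5d.xlarge", "m4.xlarge"],
    ["us-east-1a", "us-east-1b", "us-east-1c", "us-east-1d", "us-east-1e", "us-east-1f"],
)
print(len(two_az_one_type), len(six_az_four_types))
```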