Shared VPC Network

A Shared Virtual Private Cloud (VPC) lets you connect resources from multiple projects in the same organization to a common, central VPC network. With Shared VPC, you designate one project as the host project and attach one or more service projects (target projects) to it. The VPC networks in the host project are called Shared VPC networks. During cluster provisioning, target clusters can use these Shared VPC networks.

Prerequisites

Before creating a GKE cluster that uses a Shared VPC network, make sure the following conditions are met in the GCP Console:

- Designate a HOST project and attach one or more SERVICE projects to it.

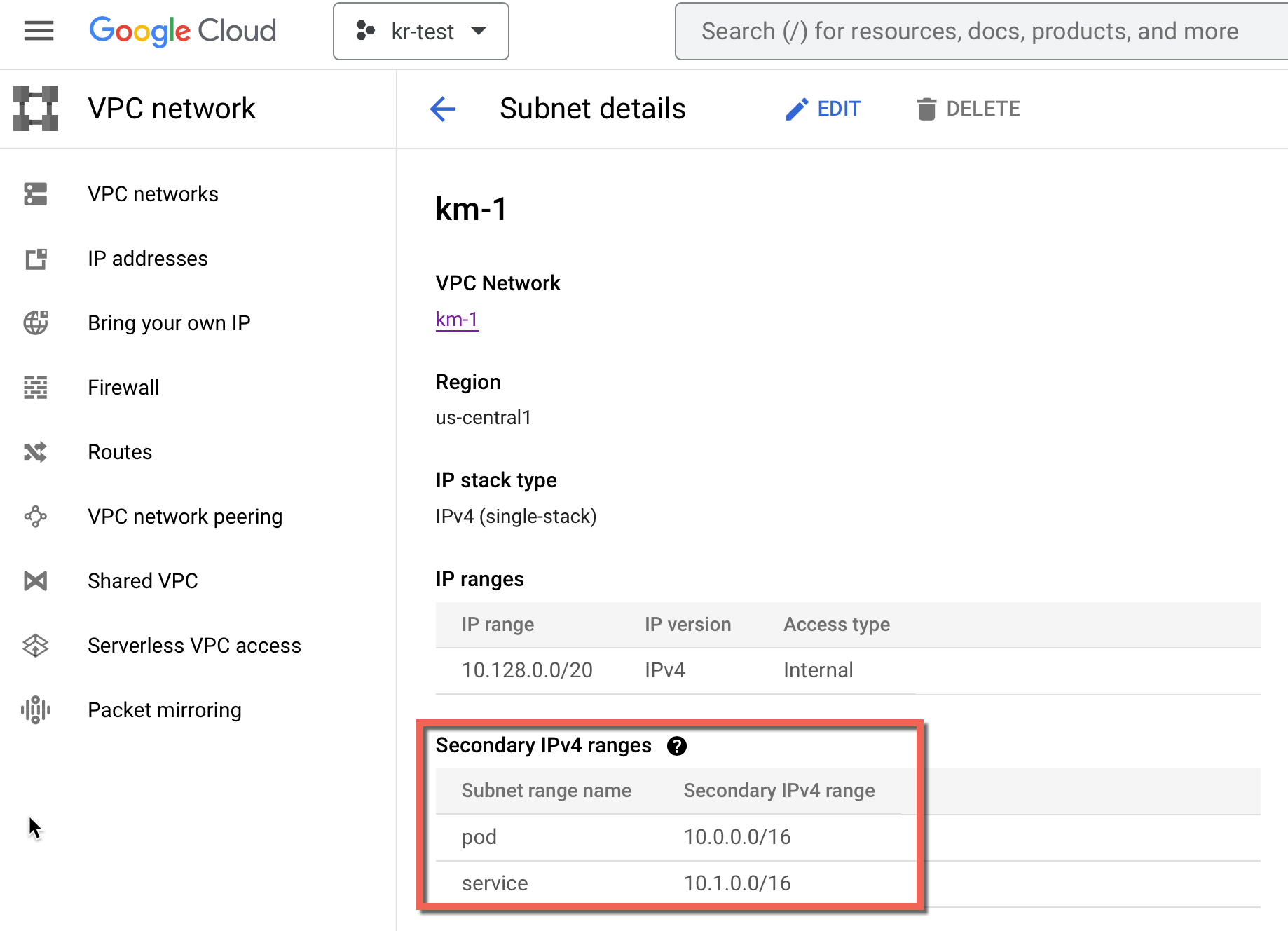
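If you prefer the command line to the console for this step, the following bash sketch uses the gcloud compute shared-vpc commands to enable a host project and attach service projects to it. The function name setup_shared_vpc is hypothetical, the project IDs are placeholders, and the caller is assumed to hold the Shared VPC Admin role (roles/compute.xpnAdmin), which is normally granted at the organization or folder level.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): enable a Shared VPC host project and
# attach service projects to it.
# Assumes the caller has the Shared VPC Admin role (roles/compute.xpnAdmin).

setup_shared_vpc() {
  local host_project="$1"
  shift  # remaining arguments are service project IDs

  # Mark the project as a Shared VPC host project.
  gcloud compute shared-vpc enable "$host_project" || return 1

  # Attach each service project to the host project.
  local service_project
  for service_project in "$@"; do
    gcloud compute shared-vpc associated-projects add "$service_project" \
      --host-project "$host_project" || return 1
  done
}

# Example with the host and target projects used later on this page:
# setup_shared_vpc kr-test-200723 demos
```

The function stops at the first failing gcloud call, so a permission error on the host project is reported before any service project is attached.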

- Create at least one network and a subnet (in the same region as the cluster) in the host project, along with two secondary address ranges. For example:

- In the host project, create a network named shared-net:

gcloud compute networks create shared-net --subnet-mode custom --project demo-12345

- Create a subnet named shared-subnet with two secondary ranges, one for Services and one for Pods:

gcloud compute networks subnets create shared-subnet --project demo-12345 --network shared-net --range 10.0.4.0/22 --region us-central1 --secondary-range services=10.0.32.0/20,pods=10.4.0.0/14
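A subnet's secondary ranges must not overlap its primary range or each other, so it can help to check the CIDRs before running the gcloud commands above. The bash sketch below does that with plain integer arithmetic (IPv4 only, no gcloud calls); the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): detect overlapping IPv4 CIDR ranges.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
# 10# forces base 10 so octets such as "08" are not read as octal.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Print the first and last address (as integers) of a CIDR block.
cidr_bounds() {
  local ip="${1%/*}" len="${1#*/}"
  local size=$(( 1 << (32 - len) ))
  local start=$(( $(ip_to_int "$ip") & ~(size - 1) ))
  echo "$start $(( start + size - 1 ))"
}

# Succeed (return 0) if the two CIDR blocks share any address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_bounds "$1")"
  read -r s2 e2 <<< "$(cidr_bounds "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

# Report every overlapping pair among the given ranges; fail if any overlap.
check_ranges() {
  local -a ranges=("$@")
  local i j status=0
  for (( i = 0; i < ${#ranges[@]}; i++ )); do
    for (( j = i + 1; j < ${#ranges[@]}; j++ )); do
      if cidrs_overlap "${ranges[i]}" "${ranges[j]}"; then
        echo "overlap: ${ranges[i]} and ${ranges[j]}" >&2
        status=1
      fi
    done
  done
  return "$status"
}
```

For the example subnet, `check_ranges 10.0.4.0/22 10.0.32.0/20 10.4.0.0/14` succeeds, because the primary range (10.0.4.0 to 10.0.7.255), the services range (10.0.32.0 to 10.0.47.255), and the pods range (10.4.0.0 to 10.7.255.255) are disjoint.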

- The cloud credentials used for the GKE cluster must have the following roles on the HOST project:

- roles/compute.networkUser

- roles/compute.securityAdmin

- To share the VPC network, the user must also have the compute.networks.get permission.
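One quick way to check the last requirement is to describe the shared network with the same credentials: the describe call needs compute.networks.get, so a permission error indicates it is missing. The bash sketch below wraps that check; the function name is hypothetical and the project and network are placeholders (shared-net is the example network above). Note that a failure can also mean the network does not exist.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): verify the active gcloud credentials can
# read the shared network. Fails on a missing compute.networks.get
# permission or if the network does not exist.

can_read_shared_network() {
  local host_project="$1" network="${2:-shared-net}"
  if gcloud compute networks describe "$network" \
      --project "$host_project" --format="value(name)" >/dev/null; then
    echo "OK: can read network ${network} in ${host_project}"
  else
    echo "FAILED: cannot read network ${network} in ${host_project}" >&2
    return 1
  fi
}

# Example: can_read_shared_network demo-12345 shared-net
```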

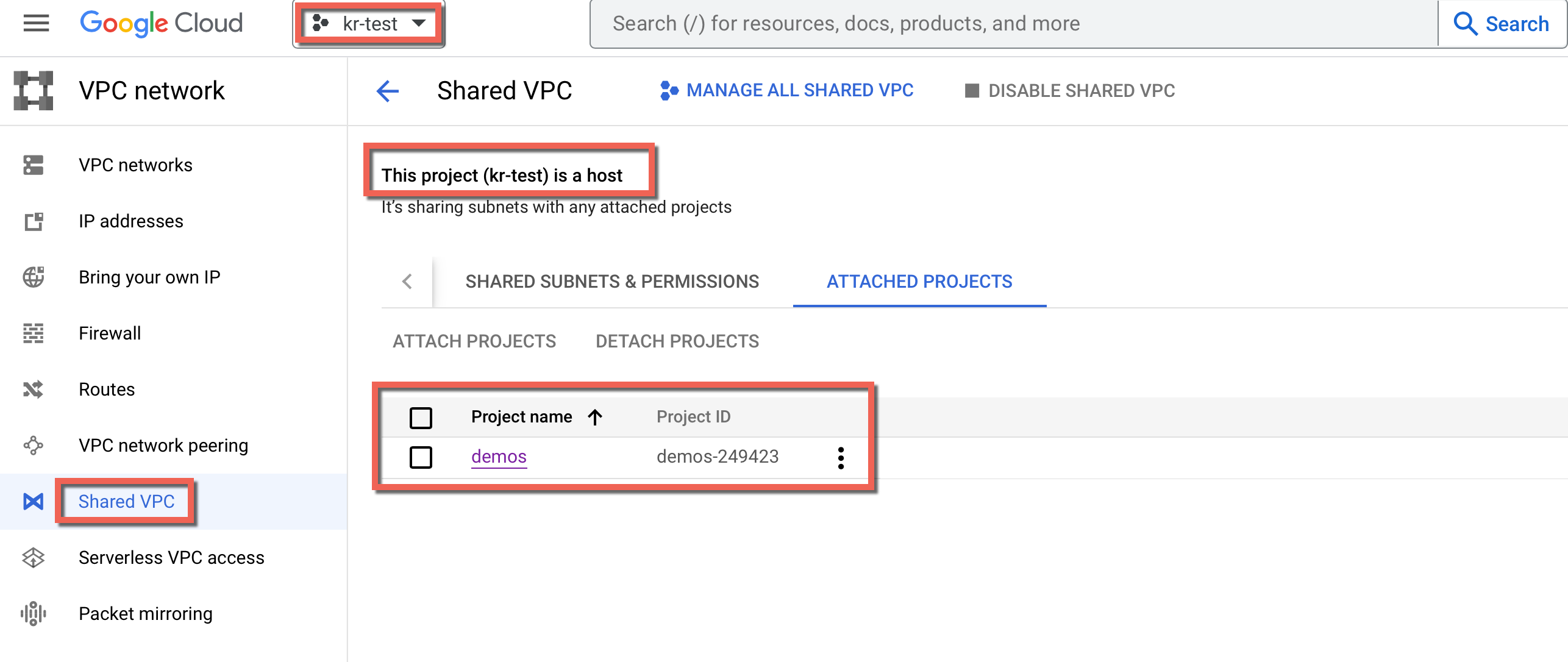

Below is an example of a host project, kr-test-200723, and its target project, demos.

Once the VPC network is shared, users can retrieve the Pod secondary CIDR range name and the Service secondary CIDR range name from the subnet details page, as shown below.
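The same range names can also be read from the command line. The bash sketch below describes the subnet and prints only its secondary ranges; the function name is hypothetical, and the defaults assume the example subnet shared-subnet in us-central1. For the example subnet, the output should list the services and pods ranges created earlier, but the exact layout depends on gcloud's YAML formatting.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): print the secondary ranges (rangeName and
# ipCidrRange) of a subnet in the host project.

show_secondary_ranges() {
  local host_project="$1"
  local subnet="${2:-shared-subnet}" region="${3:-us-central1}"
  gcloud compute networks subnets describe "$subnet" \
    --project "$host_project" \
    --region "$region" \
    --format="yaml(secondaryIpRanges)"
}

# Example: show_secondary_ranges demo-12345
```

From the service project side, `gcloud container subnets list-usable --project <service-project-id> --network-project <host-project-id>` lists the shared subnets, with their secondary ranges, that the service project is allowed to use.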

- Configuring Additional Roles for Service Accounts:

When setting up clusters with Shared VPC, make sure the service accounts associated with the clusters also have the Compute Network User and Compute Security Admin roles on the host project.

To add these roles to the service account, use the gcloud projects add-iam-policy-binding command with the appropriate project ID, service account, and role:

- Compute Network User

gcloud projects add-iam-policy-binding <host-project-id> --member=serviceAccount:<service-project-service-account-email> --role=roles/compute.networkUser

- Compute Security Admin

gcloud projects add-iam-policy-binding <host-project-id> --member=serviceAccount:<service-project-service-account-email> --role=roles/compute.securityAdmin

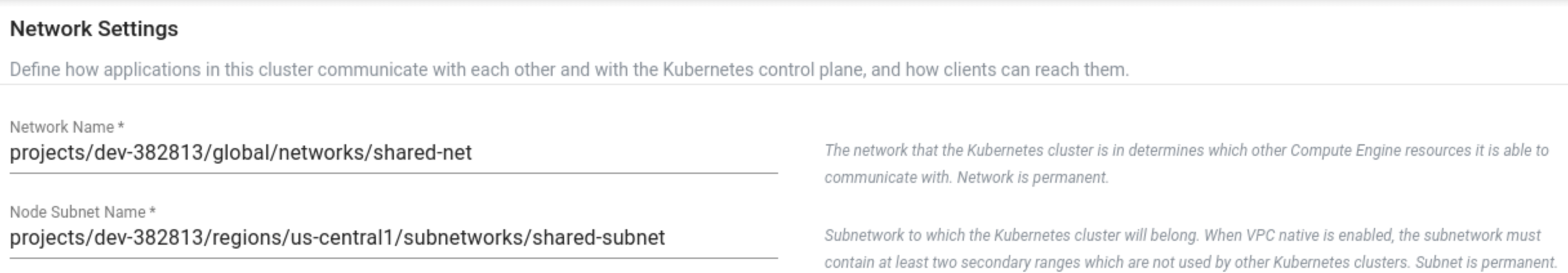
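After granting the roles, you can confirm the bindings from the command line. The bash sketch below lists the roles bound directly to a service account on the host project and checks for the two roles above; the function names are hypothetical. Roles inherited from a folder or the organization do not appear in the project-level policy, so this check can report a role as missing even when it is effectively granted.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): list roles bound to a service account
# directly on the host project and check for the Shared VPC roles.

list_host_roles() {
  local host_project="$1" sa_email="$2"
  gcloud projects get-iam-policy "$host_project" \
    --flatten="bindings[].members" \
    --filter="bindings.members:serviceAccount:${sa_email}" \
    --format="value(bindings.role)"
}

# Succeed only if both Compute Network User and Compute Security Admin
# are bound; print each missing role to stderr.
has_shared_vpc_roles() {
  local roles
  roles=$(list_host_roles "$1" "$2") || return 1
  local role missing=0
  for role in roles/compute.networkUser roles/compute.securityAdmin; do
    if ! grep -qxF "$role" <<< "$roles"; then
      echo "missing: $role" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example: has_shared_vpc_roles <host-project-id> <service-account-email>
```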

Network Settings in UI

To create a GKE cluster that uses a Shared VPC network from the console, enter the full resource path for the Network Name (for example, projects/dev-382813/global/networks/shared-net) and for the Node Subnet (for example, projects/dev-382813/regions/us-central1/subnetworks/shared-subnet), as shown in the example below.
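The two fields expect full resource paths rather than bare names. The bash sketch below shows how those paths are assembled from the project ID, network, region, and subnet; the function names are hypothetical. Because the network belongs to the host project, the project segment of both paths is the host project ID, not the service project in which the cluster is created.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helpers): build the resource paths entered in the
# Network Name and Node Subnet fields.

shared_network_path() {
  local host_project="$1" network="$2"
  echo "projects/${host_project}/global/networks/${network}"
}

shared_subnet_path() {
  local host_project="$1" region="$2" subnet="$3"
  echo "projects/${host_project}/regions/${region}/subnetworks/${subnet}"
}

# Example with the values from the text above:
# shared_network_path dev-382813 shared-net
# shared_subnet_path dev-382813 us-central1 shared-subnet
```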