Bidirectional Synchronization

System Sync enables two-way (bidirectional) synchronization that keeps the configuration in the system (system database) and the Git repository in sync. Configuration changes made in the Git repository are reflected in the system, and vice versa. Bidirectional sync requires both external and internal triggers. External triggers (Pipeline Triggers) notify whenever resources are modified in a Git repository, and internal triggers notify whenever resources are modified in an internal artifact store.

Cluster Resource System Sync flow

The following sequence diagrams illustrate the actions in the System Sync flow for a cluster resource.

Cluster Provision Click Ops flow

sequenceDiagram

autonumber

participant Admin

participant Rafay

participant AWS/Azure

participant Customer Git Repo

rect rgb(191, 223, 255)

note right of Admin: Day-1:Cluster Provisioning

Admin->>Rafay: Configure Cluster via Web Console

Rafay->>AWS/Azure: Provision Cluster

Rafay->>Customer Git Repo: “Auto Generate” Cluster Spec

Rafay->>Admin: Cluster Provisioned

end

Update Cluster GitOps flow

sequenceDiagram

autonumber

participant Admin

participant Rafay

participant AWS/Azure

participant Customer Git Repo

rect rgb(191, 223, 255)

note right of Admin: Day2: Scale and upgrade via gitops

Admin->>Customer Git Repo: Git PR to update cluster spec

Customer Git Repo->> Rafay: Webhook notification

Rafay->>AWS/Azure: Bring cluster to desired state

Rafay->>Admin: Cluster Updated

end

Update Cluster Click Ops flow

sequenceDiagram

autonumber

participant Admin

participant Rafay

participant AWS/Azure

participant Customer Git Repo

rect rgb(191, 223, 255)

note right of Admin: Day2: Scale and upgrade via ClickOps

Admin->>Rafay:Update Cluster via WebConsole

Rafay->> AWS/Azure: Update Cluster

Rafay->>Customer Git Repo: Auto Update Cluster spec

Rafay->>Admin: Cluster Updated

end

Pre-requisites

The System Sync stage requires the following setup to complete the sync successfully.

GitHub Repository

- Create a valid Git repository

- Add the created repository on the Repositories page with an appropriate name

GitOps Agents

- Create GitOps Agents and associate them with the K8s cluster for secure connectivity to the repositories

- The Git repository used for System Sync must be associated with the GitOps agent. This allows access to repositories that are available only in a private network

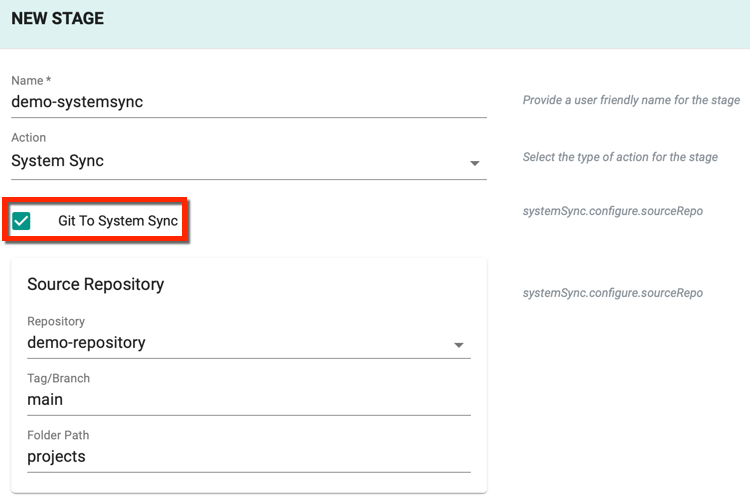

System Sync Operation

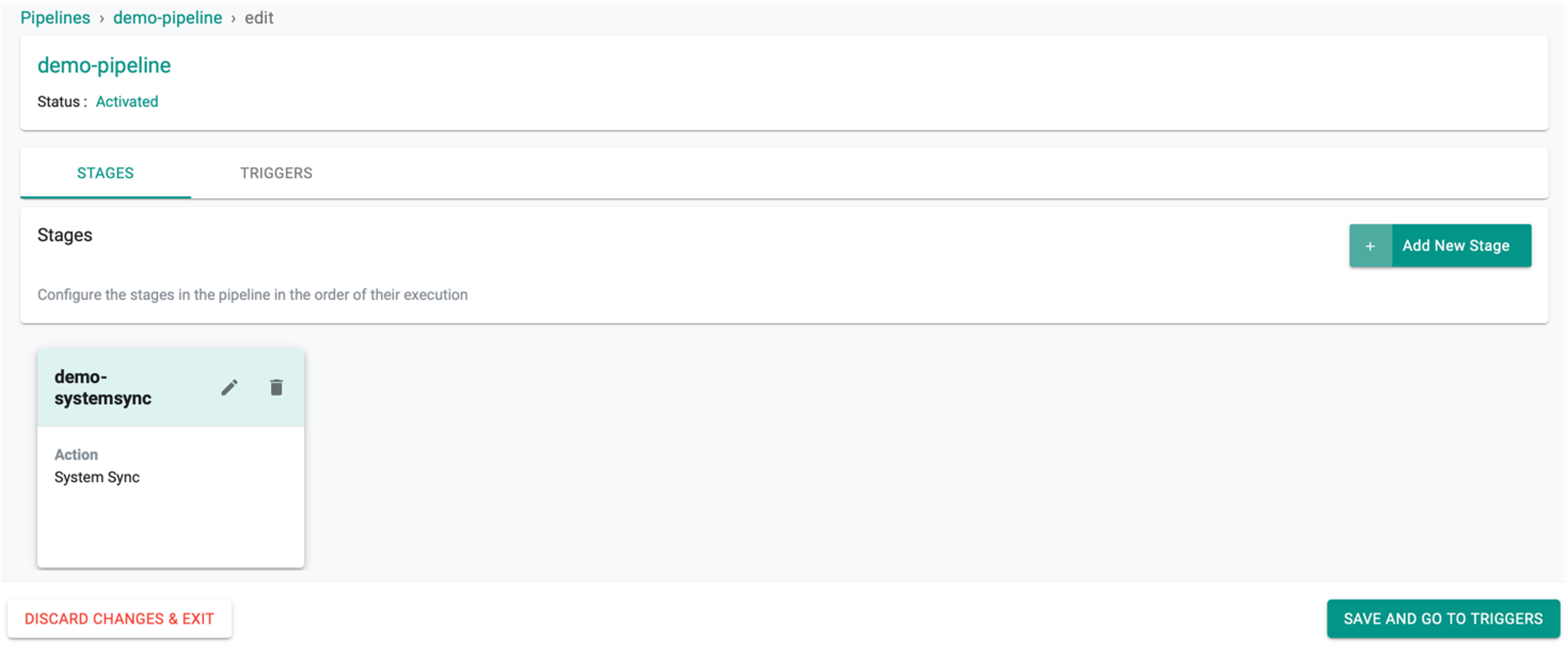

This stage is responsible for syncing resource configuration between the Git repository and the system

- Provide a friendly name for the stage

- Select System Sync

Git To System Sync

- To sync the resource specification from the Git repository to the system, select the Git To System Sync checkbox

- Select the Repository from which the resource details are to be cloned

- Provide the Tag/Branch of the repository

- Provide the appropriate Folder path of the repository

When a commit to the Git repository triggers a Git-to-system sync, the entire repository is scanned and the pipeline shows the paths of the resources that were modified or updated. During the initial Git-to-system sync, the system is updated with all lineage information. For subsequent modifications to resources in the repository, only the specific resource paths are displayed in the pipeline.
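The reporting behaviour described above can be sketched roughly as follows. This is only an illustration of the stated rule, not the product's implementation; the function name `paths_shown_in_pipeline` and its inputs are hypothetical.

```python
def paths_shown_in_pipeline(repo_paths, changed_paths, is_initial_sync):
    """Return the resource paths a pipeline run would display (hypothetical helper)."""
    if is_initial_sync:
        # Initial sync: every resource path in the repository is recorded.
        return sorted(repo_paths)
    # Later syncs: only the paths touched by the triggering commit.
    return sorted(set(changed_paths))


# Example paths are made up for illustration.
repo = ["projects/demo/clusters/c1.yaml", "projects/demo/addons/a1.yaml"]
print(paths_shown_in_pipeline(repo, [], is_initial_sync=True))
print(paths_shown_in_pipeline(repo, ["projects/demo/clusters/c1.yaml"], is_initial_sync=False))
```

The first call lists both files, while the second lists only the cluster spec that changed.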

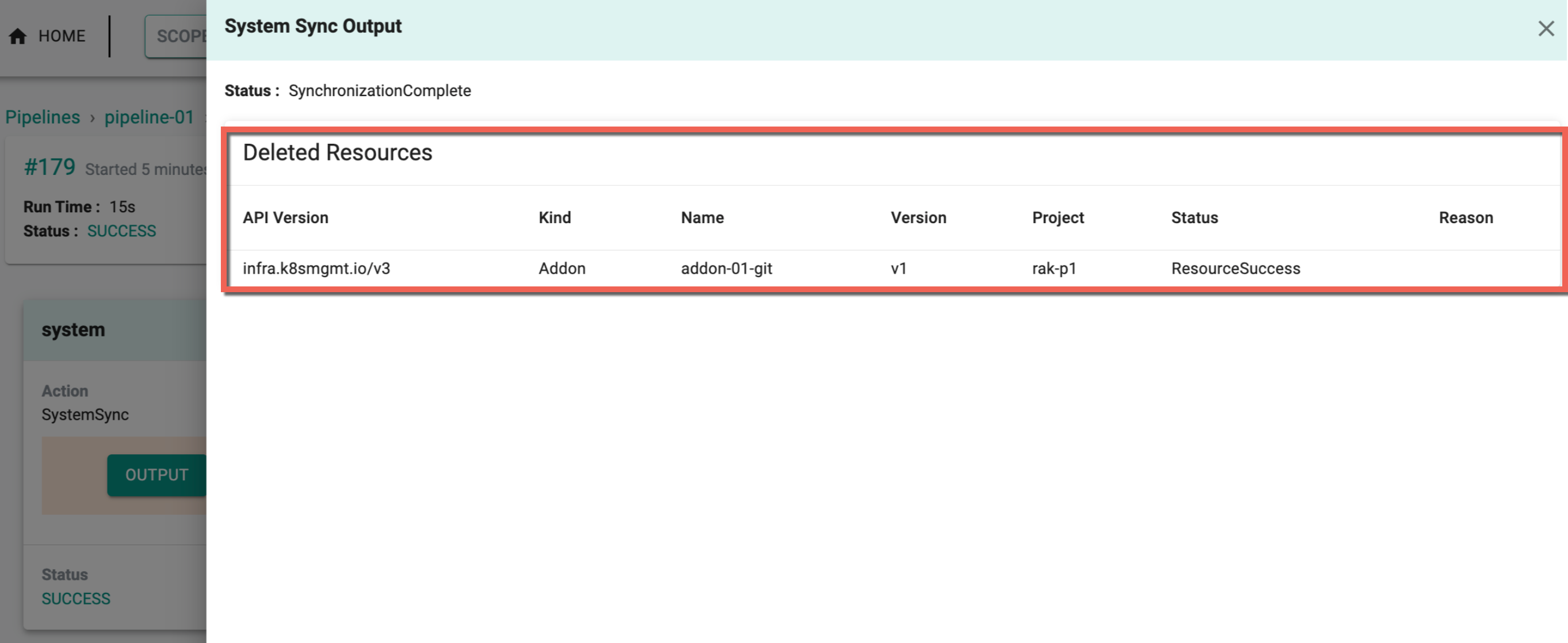

A resource sourced from Git can only be deleted if it existed in the repository during any synchronization from Git to the system, and these changes will be visible in the pipeline as shown below

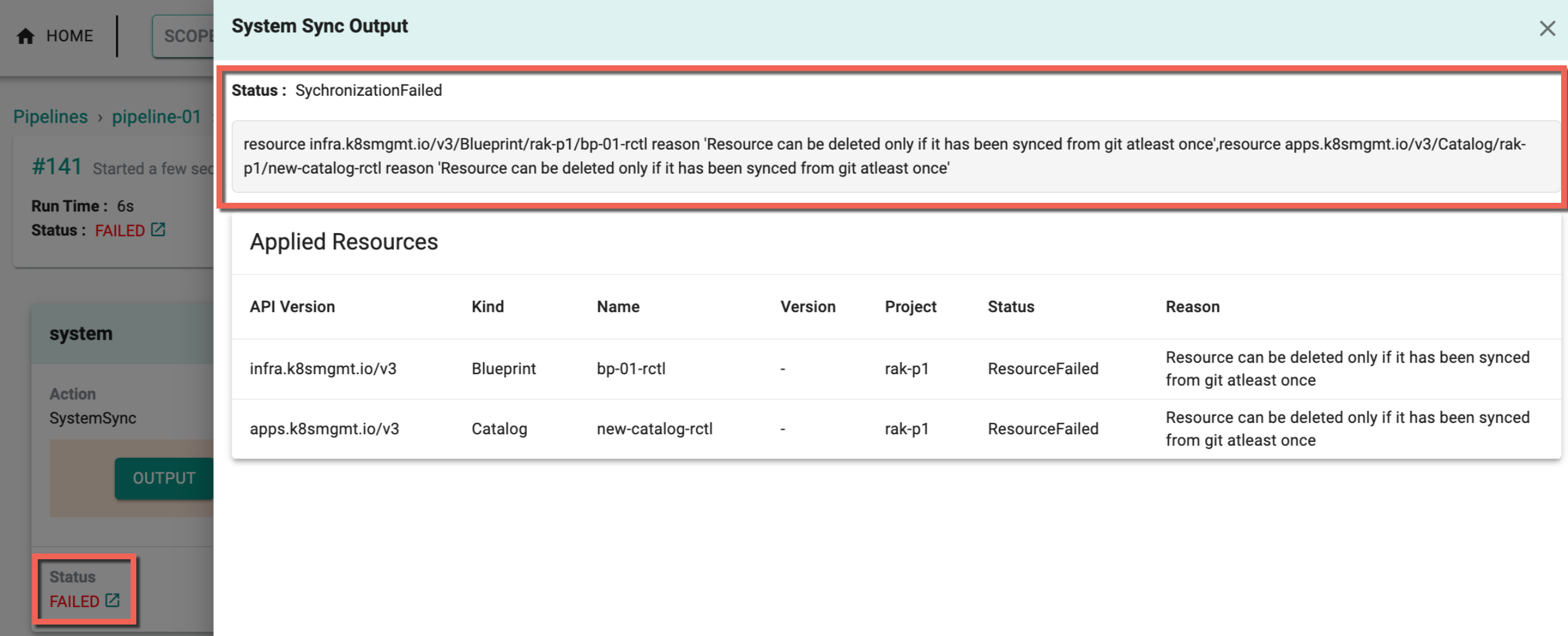

If attempting to delete a resource not created or updated in Git, the following error message will appear

Important

To prevent accidental deletions during synchronization, Termination Protection can be configured. This feature safeguards critical resources and ensures that deletion operations are performed with caution. For more details on how to configure and utilize Termination Protection, refer to the Termination Protection Settings.

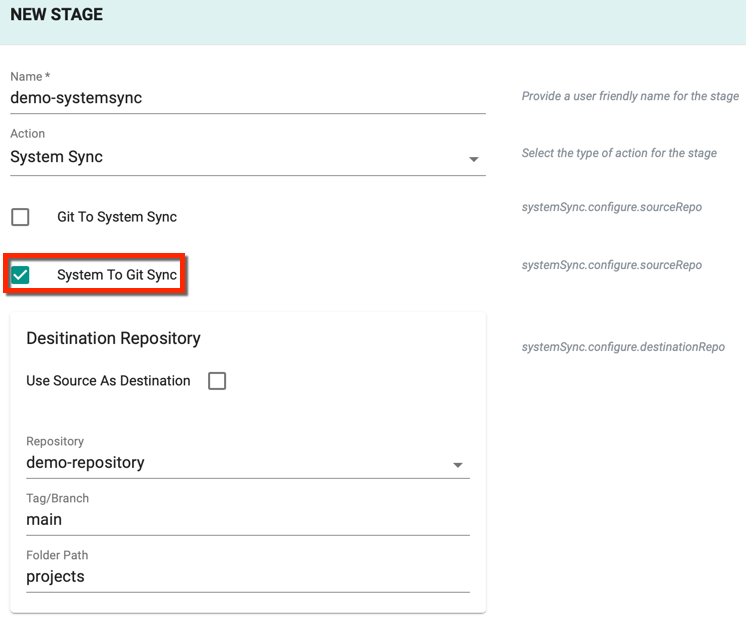
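The deletion rule above can be expressed as a small guard. The sketch below is an assumption-laden illustration: `DeletionNotAllowed`, `delete_from_git_sync`, and the set-based bookkeeping are hypothetical names, not part of the product.

```python
class DeletionNotAllowed(Exception):
    """Raised when a resource was never synced from Git (hypothetical error type)."""


def delete_from_git_sync(resource_path, paths_seen_in_git_syncs, system_resources):
    """Delete a resource only if an earlier Git-to-system sync saw it in the repository."""
    if resource_path not in paths_seen_in_git_syncs:
        raise DeletionNotAllowed(f"{resource_path} was not created or updated in Git")
    # discard() removes the entry if present and is a no-op otherwise.
    system_resources.discard(resource_path)
    return system_resources


seen = {"addons/a1.yaml"}
remaining = delete_from_git_sync("addons/a1.yaml", seen, {"addons/a1.yaml", "clusters/c1.yaml"})
print(remaining)
```

Termination Protection, as described in the note above, would be an additional check layered on top of this guard.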

System to Git Sync

- To sync the resource specification (updated through the Controller) from the system to the Git repository, select the System To Git Sync checkbox

- Select the Repository to which the resource details must be synced

- Provide the Tag/Branch of the repository

- Provide the appropriate Folder path of the repository

- Select the Use Source As Destination checkbox to use the source as the destination, or uncheck this option to provide a different (system or repo) destination

Important

When enabling two-way sync for a cluster, admins should wait for a period of time so that the cluster configuration is synchronized on both the system and Git before making any changes to the cluster via the Controller.

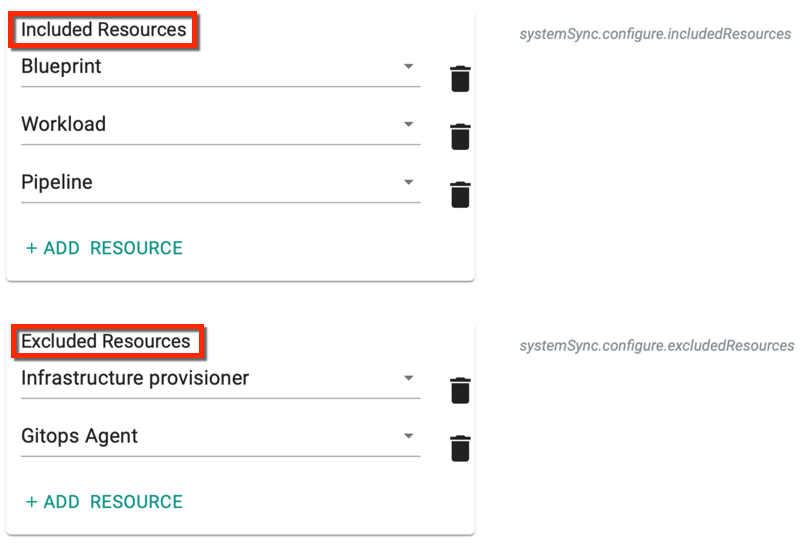

Include / Exclude Resources

Include Resources lets users add all resources or only the required resources at any time. Click Add Resource to include one or more resources. This operation syncs only the selected resource(s) between the Git repository and the system. The supported resources are listed below

- Add-On

- Blueprint

- Catalog

- Cluster

- Cluster Network Policy

- Cluster Network Policy Rule

- Cluster Overrides

- Config Context

- Cost Profile

- Driver

- Environment

- Environment Template

- Fleet Plan

- GitOps Agent

- Infrastructure Provisioner

- Namespace

- Namespace Network Policy

- Namespace Network Policy Rule

- Network Policy Installation Profile

- OPA Constraint

- OPA Constraint Template

- OPA Installation Profile

- OPA Policy

- Pipeline

- Repository

- Resource Template

- Secret Provider Class

- Secret Sealer

- Secret Store

- Static Resources

- Workload

- Workload Template

Important

- Add-On from Catalog and Workload from Catalog system sync can be performed using the respective YAML spec available in the Git repo. The parameter catalog: default-bitnami in the workload and add-on YAML spec represents the catalog functionality

By default, All Resources is selected in the drop-down

Exclude Resources lets users remove resource(s) at any time. Click Add Resource to exclude one or more resources

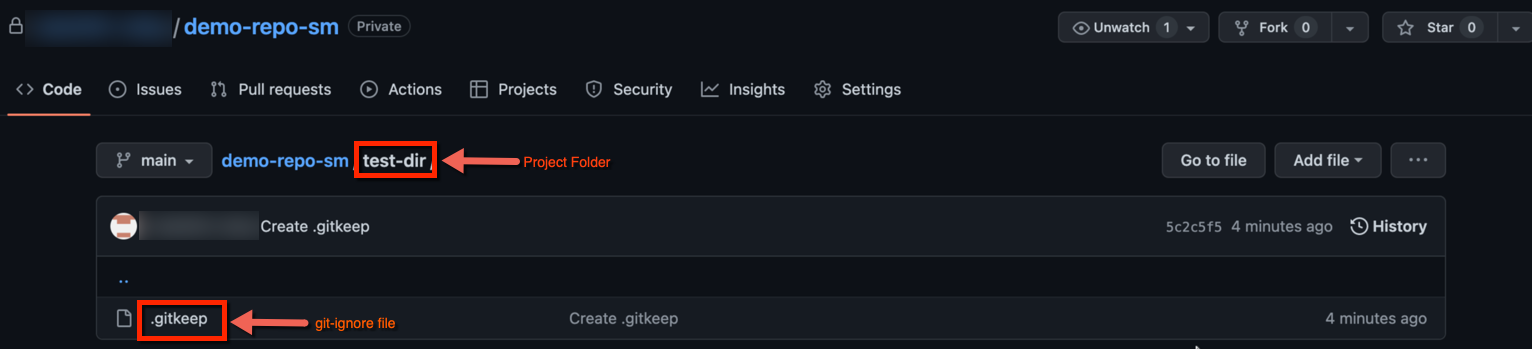

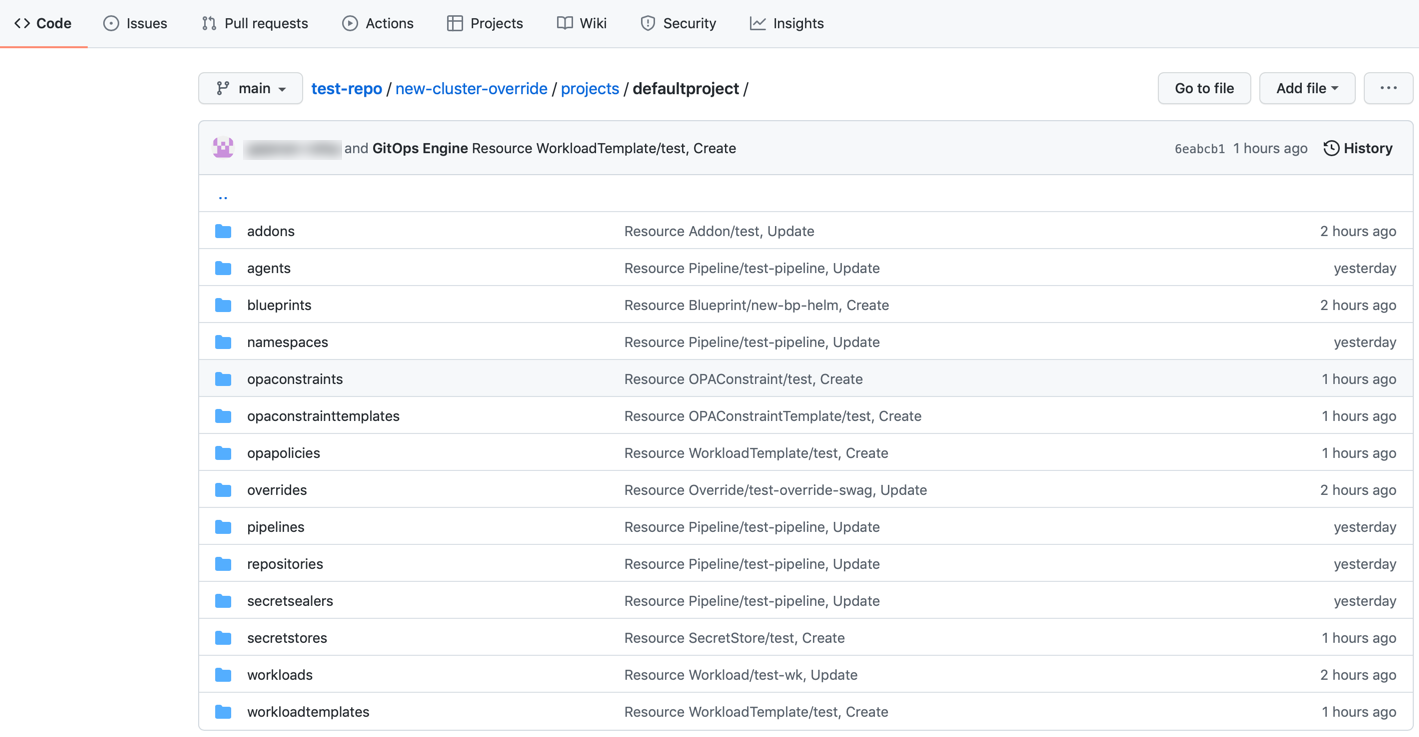
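The include and exclude lists can be thought of as a filter over the supported resource types. The following is a minimal sketch, assuming that exclusions take precedence over inclusions (the text does not state this); `select_resources` is a hypothetical helper.

```python
def select_resources(supported, include=None, exclude=()):
    """Return the resource types to sync.

    include=None models the default "All Resources" selection.
    Assumption: an excluded type is dropped even if it is also included.
    """
    excluded = set(exclude)
    if include is None:
        candidates = list(supported)
    else:
        wanted = set(include)
        # Keep the order of the supported list for stable output.
        candidates = [r for r in supported if r in wanted]
    return [r for r in candidates if r not in excluded]


SUPPORTED = ["Add-On", "Blueprint", "Cluster", "Namespace", "Workload"]
print(select_resources(SUPPORTED, include=["Cluster", "Add-On"]))
print(select_resources(SUPPORTED, exclude=["Blueprint"]))
```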

During System to Git Sync, a new project folder containing a placeholder file named .gitkeep is created in the selected Git repo when a pipeline is triggered, regardless of whether any resource configuration files are available to sync. Users can add or remove resources at any time. A project folder cannot be empty in the Git repo, so deleting the .gitkeep file from a project folder that has no other resource configuration files deletes the project folder itself. If the .gitkeep file is deleted, it is recreated on the next pipeline run.

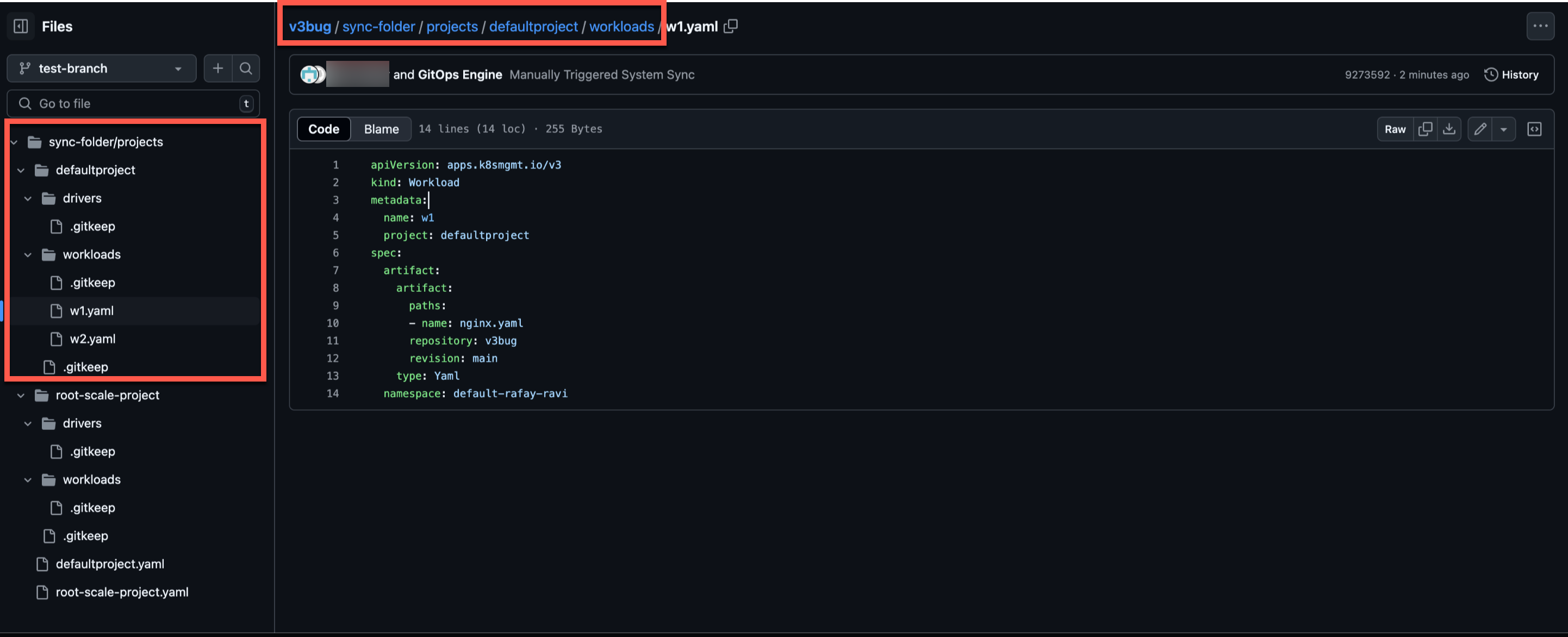
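The .gitkeep behaviour can be mimicked locally with a few lines of Python. This is a sketch under assumptions: `ensure_project_folder` is a hypothetical helper, and the `projects/<project>` layout is taken from the directory structure described elsewhere on this page.

```python
import tempfile
from pathlib import Path


def ensure_project_folder(sync_root: Path, project: str) -> Path:
    """Create the project folder and its .gitkeep placeholder if missing."""
    project_dir = sync_root / "projects" / project
    project_dir.mkdir(parents=True, exist_ok=True)
    keep_file = project_dir / ".gitkeep"
    # touch() is idempotent here, so every "pipeline run" restores the placeholder.
    keep_file.touch(exist_ok=True)
    return keep_file


with tempfile.TemporaryDirectory() as tmp:
    keep = ensure_project_folder(Path(tmp), "defaultproject")
    keep.unlink()  # simulate a user deleting the placeholder
    keep = ensure_project_folder(Path(tmp), "defaultproject")  # next run recreates it
    print(keep.exists())
```

Git does not track empty directories, which is why a placeholder file is needed to keep an otherwise empty project folder in the repository.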

Similar to the project folder creation, during System to Git Sync, resource folders are now created based on the resources selected in the inclusion list, even if no resource configuration files are available to sync. When a pipeline is triggered, a folder corresponding to each selected resource will be created in the Git repository. Below is an example of the folder structure in the Git repository.

Pre-Conditions (Optional)

Stages can be configured to execute ONLY if the expression matches the specified pre-conditions

Stage Variables (Optional)

All variables available for a given stage are fetched as a list sorted by scope (Organization -> Project -> Pipeline -> Trigger -> Stage). These variables are evaluated against the environment, and the environment is then updated with each variable according to its scope

Click Save to add the system sync stage to the pipeline or Cancel to abort the process. Click Save and Go To Triggers to complete the sync process and trigger the changes

Refer to Triggers for more information
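One way to picture the scope ordering is as a sequence of overrides. The sketch below assumes that a narrower scope overrides a broader one when the same variable name appears twice; the text implies this by its ordering but does not state it outright. `build_environment` and the tuple format are hypothetical.

```python
SCOPE_ORDER = ["Organization", "Project", "Pipeline", "Trigger", "Stage"]


def build_environment(variables):
    """Fold (scope, name, value) tuples into an environment dict.

    Variables are applied from the broadest scope to the narrowest, so a
    later (narrower) scope replaces an earlier value of the same name.
    """
    env = {}
    ordered = sorted(variables, key=lambda v: SCOPE_ORDER.index(v[0]))
    for _scope, name, value in ordered:
        env[name] = value
    return env


variables = [
    ("Stage", "region", "us-west-2"),
    ("Organization", "region", "eu-central-1"),
    ("Project", "team", "platform"),
]
print(build_environment(variables))
```

In this example the Stage-level `region` wins over the Organization-level one.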

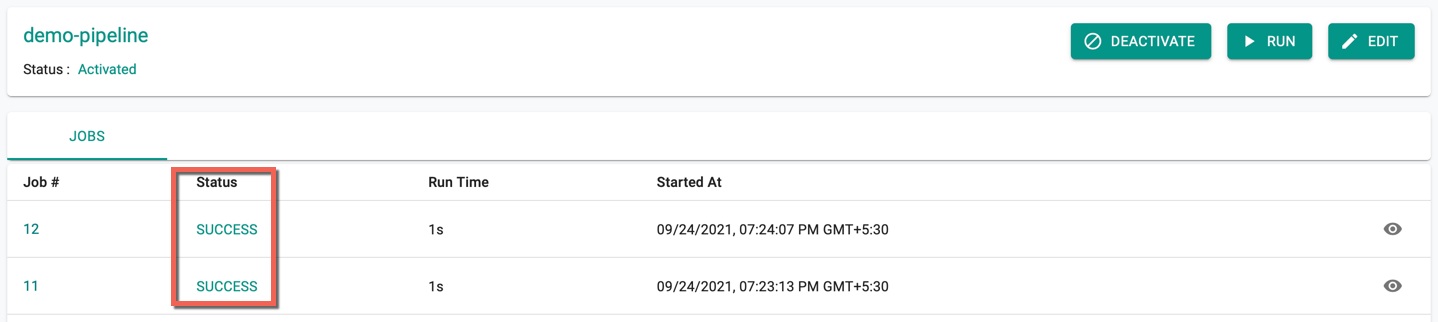

Successful System Sync

The System Sync job begins automatically in the pipeline. The initial status is In Progress, which later changes to Success. Use the Run button to trigger the pipeline for changes performed manually in the system

Users can view the recently triggered changes on the Triggers page. This also creates a pipeline in the Git repo with all the selected resources in the specified project. Below is an example of defaultproject and the list of included resources

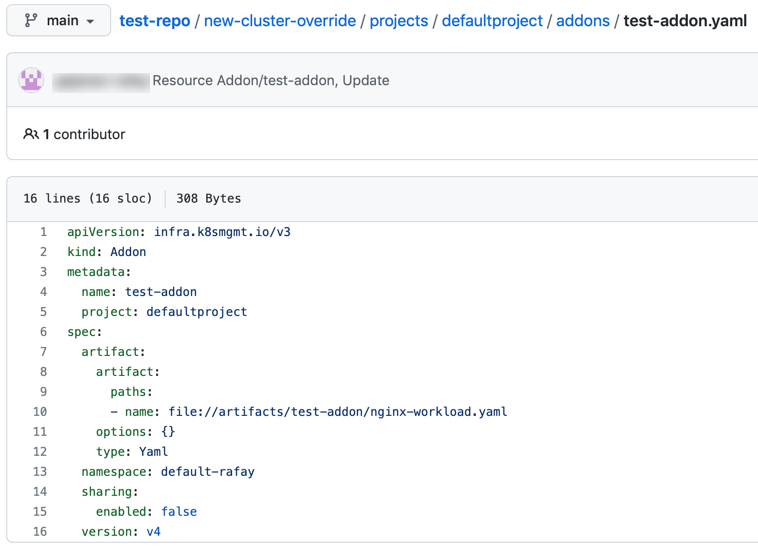

Below is an example of the add-ons resource in Git Repository where the users can view or update the resource specs based on the selected direction during system sync stage creation

Git Directory Structure for resource(s) sync: Repo_name/folder_name (provided by the customer)/projects/project_name/resource_name (example: cluster, addons, blueprint)
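The directory convention above can be composed programmatically. This is a small sketch; `resource_dir` and the sample names are hypothetical, and only the `projects` path segment comes from the structure shown above.

```python
from pathlib import PurePosixPath


def resource_dir(repo_name, folder_name, project_name, resource_name):
    """Build Repo_name/folder_name/projects/project_name/resource_name."""
    return PurePosixPath(repo_name, folder_name, "projects", project_name, resource_name)


# Sample values are illustrative only.
print(resource_dir("my-repo", "sync", "defaultproject", "addons"))
```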

Important

When a resource contains a secret value/key, the system sync fails for that specific resource but continues with the other resources. Currently, Infrastructure Provisioner is the only resource that can contain secure text. Refer to Secret Sealer for how to configure secrets for Infrastructure Provisioners.

Refer to Infrastructure Provisioners for more details on Infrastructure Provisioner configurations
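The "fail one resource, continue with the rest" behaviour described in the note above follows a common pattern, sketched below. All names here (`SecretValueError`, `sync_resources`, `reject_secrets`, and the `secret` key) are hypothetical and chosen only for illustration.

```python
class SecretValueError(Exception):
    """Hypothetical error for a resource spec that carries a secret value."""


def sync_resources(resources, sync_one):
    """Sync each resource independently; collect failures instead of aborting."""
    synced, failed = [], {}
    for name, spec in resources.items():
        try:
            sync_one(name, spec)
        except SecretValueError as exc:
            failed[name] = str(exc)  # record the failure and move on
        else:
            synced.append(name)
    return synced, failed


def reject_secrets(name, spec):
    # Stand-in for the real sync step: refuse any spec with a "secret" key.
    if "secret" in spec:
        raise SecretValueError(f"{name} contains a secret value")


resources = {"cluster-a": {"type": "aks"}, "provisioner-b": {"secret": "x"}, "addon-c": {}}
print(sync_resources(resources, reject_secrets))
```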

Cluster Resource

System sync for the Cluster resource is applicable to AKS, EKS, and GKE clusters.

Examples

Below is an example of the AKS cluster resource YAML for the cluster system sync process

apiVersion: infra.k8smgmt.io/v3
kind: Cluster
metadata:
  name: testazure
  project: default
spec:
  blueprintConfig:
    name: minimal
  cloudCredentials: azure
  config:
    kind: aksClusterConfig
    metadata:
      name: testazure
    spec:
      managedCluster:
        apiVersion: "2022-07-01"
        identity:
          type: SystemAssigned
        location: centralindia
        properties:
          apiServerAccessProfile:
            enablePrivateCluster: true
          dnsPrefix: testazure-dns
          kubernetesVersion: 1.24.9
          networkProfile:
            dnsServiceIP: 10.0.0.10
            dockerBridgeCidr: 172.17.0.1/16
            loadBalancerSku: standard
            networkPlugin: azure
            serviceCidr: 10.0.0.0/16
        sku:
          name: Basic
          tier: Free
        tags:
          email: demo_abc@rafay.co
          env: qa
        type: Microsoft.ContainerService/managedClusters
      nodePools:
      - apiVersion: "2022-07-01"
        location: centralindia
        name: primary
        properties:
          count: 1
          enableAutoScaling: true
          maxCount: 1
          maxPods: 110
          minCount: 1
          mode: System
          orchestratorVersion: 1.24.9
          osType: Linux
          type: VirtualMachineScaleSets
          vmSize: Standard_B2ms
        type: Microsoft.ContainerService/managedClusters/agentPools
      - apiVersion: "2022-07-01"
        location: centralindia
        name: np2
        properties:
          count: 1
          enableAutoScaling: true
          maxCount: 1
          maxPods: 110
          minCount: 1
          mode: User
          orchestratorVersion: 1.24.9
          osType: Linux
          type: VirtualMachineScaleSets
          vmSize: Standard_B2ms
        type: Microsoft.ContainerService/managedClusters/agentPools
      resourceGroupName: demo-rg
  proxyConfig: {}
  type: aks

Below is an example of the EKS cluster resource YAML for the cluster system sync process

apiVersion: infra.k8smgmt.io/v3
kind: Cluster
metadata:
  name: demo-cluster
  project: default
spec:
  blueprintConfig:
    name: demo-bp
    version: v1
  cloudCredentials: demo_aws
  config:
    managedNodeGroups:
    - amiFamily: AmazonLinux2
      desiredCapacity: 1
      iam:
        withAddonPolicies:
          autoScaler: true
      instanceType: t3.xlarge
      maxSize: 2
      minSize: 0
      name: managed-ng-1
      version: "1.22"
      volumeSize: 80
      volumeType: gp3
    metadata:
      name: demo-cluster
      region: us-west-2
      version: "1.22"
    network:
      cni:
        name: aws-cni
        params:
          customCniCrdSpec:
            us-west-2a:
            - securityGroups:
              - sg-09706d2348936a2b1
              subnet: subnet-0f854d90d85509df9
            us-west-2b:
            - securityGroups:
              - sg-09706d2348936a2b1
              subnet: subnet-0301d84c8b9f82fd1
    vpc:
      clusterEndpoints:
        privateAccess: false
        publicAccess: true
      nat:
        gateway: Single
      subnets:
        private:
          subnet-06e99eb57fcf4f117:
            id: subnet-06e99eb57fcf4f117
          subnet-0509b963a387f7fc7:
            id: subnet-0509b963a387f7fc7
        public:
          subnet-056b49f76124e37ec:
            id: subnet-056b49f76124e37ec
          subnet-0e8e6d17f6cb05b29:
            id: subnet-0e8e6d17f6cb05b29
  proxyConfig: {}
  type: aws-eks

Below is the JSON schema of the GKE cluster resource for the cluster system sync process

{

"Cluster": {

"description": "Cluster definition",

"properties": {

"apiVersion": {

"default": "infra.k8smgmt.io/v3",

"description": "api version",

"title": "API Version",

"type": "string"

},

"kind": {

"default": "Cluster",

"description": "kind",

"title": "Kind",

"type": "string"

},

"metadata": {

"$ref": "#/components/schemas/Metadata"

},

"spec": {

"$ref": "#/components/schemas/ClusterSpec"

},

"status": {

"$ref": "#/components/schemas/ClusterStatus"

}

},

"required": [

"apiVersion",

"kind",

"metadata",

"spec"

],

"title": "Cluster",

"type": "object"

},

"Metadata": {

"description": "metadata of the resource",

"properties": {

"annotations": {

"additionalProperties": {

"type": "string"

},

"description": "annotations of the resource",

"title": "Annotations",

"type": "object"

},

"description": {

"description": "description of the resource",

"title": "Description",

"type": "string"

},

"labels": {

"additionalProperties": {

"type": "string"

},

"description": "labels of the resource",

"title": "Labels",

"type": "object"

},

"name": {

"default": "example-cluster",

"description": "name of the resource",

"title": "Name",

"type": "string"

},

"project": {

"default": "defaultproject",

"description": "Project of the resource",

"title": "Project",

"type": "string"

}

},

"required": [

"name",

"project"

],

"title": "Metadata",

"type": "object"

},

"ClusterSpec": {

"description": "cluster specification",

"properties": {

"blueprint": {

"$ref": "#/components/schemas/ClusterBlueprint"

},

"cloudCredentials": {

"description": "The credentials to be used to interact with the cloud infrastructure",

"title": "Cloud Credentials",

"type": "string"

},

"config": {

"description": "GKE V3 cluster specification",

"properties": {

"controlPlaneVersion": {

"description": "Kubernetes version of ControlPlane",

"title": "Controlplane Version",

"type": "string"

},

"features": {

"$ref": "#/components/schemas/GkeFeatures"

},

"gcpProject": {

"description": "GCP Project name.",

"title": "Project",

"type": "string"

},

"location": {

"$ref": "#/components/schemas/GkeLocation"

},

"network": {

"$ref": "#/components/schemas/GkeNetwork"

},

"nodePools": {

"description": "GKE cluster node pool configuration.",

"items": {

"$ref": "#/components/schemas/GkeNodePool"

},

"title": "Node Pool",

"type": "array"

},

"preBootstrapCommands": {

"description": "Commands will be executed every time Cluster nodes come up. Example: Node Creation, Node Restart.",

"items": {

"type": "string"

},

"title": "PreBootstrapCommands",

"type": "array"

},

"security": {

"$ref": "#/components/schemas/GkeSecurity"

}

},

"title": "V3 GKE Cluster Specifications",

"type": "object"

},

"proxy": {

"$ref": "#/components/schemas/ClusterProxy"

},

"sharing": {

"$ref": "#/components/schemas/Sharing"

}

},

"title": "Cluster Specification",

"type": "object"

},

"ClusterProxy": {

"properties": {

"allowInsecureBootstrap": {

"type": "boolean"

},

"bootstrapCA": {

"type": "string"

},

"enabled": {

"type": "boolean"

},

"httpProxy": {

"type": "string"

},

"httpsProxy": {

"type": "string"

},

"noProxy": {

"type": "string"

},

"proxyAuth": {

"type": "string"

}

},

"type": "object"

},

"Sharing": {

"properties": {

"enabled": {

"type": "boolean"

},

"projects": {

"items": {

"$ref": "#/components/schemas/Projects"

},

"type": "array"

}

},

"type": "object"

},

"Projects": {

"properties": {

"name": {

"type": "string"

}

},

"type": "object"

},

"GkeV3ConfigObject": {

"properties": {

"controlPlaneVersion": {

"description": "Kubernetes version of ControlPlane",

"title": "Controlplane Version",

"type": "string"

},

"features": {

"$ref": "#/components/schemas/GkeFeatures"

},

"gcpProject": {

"description": "GCP Project name.",

"title": "Project",

"type": "string"

},

"location": {

"$ref": "#/components/schemas/GkeLocation"

},

"network": {

"$ref": "#/components/schemas/GkeNetwork"

},

"nodePools": {

"description": "GKE cluster node pool configuration.",

"items": {

"$ref": "#/components/schemas/GkeNodePool"

},

"title": "Node Pool",

"type": "array"

},

"preBootstrapCommands": {

"description": "Commands will be executed every time Cluster nodes come up. Example: Node Creation, Node Restart.",

"items": {

"type": "string"

},

"title": "PreBootstrapCommands",

"type": "array"

},

"security": {

"$ref": "#/components/schemas/GkeSecurity"

}

},

"type": "object"

},

"GkeZonalCluster": {

"properties": {

"zone": {

"description": "Zone in which the cluster's control plane and nodes are located",

"title": "Zone",

"type": "string"

}

},

"type": "object"

},

"GkeAccess": {

"properties": {

"config": {

"oneOf": [

{

"properties": {

"controlPlaneIPRange": {

"description": "Control plane IP range is for the control plane VPC. The control plane range must not overlap with any subnet in your cluster's VPC. The control plane and your cluster use VPC peering to communicate privately",

"title": "Control Plane IP Range",

"type": "string"

},

"disableSNAT": {

"description": "To use Privately Used Public IPs (PUPI) ranges, the default source NAT used for IP masquerading needs to be disabled",

"title": "Disable SNAT",

"type": "boolean"

},

"enableAccessControlPlaneExternalIP": {

"description": "Disabling this option locks down external access to the cluster control plane. There is still an external IP address used by Google for cluster management purposes, but the IP address is not accessible to anyone",

"title": "Enable Access Control Plane External IP",

"type": "boolean"

},

"enableAccessControlPlaneGlobal": {

"description": "With control plane global access, you can access the control plane's private endpoint from any GCP region or on-premises environment no matter what the private cluster's region is",

"title": "Enable Access Control Plane Global",

"type": "boolean"

}

},

"type": "object"

},

{

"type": "object"

}

]

},

"type": {

"description": "Choose the type of network you want to allow to access your cluster's workloads. private or public",

"enum": [

"private",

"public"

],

"title": "Network Access type",

"type": "string"

}

},

"type": "object"

},

"GkeAuthorizedNetwork": {

"properties": {

"cidr": {

"description": "CIDR Example: 198.51.100.0/24",

"title": "CIDR",

"type": "string"

},

"name": {

"description": "Name of the Authorized Network Example: Corporate Office",

"title": "Name",

"type": "string"

}

},

"type": "object"

},

"GkeControlPlaneAuthorizedNetwork": {

"properties": {

"authorizedNetwork": {

"description": "Add control plane authorized networks to block untrusted non-GCP source IPs from accessing the Kubernetes control plane through HTTPS",

"items": {

"$ref": "#/components/schemas/GkeAuthorizedNetwork"

},

"title": "Control plane Authorized Networks",

"type": "array"

},

"enabled": {

"description": "Enable Control Plane Authorized Network. Configure the Networks now or later.",

"title": "Enable Control Plane Authorized Network",

"type": "boolean"

}

},

"type": "object"

},

"GkeDefaultNodeLocation": {

"properties": {

"enabled": {

"description": "Enable providing default node locations",

"title": "Default Node Locations Enabled",

"type": "boolean"

},

"zones": {

"description": "List of zones. Increase availability by providing more than one zone. The same number of nodes will be deployed to each zone in the list.",

"items": {

"type": "string"

},

"title": "Zones",

"type": "array"

}

},

"type": "object"

},

"GkeFeatures": {

"properties": {

"cloudLoggingComponents": {

"description": "List of components for cloud logging",

"items": {

"type": "string"

},

"title": "Cloud Logging Components",

"type": "array"

},

"cloudMonitoringComponents": {

"description": "List of components for cloud monitoring",

"items": {

"type": "string"

},

"title": "Cloud Monitoring Components",

"type": "array"

},

"enableApplicationManagerBeta": {

"description": "Application Manager is a GKE controller for managing the lifecycle of applications. It enables application delivery and updates following Kubernetes and GitOps best practices",

"title": "Enable Application Manager Beta",

"type": "boolean"

},

"enableBackupForGke": {

"description": "Backup for GKE allows you to back up and restore GKE workloads. There is no cost for enabling this feature, but you are charged for backups based on the size of the data and the number of pods you protect",

"title": "Enable Backup For GKE",

"type": "boolean"

},

"enableCloudLogging": {

"description": "Logging collects logs emitted by your applications and by GKE infrastructure",

"title": "Enable Cloud Logging",

"type": "boolean"

},

"enableCloudMonitoring": {

"description": "Monitoring collects metrics emitted by your applications and by GKE infrastructure",

"title": "Enable Cloud Monitoring",

"type": "boolean"

},

"enableComputeEnginePersistentDiskCSIDriver": {

"description": "Enable to automatically deploy and manage the Compute Engine Persistent Disk CSI Driver. This feature is an alternative to using the gcePersistentDisk in-tree volume plugin",

"title": "Enable Compute Engine Persistent Disk CSI Driver",

"type": "boolean"

},

"enableFilestoreCSIDriver": {

"description": "Enable to automatically deploy and manage the Filestore CSI Driver",

"title": "Enable Filestore CSI Driver",

"type": "boolean"

},

"enableImageStreaming": {

"description": "Image streaming allows your workloads to initialize without waiting for the entire image to download",

"title": "Enable Image Streaming",

"type": "boolean"

},

"enableManagedServicePrometheus": {

"description": "This option deploys managed collectors for Prometheus metrics within this cluster. These collectors must be configured using PodMonitoring resources. To enable Managed Service for Prometheus here, you'll need a cluster version of 1.21.4-gke.300 or greater",

"title": "Enable Managed Service Prometheus",

"type": "boolean"

}

},

"type": "object"

},

"GkeGCEInstanceMetadata": {

"properties": {

"key": {

"description": "Key for this metadata",

"title": "Key",

"type": "string"

},

"value": {

"description": "Value for this metadata",

"title": "Value",

"type": "string"

}

},

"type": "object"

},

"GkeKubernetesLabel": {

"properties": {

"key": {

"description": "Key for this kubernetes label",

"title": "Key",

"type": "string"

},

"value": {

"description": "Value for this kubernetes label",

"title": "Value",

"type": "string"

}

},

"type": "object"

},

"GkeLocation": {

"properties": {

"config": {

"oneOf": [

{

"properties": {

"zone": {

"description": "Zone in which the cluster's control plane and nodes are located",

"title": "Zone",

"type": "string"

}

},

"type": "object"

},

{

"properties": {

"region": {

"description": "Regional location in which the cluster's control plane and nodes are located",

"title": "Region",

"type": "string"

},

"zone": {

"description": "Zone in the region where bootstrap VM is created for cluster provisioning ",

"title": "Zone",

"type": "string"

}

},

"type": "object"

}

]

},

"defaultNodeLocations": {

"$ref": "#/components/schemas/GkeDefaultNodeLocation"

},

"type": {

"description": "GKE Cluster location can be either zonal or regional",

"enum": [

"zonal",

"regional"

],

"title": "Type",

"type": "string"

}

},

"type": "object"

},

"GkeNetwork": {

"properties": {

"access": {

"$ref": "#/components/schemas/GkeAccess"

},

"controlPlaneAuthorizedNetwork": {

"$ref": "#/components/schemas/GkeControlPlaneAuthorizedNetwork"

},

"enableVPCNativetraffic": {

"description": "This feature uses alias IP and provides a more secure integration with Google Cloud Platform services",

"title": "Enable VPC Native Traffic",

"type": "boolean"

},

"maxPodsPerNode": {

"description": "This value is used to optimize the partitioning of cluster's IP address range to sub-ranges at node level",

"format": "int64",

"title": "Max Pods Per Node",

"type": "integer"

},

"name": {

"description": "Name of the network that the cluster is in. It determines which other Compute Engine resource it is able to communicate with",

"title": "Name",

"type": "string"

},

"podAddressRange": {

"description": "All pods in the cluster are assigned an IP address from this range. Enter a range (in CIDR notation) within a network range, a mask, or leave this field blank to use a default range.",

"title": "Pod Address Range",

"type": "string"

},

"serviceAddressRange": {

"description": "Cluster services will be assigned an IP address from this IP address range. Enter a range (in CIDR notation) within a network range, a mask, or leave this field blank to use a default range.",

"title": "Service Address Range",

"type": "string"

},

"subnetName": {

"description": "Subnetwork to which the Kubernetes cluster will belong. When VPC native is enabled, the subnetwork must contain at least two secondary ranges which are not used by other Kubernetes clusters. Subnet is permanent.",

"title": "Subnetwork Name",

"type": "string"

}

},

"type": "object"

},

"GkeNodeAutoScale": {

"properties": {

"maxNodes": {

"description": "Maximum number of nodes (per zone)",

"format": "int64",

"title": "Max Nodes",

"type": "integer"

},

"minNodes": {

"description": "Minimum number of nodes (per zone)",

"format": "int64",

"title": "Min Nodes",

"type": "integer"

}

},

"type": "object"

},

"GkeNodeLocation": {

"properties": {

"enabled": {

"description": "Enable providing node locations",

"title": "Node Locations Enabled",

"type": "boolean"

},

"zones": {

"description": "List of zones. Additional node zones must be from the same region as the original zone. Kubernetes Engine allocates the same resource footprint for each zone. The Node pool setting overrides the defaults set in Cluster basics",

"items": {

"type": "string"

},

"title": "Zones",

"type": "array"

}

},

"type": "object"

},

"GkeNodeMachineConfig": {

"properties": {

"bootDiskSize": {

"description": "Select Boot disk size. Boot disk size is permanent",

"format": "int64",

"title": "Boot Disk Size",

"type": "integer"

},

"bootDiskType": {

"description": "Select Boot disk type. Storage space is less expensive for a standard persistent disk. An SSD persistent disk is better for random IOPS or for streaming throughput with low latency",

"title": "Boot Disk Type",

"type": "string"

},

"imageType": {

"description": "Choose which operating system image you want to run on each node of this cluster",

"title": "Image Type",

"type": "string"

},

"machineType": {

"description": "Choose the machine type that will best fit the resource needs of your cluster",

"title": "Machine Type",

"type": "string"

}

},

"type": "object"

},

"GkeNodeMetadata": {

"properties": {

"gceInstanceMetadata": {

"description": "Metadata to be stored in the instance",

"items": {

"$ref": "#/components/schemas/GkeGCEInstanceMetadata"

},

"title": "GCE Instance Metadata",

"type": "array"

},

"kubernetesLabels": {

"description": "Use Kubernetes labels to control how workloads are scheduled to your nodes. Labels are applied to all nodes in this node pool and cannot be changed once the cluster is created",

"items": {

"$ref": "#/components/schemas/GkeKubernetesLabel"

},

"title": "Kubernetes Labels",

"type": "array"

},

"nodeTaints": {

"description": "A node taint lets you mark a node so that the scheduler avoids or prevents using it for certain Pods. Node taints can be used with tolerations to ensure that Pods aren't scheduled onto inappropriate nodes",

"items": {

"$ref": "#/components/schemas/GkeNodeTaint"

},

"title": "Node Taints",

"type": "array"

}

},

"type": "object"

},

"GkeNodeNetworking": {

"properties": {

"maxPodsPerNode": {

"description": "This value is used to optimize the partitioning of cluster's IP address range to sub-ranges at node level",

"format": "int64",

"title": "Max Pods Per Node",

"type": "integer"

},

"networkTags": {

"description": "This value is used to optimize the partitioning of cluster's IP address range to sub-ranges at node level",

"items": {

"type": "string"

},

"title": "Network Tags",

"type": "array"

}

},

"type": "object"

},

"GkeNodePool": {

"properties": {

"autoScaling": {

"$ref": "#/components/schemas/GkeNodeAutoScale"

},

"machineConfig": {

"$ref": "#/components/schemas/GkeNodeMachineConfig"

},

"metadata": {

"$ref": "#/components/schemas/GkeNodeMetadata"

},

"name": {

"description": "Node pool names must start with a lowercase letter followed by up to 39 lowercase letters, numbers, or hyphens. They can't end with a hyphen. You cannot change the node pool's name once it's created",

"title": "Name",

"type": "string"

},

"networking": {

"$ref": "#/components/schemas/GkeNodeNetworking"

},

"nodeLocations": {

"$ref": "#/components/schemas/GkeNodeLocation"

},

"nodeVersion": {

"description": "Specify Node k8s version",

"title": "Node Version",

"type": "string"

},

"security": {

"$ref": "#/components/schemas/GkeNodeSecurity"

},

"size": {

"description": "Pod address range limits the maximum size of the cluster",

"format": "int64",

"title": "Size",

"type": "integer"

}

},

"type": "object"

},

"GkeNodeSecurity": {

"properties": {

"enableIntegrityMonitoring": {

"description": "Integrity monitoring lets you monitor and verify the runtime boot integrity of your shielded nodes using Cloud Monitoring",

"title": "Enable Integrity Monitoring",

"type": "boolean"

},

"enableSecureBoot": {

"description": "Secure boot helps protect your nodes against boot-level and kernel-level malware and rootkits",

"title": "Enable Secure Boot",

"type": "boolean"

}

},

"type": "object"

},

"GkeNodeTaint": {

"properties": {

"effect": {

"description": "Available effects are NoSchedule, PreferNoSchedule, NoExecute",

"enum": [

"NoSchedule",

"PreferNoSchedule",

"NoExecute"

],

"title": "Effect",

"type": "string"

},

"key": {

"description": "Key for this Taint effect",

"title": "Key",

"type": "string"

},

"value": {

"description": "Value for this Taint effect",

"title": "Value",

"type": "string"

}

},

"type": "object"

},

"GkePrivateCluster": {

"properties": {

"controlPlaneIPRange": {

"description": "Control plane IP range is for the control plane VPC. The control plane range must not overlap with any subnet in your cluster's VPC. The control plane and your cluster use VPC peering to communicate privately",

"title": "Control Plane IP Range",

"type": "string"

},

"disableSNAT": {

"description": "To use Privately Used Public IPs (PUPI) ranges, the default source NAT used for IP masquerading needs to be disabled",

"title": "Disable SNAT",

"type": "boolean"

},

"enableAccessControlPlaneExternalIP": {

"description": "Disabling this option locks down external access to the cluster control plane. There is still an external IP address used by Google for cluster management purposes, but the IP address is not accessible to anyone",

"title": "Enable Access Control Plane External IP",

"type": "boolean"

},

"enableAccessControlPlaneGlobal": {

"description": "With control plane global access, you can access the control plane's private endpoint from any GCP region or on-premises environment no matter what the private cluster's region is",

"title": "Enable Access Control Plane Global",

"type": "boolean"

}

},

"type": "object"

},

"GkePublicCluster": {

"type": "object"

},

"GkeRegionalCluster": {

"properties": {

"region": {

"description": "Regional location in which the cluster's control plane and nodes are located",

"title": "Region",

"type": "string"

},

"zone": {

"description": "Zone in the region where bootstrap VM is created for cluster provisioning ",

"title": "Zone",

"type": "string"

}

},

"type": "object"

},

"GkeSecurity": {

"properties": {

"enableGoogleGroupsForRabc": {

"description": "Google Groups for RBAC allows you to grant roles to all members of a Google Workspace group",

"title": "Enable Google Groups For RBAC",

"type": "boolean"

},

"enableLegacyAuthorization": {

"description": "Enable legacy authorization to support in-cluster permissions for existing clusters or workflows. Prevents full RBAC support",

"title": "Enable Legacy Authorization",

"type": "boolean"

},

"enableWorkloadIdentity": {

"description": "Workload Identity lets you connect securely to Google APIs from Kubernetes Engine workloads",

"title": "Enable Workload Identity",

"type": "boolean"

},

"issueClientCertificate": {

"description": "Clients use this base64-encoded public certificate to authenticate to the cluster endpoint. Certificates don’t rotate automatically and are difficult to revoke",

"title": "Issue Client Certificate",

"type": "boolean"

},

"securityGroup": {

"description": "Provide the security groups here",

"title": "Security Group",

"type": "string"

}

},

"type": "object"

},

"ClusterStatus": {

"description": "cluster status",

"properties": {

"commonStatus": {

"$ref": "#/components/schemas/Status"

},

"controPlane": {

"$ref": "#/components/schemas/ControlPlaneStatus"

},

"createdAt": {

"$ref": "#/components/schemas/StatusTime"

},

"displayName": {

"type": "string"

},

"gke": {

"$ref": "#/components/schemas/GkeStatus"

},

"id": {

"type": "string"

},

"name": {

"type": "string"

}

},

"title": "Cluster Status",

"type": "object"

},

"GkeStatus": {

"properties": {

"conditions": {

"description": "GKE specific cluster status (Read only)",

"items": {

"$ref": "#/components/schemas/GkeClusterCondition"

},

"title": "GKE Cluster Conditions",

"type": "array"

},

"nodepools": {

"description": "GKE specific nodepool status (Read only)",

"items": {

"$ref": "#/components/schemas/NodePoolStatus"

},

"title": "GKE NodePool Status",

"type": "array"

}

},

"type": "object"

},

"GkeClusterCondition": {

"properties": {

"duration": {

"description": "Duration of time it took for the condition to be met",

"title": "Duration",

"type": "string"

},

"lastUpdated": {

"$ref": "#/components/schemas/Timestamp"

},

"reason": {

"description": "Text indicating the reason for the condition's last transition.",

"title": "Reason",

"type": "string"

},

"status": {

"description": "Status of this condition type. Indicates whether that condition is applicable, with possible values \"True\", \"False\", or \"Unknown\".",

"title": "Status",

"type": "string"

},

"type": {

"description": "Type of the condition",

"title": "Type",

"type": "string"

}

},

"type": "object"

},

"NodePoolStatus": {

"properties": {

"conditions": {

"description": "Various conditions and their details of the corresponding nodepool",

"items": {

"$ref": "#/components/schemas/NodePoolCondition"

},

"title": "Conditions",

"type": "array"

},

"kubernetesVersion": {

"description": "Kubernetes Version running on this nodepool in SemVer format.",

"title": "Kubernetes Version",

"type": "string"

},

"name": {

"description": "Name of the Node Pool",

"title": "Name",

"type": "string"

}

},

"type": "object"

},

"NodePoolCondition": {

"properties": {

"duration": {

"description": "Duration of time it took for the condition to be met",

"title": "Duration",

"type": "string"

},

"lastUpdated": {

"$ref": "#/components/schemas/Timestamp"

},

"reason": {

"description": "Text indicating the reason for the condition's last transition.",

"title": "Reason",

"type": "string"

},

"status": {

"description": "Status of this nodepool condition type. Indicates whether that condition is applicable, with possible values \"True\", \"False\", or \"Unknown\".",

"title": "Status",

"type": "string"

},

"type": {

"description": "Type of Nodepool condition",

"title": "Type",

"type": "string"

}

},

"type": "object"

}

}
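The constraints stated in the schema above can be checked before a spec is committed to Git. The following is a minimal sketch, not part of the product: the function names are hypothetical, and the allowed values are copied from the `effect` enum and from the `status` descriptions in the schema. It assumes no field is mandatory, since the fragment shown does not mark any as required.

```python
# Illustrative only: allowed values are taken from the schema text above.
# The function names are hypothetical and not part of any product API.
ALLOWED_TAINT_EFFECTS = {"NoSchedule", "PreferNoSchedule", "NoExecute"}
ALLOWED_CONDITION_STATUS = {"True", "False", "Unknown"}


def taint_errors(taint: dict) -> list[str]:
    """Return a list of problems found in a taint object (empty if none)."""
    errors = []
    for field in ("key", "value", "effect"):
        # The schema types all three properties as strings.
        if field in taint and not isinstance(taint[field], str):
            errors.append(f"{field} must be a string")
    effect = taint.get("effect")
    if isinstance(effect, str) and effect not in ALLOWED_TAINT_EFFECTS:
        errors.append(f"effect must be one of {sorted(ALLOWED_TAINT_EFFECTS)}")
    return errors


def condition_status_ok(condition: dict) -> bool:
    """Check that a condition's status is one of the documented strings."""
    return condition.get("status") in ALLOWED_CONDITION_STATUS
```

Note that the condition `status` values are strings, not booleans, so `{"status": True}` does not pass the check.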

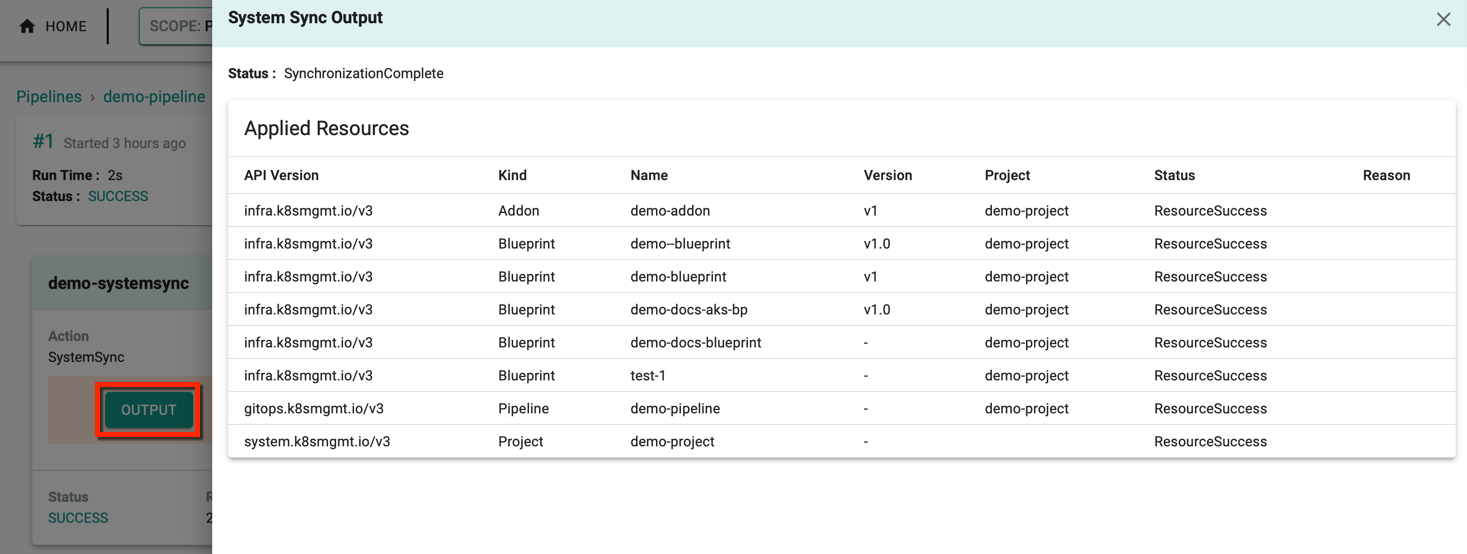

System Sync Output

After a successful run, select the job and click Output to view the updates and modifications recently triggered within the system. The example below shows resource updates triggered internally in the controller.

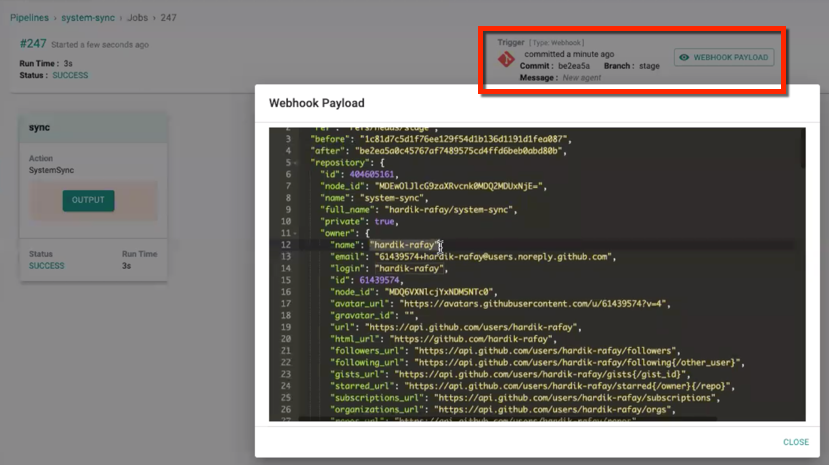

Event Payload

Click Event Payload to view which resource was changed, the user ID, the type of operation, and related details.

- For changes made internally through the controller (System to Git Sync), the Trigger type is Internally Triggered

- For changes made externally through the Git repository (Git to System Sync), the Trigger type is Webhook

- When the job is run manually after a change using the Run icon, the Trigger type is Manually Triggered
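The relationship between where a change originates and the Trigger type shown for the job can be summarized as a lookup. This is a sketch for illustration only: the `ChangeSource` enum and `trigger_type_for` function are hypothetical names, and only the three Trigger type labels come from the list above.

```python
from enum import Enum


class ChangeSource(Enum):
    """Hypothetical labels for where a change originated."""
    CONTROLLER = "controller"   # System to Git Sync
    GIT_REPOSITORY = "git"      # Git to System Sync
    MANUAL_RUN = "manual"       # job run by hand with the Run icon


# Trigger type labels as they are listed in the bullets above.
TRIGGER_TYPE = {
    ChangeSource.CONTROLLER: "Internally Triggered",
    ChangeSource.GIT_REPOSITORY: "Webhook",
    ChangeSource.MANUAL_RUN: "Manually Triggered",
}


def trigger_type_for(source: ChangeSource) -> str:
    """Return the Trigger type label expected for a given change source."""
    return TRIGGER_TYPE[source]
```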

System Sync Pipeline Sharing

Users can share a system sync pipeline with All Projects, Specific Projects, or None. If a shared pipeline includes a resource that contains a secret value/key, system sync fails for that specific resource and proceeds with the other resources.
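The per-resource failure behavior described above can be pictured as a loop that records a failure and moves on instead of aborting the run. This is only a sketch of that idea, not the product's implementation: the `Resource` type, its `contains_secret` flag, and `sync_shared_pipeline` are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    contains_secret: bool  # hypothetical flag: resource holds a secret value/key


def sync_shared_pipeline(resources):
    """Return (synced, failed) resource names for a shared pipeline run."""
    synced, failed = [], []
    for resource in resources:
        if resource.contains_secret:
            # Sync fails for this resource only; the run continues.
            failed.append(resource.name)
            continue
        synced.append(resource.name)
    return synced, failed
```

For example, a run over three resources where only the second holds a secret would report the first and third as synced and the second as failed.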

Users can also create a new project in the Git repository and use the existing pipeline of any project.

Important

To use GitOps for cluster operations, users must upgrade their existing GitOps Agent.